Day 16: Rubin's Bitcoin Advent Calendar

13 Dec 2021

Welcome to day 16 of my Bitcoin Advent Calendar. You can see an index of all

the posts here or subscribe at

judica.org/join to get new posts in your inbox

Who here has some ERC-20s or 721s? Anyone? No one? Whatever.

The Punchline is that a lotta fuss goes into Ethereum smart contracts being

Turing Complete but guess what? Neither ERC-20 nor 721 really have anything to

do with being Turing Complete. What they do have to do with is having a

tightly defined interface that can integrate into other applications nicely.

This is great news for Bitcoin. It means that a lot of the cool stuff happening

in eth-land isn’t really about Turing Completeness, it’s about just defining

really kickass interfaces for the things we’re trying to do.

In the last few posts, we already saw examples of composability. We took a bunch

of concepts and were able to nest them inside of each other to make

Decentralized Coordination Free Mining Pools. But we can do a lot more with

composability than just compose ideas together by hand. In this post I’ll give

you a little sampler of different types of programmatic composability and interfaces,

like the ERC-20 and 721.

Address Composability

Because many Sapio contracts can be made completely noninteractively (with CTV

or an Oracle you’ll trust to be online later), if you compile a Sapio contract

and get an address you can just plug it in somewhere and it “composes” and you

can link it later. We saw this earlier with the ability to make a channel

address and send it to an exchange.

However, if you just hand Sapio a bare address, it won’t necessarily know what that address is for, so you won’t get any of the Sapio “rich” features.

Pre-Compiled

You can also take not just an address, but an entire (json-serialized?) Compiled

object that would include all the relevant metadata.

Rust Generic Types Composability

Well, if you’re a Rust programmer this basically boils down to: Rust types rule!

We’ll give a couple examples.

The simplest example is just composing directly in a function:

#[then]

fn some_function(self, ctx: Context) {

ctx.template()

.add_output(ctx.funds(), &SomeOtherContract{/**/}, None)?

.into()

}

What if we want to pass any Contract as an argument for a Contract? Simple:

struct X {

a : Box<dyn Contract>

}

What if we want to restrict it a little bit more? We can use a trait bound.

Now only Y (or anything implementing GoodContract) can be plugged in.

trait GoodContract : Contract {

decl_then!{some_thing}

}

struct Y {

}

impl GoodContract for Y {

#[then]

fn some_thing(self, ctx: Context) {

empty()

}

}

impl Contract for Y {

declare!{then, Self::some_thing}

}

struct X {
    a : Box<dyn GoodContract>,
    // note the inner type of a and b don't have to match
    b : Box<dyn GoodContract>
}

Boxing gives us some power to be Generic at runtime, but we can also do some

more “compile time” logic. This can have some advantages, e.g., if we want to

guarantee that types are the same.

struct X<T : Contract, U: GoodContract> {

a : T,

b : T,

// a more specific concrete type -- could be a T even

c: U,

d: U,

}

Sometimes it can be helpful to wrap things in functions, like we saw in the Vaults post.

struct X<T: Contract> {
    // This lets us stub in whatever we want for a function
    a : Box<dyn Fn(Self, Context) -> TxTmplIt>,
    // this lets us get back any contract
    b : Box<dyn Fn(Self, Context) -> Box<dyn Contract>>,
    // this lets us get back a specific contract
    c : Box<dyn Fn(Self, Context) -> T>,
}

Clearly there’s a lot to do with the rust type system and making components.

It would even be possible to make certain types of ‘unchecked’ type traits,

for example:

trait Reusable {}

struct AlsoReusable<T> {

a: T,

}

// Only reusable if T Reusable

impl<T> Reusable for AlsoReusable<T> where T: Reusable {}

The Reusable tag could be used to tag contract components that would be “reuse

safe”. E.g., an HTLC or HTLC-containing component would not be reuse safe since

hashes could be revealed. While reusability isn’t “proven” – that’s up to the

author to check – these types of traits can help us reason about the properties

of compositions of programs more safely. Unfortunately, Rust lacks negative

trait bounds (i.e., Not-Reusable), so you can’t reason about certain types of things.
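To make the tagging idea concrete, here is a minimal sketch in plain Rust with no Sapio dependency. PlainPayout, Htlc, and accept_reusable are hypothetical names invented for this illustration. The tag is only an assertion by the author; nothing here proves reuse safety.

```rust
/// Marker trait: the author asserts (nothing proves it) that a component is reuse safe.
trait Reusable {}

/// Hypothetical reuse-safe component.
struct PlainPayout;
impl Reusable for PlainPayout {}

/// Hypothetical HTLC-like component; deliberately NOT tagged Reusable.
#[allow(dead_code)]
struct Htlc;

/// Wrapper that is Reusable only when its inner component is.
#[allow(dead_code)]
struct AlsoReusable<T> {
    a: T,
}
impl<T> Reusable for AlsoReusable<T> where T: Reusable {}

/// Accepts only components that carry the Reusable tag.
fn accept_reusable<T: Reusable>(_component: &T) -> &'static str {
    "reuse safe (as asserted by the author)"
}
```

Calling accept_reusable(&AlsoReusable { a: PlainPayout }) compiles, while accept_reusable(&AlsoReusable { a: Htlc }) is rejected at compile time because Htlc never received the tag.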

Inheritance

We don’t have a fantastic way to do inheritance in Sapio presently. But stay tuned! For now, the best you get is that you can do traits (like GoodContract).

Cross Module Composability & WASM

One of the goals of Sapio is to be able to create contract modules with a

well-defined API Boundary that communicates with JSONs and is “typed” with

JSONSchema. This means that the Sapio modules can be running anywhere (e.g., a

remote server) and we can treat it like any other component.

Another goal of Sapio is to make it possible to compile modules into standalone

WASM modules. WASM stands for WebAssembly; it’s basically a small deterministic

computer emulator program format so we can compile our programs and run them

anywhere that the WASM interpreter is available.

Combining these two goals, it’s possible for one Sapio program to dynamically

load another as a WASM module. This means we can come up with a component,

compile it, and then link to it later from somewhere else. For example, we could

have a Payment Pool where we make each person’s leaf node a WASM module of their

choice, that could be something like a Channel, a Vault, or anything that

satisfies a “Payment Pool Payout Interface”.

For example, suppose we wanted to define a generic API for making a batched payment.

Defining the Interface

First, we define a payment that we want to batch.

/// A payment to a specific address

pub struct Payment {

/// # Amount (btc)

/// The amount to send

pub amount: AmountF64,

/// # Address

/// The Address to send to

pub address: bitcoin::Address,

}

Next, we define the full API that we want. Naming and versioning is still something we need to work on in the Sapio ecosystem, but for now it makes sense to be verbose and include a version.

pub struct BatchingTraitVersion0_1_1 {

pub payments: Vec<Payment>,

/// # Feerate (Bitcoin per byte)

pub feerate_per_byte: AmountF64

}

Lastly, to finish defining the API, we have to do something really gross looking in order to make it automatically checkable: we provide an example value, which is essentially what gets used to verify that modules are able to understand the user-defined BatchingTraitVersion0_1_1. This is going to be improved in Sapio over time for better typechecking!

impl SapioJSONTrait for BatchingTraitVersion0_1_1 {

fn get_example_for_api_checking() -> Value {

#[derive(Serialize)]

enum Versions {

BatchingTraitVersion0_1_1(BatchingTraitVersion0_1_1),

}

serde_json::to_value(Versions::BatchingTraitVersion0_1_1(

BatchingTraitVersion0_1_1 {

payments: vec![],

feerate_per_byte: Amount::from_sat(0).into(),

},

))

.unwrap()

}

}

Implementing the Interface

Let’s say that we want to make a contract like TreePay implement

BatchingTraitVersion0_1_1. What do we need to do?

First, let’s get the boring stuff out of the way, we need to make the TreePay

module understand that it should support BatchingTraitVersion0_1_1.

/// # Different Calling Conventions to create a Treepay

enum Versions {

/// # Standard Tree Pay

TreePay(TreePay),

/// # Batching Trait API

BatchingTraitVersion0_1_1(BatchingTraitVersion0_1_1),

}

REGISTER![[TreePay, Versions], "logo.png"];

Next, we just need to define logic converting the data provided in

BatchingTraitVersion0_1_1 into a TreePay. Since BatchingTraitVersion0_1_1

is really basic, we need to pick values for the other fields.

impl From<BatchingTraitVersion0_1_1> for TreePay {

fn from(args: BatchingTraitVersion0_1_1) -> Self {

TreePay {

participants: args.payments,

radix: 4,

// estimate fees to be 4 outputs and 1 input + change

fee_sats_per_tx: args.feerate_per_byte * ((4 * 41) + 41 + 10),

timelock_backpressure: None,

}

}

}

impl From<Versions> for TreePay {

fn from(v: Versions) -> TreePay {

match v {

Versions::TreePay(v) => v,

Versions::BatchingTraitVersion0_1_1(v) => v.into(),

}

}

}
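If you want to poke at the conversion pattern without pulling in Sapio, here is a self-contained sketch using stand-in types. Assumptions: amounts are plain u64 sats, addresses are strings, TreePay is trimmed to three fields, and the field names amount_sats and feerate_sats_per_byte are invented for this example. Only the From wiring and the fee estimate mirror the code above.

```rust
/// Stand-in for the post's Payment (sats and a plain string address).
#[allow(dead_code)]
#[derive(Debug, Clone, PartialEq)]
struct Payment {
    amount_sats: u64,
    address: String,
}

/// Stand-in for the batching API arguments.
struct BatchingTraitVersion0_1_1 {
    payments: Vec<Payment>,
    feerate_sats_per_byte: u64,
}

/// Stand-in for TreePay, keeping only the fields this sketch needs.
#[derive(Debug, PartialEq)]
struct TreePay {
    participants: Vec<Payment>,
    radix: usize,
    fee_sats_per_tx: u64,
}

/// Every calling convention the module accepts.
#[allow(dead_code)]
enum Versions {
    TreePay(TreePay),
    BatchingTraitVersion0_1_1(BatchingTraitVersion0_1_1),
}

impl From<BatchingTraitVersion0_1_1> for TreePay {
    fn from(args: BatchingTraitVersion0_1_1) -> Self {
        TreePay {
            participants: args.payments,
            radix: 4,
            // same estimate as above: 4 outputs and 1 input + change = 215 bytes
            fee_sats_per_tx: args.feerate_sats_per_byte * ((4 * 41) + 41 + 10),
        }
    }
}

impl From<Versions> for TreePay {
    fn from(v: Versions) -> TreePay {
        match v {
            Versions::TreePay(v) => v,
            Versions::BatchingTraitVersion0_1_1(v) => v.into(),
        }
    }
}
```

With a feerate of 2 sats per byte, the estimate works out to 2 * 215 = 430 sats per transaction.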

Using the Interface

To use this BatchingTraitVersion0_1_1, we can just define a struct as follows,

and when we deserialize it will be automatically verified to have declared a

fitting API.

pub struct RequiresABatch {

/// # Which Plugin to Use

/// Specify which contract plugin to call out to.

handle: SapioHostAPI<BatchingTraitVersion0_1_1>,

}

The SapioHostAPI handle can be either a human readable name (like

“user_preferences.batching” or “org.judica.modules.batchpay.latest”) and looked

up locally, or it could be an exact hash of the specific module to use.

We can then use the handle to resolve and compile against the third party module.

Because the module lives in an entirely separate WASM execution context,

we don’t need to worry about it corrupting our module or being able to access

information we don’t provide it.

Call to Action

ARE YOU A BIG BRAIN PROGRAMMING LANGUAGE PERSON?

PLEASE HELP ME MAKE THIS SAPIO

HAVE A COOL AND USEFUL TYPE SYSTEM I AM A SMALL BRAIN BOI AND THIS STUFF IS

HARD AND I NEED FRENZ.

EVEN THE KIND OF “FRENZ” THAT YOU HAVE TO PAY FOR wink.

CLICK HERE

In the posts coming Soon™, we’ll see some more specific examples of contracts

that make heavier use of having interfaces and all the cool shit we can get done.

That’s all I have to say. See you tomorrow.

Day 15: Rubin's Bitcoin Advent Calendar

12 Dec 2021

Welcome to day 15 of my Bitcoin Advent Calendar. You can see an index of all

the posts here or subscribe at

judica.org/join to get new posts in your inbox

Long time no see. You come around these parts often?

Let’s talk mining pools.

First, let’s define some things. What is a pool? A pool is a way to take a

strongly discontinuous income stream and turn it into a smoother income stream.

For example, suppose you are a songwriter. You’re a dime a dozen, there are 1000

songwriters. If you get song of the year, you get $1M Bonus. However, all the

other songwriters are equally pretty good, it’s a crapshoot. So you and half the

other songwriters agree to split the prize money whoever wins. Now, on average,

every other year you get $2000, instead of once every thousand years. Since

you’re only going to work about 50 years, your “expected” amount of winnings

would be $50,000 if you worked alone. But expected winnings don’t buy bread. By

pooling, your expected winnings are $2000 every other year for 50 years, so also

$50,000. But you expect to actually have some spare cash laying around. However,

if you got lucky and won the contest the year you wrote a hit, you’d end up way

richer! But the odds are 1:20 of that ever happening in your life, so there

aren’t that many rich songwriters (50 out of your 1000 peers…).
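If you want to check the songwriter arithmetic yourself, here is a small sketch. The function names are made up for this example, and it assumes every songwriter is equally likely to win each year and that years are independent.

```rust
/// Expected total winnings for one member of a pool of `pool_size` songwriters
/// (use pool_size = 1 for going it alone) over `years` years.
fn expected_total_winnings(prize: f64, songwriters: u32, pool_size: u32, years: u32) -> f64 {
    // chance that somebody in the pool wins in a given year
    let p_pool_wins = f64::from(pool_size) / f64::from(songwriters);
    // each member's cut when the pool wins
    let payout_per_member = prize / f64::from(pool_size);
    p_pool_wins * payout_per_member * f64::from(years)
}

/// Chance of seeing at least one payout during a career of `years` years.
fn chance_of_any_payout(songwriters: u32, pool_size: u32, years: u32) -> f64 {
    let p = f64::from(pool_size) / f64::from(songwriters);
    1.0 - (1.0 - p).powi(years as i32)
}
```

Solo (pool_size = 1) and pooled (pool_size = 500) both come out to an expected $50,000 over 50 years. The difference is in chance_of_any_payout: a bit under 5% solo, which is roughly the 1:20 above, versus practically certain in the pool.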

Mining is basically the same as our songwriter contest, just instead of silver

tongued lyrics, it’s noisy whirring bitcoin mining rigs. Many machines will

never mine a block. Many miners (the people operating them) won’t either! However,

by pooling their efforts together, they can turn a once-in-a-million-years

chance into earning temperatureless immaterial bitcoin day in and day out.

Who Pissed In your Pool?

The problem with pools is that they take an extremely decentralized process

and add a centralized coordination layer on top. This layer has numerous issues

including but not limited to:

- Weak Infrastructure: What happens if e.g. DNS goes down as it did recently?

- KYC/AML requirements to split the rewards

- Centralized “block policies”

- Bloating chain space with miner payouts

- Getting kicked out of their home country (happened in China recently)

- Custodial hacking risk.

People are working on a lot of these issues with upgrades like “Stratum V2”

which aspire to give the pools less authority.

In theory, mining pool operators should be against things that limit their business operations. However, we’re in a bit of a “later stage” of bitcoin mining, where pooling is seen more as a necessary evil, and most pools are anchored by big mining operations. And getting rid of pools would be great for Bitcoin, which would increase the value of folks’ holdings/mining rigs. So while it might seem irrational, it’s actually perfectly incentive compatible that mining pool operators want mining pools to be less of a centralization risk. Even if pools don’t exist in their current form, mining service providers can still make really good business offering all kinds of support. Forgive me if I’m speaking out of turn, pool ops!

Making Mining Pools Better

To make mining pools better, we can set some ambitious goals:

- Funds should not be centrally custodied, ever, if at all.

- No KYC/AML.

- No “Extra network” software required.

- No blockchain bloat.

- No extra infrastructure.

- The size of a viable pool should be smaller. Remember our songwriter: if you just pool with one other songwriter it doesn’t bring your expected time till payout within your lifetime. So the bigger the pool, the more regular the payouts. We want the smallest possible “units of control” with the most regular payouts possible.

Fuck. That’s a huge list of goals.

But if you work with me here, you’ll see how we can nail every last one of them.

And in doing so, we can clear up some major Privacy hurdles and Decentralization

issues.

Building the Decentralized Coordination Free Mining Pool

We’ll build this up step by step. We probably won’t look at any Sapio code

today, but as a precursor I really must insist you read the last couple posts first:

- Congestion Control

- Payment Pools

- Channels

You read them, right?

Right?

Ok.

The idea is actually really simple, but we’ll build it up piece by piece by piece.

Part 1: Window Functions over Blocks.

A window function is a little program that operates over the last “N” things and

computes something.

E.g., a window function could operate over the last 5 hours and count how many

carrots you ate. Or over the last 10 cars that pass you on the road.

A window function of bitcoin blocks could operate over a number of different things.

- The last 144 blocks

- The last 24 hours of blocks

- The last 100 blocks that meet some filter function (e.g, of size > 500KB)

A window function could compute a lot of different things too:

- The average time difference between blocks

- The amount of fees paid in those blocks

- A subset of the blocks that pass another filter.

A last note: window functions need, for something like Bitcoin, a start height

where we exclude things prior (e.g., last 100 blocks since block 500,000)
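I said no Sapio code today, but a little plain Rust can’t hurt. Here is a rough sketch of a window function over blocks. The Block struct and its fields are simplified stand-ins invented for this example, not a real Bitcoin library type.

```rust
/// Simplified stand-in for a block; just the bits the window cares about.
#[derive(Debug, Clone, PartialEq)]
struct Block {
    height: u32,
    size_bytes: u32,
    fees_sats: u64,
}

/// The last `n` blocks at or after `start_height` that pass `keep`,
/// returned oldest first. `chain` is assumed to be ordered by height.
fn window<'a>(
    chain: &'a [Block],
    start_height: u32,
    n: usize,
    keep: impl Fn(&Block) -> bool,
) -> Vec<&'a Block> {
    let mut selected: Vec<&'a Block> = chain
        .iter()
        .rev() // walk backwards from the tip
        .filter(|b| b.height >= start_height && keep(*b))
        .take(n)
        .collect();
    selected.reverse(); // restore chain order
    selected
}

/// One thing a window could compute: the fees paid in those blocks.
fn total_fees(blocks: &[&Block]) -> u64 {
    blocks.iter().map(|b| b.fees_sats).sum()
}
```

For example, window(&chain, 500_000, 100, |b| b.size_bytes > 500_000) is “the last 100 blocks since block 500,000 of size > 500KB”.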

Part 2: Giving presents to all our friends

Let’s do a window function over the last 100 Blocks and collect the 1st address

in the output of the coinbase transaction.

Now, in our block, instead of paying ourselves a reward, let’s divvy it up among

the last 100 blocks and pay them out our entire block reward, split up.

We’re so nice!

Part 3: Giving presents to our nice friends only

What if instead of paying everyone, we do a window function over the last 100

blocks and filter for only blocks that followed the same rule that we are

following (being nice). We take the addresses of each of them, and divvy up our

reward to them too like before.

We’re so nice to only our nice friends!

Now stop and think a minute. All the “nice” blocks in the last 100 didn’t get a

reward directly, but they got paid by the future nice blocks handsomely. Even

though we don’t get any money from the block we mined, if our nice friends keep

on mining then they’ll pay us too returning the favor.

Re-read the above till it makes sense. This is the big idea. Now onto the “small”

ideas.
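As a rough sketch of the divvying up, assume we already ran the window function and collected one payout address per “nice” block. The function name and the rule for leftover sats (hand them out one at a time, earliest block first) are assumptions for this example. Any rule works as long as every miner computes the same thing.

```rust
use std::collections::BTreeMap;

/// Split `reward_sats` evenly across the payees of the nice blocks in the window.
/// A miner who found several of those blocks shows up several times and is paid
/// once per block. With no nice blocks the map is empty (what to do then is left open).
fn split_reward(reward_sats: u64, nice_payees: &[&str]) -> BTreeMap<String, u64> {
    let mut payouts = BTreeMap::new();
    if nice_payees.is_empty() {
        return payouts;
    }
    let n = nice_payees.len() as u64;
    let share = reward_sats / n;
    let leftover = reward_sats % n;
    for (i, payee) in nice_payees.iter().enumerate() {
        // the first `leftover` payees get one extra sat so nothing is lost
        let extra = if (i as u64) < leftover { 1 } else { 0 };
        *payouts.entry(payee.to_string()).or_insert(0) += share + extra;
    }
    payouts
}
```

Because the result depends only on the reward and the window, anyone can recompute it to check whether a block followed the rule.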

Part 4: Deferring Payouts

This is all kinda nice, but now our blocks get really really big since we’re

paying all our friends. Maybe we can be nice, but a little mean too and tell

them to use their own block space to get their gift.

So instead of paying them out directly, we round up all the nice block addresses

like before and we toss it in a Congestion Control Tree.

Now our friends do likewise too. Since the Congestion Control Module is

deterministic, everyone can generate the same tree and both verify that our

payout was received and generate the right transaction.
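The determinism is the whole trick, so here is a toy illustration of it. This is not the Sapio Congestion Control module: Node, build_tree, and the sort-then-group-by-radix rule are assumptions made up for this sketch. The point is only that identical inputs give identical trees, no matter who builds them or in what order they heard about the payouts.

```rust
/// Toy payout tree: leaves pay an address, branches fan out to children.
#[allow(dead_code)]
#[derive(Debug, PartialEq)]
enum Node {
    Leaf { address: String, sats: u64 },
    Branch { sats: u64, children: Vec<Node> },
}

/// Total sats carried by a node.
fn sats(node: &Node) -> u64 {
    match node {
        Node::Leaf { sats, .. } | Node::Branch { sats, .. } => *sats,
    }
}

/// Build a tree with up to `radix` children per branch.
/// Payouts are sorted first so that input order does not matter.
fn build_tree(mut payouts: Vec<(String, u64)>, radix: usize) -> Option<Node> {
    assert!(radix >= 2, "radix must be at least 2");
    payouts.sort();
    let mut level: Vec<Node> = payouts
        .into_iter()
        .map(|(address, sats)| Node::Leaf { address, sats })
        .collect();
    while level.len() > 1 {
        let mut next = Vec::new();
        let mut iter = level.into_iter();
        loop {
            // group the current level into chunks of `radix` nodes
            let children: Vec<Node> = iter.by_ref().take(radix).collect();
            if children.is_empty() {
                break;
            }
            let total: u64 = children.iter().map(sats).sum();
            next.push(Node::Branch { sats: total, children });
        }
        level = next;
    }
    level.pop()
}
```

Two miners feeding in the same payouts in a different order end up with equal trees, which is what lets everyone verify their payout and generate the right transaction independently.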

Now this gift doesn’t take up any of our space!

Part 5: Compacting

But it still takes up space for someone, and that blows.

So let’s do our pals a favor. Instead of just peeping the 1st address (which

really could be anything) in the coinbase transaction, let’s use a good ole

fashioned OP_RETURN (just some extra metadata) with a Taproot Public Key we want

to use in it.

Now let’s collect all the blocks that again follow the rule defined here, and

take all their taproot keys.

Now we gift them into a Payment Pool, with MuSig aggregated keys at every node, instead of into just a Congestion Control tree. It’s a minor difference – a Congestion Control tree doesn’t have a taproot key path – but that difference means the world.

Now instead of having to expand to get everyone paid, they can use it like a

Payment Pool! And Pools from different runs can even do a many-to-one

transaction where they merge balances.

For example, imagine two pools:

UTXO A from Block N: 1BTC Alice, 1BTC Carol, 1BTC Dave

UTXO B Block N+1: 1BTC Alice, 1BTC Carol, 1BTC Bob

We can do a transaction as follows to merge the balances:

Spends:

UTXO A, B

Creates:

UTXO C: 2BTC Alice, 2BTC Carol, 1BTC Dave, 1BTC Bob

Compared to doing the payments directly, fully expanding this creates only 4

outputs instead of 6! It gets even better the more miners are involved.
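The merge above is just adding up balances per owner. A minimal sketch, with PoolBalances, pool, and merge_pools being names invented for this example and balances tracked in sats:

```rust
use std::collections::BTreeMap;

/// Balances inside one pool UTXO, keyed by owner.
type PoolBalances = BTreeMap<String, u64>;

/// Convenience constructor for the example.
fn pool(entries: &[(&str, u64)]) -> PoolBalances {
    entries
        .iter()
        .map(|(owner, sats)| (owner.to_string(), *sats))
        .collect()
}

/// Merge any number of pools into one, summing each owner's balances.
fn merge_pools(pools: &[PoolBalances]) -> PoolBalances {
    let mut merged = PoolBalances::new();
    for pool in pools {
        for (owner, sats) in pool {
            *merged.entry(owner.clone()).or_insert(0) += *sats;
        }
    }
    merged
}
```

Merging UTXO A and UTXO B from the example gives a pool with 4 entries (Alice and Carol at 2 BTC each, Dave and Bob at 1 BTC each), versus 3 + 3 = 6 outputs if each pool were fully expanded on its own.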

We could even merge many pools at the same time, and in the future, benefit from

something like cross-input-signature aggregation to make it even cheaper and

create even fewer outputs.

Part 6: Channels

But wait, there’s more!

We can even make the terminal leaves of the Payment Pool be channels instead of direct UTXOs.

This has a few big benefits.

- We don’t need to do any compaction as urgently, we can immediately route funds around.

- We don’t need to necessarily wait 100 blocks to spend out of our coinbase since we can use the channel directly.

- Instead of compaction, we can just “swap” payments around across channels.

How channel balancing might look.

This should be opt-in (with a tag field to opt-in/out) since if you didn’t want

a channel it could be annoying to have the extra timeout delays, especially if you

wanted e.g. to deposit directly to cold storage.

Part 7: Selecting Window Functions

What’s the best window function?

I got no freakin’ clue. We can window over time, blocks, fee amounts,

participating blocks, non participating blocks, etc.

Picking a good window function is an exercise in itself, and needs to be

scrutinized for game theoretic attacks.

Part 8: Payout Functions

Earlier we showed the rewards as being just evenly split among the last blocks,

but we could also reward people differently. E.g., we could reward miners who

divided more reward to the other miners more (incentivize collecting more fees),

or really anything deterministic that we can come up with.

Again, I don’t know the answer here. It’s a big design space!

Part 9: Voting on Parameters

One last idea: if we had some sort of parameter space for the window functions,

we could perhaps vote on-chain for tweaking it. E.g., each miner could vote to

+1 or -1 from the window length.

I don’t particularly think this is a good idea, because it brings in all sorts

of weird attacks and incentives, but it is a cool case of on-chain governance so

worth thinking more on.

Part 10: End of Transmission?

No more steps. Now we think a bit more about the implications of this.

Solo mining?

Well the bad news about this design is that we can’t really do solo mining.

Remember, most miners probably will never mine a block. So they would never be

able to enter the pool.

We could mess around with including things like partial work shares (just a

few!) into blocks, but I think the best bet is instead to focus on

micro-pools. Micro-pools would be small units of hashrate (say, 1%?) that are

composed of lots of tiny miners.

The tiny miners can all connect to each other and gossip around their work

shares, use some sort of consensus algorithm, or use a pool operator. The blocks

that they mine should use a taproot address/key which is a multisig of some

portion of the workshares, which gets included in the top-level pool as part of the Payment Pool.

So while we don’t quite make solo mining feasible, the larger the window we use

the tinier the miners can be while getting better de-risking.

Analysis?

A little out of scope for here, but it should work conceptually!

A while back I analyzed this kind of setup, read more

here. Feel free to experiment

with window and payout functions and report back!

chart showing that the rewards are smoother over time

Now Implement it!

Well we are not gonna do that here, since this is kinda a magnum opus of Sapio

and it would be wayyyy too long. But it should be somewhat conceptually

straightforward if you paid close attention to the “precursor” posts. And you

can see some seeds of progress for an implementation on

github,

although I’ve mostly been focused on simpler applications (e.g. the constituent

components of payment pools and channels) for the time being… contributions welcome!

TL;DR: Sapio + CTV makes pooled mining more decentralized and more private.

Day 14: Rubin's Bitcoin Advent Calendar

11 Dec 2021

Welcome to day 14 of my Bitcoin Advent Calendar. You can see an index of all

the posts here or subscribe at

judica.org/join to get new posts in your inbox

Lightning Lightning Lightning

Everybody loves Lightning. I love Lightning, you love Lightning. We love

everyone who works on Lightning. Heck, even

Chainalysis loves

Lightning these days :(…

We all love Lightning.

But what if I told you we could love Lightning even more? Crazy, right?

With CTV + Sapio we can improve on Lightning in some pretty cool ways you

may not have heard too much about before. Buckle up, we’re in for another doozy

of a post.

Let a thousand channels bloom

The main thing we’re going to talk about in this post is the opening and closing

of channels. There are some other things that CTV/Sapio can do that are a bit

more niche to talk about, but there will always be future posts.

How do we open channels today?

Let’s say I want to open a channel up with you. I shoot you a text on signal or

something and say “hey what’s up, happy holidays friend. I would like to open a

payment channel with you”. You say back, “Tis the season! Let’s do it, my Tor

Hidden Service address is ABCXYZ”. Then I connect to your node from my computer

and then I say I want to open a channel with you for 500,000 sats (at writing in

2021 this was $250 US Dollars, not $250 Million Dollars). Then, you might

authorize opening up the channel with me, or your node might just roll the dice

and do it without your permission (IDK how the nodes actually work, depends on

your client, and maybe in the future some reputation thingy).

So now we have agreed to create a channel.

Now, I ask you for a key to use in the channel and you send it to me. Then, I

create an unsigned transaction F that is going to create and fund our channel.

The channel is in Output C. I send you F and C. Then, I ask you to pre-sign a

transaction spending from C that doesn’t yet exist, but would refund me and give

you nothing in the event you go offline. This is basically just using the

channel like it exists already for a payment 0 paying me. After I get those

sweet sweet signatures from you, then I send you the signatures as well in case

you want to close things out like normal.

Houston, we have a channel.

Now we can revoke old states and stuff and sign new states and all that fancy

channel HTLC routing jazz. We don’t really need to know how a lot of that works

down in the details so don’t ask.

Something a little more nifty, perhaps?

Technically I presented you how single funded channels work, but you can also

dual fund where we both contribute some funds. It’s a relatively new feature to

land and was a lot of work… Dual funded channels are important because when I

opened the channel to you I had all the sats and I couldn’t receive any Bitcoin.

Dual funded channels means you can immediately send both directions.

What can we do with CTV?

With CTV, the single funded channel opening story is a bit simpler. I ask you if

you want to open a channel, you say “sure!” (maybe I even look up your key from

a Web-of-Trust system), and send me a key. I then use Sapio to compile a channel

for 500k sats to our keys, I send Bitcoin to it. The channel is created. I send

you the Outpoint + the arguments to the channel, either through email,

connecting to your node, or pigeon with a thumbdrive, and later you verify that

I paid to the channel for our keys that Sapio output by running the compiler

with the same arguments (500k sats to our keys).

This is called a non-interactive channel open. Why’s that? Beyond having to do

some basics (e.g., I have to know a key for you, which could be on a public

Web-of-Trust), there is no step in the flow that requires any back-and-forth

negotiation to create the channel. I just create it unilaterally, and then I

could tell you about it a year later. You’d be able to verify it fine!
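To see why no back-and-forth is needed, here is a toy model of the flow. Big caveat: toy_compile_channel is a hypothetical stand-in for running the Sapio compiler, and the FNV-1a hash inside it is NOT cryptographically secure. It is only there so the sketch runs with the standard library alone. What matters is the shape: compiling is a pure function of the arguments, so you can recompute the address whenever you like and compare.

```rust
/// The arguments both sides need to know (keys as plain strings for the sketch).
struct ChannelArgs {
    opener_key: String,
    counterparty_key: String,
    amount_sats: u64,
}

/// Toy 64-bit FNV-1a hash. NOT secure; a placeholder for a real commitment.
fn fnv1a(data: &[u8]) -> u64 {
    let mut hash: u64 = 0xcbf29ce484222325;
    for byte in data {
        hash ^= u64::from(*byte);
        hash = hash.wrapping_mul(0x100000001b3);
    }
    hash
}

/// Hypothetical stand-in for "compile the channel and get its address".
/// Deterministic: same arguments in, same address out.
fn toy_compile_channel(args: &ChannelArgs) -> String {
    let encoded = format!(
        "{}|{}|{}",
        args.opener_key, args.counterparty_key, args.amount_sats
    );
    format!("toy-channel-{:016x}", fnv1a(encoded.as_bytes()))
}

/// What you do later, possibly a year later: recompile with the arguments
/// I sent you and check that the funded output matches.
fn verify_open(funded_address: &str, funded_sats: u64, args: &ChannelArgs) -> bool {
    funded_sats == args.amount_sats && funded_address == toy_compile_channel(args)
}
```

I call toy_compile_channel once and pay to the result. You call verify_open whenever you get around to it, with nothing from me except the outpoint and the arguments.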

For dual-funded channels, I send you a transaction you can pay into to finish

opening it and I can go offline. Once opened, the channel works for us both

recovering our funds.

sounds niche

It kinda is. It’s an esoteric nerdy property. But I promise you it’s really

cool! Let’s look at some examples:

Cafe Latte Anyone?

Let’s say that I go to a cafe I’ve never been to and there is a QR code posted

on the wall. I then go about my business, ordering a 10,000 sat breakfast combo.

To pay, I scan the QR-code, and then it has an XPUB for Non Interactive Channels on it.

I can then plug in that XPUB into my Sapio Channel Creator and create a channel

with a first payment of 10k sats and a total balance of 100k sats. I show a QR

code on my phone to the barista, who scans it, getting the details of the

channel I made. Barista says looks good, acknowledging both the payment and the

channel open. The details get backed up to The Cloud.

But just then something happens: a masked figure comes in with a gun and tells

the barista, “GIVE ME ALL YOUR SATOSHIS”. A child begins to cry, their parent

covering their mouth with their hand. The bad guy barks, “GIVE ME ALL YOUR

SATOSHIS… and no one gets hurt,” tapping the muzzle of the gun on the

countertop. The barista smirks and snarls, “stupid thief, surely you’ve been

reading the post on non-interactive lightning channels on Rubin’s Bitcoin Advent

Calendar.” The robber adjusts the straps on their mask for some relief from the

ear irritation. “If you had been reading it, you would know that I don’t need to

have a key online in order for someone to create a channel with me! I just need

the XPUB to verify they are made correctly. This is not those old-school

channels. I have no ability to spend. We keep our keys colder than our cold

brew.” The robber’s shoulders sag and they mutter, “fine, in that case, I’ll have

a medium cold brew coffee, one sugar with a splash of oat milk. And that big

chocolate chip cookie”.

That’s right. Because our cafe used non-interactive channels, they didn’t

have to have a key online to create a channel with me! They just needed durable

storage for the channel definition.

And when I go to spend a bit extra for a bottle of Topo Chico™ later, they still

don’t need to be online, I can start making payments without them

counter-signing.

Where did my corn come from?

How did I get the bitcoin for the channel I’m opening? Usually this is an

assumption for Lightning (you have Bitcoin!), but in this case it’s central to

the plot here. You probably got them from an exchange, mining, or something else.

This means that in order to open a channel to someone, I need to do two transactions:

- Get some money

- Make the channel

It’s possible, if I had a really legit hip exchange, they’d let me directly open

a channel by offering me a transaction unsigned with the channel output C that I

can presign with you! But then they can’t really batch payments (otherwise one

user going offline can be a DoS attack on the batch payout) and they can also

get DoS’d unbatched since we can “lock up” a coin while we run the protocol.

If instead, we had CTV we could just generate an address for the channel we

wanted and request the exchange pay to it the appropriate amount of coin. The

exchange could pay the channel address however they want, and we’d be able to

use it right away.

However they want?

Yes. Let’s look at some options:

- A normal transaction – Works great.

- A batch transaction – No Problemo.

- A Congestion Control Tree – Even that!

What was that last one? You read it right, a channel can be created in a Congestion Control tree,

and be immediately usable!

How’s this work? Well, because you can fully verify you’d receive a payment in a

congestion control tree, you can likewise fully verify that your channel will be

created.

This is big. This means that you can just directly request a channel from a third party

without even telling them that you’re making a channel!

And this technique – channels in congestion control tree – generalizes

beautifully. It means you could create as many immediately usable channels as

you like and lazily fully open them over their lifetime whenever blockspace is

affordable.

I Lied (a little)

If the exchange doesn’t follow your payment instructions to the T, e.g. if they

split it into two UTXOs then it won’t work. Exchanges should probably not do

anything other than what you asked them to do (this should be something to ensure

in the exchange’s terms of service…).

Come on in the water’s warm?

This concept also composes nicely with the Payment

Pools we saw yesterday. Imagine you embed

channels as the terminal outputs after a full-ejection from the pool. Then,

what you can do is have the N-of-N agree to an on-chain state update that

respects (or preserves) any channel updates before you switch. Embedding the

channels inside means that Payment Pools would only need to do on-chain

transactions when they need to make an external payment or re-configure

liquidity among participants.

For example, imagine a pool with Alice, Bob, Carol, and Dave each having one

coin in a channel. We’ll do some channel updates, and then reconfigure.

Start:

Pool(Channel([A, 1], [B, 1]), Channel([C, 1], [D, 1]))

Channel Update (off-chain):

Pool(Channel([A, 0.4], [B, 1.6]), Channel([C, 1], [D, 1]))

Channel Update (off-chain):

Pool(Channel([A, 0.4], [B, 1.6]), Channel([C, 1.3], [D, 0.7]))

Pool Reconfigure (on-chain; swap channel partners):

Pool(Channel([A, 0.4], [D, 0.7]), Channel([C, 1.3], [B, 1.6]))

Pool Reconfigure (on-chain; add Eve/Bob Channel):

Pool(Channel([A, 0.4], [D, 0.7]), Channel([C, 1.3], [B, 0.6]), Channel([E, 0.5], [B, 0.5]))

Pretty neat, right?

This is particularly a big win for Scalability and Privacy, since we’re now

containing tons of activity within a single UTXO, and even within that UTXO

most of the information doesn’t need to be known to all participants.
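A quick sanity check on the reconfiguration example, as a sketch. The Channel and Pool types and the helper names are made up here, balances are in sats, and the only rule checked is that an on-chain reconfigure can’t create or destroy coins.

```rust
use std::collections::BTreeMap;

/// Two parties and their balances in sats.
type Channel = [(&'static str, u64); 2];
/// A pool is just a bag of channels for this sketch.
type Pool = Vec<Channel>;

/// Total sats held across every channel in the pool.
fn total(pool: &[Channel]) -> u64 {
    pool.iter().flatten().map(|(_, sats)| *sats).sum()
}

/// Per-party balances, summed over all the channels a party is in.
fn balances(pool: &[Channel]) -> BTreeMap<&'static str, u64> {
    let mut out = BTreeMap::new();
    for (party, sats) in pool.iter().flatten() {
        *out.entry(*party).or_insert(0) += *sats;
    }
    out
}

/// A reconfigure must conserve the pool's funds.
fn conserves_funds(before: &[Channel], after: &[Channel]) -> bool {
    total(before) == total(after)
}
```

Swapping channel partners leaves every party’s balance untouched. Adding the Eve/Bob channel moves 0.5 from Bob to Eve, so the per-party balances change, but the pool still holds exactly 4 coins.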

I’m not going to show you all of these integrations directly (Congestion Control, Pools, etc),

because you gotta cut an article somewhere. But we do have enough…

Time to Code

OK enough ‘how it works’ and ‘what it can do’. Let’s get cracking on a basic

channel implementation so you know I’m not bullshitting you.

First, let’s define the basic information we’ll need:

/// Information for each Participant

struct Participant {

/// signing key

key: PublicKey,

/// amount of funds

amount: AmountF64,

}

/// A Channel can be either in an Open or Closing state.

enum State {

Open,

Closing

}

/// Channel definition.

struct Channel {

/// Whether the channel is open or closing

state: State,

/// Each participant's balances

parties: [Participant; 2],

/// Amount of time transactions must be broadcast within

timeout: AnyRelTimeLock,

}

Pretty straightforward.

Now, let’s define the API:

impl Contract for Channel {

declare!{then, Self::finish_close, Self::begin_close}

declare!{updatable<Update>, Self::update}

}

Next, we’ll define the begin_close logic. Essentially, all it’s going to do is,

if we’re in the Open state, allow transitioning the channel to the Closing state.

impl Channel {

#[compile_if]

fn if_open(self, ctx: Context) {

if let State::Open = self.state {

ConditionalCompileType::Required

} else {

ConditionalCompileType::Never

}

}

#[then(compile_if = "[Self::if_open]")]

fn begin_close(self, ctx: Context) {

// copy the channel data and change to closing state

// begin_close can happen at any time

let mut close = self.clone();

close.state = State::Closing;

ctx.template()

.add_output(Amount::from(self.parties[0].amount) +

Amount::from(self.parties[1].amount),

&close, None)?

.into()

}

}

Next we’ll define the logic for the Closing state. Essentially, if the state

has been in Closing and the timeout expires, then we allow a transaction to

return the funds to the initial state. We’ll only add an output for a

participant if they have any money!

impl Channel {

#[compile_if]

fn if_closing(self, ctx: Context) {

if let State::Closing = self.state {

ConditionalCompileType::Required

} else {

ConditionalCompileType::Never

}

}

#[then(compile_if = "[Self::if_closing]")]

fn finish_close(self, ctx: Context) {

// only allow finish_close after waiting for timelock

let mut tmpl = ctx.template().set_sequence(-1, self.timeout)?;

// add party 0 if they have funds

if Amount::from(self.parties[0].amount).as_sat() != 0 {

tmpl = tmpl.add_output(self.parties[0].amount.into(), &self.parties[0].key, None)?;

}

// add party 1 if they have funds

if Amount::from(self.parties[1].amount).as_sat() != 0 {

tmpl = tmpl.add_output(self.parties[1].amount.into(), &self.parties[1].key, None)?;

}

tmpl.into()

}

}

Almost lastly, we’ll add the updating logic. The updating logic has to be used in a very

particular way in this contract, but it’s pretty basic by itself!

// updating a channel

enum Update {

// nothing to do!

None,

// An update that can later be 'burned' (revoked)

Revokable(Revokable),

// An update that is formed to terminate a channel

Cooperate([Participant; 2])

}

impl Channel {

#[guard]

fn both_signed(self, ctx: Context) {

Clause::And(vec![Clause::Key(self.parties[0].key),

Clause::Key(self.parties[1].key)])

}

#[continuation(guarded_by = "[Self::both_signed]")]

fn update(self, ctx: Context, u: Update) {

match u {

// don't do anything

Update::None => empty(),

// send funds to the revokable contract

Update::Revokable(r) => {

// note -- technically we only need to sign revokables where

// state == State::Closing, but we do both for efficiency

ctx.template()

.add_output(Amount::from(self.parties[0].amount) +

Amount::from(self.parties[1].amount), &r, None)?

.into()

},

// Terminate the channel into two payouts.

Update::Cooperate(c) => {

ctx.template()

.add_output(c[0].amount.into(), &c[0].key, None)?

.add_output(c[1].amount.into(), &c[1].key, None)?

.into()

}

}

}

}

Now to finish we need to define some sort of thing for Revokable. Revokables

are used to update a channel from one set of balances to another. This will

depend on your payment channel implementation. I’ve defined a basic one below,

but this could be anything you like.

Essentially, a Revokable is an offer from party A to party B to close the

channel such that B can later provably “reject” the offer. If B uses a rejected

offer, A can take the entire balance of the channel.

How to use this to update a channel? To start, all parties agree on the new

balances with a timeout.

Next, party one gets a hash H(V) from party two, where party two knows the preimage V and party

one does not. Party one then creates a Revokable with from_idx = 0, the

updated balances, timelock, and hash H(V). They feed the update arguments to

Channel::update and sign the resulting transaction, sending the signed

transaction to party two. In particular in non-interactive channels, party one

only has to sign revokable updates at the branch where state ==

State::Closing, but it’s better for cases where your counterparty might not be

malicious and just offline if you sign updates on both Open and Closing.

Just signing on Open would be insecure.

Then, we repeat this with roles reversed with one generating a hash and two

signing transactions.

Lastly, both parties reveal the hash preimage (the V behind H(V)) from any prior round to

revoke the state from their counterparty.

If either party ever broadcasts the Revokable that they received by signing the

other half of the Channel::update after revealing their Hash preimage, the

other party can take all the funds in the channel.

Kinda a bit tough to understand, but you don’t really need to get it, you can

embed whatever protocol like this inside that you want.

struct Revokable {

// updated balances

parties: [Participant; 2],

// hash H(V) of a preimage V known to the other party

hash: Hash,

// how long the other party has to revoke

timelock: AnyRelTimeLock,

// who is this update from

from_idx: u8,

}

impl Contract for Revokable {

declare!{then, Self::finish}

declare!{finish, Self::revoked}

}

impl Revokable {

/// after waiting for the timeout, close the balances out at the appropriate values.

#[then]

fn finish(self, ctx: Context) {

let mut tmpl = ctx.template().set_sequence(-1, self.timelock)?;

if Amount::from(self.parties[0].amount).as_sat() != 0 {

tmpl = tmpl.add_output(self.parties[0].amount.into(), &self.parties[0].key, None)?;

}

if Amount::from(self.parties[1].amount).as_sat() != 0 {

tmpl = tmpl.add_output(self.parties[1].amount.into(), &self.parties[1].key, None)?;

}

tmpl.into()

}

/// if this was revoked by the other party

/// we can sweep all the funds

#[guard]

fn revoked(self, ctx: Context) {

Clause::And(vec![

Clause::Sha256(self.hash),

Clause::Key(self.parties[self.from_idx as usize].key)])

}

}
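To make the revocation flow a bit more concrete, here is a toy model of how a broadcast offer resolves. It's a sketch under loud assumptions: `toy_hash` uses the standard library's `DefaultHasher` purely as a stand-in for SHA256 (it is not cryptographic), there are no timelocks or signatures, and `Offer`, `Outcome`, and `resolve` are hypothetical names, not part of Sapio.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Stand-in for H(V). ASSUMPTION: DefaultHasher is NOT cryptographic;
/// a real channel would use SHA256 as in the contract's hash clause.
fn toy_hash(preimage: &str) -> u64 {
    let mut hasher = DefaultHasher::new();
    preimage.hash(&mut hasher);
    hasher.finish()
}

/// A revocable offer (hypothetical mirror of the `Revokable` fields,
/// minus the timelock).
struct Offer {
    /// balances to pay out if the offer is honored
    balances: [u64; 2],
    /// H(V), where V is known to the party receiving the offer
    hash: u64,
    /// index of the party who made the offer
    from_idx: usize,
}

#[derive(Debug, PartialEq)]
enum Outcome {
    /// Timeout path: both parties are paid their balances.
    Split([u64; 2]),
    /// Revoked path: the offering party takes everything.
    Swept { winner: usize, amount: u64 },
}

/// Resolve an offer that the receiving party has broadcast.
/// `revealed` is the preimage the offering party has learned, if any.
fn resolve(offer: &Offer, revealed: Option<&str>) -> Outcome {
    match revealed {
        // The receiver revoked this offer earlier by revealing V, then
        // broadcast it anyway: the offering party sweeps the channel.
        Some(v) if toy_hash(v) == offer.hash => Outcome::Swept {
            winner: offer.from_idx,
            amount: offer.balances[0] + offer.balances[1],
        },
        // No valid preimage is known: the balances pay out as offered.
        _ => Outcome::Split(offer.balances),
    }
}
```

For example, an `Offer` with `hash: toy_hash("v-round-1")` resolves to `Split` while the preimage stays secret, and to `Swept` once `"v-round-1"` has been revealed to the offering party.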

And now some closing remarks:

CTV Required?

You don’t need CTV for these channel specs to work, but you do need CTV for the

channels to be non-interactive. Without CTV you just use a multi-sig oracle of

both parties, and the contracts come out logically similar to an existing

lightning channel. Does that mean we’re going to enter…

The Era of Sapio Lightning?

It’s probably going to be a while/never before this actually becomes a

“Lightning” standard thing, even if you could use this with self-hosted oracles

today, although perhaps one day it could be!

However, it’s possible! One path towards that would be if, perhaps, Sapio gets

used to help define the “spec” that all lightning protocols should implement.

Then it’d be theoretically possible to use Sapio for a channel implementation!

Or maybe Sapio becomes a “plugin engine” for negotiating channels and updates can

just be shipping some WASM.

What didn’t make the cut?

Some ideas to mention, but not fully flesh out (yet?):

Eltoo

So, so very much. To start CTV+CSFS can do something like Eltoo, no need for

AnyPrevout. Very neat! If we had some Eltoo primitive available, I could show you

revocation-free channels.

Embedded Sapio States

Instead of making the channel state a boring “pay X to 0, pay Y to 1”

resolution, we can actually embed all sorts of contracts inside of channels.

E.g., imagine you have a channel whereby, if you contest-close it, the funds of your

counterparty (who is conceivably offline) go to a cold-storage vault.

Or imagine if you had some sort of oracle resolved synthetic bitcoin settled

derivative contract, like a DLC, embedded inside. You could then use this to HFT

your synths!

Or what if there were some new-fangled token protocol that lived inside state

transition to state transition, and you could update you and your counterparty’s

stake into those?

You can really put anything you want. We’ll see in a couple days how you can

define a Channel Plugin Interface so that you can dynamically link a logic

module into a contract, rather than compiling it in.

Embedded Channels

We saw a little bit of embedded channels. Channels embedded in congestion

control, or in payment pools. But the concept can be a lot more diverse.

Remember our Vaults and inheritance schemes? We could make the hot-wallet

payouts from those go directly into Channels with some channel operator hub. Or

what about making channels directly out of coinjoins? Not having to pre-sign

everything really helps. Don’t sleep on this.

Embedded Channel Creation Args

We said earlier that channel creation required some sort of email. But it’s also

sometimes possible to embed the channel metadata into e.g. an op_return on the

channel creation. Perhaps as an IPFS hash or something. In this case, you would

just need to scan over txs, download the relevant data, and then attempt

plugging it into WASM (heck – the WASM could just receive the txn in question

and do all the heavy lifting). If the WASM spits out a matching output/channel

address, you now have a channel you can detect automatically. This doesn’t have

to be bad for privacy if the data is encrypted somehow!

How will this impact the world?

Non-interactive channel creation is going to, for many users, dramatically

decrease the cost of channel opening. Firstly you can defer paying fees when you

open many channels (big news)! In fact, if the channel is long lived enough, you

may never pay fees if someone else does first! That incentive to wait is called

backpressure. It’s also going to “cut through” a lot of cases (e.g., exchange

withdraw, move from cold storage, etc) that would otherwise require 2

transactions. And channels in Payment Pools have big opportunities to leverage

cooperative actions/updates to dramatically reduce chain load in the happy-case.

This is a gigantic boon not just for scalability, but also for privacy. The less

that happens on chain the better!

I think it’s also likely that with non-interactive channels, one might always

(as was the case with our cafe) opportunistically open channels instead of

normal payments. Removing the “counterparty online” constraint is huge. Being

able to just open it up and bet that you’ll be able to route is a big win. This

is similar to “PayJoin”, whereby you try to always coin-join transactions on all

payments for both privacy and fee savings.

Tomorrow, we’ll see sort of a magnum opus of using non-interactive channels, so

stay tuned folks, that’s all for today.

Day 13: Rubin's Bitcoin Advent Calendar

10 Dec 2021

Welcome to day 13 of my Bitcoin Advent Calendar. You can see an index of all

the posts here or subscribe at

judica.org/join to get new posts in your inbox

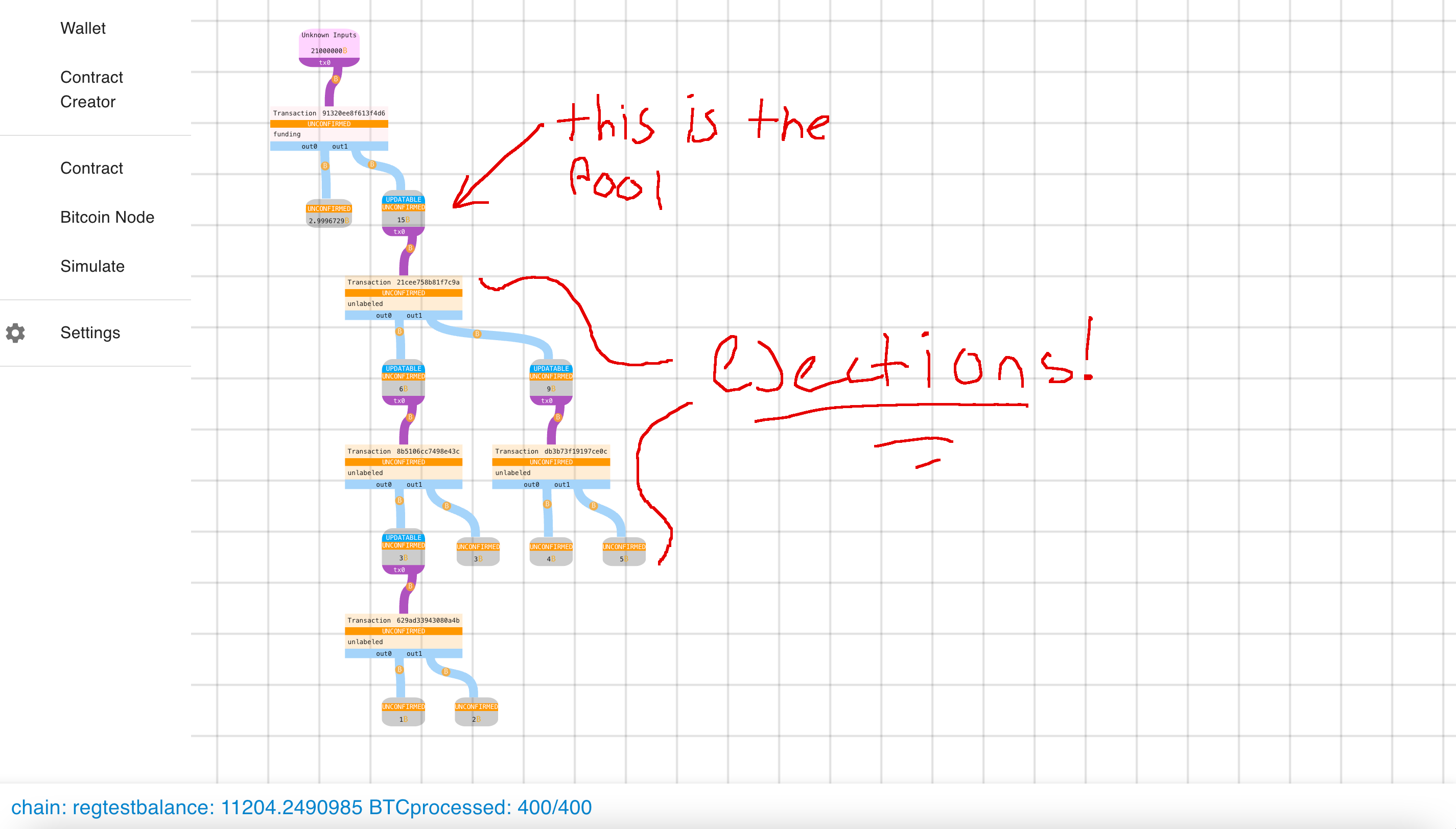

Payment Pools are a general concept for a technique to share a single UTXO among

a group. They’ve been discussed for a couple years, but now that

Taproot is active are definitely more relevant! In this post we’ll go through

some really simple Payment Pool designs before turning it up a little bit :)

Mechanistically, all that is required of a Payment Pool is that:

- It’s a single (shared) UTXO

- Every user can get their funds out unilaterally

- A set of users can authorize spending the funds

- Unspent funds/change stays in the pool

Why Pool?

Pools are really great for a number of reasons. In particular, Payment Pools are

fantastic for Scalability since they mean 1 utxo can serve many masters, and

also each txn only requires one signature to make a batched payment from a

group. Payment Pools are kinda a killer version of a coin-join where you roll

the funds from coinjoin to coinjoin automatically, giving you great privacy.

We’ll also see how they benefit decentralization in a couple of days.

What’s the simplest design that can satisfy this?

Imagine a coin that is either N-of-N multisig OR a transaction distributing the

coins to all users. The Sapio would look a bit like this:

struct SimplePool {

/// list of all initial balances

members: HashMap<PublicKey, Amount>

}

impl SimplePool {

/// Send their balances to everyone

#[then]

fn ejection(self, ctx: Context) {

let mut t = ctx.template();

for (key, amount) in self.members.iter() {

t = t.add_output(*amount, key, None)?;

}

t.into()

}

/// all signed the transaction!

#[guard]

fn all_signed(self, ctx: Context) {

Clause::Threshold(self.members.len(),

self.members

.keys()

.cloned()

.map(Clause::Key)

.collect())

}

}

impl Contract for SimplePool {

declare!{then, Self::ejection}

declare!{finish, Self::all_signed}

}

Let’s check our list:

- It’s a single UTXO – Check

- Every user can get their funds out unilaterally – Check, with SimplePool::ejection

- A set of users can authorize spending the funds – Check, unanimously

- Unspent funds/change stay in the pool – We’ll give this a Check, just don’t sign transactions that don’t meet this constraint.

So we’re good! This is all we need.

But is it really all we need?

It’d be nice if the Payment Pool had a little bit more structure around the

updating so that a little bit less was left to the user to do correctly.

Luckily, Sapio has tools for that. Let’s define a transition function in Sapio

that generates what we should do with SimplePool::all_signed.

The transition function should take a list of signed updates per participant and

generate a transaction for signing (signing the inputs helps with coordinating

not signing the incorrect transaction). Any leftover funds should be sent into a

new instance of the Payment Pool for future use.

We’ll also make one more change for efficient ejections: In the version I gave

above, the unilateral ejection option exits everyone out of the pool, which

kinda sucks.

However, we will ‘hybridize’ the payment pool with the tree payment. Then, you

would have “hierarchical” pools whereby splitting would keep pools alive. E.g.,

if you had 30 people in a pool with a splitting radix of 2, 1 person

force-ejecting themselves would create something like 1 pool of size 15, 1 pool

of size 7, 1 pool of size 4, 1 pool of size 2, and 2 ejected people. They can

always re-join a pool again after!
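The 30-person example can be checked with a few lines of arithmetic. This is a minimal sketch, assuming a radix of 2, a midpoint rule of `(n + 1) / 2`, and that the ejecting member always sits in the first half of each split; `split_sizes` and `surviving_pools` are hypothetical helper names for illustration.

```rust
/// Sizes of the two halves produced by one split of a pool with `n` members.
/// ASSUMPTION: the first half gets (n + 1) / 2 members, the second half the rest.
fn split_sizes(n: usize) -> (usize, usize) {
    let mid = (n + 1) / 2;
    (mid, n - mid)
}

/// Sizes of the sibling pools left behind while one member repeatedly
/// splits the pool they are in until they stand alone.
/// ASSUMPTION: the ejecting member is always in the first half.
fn surviving_pools(mut n: usize) -> Vec<usize> {
    let mut siblings = Vec::new();
    while n > 1 {
        let (mine, other) = split_sizes(n);
        siblings.push(other); // this half stays alive as its own pool
        n = mine; // keep splitting the half that contains the ejecting member
    }
    siblings
}
```

`surviving_pools(30)` gives `[15, 7, 4, 2, 1]`: pools of 15, 7, 4, and 2 stay alive, and the final split leaves two single-member outputs (the ejecting member and one neighbor), matching the "2 ejected people" above. The length of the returned list is the number of transactions the ejection took.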

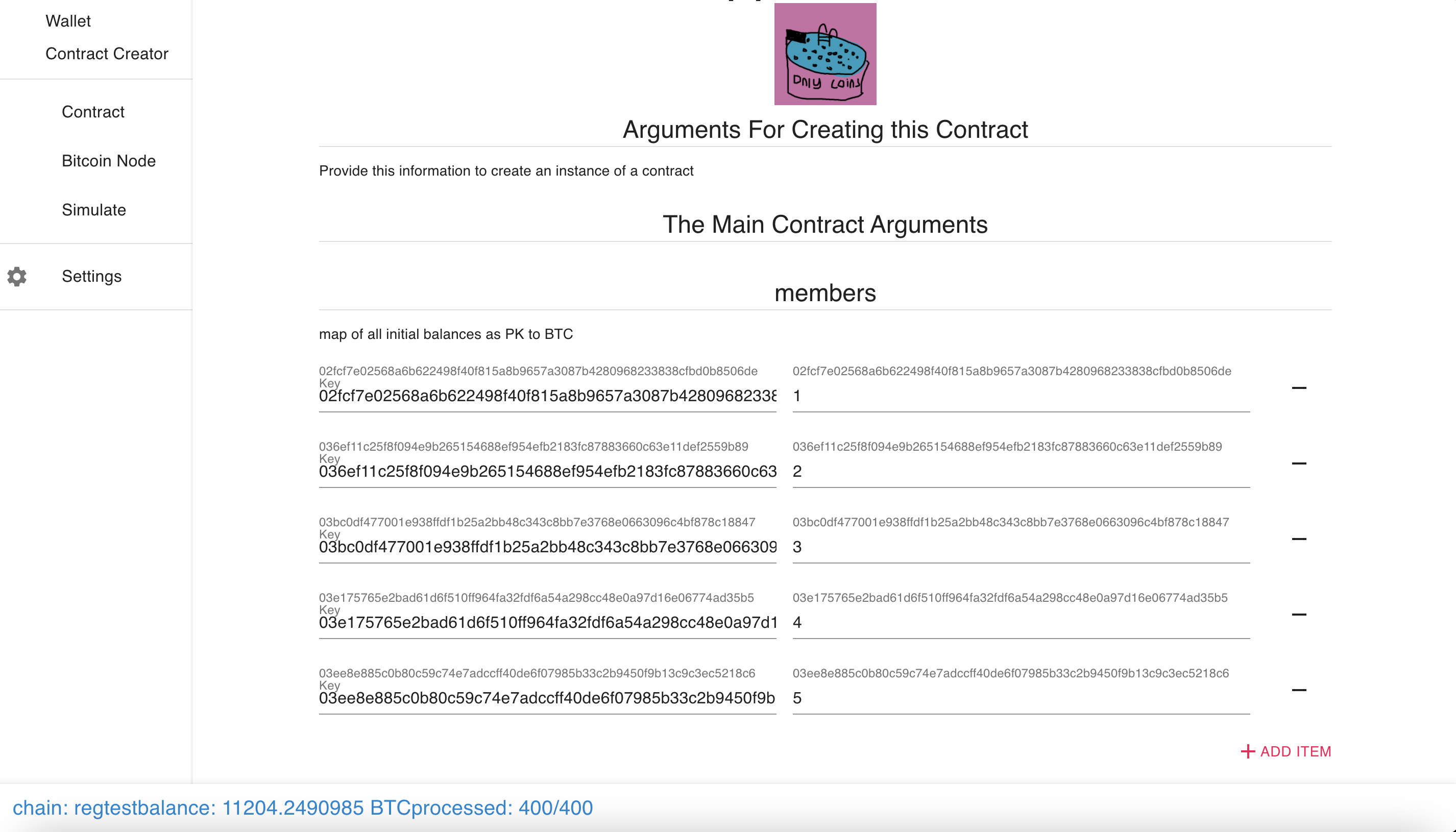

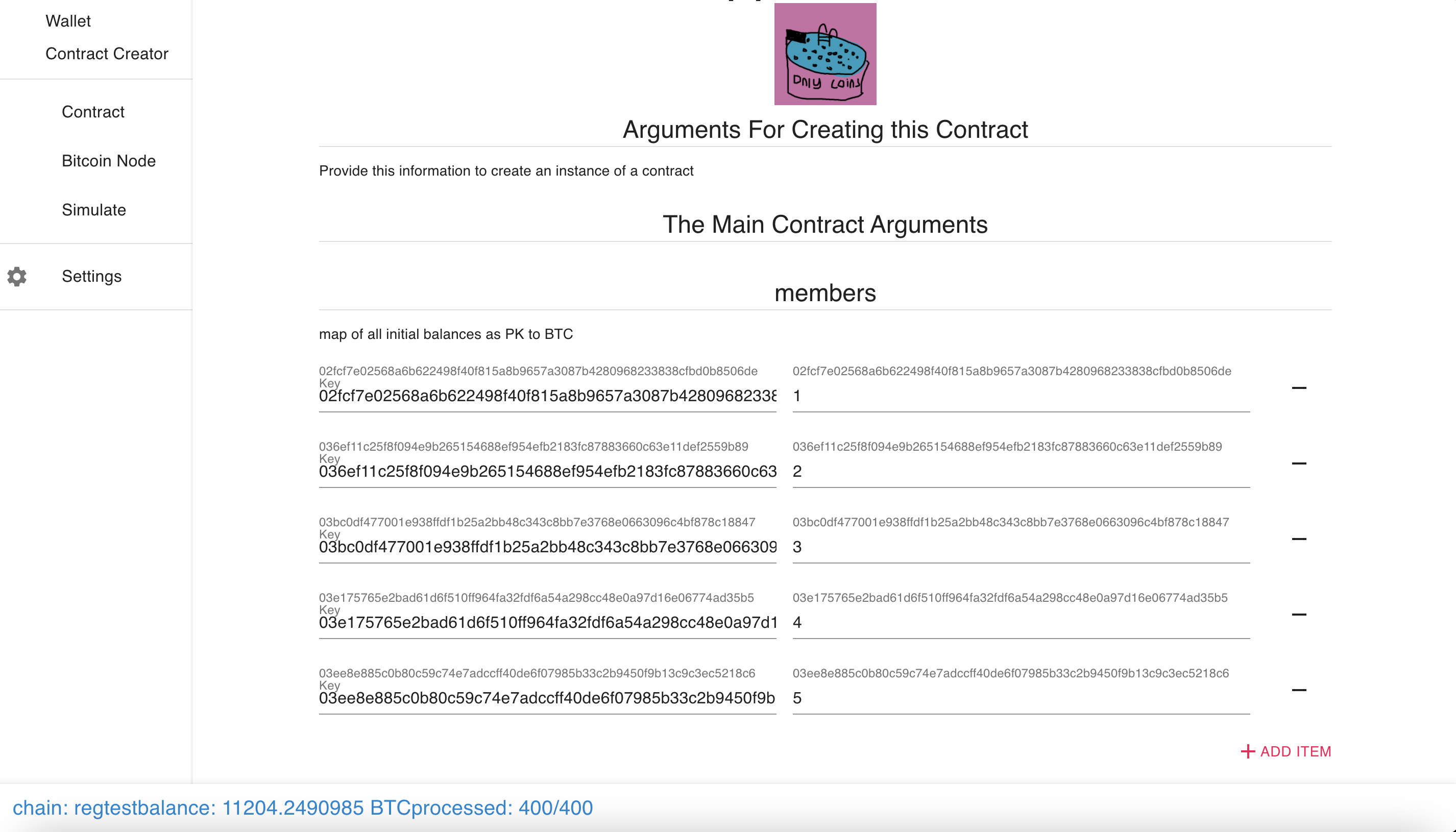

First, we’ll define the basic Pool data and interface:

#[derive(Deserialize, JsonSchema, Clone)]

struct NextTxPool {

/// map of all initial balances as PK to BTC

members: BTreeMap<PublicKey, AmountF64>,

/// The current sequence number (for authenticating state updates)

sequence: u64,

/// Whether to require signatures (debugging, should be true)

sig_needed: bool,

}

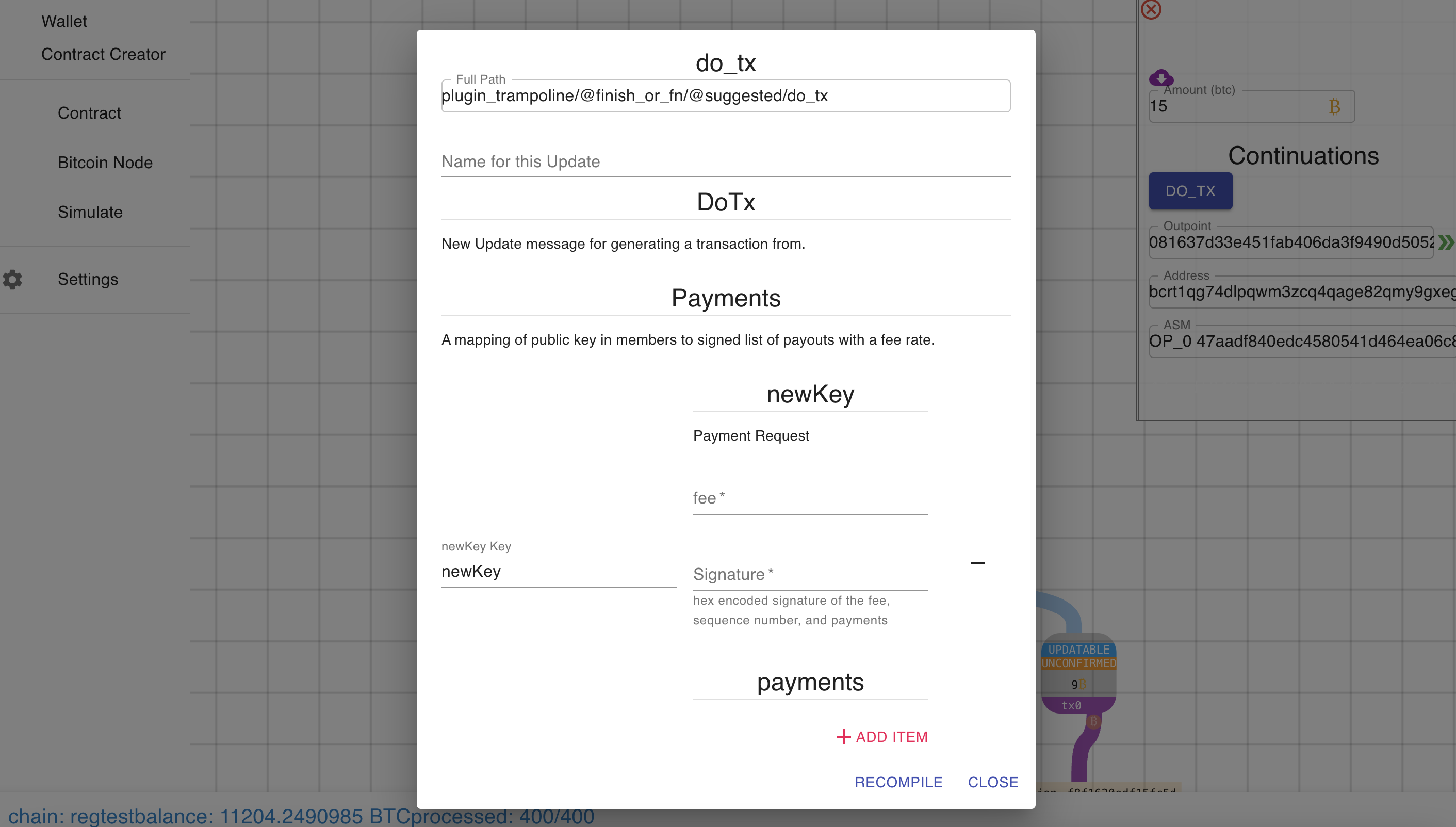

impl Contract for NextTxPool {

declare! {then, Self::ejection}

declare! {updatable<DoTx>, Self::do_tx}

}

Now we’ll define the logic for ejecting from the pool:

impl NextTxPool {

/// Sum Up all the balances

fn total(&self) -> Amount {

self.members

.values()

.cloned()

.map(Amount::from)

.fold(Amount::from_sat(0), |a, b| a + b)

}

/// Only compile an ejection if the pool has other users in it, otherwise

/// it's base case.

#[compile_if]

fn has_eject(self, ctx: Context) {

if self.members.len() > 1 {

ConditionalCompileType::Required

} else {

ConditionalCompileType::Never

}

}

/// Split the pool in two -- users can eject multiple times to fully eject.

#[then(compile_if = "[Self::has_eject]")]

fn ejection(self, ctx: Context) {

let mut t = ctx.template();

let mid = (self.members.len() + 1) / 2;

// find the middle

let key = self.members.keys().nth(mid).expect("must be present");

let mut pool_one: NextTxPool = self.clone();

pool_one.sequence += 1;

let pool_two = NextTxPool {

// removes the back half including key

members: pool_one.members.split_off(&key),

sequence: self.sequence + 1,

sig_needed: self.sig_needed,

};

let amt_one = pool_one.total();

let amt_two = pool_two.total();

t.add_output(amt_one, &pool_one, None)?

.add_output(amt_two, &pool_two, None)?

.into()

}

}

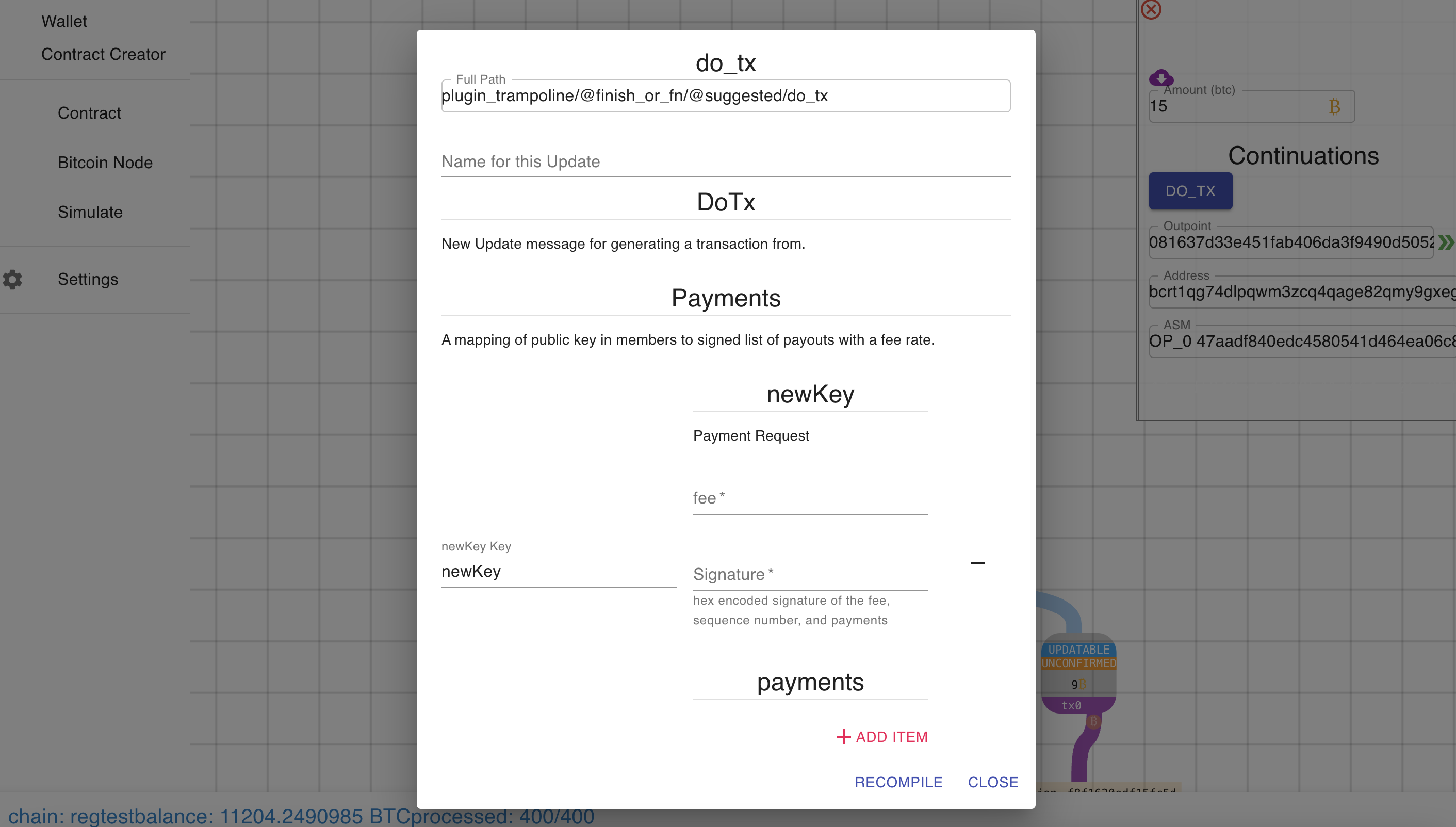

Next, we’ll define some data types for instructing the pool to update:

/// Payment Request

#[derive(Deserialize, JsonSchema)]

struct PaymentRequest {

/// # Signature

/// hex encoded signature of the fee, sequence number, and payments

hex_der_sig: String,

fee: AmountF64,

payments: BTreeMap<Address, AmountF64>,

}

/// New Update message for generating a transaction from.

#[derive(Deserialize, JsonSchema)]

struct DoTx {

/// # Payments

/// A mapping of public key in members to signed list of payouts with a fee rate.

payments: HashMap<PublicKey, PaymentRequest>,

}

/// required...

impl Default for DoTx {

fn default() -> Self {

DoTx {

payments: HashMap::new(),

}

}

}

impl StatefulArgumentsTrait for DoTx {}

/// helper for rust type system issue

fn default_coerce(

k: <NextTxPool as Contract>::StatefulArguments,

) -> Result<DoTx, CompilationError> {

Ok(k)

}

Lastly, we’ll define the logic for actually doing the update:

impl NextTxPool {

/// all signed the transaction!

#[guard]

fn all_signed(self, ctx: Context) {

Clause::Threshold(

self.members.len(),

self.members.keys().cloned().map(Clause::Key).collect(),

)

}

/// This Function will create a proposed transaction that is safe to sign

/// given a list of data from participants.

#[continuation(

guarded_by = "[Self::all_signed]",

coerce_args = "default_coerce",

web_api

)]

fn do_tx(self, ctx: Context, update: DoTx) {

// don't allow empty updates.

if update.payments.is_empty() {

return empty();

}

// collect members with updated balances here

let mut new_members = self.members.clone();

// verification context

let secp = Secp256k1::new();

// collect all the payments

let mut all_payments = vec![];

let mut spent = Amount::from_sat(0);

// for each payment...

for (

from,

PaymentRequest {

hex_der_sig,

fee,

payments,

},

) in update.payments.iter()

{

// every from must be in the members

let balance = self

.members

.get(from)

.ok_or(CompilationError::TerminateCompilation)?;

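// total being spent by this member: payments plus fee

let total_out = payments

.values()

.cloned()

.map(Amount::from)

.fold(Amount::from_sat(0), |a, b| a + b)

+ Amount::from(*fee);

// check for no underflow: Amount is unsigned, so a plain `-` would

// panic on an overdraft instead of producing a negative value

let new_balance = Amount::from(*balance)

.checked_sub(total_out)

.ok_or(CompilationError::TerminateCompilation)?;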

// updates the balance or remove if empty

if new_balance.as_sat() > 0 {

new_members.insert(from.clone(), new_balance.into());

} else {

new_members.remove(from);

}

// collect all the payment

for (address, amt) in payments.iter() {

spent += Amount::from(*amt);

all_payments.push(Payment {

address: address.clone(),

amount: Amount::from(*amt).into(),

})

}

// Check the signature for this request

// came from this user

if self.sig_needed {

let mut hasher = sha256::Hash::engine();

hasher.write(&self.sequence.to_le_bytes());

hasher.write(&Amount::from(*fee).as_sat().to_le_bytes());

for (address, amt) in payments.iter() {

hasher.write(&Amount::from(*amt).as_sat().to_le_bytes());

hasher.write(address.script_pubkey().as_bytes());

}

let h = sha256::Hash::from_engine(hasher);

let m = Message::from_slice(&h.as_inner()[..]).expect("Correct Size");

let signed: Vec<u8> = FromHex::from_hex(&hex_der_sig)

.map_err(|_| CompilationError::TerminateCompilation)?;

let sig = Signature::from_der(&signed)

.map_err(|_| CompilationError::TerminateCompilation)?;

let _: () = secp

.verify(&m, &sig, &from.key)

.map_err(|_| CompilationError::TerminateCompilation)?;

}

}

// Send any leftover funds to a new pool

let change = NextTxPool {

members: new_members,

sequence: self.sequence + 1,

sig_needed: self.sig_needed,

};

// We'll use the contract from our last post to make the state

// transitions more efficient!

// Think about what else could be fun here though...

let out = TreePay {

participants: all_payments,

radix: 4,

};

ctx.template()

.add_output(change.total(), &change, None)?

.add_output(spent, &out, None)?

.into()

}

}

Now it’s pretty neat – rather than “exercise for the reader”, we can have Sapio

generate payment pool updates for us. And exiting from the pool is very

efficient and keeps most users online. But speaking of exercises for the reader,

try thinking through these extensions…

No Code: Payout to where?

Payouts in this version are defined as being to an address.

How creative can we get with that? What if the payment request is 1 BTC to

address X and we generated X as a 1 BTC expecting Vault in Sapio?

What else cool can we do?

Cut-through

We could make our DoTx differentiate between internal and external payouts. An

internal payout would allow for adding a new key OR for increasing the balance

of an existing key before other payments are processed. E.g., suppose we have

Alice with 1 BTC and Bob with 2. Under the code above, Alice sending 0.5 to Bob

and Bob sending 2.1 to Carol externally would fail, and Alice’s payment would remove funds from

the pool. If we want to keep funds in the pool, we can do that! And if we want payments to draw on

the balance from new internal transfers, we could process those before any deductions.

Internal transfers to multiple addresses per user can also be used to improve

privacy!

It should also be possible to have external inputs add balance to the pool

during any state update.
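Here's a rough sketch of what that cut-through ordering could look like, using the Alice and Bob numbers from above. It is only a model under stated assumptions: balances are plain satoshi counts, fees and signatures are ignored, and `Balances`, `Internal`, `External`, and `apply_update` are hypothetical names that don't exist in the pool code.

```rust
use std::collections::BTreeMap;

/// Pool balances in satoshis, keyed by a member label (hypothetical model).
type Balances = BTreeMap<&'static str, u64>;

/// A transfer that stays inside the pool.
struct Internal {
    from: &'static str,
    to: &'static str,
    amount: u64,
}

/// A payment that leaves the pool.
struct External {
    from: &'static str,
    amount: u64,
}

/// Apply one update: credit internal transfers first, then process every
/// deduction. Returns None if anyone would overdraw. ASSUMPTION: fees and
/// signature checks are ignored in this sketch.
fn apply_update(
    balances: &Balances,
    internal: &[Internal],
    external: &[External],
) -> Option<Balances> {
    let mut next = balances.clone();
    // 1. Credits: may add a new member or top up an existing one.
    for t in internal {
        let credited = next.entry(t.to).or_insert(0);
        *credited = credited.checked_add(t.amount)?;
    }
    // 2. Debits: internal senders and external payers alike.
    let debits = internal
        .iter()
        .map(|t| (t.from, t.amount))
        .chain(external.iter().map(|p| (p.from, p.amount)));
    for (from, amount) in debits {
        let balance = next.get_mut(from)?;
        *balance = balance.checked_sub(amount)?;
    }
    // 3. Members with nothing left drop out of the pool.
    next.retain(|_, sats| *sats > 0);
    Some(next)
}
```

With Alice at 1 BTC and Bob at 2 BTC, an internal transfer of 0.5 from Alice to Bob plus Bob's external 2.1 payment leaves Alice with 0.5 and Bob with 0.4 in the pool. Without the internal credit, Bob's 2.1 payment alone returns `None`.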

Fees?

I basically gloss over fees in this presentation… But there is more work to

be done to control and process fees fairly!

Cold-er Ejections

If you get kicked out of a pool because you went offline, might you be able to

specify – per user – some sort of vault program for the evicted coins to go into?

Howdy Partner

Who is next to whom is actually kinda relevant for a Pool with Efficient Ejections.

For example, if the pool splits because of an undersea cable breaking off France

and Britain, dividing users based on English or French would be much better than

random because after one transaction you could have all the English and French

users split and able to communicate again.

What different heuristics might you group people by? Reputation system? Amount

of funds at stake? Random? Sorted lexicographically?

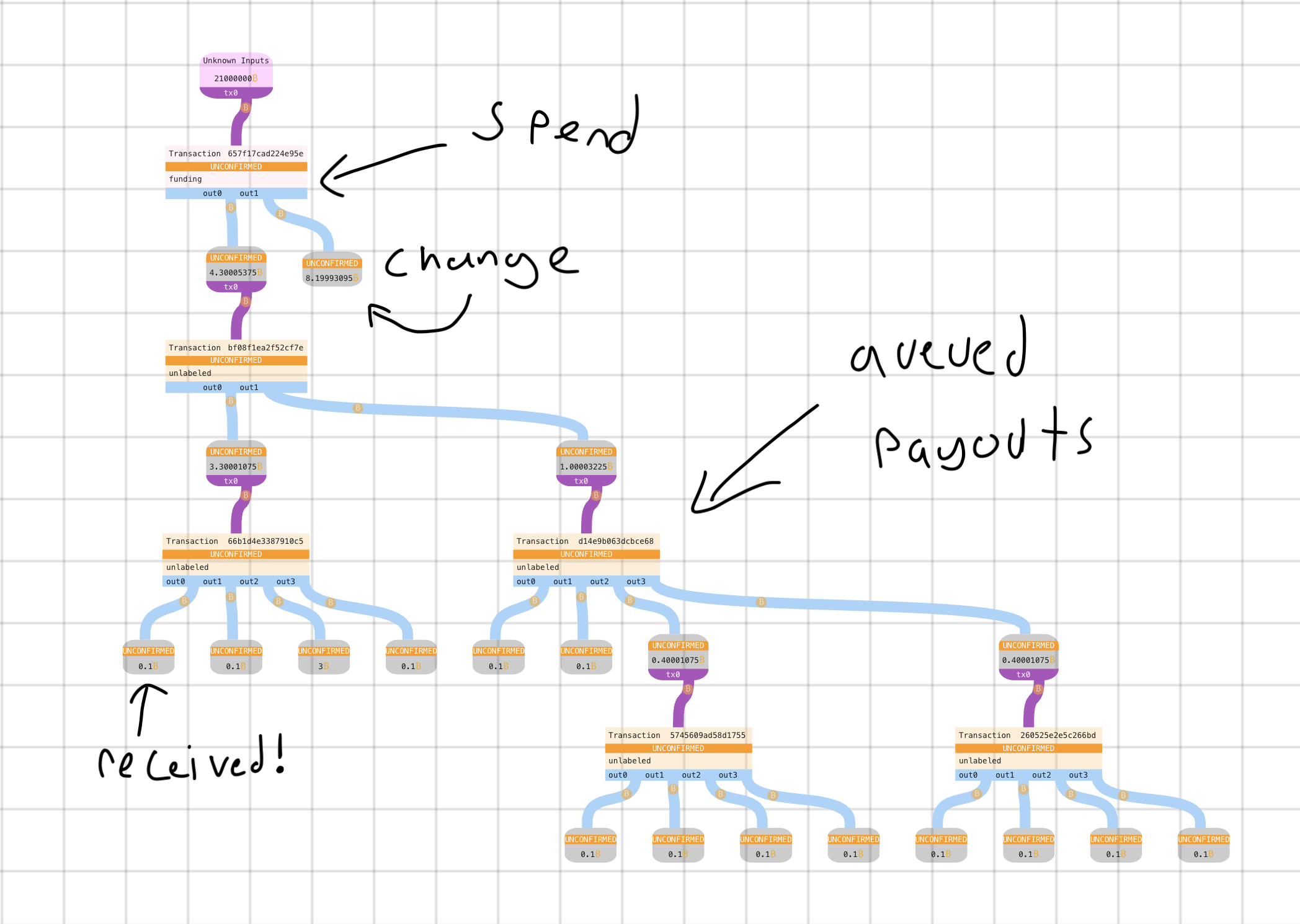

Let’s look at some pictures:

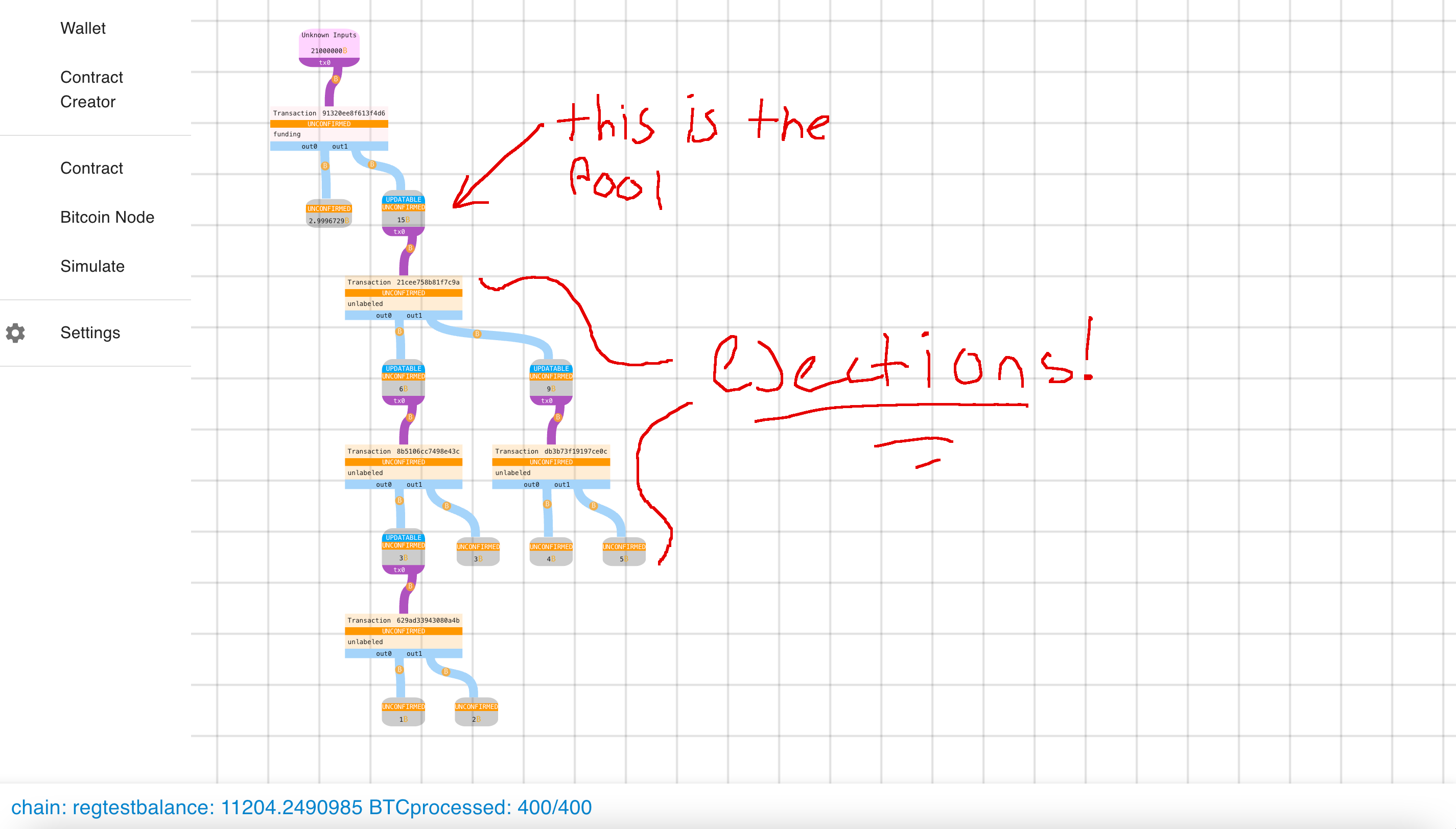

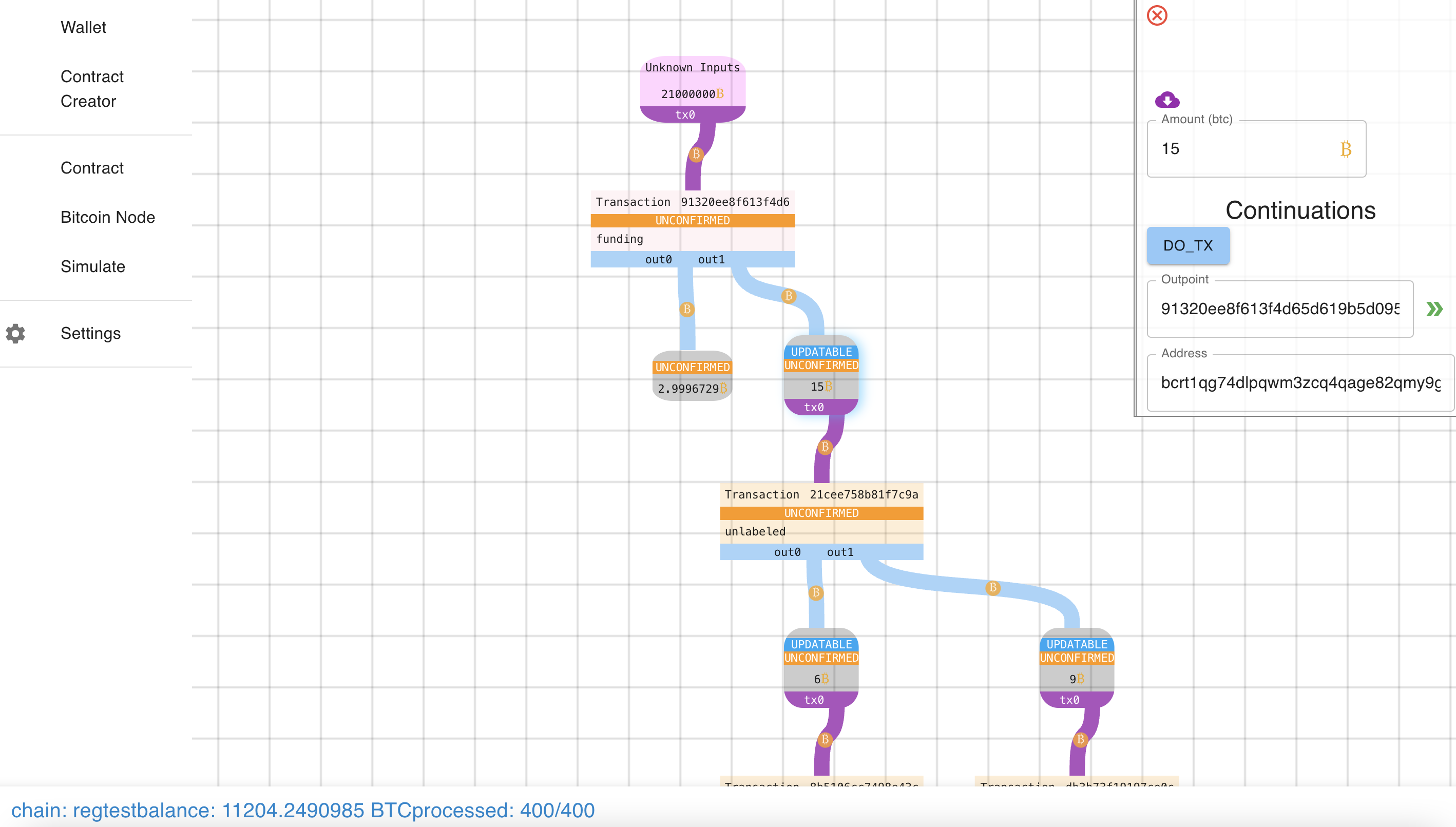

Creating a Pool

Pool Created!

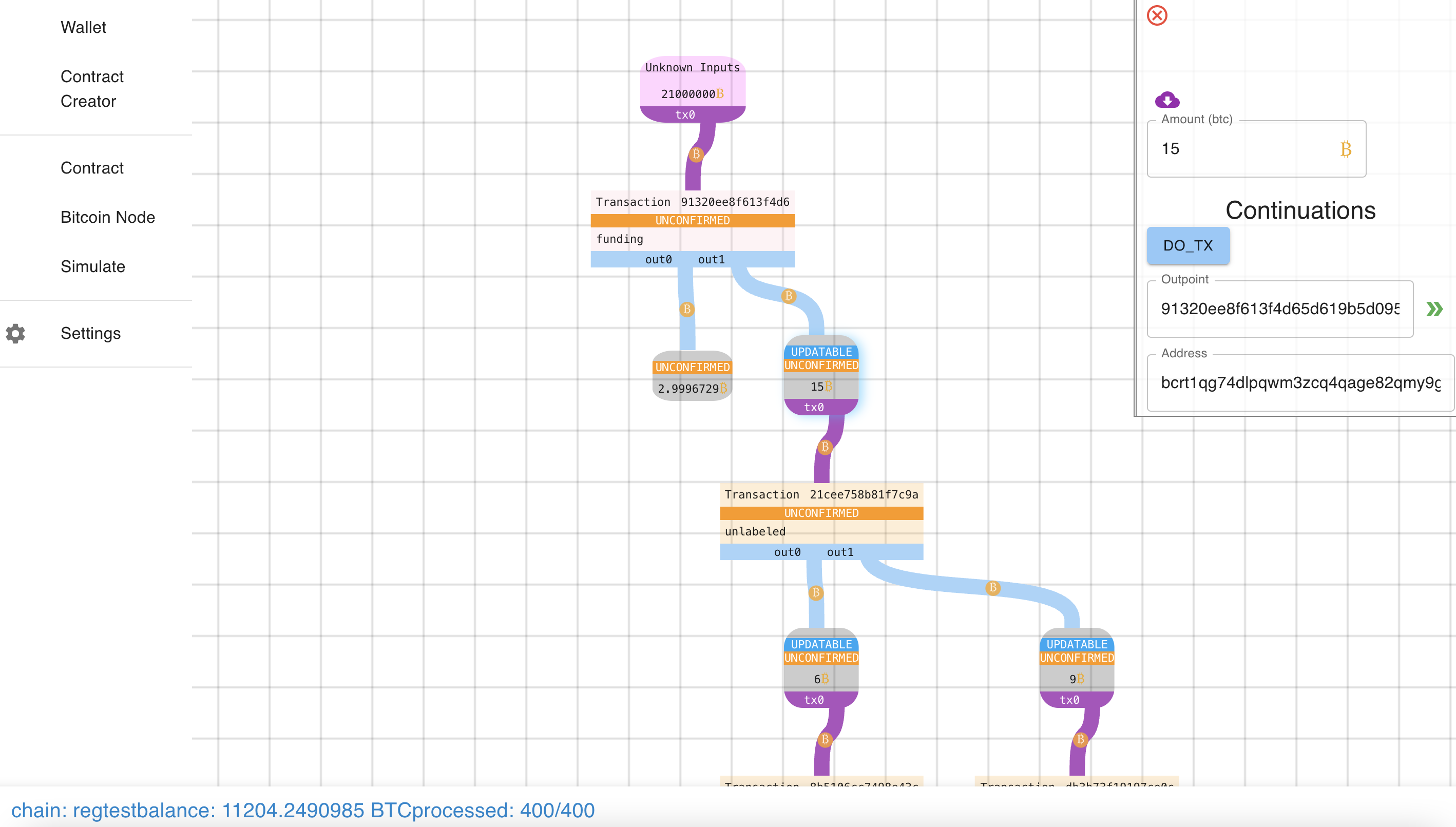

Inspecting the Root

Entering an update

Updated TX Graph

(had a ux bug, need to fix it before I add this :p)

Do Payment Pools Need CTV?

Not necessarily. Payment pools as shown can be done today, but they require

participants to use their own emulation / pre-signing servers before depositing

funds.

This might not seem bad; we already need everyone online for an update, right?

It’s truly not awful. However, many use cases of payment pool essentially

require being able to generate a payment pool without having all of the parties

online at the time of creation. E.g., imagine that your exchange matches you

with reputable payment pool counterparties when you withdraw (if you request

it). We’ll see the need concretely in a future post.

What about the Taproots

Unfortunately, rust-bitcoin/miniscript work on Taproot is still ongoing, so I

can’t show you how cool Taproot is for this. But essentially, our

Self::all_signed clauses become just a single key! And they can be

non-interactively generated at every level for the tree-ejection version. This is

great! It will work pretty much automatically without changing the user-code once

the compiler supports taproot. Huge boon for privacy and efficiency!

Contrast this V.S….

As noted, there are some other proposals out there.

It’s the author’s opinion that Sapio + CTV are the best form of payment pool

compared to alternatives for both scalability and privacy. To fully understand

why is a lot more technical than this already technical post (believe it or not).

If you want to get into it,

you can see my accounting for costs on the mailing list:

It boils down to a few things:

- Cheaper

- Simpler

- More Composable

- Better Privacy

In posts coming soon we’ll get a heck’n lot more creative with what goes inside

a payment pool, including lightning, mining pools, and “daos”! But that’s all

for today.

Day 12: Rubin's Bitcoin Advent Calendar

09 Dec 2021

Welcome to day 12 of my Bitcoin Advent Calendar. You can see an index of all

the posts here or subscribe at

judica.org/join to get new posts in your inbox

Congestion is an ugly word, eh? When I hear it my fake synesthesia triggers green

slime feeling, being stuck in traffic with broken AC, and ~the bread line~

waiting for your order at a crowded restaurant when you’re super starving. All

not good things.

So Congestion Control sounds pretty sweet right? We can’t do anything about the

demand itself, but maybe we can make the experience better. We can take a mucinex,

drive in the HOV lane, and eat the emergency bar you keep in your bag.

How might this be used in Bitcoin?

- Exchange collects N addresses they need to pay some bitcoin

- Exchange inputs into this contract

- Exchange gets a single-output transaction, which they broadcast with high fee to get quick confirmation.

- Exchange distributes the redemption paths to all recipients (e.g. via mempool, email, etc).

- Users verify that the funds are “locked in” with this contract.

- Party

- Over time, when users are willing to pay fees, they CPFP pay for their redemptions (worst case cost \(O(\log N)\))

Throughout this post, we’ll show how to build the above logic in Sapio!
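To get a feel for that \(O(\log N)\) worst case, here is a small sketch that counts how many levels of transactions sit between the exchange's single output and one recipient. It assumes the payouts are arranged as a tree with a fixed fan-out; `redemption_depth` and its `radix` parameter are hypothetical names chosen for this illustration.

```rust
/// Number of tree levels needed so that `radix`-way splits can reach
/// `recipients` leaves, i.e. the smallest d with radix^d >= recipients.
/// ASSUMPTION: a fixed fan-out tree; real layouts may differ.
fn redemption_depth(recipients: u64, radix: u64) -> u32 {
    assert!(radix >= 2, "a tree needs a fan-out of at least 2");
    let mut depth = 0;
    let mut capacity: u64 = 1;
    while capacity < recipients {
        // saturating_mul avoids overflow for very large inputs
        capacity = capacity.saturating_mul(radix);
        depth += 1;
    }
    depth
}
```

For example, `redemption_depth(1_000, 2)` is 10 and `redemption_depth(1_000, 4)` is 5, so a wider tree means fewer (but larger) transactions on the way to any one payout.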

Before we get into that…

Talk Nerdy To Me

Let’s define some core concepts… Don’t worry too much if these are a bit hard

to get, it’s just useful context to have or think about.

Latency

Latency is the time from some notion of “started” to “stopped”. In Bitcoin you could think of the latency from 0 confirmations on a transaction (in mempool) to 1 confirmation (in a block), which is minimally expected to be 10

minutes for high fee transactions, but could be longer depending on the other transactions.

Fairness

Fairness is a measure of how “equitable” a distribution of goods or services is.

For example, suppose I want to divide 10 cookies among 10 children.

What if 1 child gets two cookies and the other 9 get 8/9ths of a cookie each? Or

what if 1 child gets no cookie and the other 9 get 10/9ths of a cookie each? How

fair is that?

Mathematicians and computer scientists love to come up with different measures

of fairness to be able to quantitatively compare these scenarios and their

relative fairness.
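As one concrete example of such a measure (my pick for illustration, not the only option), Jain's fairness index scores an allocation as \((\sum x_i)^2 / (n \sum x_i^2)\), which is 1 when everyone gets the same share and falls toward \(1/n\) as one person takes everything. A minimal sketch, with `jain_index` as a hypothetical helper name:

```rust
/// Jain's fairness index: (sum of shares)^2 / (n * sum of squared shares).
/// Returns 1.0 for an empty or all-zero allocation, treating "everyone
/// got the same nothing" as perfectly even (a choice made for this sketch).
fn jain_index(shares: &[f64]) -> f64 {
    let n = shares.len() as f64;
    let sum: f64 = shares.iter().sum();
    let sum_sq: f64 = shares.iter().map(|x| x * x).sum();
    if sum_sq == 0.0 {
        return 1.0;
    }
    (sum * sum) / (n * sum_sq)
}

/// Build an allocation where one child gets `first` and the other
/// `others` children each get `rest`.
fn one_and_rest(first: f64, rest: f64, others: usize) -> Vec<f64> {
    let mut shares = vec![first];
    shares.extend(std::iter::repeat(rest).take(others));
    shares
}
```

Running this on the two cookie scenarios, `one_and_rest(2.0, 8.0 / 9.0, 9)` and `one_and_rest(0.0, 10.0 / 9.0, 9)`, both come out at about 0.9, so by this particular measure they are equally unfair, while ten children with one cookie each score 1.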

In Bitcoin we might think of different types of fairness: how long does your

transaction spend in the mempool? How much fee did you pay?

Throughput & Capacity

Let’s spend another moment on fairness. Perfectly fair would be:

- All children get 1 cookie

- All children get 1/10th of 1 cookie.

- All children get 0 cookies.

Clearly only one of these is particularly efficient.

Thus, we don’t just want to measure fairness, we also want to measure the

throughput against the capacity. The capacity is the maximum throughput, and the

throughput is essentially how many of those cookies get eaten (usually, over

time). Now let’s look at our prior scenarios:

- All children get 1 cookie: Perfect Throughput.

- All children get 1/10th of 1 cookie: 1/10th Throughput/Capacity.

- All children get 0 cookies: 0 Throughput :(

In this case it seems simple: why not just divide the cookies you big butt!

Well sometimes it’s hard to coordinate the sharing of these resources. For

example, think about if the cookies had to be given out in a buffet. The first

person might just take two cookies, not aware there were other kids who wouldn’t

get one!

This maps well onto the Bitcoin network. A really rich group of people might do

a bunch of relatively high fee transactions that are low importance to them and

inadvertently price out lower fee transactions that are more important to the

sender. It’s not malicious, just a consequence of having more money. So even

though Bitcoin can achieve 1MB of base transaction data every 10 minutes, that

capacity might get filled with a couple big consolidation transactions instead

of many transfers.

Burst & Over Provisioning

One issue that comes up in systems is that users show up randomly. How often

have you been at a restaurant with no line, you order your food, and then as

soon as you sit down the line has ten people in it? Lucky me, you think. I

showed up at the right time! But then ten minutes later the line is clear.

Customers show up kind of randomly. And thus we see big bursts of activity.

Typically, in order to accommodate the bursts a restaurant must over-provision

its staff. They only make money when customers are there, and they need to

serve them quickly. But in between bursts, staff might just be watching grass

grow.

The same is true for Bitcoin. Transactions show up somewhat unpredictably, so

ideally Bitcoin would have ample space to accommodate any burst (this isn’t

true).

Little’s Law

Little’s law is a deceptively simple concept:

\[L = \lambda \times W\]

where \(L = \) length of the queue, \(\lambda = \) the arrival rate and

\(W=\) the average time a customer spends in the system.

What’s remarkable about it is that it makes almost no assumptions about the underlying process.

This can be used to think about, e.g., a mempool.

Suppose there are 10,000 transactions in the mempool, and based on historical

data we see 57 txns a minute.

\[\frac{10{,}000 \texttt{ transactions}}{57 \texttt{ transactions per minute}} \approx 175 \texttt{ minutes}\]

Thus we can infer how long transactions will on average spend waiting in the

mempool, without knowing what the bursts look like! Very cool.
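The same rearrangement of Little's law, \(W = L / \lambda\), fits in a couple of lines of code. This is just a sketch; `average_wait_minutes` and `queue_length` are hypothetical helper names, and the inputs are the illustrative mempool numbers from above.

```rust
/// Little's law solved for W: average time in the system = L / lambda.
/// Returns None when the arrival rate is not positive, since the law
/// says nothing useful in that case.
fn average_wait_minutes(queue_len: f64, arrivals_per_minute: f64) -> Option<f64> {
    if arrivals_per_minute > 0.0 {
        Some(queue_len / arrivals_per_minute)
    } else {
        None
    }
}

/// Little's law in its original form: L = lambda * W.
fn queue_length(arrivals_per_minute: f64, average_wait_minutes: f64) -> f64 {
    arrivals_per_minute * average_wait_minutes
}
```

`average_wait_minutes(10_000.0, 57.0)` comes out to roughly 175.4 minutes, and feeding that back into `queue_length` recovers the 10,000 queued transactions.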

I’m just showing off

I didn’t really need to make you read that gobbledygook, but I think they are

really useful concepts that anyone who wants to think about the impacts of

congestion & control techniques should keep in mind… Hopefully you learned

something!

It’s Bitcoin Time

Well, what’s going on in Bitcoin land? When we make a transaction there are

multiple different things going on.

- We are spending coins

- We are creating new coins

Currently, those two steps occur simultaneously. Think of our cookies. Imagine

if we let one kid get cookies at a time, and they also have to get their milk at

the same time. Then we let the next kid go. It’s going to take

\[T_{milk} + T_{cookies}\]

To get everyone served. What if instead we said kids could get one and then the

other, in separate lines.

Now it will take something closer to \(\max(T_{milk}, T_{cookies})\).

Whichever process is longer will dominate the time. (Probably milk).

Now imagine that getting a cookie takes 1 second per child, and getting a milk

takes 30 seconds. Everyone knows that you can have a cookie and have milk after.

If children take a random amount of time – let’s say on average 3 minutes,

sometimes more, sometimes less – to eat their cookies, then we can serve 10

kids cookies in 10 seconds, making everyone happy, and then fill up the milks

while everyone is enjoying a cookie. However, if we did the opposite – got

milks and then got cookies, it would take much longer for all of the kids to

get something and you’d see chaos.
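To put rough numbers on the milk and cookies story, here is a back-of-the-envelope sketch. It assumes a single server per line and uses \(\max(T_{milk}, T_{cookies})\) as the estimate for the separate-lines case; the `Service` struct and its methods are hypothetical names for illustration.

```rust
/// Parameters of the snack line (hypothetical model).
struct Service {
    kids: u64,
    cookie_secs: u64,
    milk_secs: u64,
}

impl Service {
    /// Time to hand every kid a cookie.
    fn t_cookies(&self) -> u64 {
        self.kids * self.cookie_secs
    }

    /// Time to pour every kid a milk.
    fn t_milk(&self) -> u64 {
        self.kids * self.milk_secs
    }

    /// One kid at a time, cookie and milk together: T_milk + T_cookies.
    fn bundled_total(&self) -> u64 {
        self.t_milk() + self.t_cookies()
    }

    /// Separate lines: roughly max(T_milk, T_cookies), an estimate only.
    fn unbundled_total_estimate(&self) -> u64 {
        self.t_milk().max(self.t_cookies())
    }

    /// How long until every kid has received their first item.
    fn everyone_has_something_after(&self, cookies_first: bool) -> u64 {
        if cookies_first {
            self.t_cookies()
        } else {
            self.t_milk()
        }
    }
}
```

With 10 kids, 1 second per cookie, and 30 seconds per milk, the bundled line takes 310 seconds and the separate lines about 300. The bigger difference is in latency: with cookies first every kid has something after 10 seconds, while with milk first it takes 300.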

Back to Bitcoin. Spending coins and creating new coins is a bit like milk and

cookies. We can make the spend correspond to distributing the cookies and

setting up the milk line. And the creating of the new coin can be more akin to

filling up milks whenever a kid wants it.

What this means practically is that by unbundling spending from redeeming we can

serve a much greater number of users than if they were one aggregate product

because we are taking the “expensive part” and letting it happen later than the

“cheap part”. And if we do this cleverly, the “setting up the milk line” in the

splitting of the spend allows all receivers to know they will get their fair share later.

This makes the system much higher throughput (unlimited confirmations of

transfer) and lower latency to confirmation (you can see when a spend will

eventually pay you). The trade-offs are higher latency to coin creation in the

best case, although potentially no different in the average case, and

(potentially) worse overall throughput, since we have some waste from coordinating the splitting.

It also improves costs because we may be willing to pay a higher price for part

one (since it generates the confirmation) than part two.

Can we build it?

Let’s start with a basic example of congestion control in Sapio.

First we define a payment as just being an Amount and an Address.

/// A payment to a specific address

pub struct Payment {

/// # Amount

/// The amount to send in btc

pub amount: AmountF64,

/// # Address

/// The Address to send to

pub address: Address,

}

Next, we’ll define a helper called PayThese, which takes a list of contracts

of some kind and pays them after an optional delay in a single transaction.

You can think of this (back to our kids) as calling a group of kids at a time

(e.g., table 1, then table 2) to get their cookies.

struct PayThese {

contracts: Vec<(Amount, Box<dyn Compilable>)>,

fees: Amount,

delay: Option<AnyRelTimeLock>,

}

impl PayThese {

#[then]

fn expand(self, ctx: Context) {

let mut bld = ctx.template();

// Add an output for each contract

for (amt, ct) in self.contracts.iter() {

bld = bld.add_output(*amt, ct.as_ref(), None)?;

}

// if there is a delay, add it

if let Some(delay) = self.delay {

bld = bld.set_sequence(0, delay)?;

}

// pay some fees

bld.add_fees(self.fees)?.into()

}

fn total_to_pay(&self) -> Amount {

let mut amt = self.fees;

for (x, _) in self.contracts.iter() {

amt += *x;

}

amt

}

}

impl Contract for PayThese {

declare! {then, Self::expand}

declare! {non updatable}

}

Lastly, we’ll define the logic for congestion control. The basic idea is that

we define two transactions: one which pays from A -> B, and then one which is

guaranteed by B’s script to pay from B -> {1…n}. This splits the small

confirmation txn from the larger payout txn.

However, we’re going to be a little more clever than that. We’ll apply this principle

recursively to create a tree.

Essentially what we are going to do is to take our 10 kids and then divide them

into groups of 2 (or whatever radix). E.g.: {1,2,3,4,5,6,7,8,9,10} would become

{ {1,2}, {3,4}, {5,6}, {7,8}, {9,10} }. The magic happens when we recursively

apply this idea, like below:

{1,2,3,4,5,6,7,8,9,10}

{ {1,2}, {3,4}, {5,6}, {7,8}, {9,10} }

{ { {1,2}, {3,4} }, { {5,6}, {7,8} }, {9,10} }

{ { {1,2}, {3,4} }, { { {5,6}, {7,8} }, {9,10} } }

{ { { {1,2}, {3,4} }, { { {5,6}, {7,8} }, {9,10} } } }

The end result of this grouping is a single group! So now we could do a

transaction to pay/give cookies to that one group, and then if we wanted 9 to

get their cookie/sats we’d only have to publish:

level 0 to: Address({ { { {1,2}, {3,4} }, { { {5,6}, {7,8} }, {9,10} } } })

level 1 to: Address({ { {5,6}, {7,8} }, {9,10} })

level 2 to: Address({9,10})
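Before the Sapio version, here's a rough sketch of the same grouping idea in plain Rust, with no Bitcoin types at all. It assumes a simple FIFO queue that bundles `radix` entries at a time, so the exact nesting it produces can differ from the hand-drawn grouping above. The `Node` type and both function names are invented for this illustration.

```rust
use std::collections::VecDeque;

/// A node in the payout tree: a single recipient, or a group that one
/// transaction expands into its children.
#[derive(Debug, Clone, PartialEq)]
enum Node {
    Leaf(u32),
    Group(Vec<Node>),
}

/// Bundle `recipients` into groups of up to `radix`, pushing each new group
/// to the back of the queue, until a single root group remains.
fn build_tree(recipients: &[u32], radix: usize) -> Node {
    // With a radix below 2 the queue would never shrink.
    assert!(radix >= 2, "radix must be at least 2");
    let mut queue: VecDeque<Node> =
        recipients.iter().copied().map(Node::Leaf).collect();
    loop {
        let take = radix.min(queue.len());
        let group: Vec<Node> = queue.drain(..take).collect();
        if queue.is_empty() {
            // Nothing left to bundle: this group is the root.
            return Node::Group(group);
        }
        queue.push_back(Node::Group(group));
    }
}

/// How many transactions must be published before `target` has an output of
/// its own, or `None` if `target` is not in the tree.
fn txs_to_reach(node: &Node, target: u32) -> Option<usize> {
    match node {
        Node::Leaf(id) => {
            if *id == target {
                Some(0)
            } else {
                None
            }
        }
        Node::Group(children) => children
            .iter()
            .find_map(|child| txs_to_reach(child, target))
            .map(|depth| depth + 1),
    }
}
```

For 10 kids and radix 2, `txs_to_reach(&build_tree(&kids, 2), 9)` comes out to `Some(3)`, the same count as the three levels listed above, even though the queue nests the groups in a slightly different order.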

Now let’s show that in code:

/// # Tree Payment Contract

/// This contract is used to help decongest bitcoin

/// while giving users full confirmation of transfer.

#[derive(JsonSchema, Serialize, Deserialize)]

pub struct TreePay {

/// # Payments

/// all of the payments needing to be sent

pub participants: Vec<Payment>,

/// # Tree Branching Factor

/// the radix of the tree to build.

/// Optimal for users should be around 4 or

/// 5 (with CTV, not emulators).

pub radix: usize,

#[serde(with = "bitcoin::util::amount::serde::as_sat")]

#[schemars(with = "u64")]

/// # Fee Sats (per tx)

/// The amount of fees per transaction to allocate.

pub fee_sats_per_tx: bitcoin::util::amount::Amount,

/// # Relative Timelock Backpressure

/// When enabled, exert backpressure by slowing down

/// tree expansion node by node either by time or blocks

pub timelock_backpressure: Option<AnyRelTimeLock>,

}

impl TreePay {

#[then]

fn expand(self, ctx: Context) {

// A queue of all the payments to be made initialized with

// all the input payments

let mut queue = self

.participants

.iter()

.map(|payment| {

// Convert the payments to an internal representation

let mut amt = AmountRange::new();

amt.update_range(payment.amount);

let b: Box<dyn Compilable> =

Box::new(Compiled::from_address(payment.address.clone(),

Some(amt)));

(payment.amount, b)

})

.collect::<VecDeque<(Amount, Box<dyn Compilable>)>>();

loop {

// take out a group of size `radix` payments

let v: Vec<_> = queue

.drain(0..std::cmp::min(self.radix, queue.len()))

.collect();

if queue.is_empty() {

// in this case, there's no more payments to make so bundle

// them up into a final transaction

let mut builder = ctx.template();

for pay in v.iter() {

builder = builder.add_output(pay.0, pay.1.as_ref(), None)?;

}

if let Some(timelock) = self.timelock_backpressure {

builder = builder.set_sequence(0, timelock)?;

}

builder = builder.add_fees(self.fee_sats_per_tx)?;

return builder.into();

} else {

// There are still more, so make this group and add it to

// the back of the queue

let pay = Box::new(PayThese {

contracts: v,

fees: self.fee_sats_per_tx,

delay: self.timelock_backpressure,

});

queue.push_back((pay.total_to_pay(), pay))

}

}

}

}

impl Contract for TreePay {

declare! {then, Self::expand}

declare! {non updatable}

}

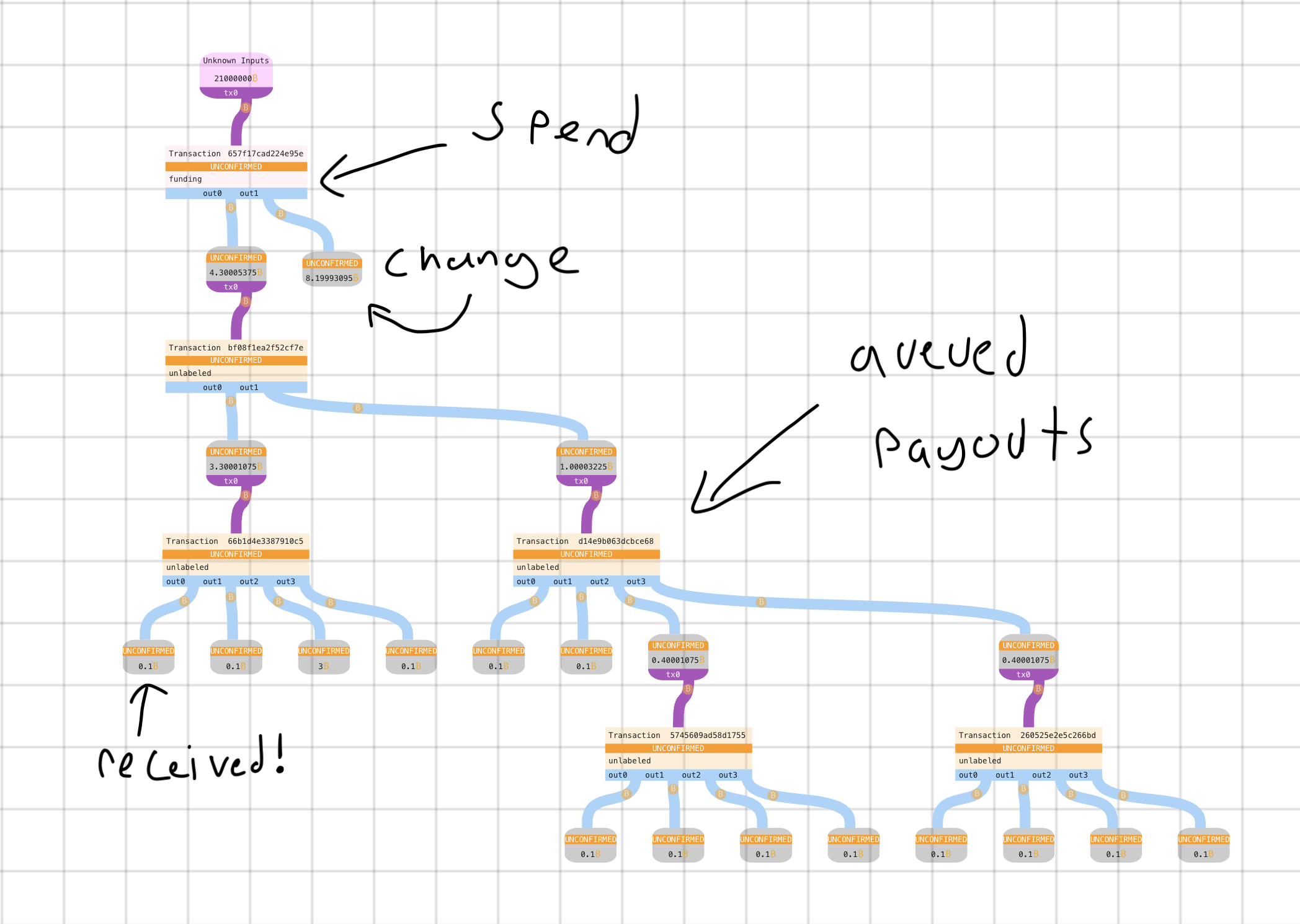

So now what does that look like when we send to it? Let’s do a TreePay with 14 recipients and radix 4:

As you can see, the queuing puts some structure into a batched payment! This is

(roughly) the same code as above generating these transactions. What this

also means is given an output and a description of the arguments passed to the

contract, anyone can re-generate the expansion transactions and verify that they

can eventually receive their money! These payout proofs can also be delivered in

a pruned form, but that’s just a bonus.

Everyone gets their cookie (confirmation of transfer) immediately, and knows

they can get their milk (spendability) later. A smart wallet could manage your

liquidity over pending redemptions, so you could passively expand outputs

whenever fees are cheap.

There are a lot of extensions to this basic design, and we’ll see two really

exciting ones tomorrow and the next day!

If you want to read more about the impact of congestion control on the network,

I previously wrote two articles simulating it,

which you can read here:

What’s great about this is that not only do we provide a big benefit for anyone who

wants to use it, we show in the Batching Simulation that even with the overheads

of a TreePay, the incentive compatible behavior around exchange batching can

actually help us use less block space overall.
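To get a feel for those overheads, here's a back-of-the-envelope sketch that counts how many transactions a queue-built tree contains and how many sats the funding output would need. It assumes the same FIFO grouping as `TreePay` above, a flat fee per transaction, and that every transaction's input must cover its outputs plus that fee. The function names are hypothetical.

```rust
/// Count the transactions in a tree built by repeatedly bundling up to
/// `radix` queue entries into one group (the root counts as one).
fn tree_tx_count(recipients: usize, radix: usize) -> usize {
    assert!(recipients >= 1, "need at least one recipient");
    // With a radix below 2 the queue would never shrink.
    assert!(radix >= 2, "radix must be at least 2");
    let mut queue_len = recipients;
    let mut txs = 0;
    loop {
        // Bundle the front of the queue into a single transaction.
        queue_len -= radix.min(queue_len);
        txs += 1;
        if queue_len == 0 {
            return txs;
        }
        // The new group goes to the back of the queue.
        queue_len += 1;
    }
}

/// Sats the funding output must hold: every payment plus one flat fee for
/// each transaction in the tree.
fn funding_sats(payments: &[u64], radix: usize, fee_sats_per_tx: u64) -> u64 {
    let total: u64 = payments.iter().sum();
    total + tree_tx_count(payments.len(), radix) as u64 * fee_sats_per_tx
}
```

For 14 recipients and radix 4 this counts 5 transactions, so at 200 sats per transaction the tree reserves 1,000 sats of fees where a single flat batch would reserve 200. The difference is that most of those fees only need to be spent when someone actually wants their output expanded.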

How channel balancing might look.

Chart showing that the rewards are smoother over time.