
Day 19: Rubin's Bitcoin Advent Calendar

16 Dec 2021

Welcome to day 19 of my Bitcoin Advent Calendar. You can see an index of all the posts here or subscribe at judica.org/join to get new posts in your inbox.

For today’s post we’re going to build out some Sapio NFT protocols that are client-side verifiable. Today we’ll focus on code; tomorrow we’ll do more discussion and show how they work. I was sick last night (minor burrito-oriented food poisoning suspected), so I got behind, hence this post being up late.

As usual, the disclaimer: since I’ve been behind, we’re less focused today on correctness and more focused on giving you the shape of the idea. In other words, I’m almost positive it won’t work properly, but it does compile! And the general flow looks correct.

There are also a couple of new concepts I want to adopt as I’ve been working on this, so those will have to happen as I refine this idea to be production grade.

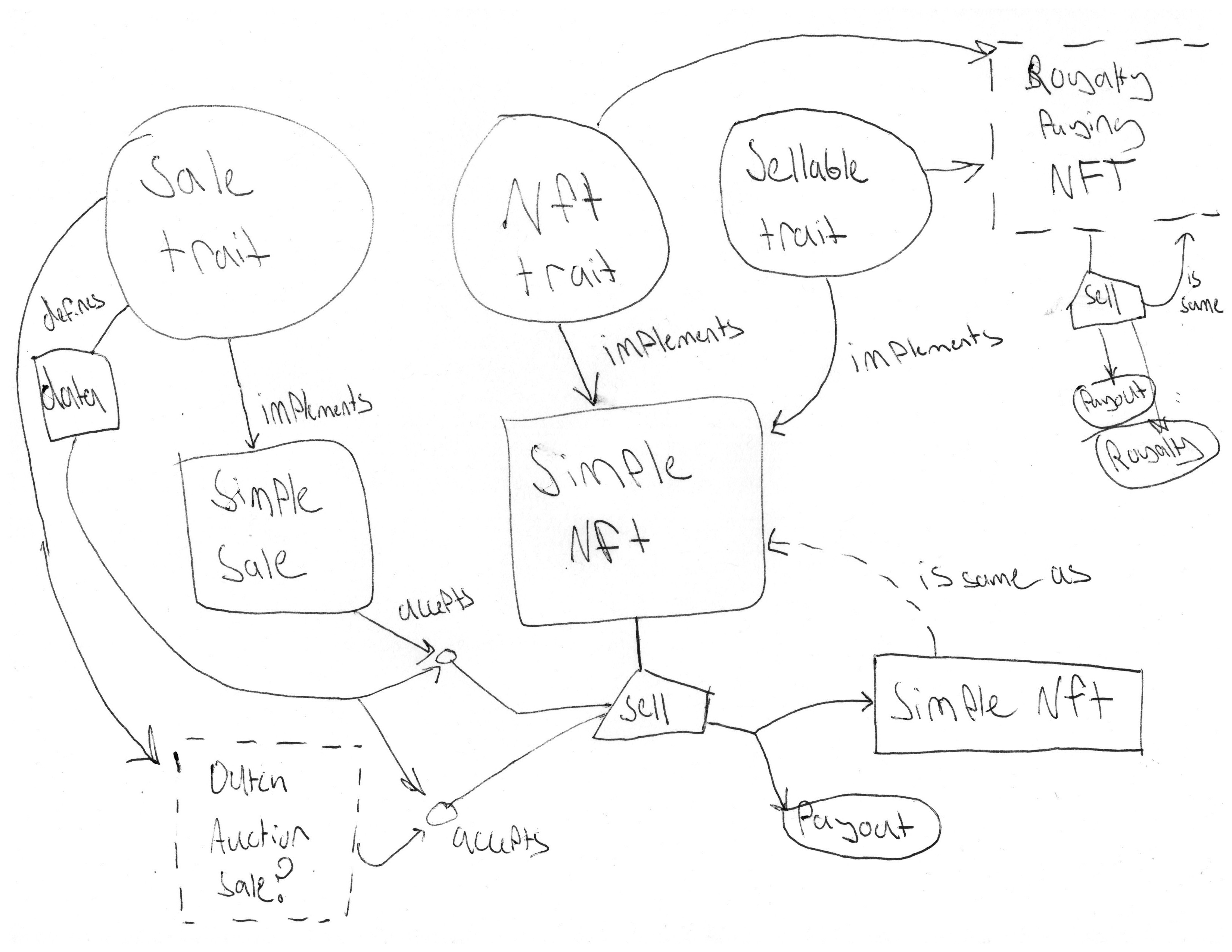

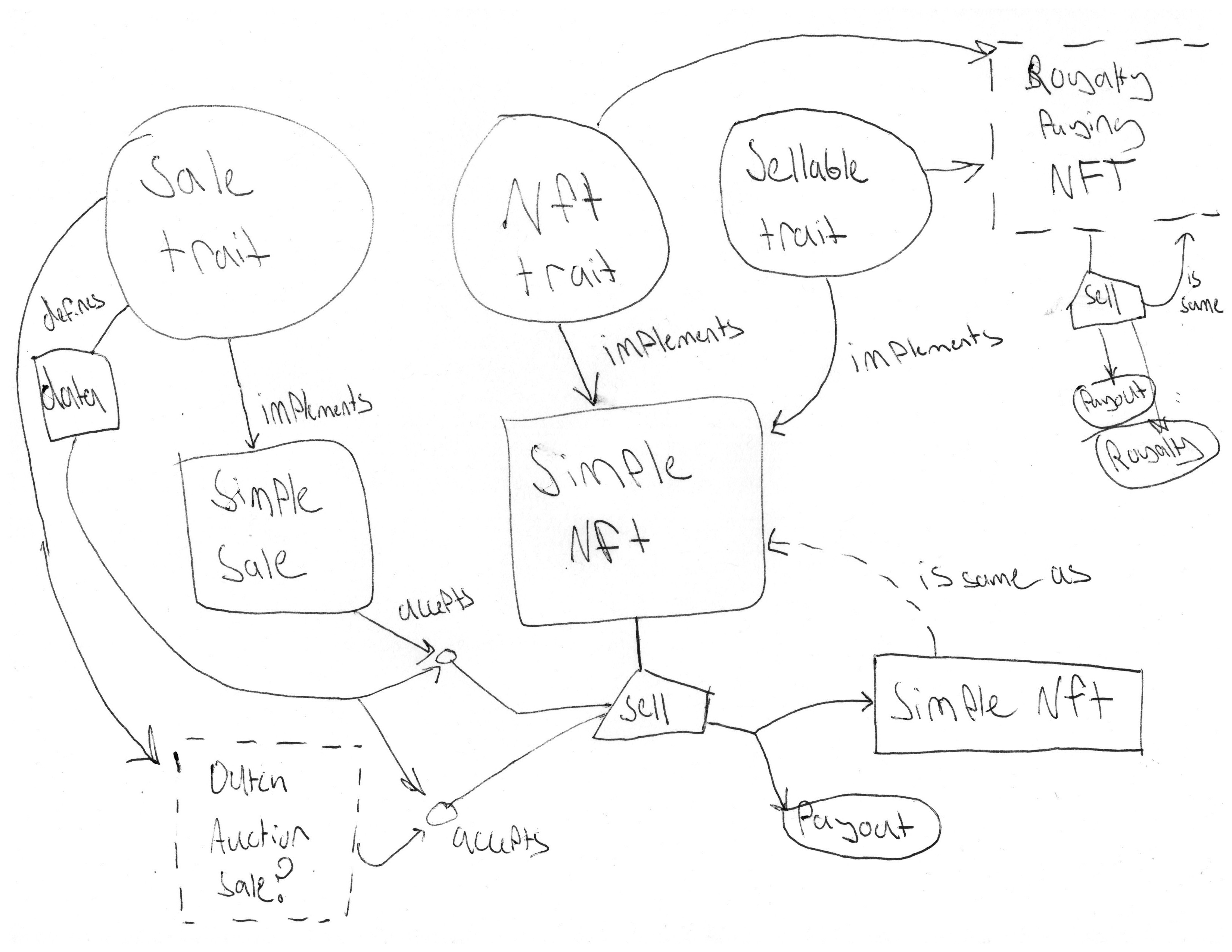

Before we start, let’s get an eagle-eye view of the ‘system’ we’re going to be building, because it spans multiple modules and logical components.

By the end, we’ll have 5 separate things:

- An Abstract NFT Interface

- An Abstract Sellable Interface

- An Abstract Sale Interface

- A Concrete Sellable NFT (Simple NFT)

- A Concrete Sale Interface (Simple NFT Sale)

In words:

Simple NFT implements both NFT and Sellable, and has a sell function that can be called with any Sale module.

Simple NFT Sale implements Sale, and can be used with the sell function of anything that implements Sellable and NFT.

We can make other implementations of Sale and NFT and they should be compatible.
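To make the relationships concrete, here is a minimal std-only Rust sketch of how the five pieces fit together. It does not use Sapio: the trait and type names (`Nft`, `Sellable`, `Sale`, `SimpleNft`, `SimpleNftSale`, `remint`, `execute`) are hypothetical stand-ins chosen for illustration, and keys are plain strings. What it shows is that `sell` is generic over any `Sale`, and a `Sale` is generic over anything that is both `Nft` and `Sellable`.

```rust
/// Abstract NFT interface (hypothetical): every NFT has an owner and a locator.
pub trait Nft {
    fn owner(&self) -> &str;
    fn locator(&self) -> &str;
}

/// Abstract Sale interface (hypothetical): works with anything that is
/// both an Nft and Sellable, without knowing its concrete type.
pub trait Sale {
    fn execute<N: Nft + Sellable>(&self, nft: &N) -> Result<N, String>;
}

/// Abstract Sellable interface (hypothetical): `sell` accepts *any* Sale.
pub trait Sellable: Nft + Sized {
    /// Produce a copy of this NFT owned by `new_owner` (the "re-mint").
    fn remint(&self, new_owner: &str) -> Self;

    fn sell<S: Sale>(&self, sale: &S) -> Result<Self, String> {
        sale.execute(self)
    }
}

/// Concrete Sellable NFT.
#[derive(Debug, Clone, PartialEq)]
pub struct SimpleNft {
    pub owner: String,
    pub locator: String,
}

impl Nft for SimpleNft {
    fn owner(&self) -> &str {
        &self.owner
    }
    fn locator(&self) -> &str {
        &self.locator
    }
}

impl Sellable for SimpleNft {
    fn remint(&self, new_owner: &str) -> Self {
        SimpleNft { owner: new_owner.to_string(), locator: self.locator.clone() }
    }
}

/// Concrete Sale: a fixed-price transfer from `seller` to `buyer`.
pub struct SimpleNftSale {
    pub seller: String,
    pub buyer: String,
    pub price_sats: u64,
}

impl Sale for SimpleNftSale {
    fn execute<N: Nft + Sellable>(&self, nft: &N) -> Result<N, String> {
        // Same idea as the owner-mismatch check in the `sell` function later in this post.
        if nft.owner() != self.seller.as_str() {
            return Err("sale's claimed owner does not match the NFT".to_string());
        }
        Ok(nft.remint(&self.buyer))
    }
}
```

Because neither side names the other's concrete type, a second `Sale` (say, an auction) or a second NFT type could be swapped in without touching this code, which is the compatibility property described above.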

How’s it going to ‘work’?

Essentially, this is how it’s going to work:

- An artist mints an NFT.

- The artist can sell it to anyone whose bid the artist accepts.

Normally, with Ethereum NFTs, you could do something like this for step 2:

- The artist signs “anyone can buy at this price”

With Bitcoin NFTs, it’s a little different. The artist has to run a server that accepts bids above the owner’s current price threshold and returns a signed, under-funded transaction that would pay the owner the asking price.

Alternatively, the bidder can send an open bid that the owner can fill immediately.
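A rough sketch of the owner-side server logic for the first option, in plain Rust with no Sapio or Bitcoin types. `Bid`, `SaleTemplate`, and `handle_bid` are hypothetical names, keys are strings, and `signed_by_owner` is a placeholder flag standing in for a real signature. This version accepts bids at or above the asking price and charges the bid amount; charging exactly the asking price would be an equally valid policy.

```rust
/// A bid from a prospective buyer (hypothetical type, not a Sapio API).
#[derive(Debug, Clone, PartialEq)]
pub struct Bid {
    /// Stand-in for the buyer's public key.
    pub buyer: String,
    /// What the buyer offers to pay, in sats.
    pub price_sats: u64,
}

/// What the owner's server hands back: a template that pays the owner but
/// is under-funded, so the buyer must add their own inputs (covering the
/// payment plus fees) before it can be broadcast.
#[derive(Debug, Clone, PartialEq)]
pub struct SaleTemplate {
    pub new_owner: String,
    pub pay_to_owner_sats: u64,
    /// Placeholder for the owner's real signature over the template.
    pub signed_by_owner: bool,
}

/// Owner-side server logic: accept any bid at or above the asking price
/// and return a signed, under-funded template; otherwise refuse.
pub fn handle_bid(bid: &Bid, asking_price_sats: u64) -> Option<SaleTemplate> {
    if bid.price_sats < asking_price_sats {
        return None;
    }
    Some(SaleTemplate {
        new_owner: bid.buyer.clone(),
        pay_to_owner_sats: bid.price_sats,
        signed_by_owner: true,
    })
}
```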

Because Sapio is super-duper cool, we can make abstract interfaces for this stuff so that NFTs can have lots of neat features like enforcing royalties, Dutch auction prices, batch minting, generative art minting, and more. We’ll see a bit more tomorrow.

Client-side validation is central to this story. A lot of the rules are not enforced by the Bitcoin blockchain. They are, however, enforced by requiring that the ‘auditor’ be able to reach the identical contract state by re-compiling the entire contract from the start. I.e., as long as you generate all your state transitions through Sapio, you can verify that an NFT is ‘authentic’. Of course, anyone can ‘burn’ an NFT if they want by sending it to, e.g., an unknown key. Client-side validation just posits that sending to an unknown key is ‘on the same level’ of error as corrupting an NFT by doing state transitions without having the corresponding ‘witness’ of Sapio effects to generate the transfer.

Please re-read this section after you get through the code (I’ll remind you).
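The auditing idea can be sketched as a replay: start from the mint, re-apply each recorded transition, and check that every step follows from the previous state and that you end at the claimed state. This is a std-only model under simplifying assumptions: `Transfer`, `replay`, and `is_authentic` are hypothetical, ownership is the only state, and "authorized by the current owner" is reduced to comparing names rather than re-running Sapio compilation and checking signatures.

```rust
/// One recorded ownership change (hypothetical; in Sapio this would be a
/// compiled state transition plus the effects that produced it).
#[derive(Debug, Clone)]
pub struct Transfer {
    pub from: String,
    pub to: String,
}

/// Replay every transfer starting from the mint. Returns the owner we
/// re-derive, or an error naming the first transition that does not
/// follow from the previous state.
pub fn replay(minted_to: &str, history: &[Transfer]) -> Result<String, String> {
    let mut owner = minted_to.to_string();
    for (i, t) in history.iter().enumerate() {
        if t.from != owner {
            return Err(format!("transition {i}: {} is not the current owner", t.from));
        }
        owner = t.to.clone();
    }
    Ok(owner)
}

/// The NFT is considered authentic only if replaying reaches the claimed owner.
pub fn is_authentic(minted_to: &str, history: &[Transfer], claimed_owner: &str) -> bool {
    replay(minted_to, history)
        .map(|owner| owner == claimed_owner)
        .unwrap_or(false)
}
```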

Declaring an NFT Minting Interface

First we are going to declare the basic information for an NFT.

Every NFT should have an owner (PublicKey) and a locator (some URL, IPFS hash, etc.).

NFTs should also track which Sapio module was used to mint them, to ensure compatibility going forward. If it’s not known, modules can try to fill it in and guess (e.g., a good guess is “this module”).

Let’s put that to code:

```rust
/// # Trait for a Mintable NFT
#[derive(Serialize, JsonSchema, Deserialize, Clone)]
pub struct Mint_NFT_Trait_Version_0_1_0 {
    /// # Initial Owner
    /// The key that will own this NFT
    pub owner: bitcoin::PublicKey,
    /// # Locator
    /// A piece of information that will instruct us where the NFT can be
    /// downloaded -- e.g. an IPFS hash
    pub locator: String,
    /// # Minting Module
    /// If a specific sub-module is to be used / known -- when in doubt, should
    /// be None.
    pub minting_module: Option<SapioHostAPI<Mint_NFT_Trait_Version_0_1_0>>,
}

/// Boilerplate for the Mint trait
pub mod mint_impl {
    use super::*;
    #[derive(Serialize, Deserialize, JsonSchema)]
    pub enum Versions {
        Mint_NFT_Trait_Version_0_1_0(Mint_NFT_Trait_Version_0_1_0),
    }
    /// we must provide an example!
    impl SapioJSONTrait for Mint_NFT_Trait_Version_0_1_0 {
        fn get_example_for_api_checking() -> Value {
            let key = "02996fe4ed5943b281ca8cac92b2d0761f36cc735820579da355b737fb94b828fa";
            let ipfs_hash = "bafkreig7r2tdlwqxzlwnd7aqhkkvzjqv53oyrkfnhksijkvmc6k57uqk6a";
            serde_json::to_value(mint_impl::Versions::Mint_NFT_Trait_Version_0_1_0(
                Mint_NFT_Trait_Version_0_1_0 {
                    owner: bitcoin::PublicKey::from_str(key).unwrap(),
                    locator: ipfs_hash.into(),
                    minting_module: None,
                },
            ))
            .unwrap()
        }
    }
}
```

Shaweeeeet! We have an NFT Minting Interface!

But you can’t actually use it to Mint yet, since we lack an Implementation.

Before we implement it…

What are NFTs Good For? Selling! (Sales Interface)

If you have an NFT, you probably will want to sell it in the future. Let’s declare a sales interface.

To sell an NFT we need to know:

- Who currently owns it

- Who is buying it

- What they are paying for it

- Maybe some extra stuff

```rust
/// # NFT Sale Trait
/// A trait for coordinating a sale of an NFT
#[derive(Serialize, JsonSchema, Deserialize, Clone)]
pub struct NFT_Sale_Trait_Version_0_1_0 {
    /// # Buyer
    /// The key that will own this NFT after the sale
    pub sell_to: bitcoin::PublicKey,
    /// # Price
    /// The price in Sats
    pub price: AmountU64,
    /// # NFT
    /// The NFT's Current Info
    pub data: Mint_NFT_Trait_Version_0_1_0,
    /// # Extra Information
    /// Extra information required by this contract, if any.
    /// Must be Optional for consumer or typechecking will fail.
    /// Usually None unless you know better!
    pub extra: Option<Value>,
}

/// Boilerplate for the Sale trait
pub mod sale_impl {
    use super::*;
    #[derive(Serialize, Deserialize, JsonSchema)]
    pub enum Versions {
        /// # NFT Sale Trait API
        NFT_Sale_Trait_Version_0_1_0(NFT_Sale_Trait_Version_0_1_0),
    }
    impl SapioJSONTrait for NFT_Sale_Trait_Version_0_1_0 {
        fn get_example_for_api_checking() -> Value {
            let key = "02996fe4ed5943b281ca8cac92b2d0761f36cc735820579da355b737fb94b828fa";
            let ipfs_hash = "bafkreig7r2tdlwqxzlwnd7aqhkkvzjqv53oyrkfnhksijkvmc6k57uqk6a";
            serde_json::to_value(sale_impl::Versions::NFT_Sale_Trait_Version_0_1_0(
                NFT_Sale_Trait_Version_0_1_0 {
                    sell_to: bitcoin::PublicKey::from_str(key).unwrap(),
                    price: Amount::from_sat(0).into(),
                    data: Mint_NFT_Trait_Version_0_1_0 {
                        owner: bitcoin::PublicKey::from_str(key).unwrap(),
                        locator: ipfs_hash.into(),
                        minting_module: None,
                    },
                    extra: None,
                },
            ))
            .unwrap()
        }
    }
}
```

That’s the interface for the contract that sells the NFTs. We also need an interface for NFTs that want to initiate a sale.

To do that, we need to know:

- What kind of sale we are doing

- The data for that sale

This is really just expressing that we need to bind an NFT Sale Implementation to our contract. We can express the sale interface as follows.

```rust
/// # Sellable NFT Function
/// If an NFT should be sellable, it should have this trait implemented.
pub trait SellableNFT: Contract {
    decl_continuation! {<web={}> sell<Sell>}
}

/// # Sell Instructions
#[derive(Serialize, Deserialize, JsonSchema)]
pub enum Sell {
    /// # Hold
    /// Don't transfer this NFT
    Hold,
    /// # MakeSale
    /// Transfer this NFT
    MakeSale {
        /// # Which Sale Contract to use?
        /// Specify a hash/name for a contract to generate the sale with.
        which_sale: SapioHostAPI<NFT_Sale_Trait_Version_0_1_0>,
        /// # The information needed to create the sale
        sale_info: NFT_Sale_Trait_Version_0_1_0,
    },
}

impl Default for Sell {
    fn default() -> Sell {
        Sell::Hold
    }
}

impl StatefulArgumentsTrait for Sell {}
```

Getting Concrete: Making an NFT

Let’s create a really simple NFT now that implements these interfaces.

There’s a bit of boilerplate, so we’ll go section-by-section.

First, let’s declare the SimpleNFT

```rust
/// # SimpleNFT
/// A really simple NFT... not much to it!
#[derive(JsonSchema, Serialize, Deserialize)]
pub struct SimpleNFT {
    /// The minting data, and nothing else.
    data: Mint_NFT_Trait_Version_0_1_0,
}

/// # The SimpleNFT Contract
impl Contract for SimpleNFT {
    // NFTs... only good for selling?
    declare! {updatable<Sell>, Self::sell}
    // embeds metadata
    declare! {then, Self::metadata_txns}
}
```

Now, let’s implement the logic for selling the NFT… Do you remember our old friend, the Sales interface?

```rust
impl SimpleNFT {
    /// # signed
    /// Get the current owner's signature.
    #[guard]
    fn signed(self, ctx: Context) {
        Clause::Key(self.data.owner.clone())
    }
}

fn default_coerce(k: <SimpleNFT as Contract>::StatefulArguments) -> Result<Sell, CompilationError> {
    Ok(k)
}

impl SellableNFT for SimpleNFT {
    #[continuation(guarded_by = "[Self::signed]", web_api, coerce_args = "default_coerce")]
    fn sell(self, ctx: Context, sale: Sell) {
        if let Sell::MakeSale {
            sale_info,
            which_sale,
        } = sale
        {
            // if we're selling...
            if sale_info.data.owner != self.data.owner {
                // Hmmm... metadata mismatch! the current owner does not
                // match the sale's claimed owner.
                return Err(CompilationError::TerminateCompilation);
            }
            // create a contract from the sale API passed in
            let compiled = Ok(CreateArgs {
                context: ContextualArguments {
                    amount: ctx.funds(),
                    network: ctx.network,
                    effects: unsafe { ctx.get_effects_internal() }.as_ref().clone(),
                },
                arguments: sale_impl::Versions::NFT_Sale_Trait_Version_0_1_0(sale_info.clone()),
            })
            // serialize the args; and_then keeps a serialization error in the
            // same Result (as in `transfer` below) instead of nesting Results
            .and_then(serde_json::to_value)
            // use the sale API we passed in
            .map(|args| create_contract_by_key(&which_sale.key, args, Amount::from_sat(0)))
            // handle errors...
            .map_err(|_| CompilationError::TerminateCompilation)?
            .ok_or(CompilationError::TerminateCompilation)?;
            // send to this sale!
            let mut builder = ctx.template();
            // todo: we need to cut-through the compiled contract address, but this
            // upgrade to Sapio semantics will come Soon™.
            builder = builder.add_output(compiled.amount_range.max(), &compiled, None)?;
            builder.into()
        } else {
            // Don't do anything if we're holding!
            empty()
        }
    }
}
```

Next, let’s implement the metadata logic. There are a million ways to do metadata, so feel free to ‘skip’ this section and just let your mind wander on interesting things you could do here…

```rust
impl SimpleNFT {
    /// # unspendable
    /// what? This is just a sneaky way of making a provably unspendable branch
    /// (since the preimage of [0u8; 32] hash can never be found). We use that to
    /// help us embed metadata inside of our contract...
    #[guard]
    fn unspendable(self, ctx: Context) {
        Clause::Sha256(sha256::Hash::from_inner([0u8; 32]))
    }
    /// # Metadata TXNs
    /// This metadata TXN is provably unspendable because it is guarded
    /// by `Self::unspendable`. Neat!
    /// Here, we simply embed an OP_RETURN.
    /// But you could imagine tracking (& client side validating)
    /// an entire tree of transactions based on state transitions with these
    /// transactions... in a future post, we'll see more!
    #[then(guarded_by = "[Self::unspendable]")]
    fn metadata_txns(self, ctx: Context) {
        ctx.template()
            .add_output(
                Amount::ZERO,
                &Compiled::from_op_return(
                    &sha256::Hash::hash(self.data.locator.as_bytes()).as_inner()[..],
                )?,
                None,
            )?
            // note: what if we also committed to the hash of the wasm module
            // compiling this contract?
            .into()
    }
}
```
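For reference, the metadata output above is an OP_RETURN carrying a 32-byte SHA-256 digest of the locator. Assuming `Compiled::from_op_return` produces the standard encoding, the output script is `OP_RETURN` (0x6a), a one-byte direct push of the length, then the bytes. The std-only sketch below builds those bytes; `op_return_script` is a hypothetical helper, and the test uses a placeholder digest because std has no SHA-256.

```rust
/// Bitcoin Script opcode for OP_RETURN.
const OP_RETURN: u8 = 0x6a;

/// Largest payload a single direct-push opcode (0x01..=0x4b) can carry.
const MAX_DIRECT_PUSH: usize = 75;

/// Build an OP_RETURN output script carrying `payload` with one direct push:
/// [OP_RETURN, len, payload...]. Payloads over 75 bytes would need
/// OP_PUSHDATA1 and are rejected to keep the sketch short.
pub fn op_return_script(payload: &[u8]) -> Result<Vec<u8>, String> {
    if payload.len() > MAX_DIRECT_PUSH {
        return Err(format!("{}-byte payload needs OP_PUSHDATA1", payload.len()));
    }
    let mut script = Vec::with_capacity(2 + payload.len());
    script.push(OP_RETURN);
    // For 0..=75 bytes the push opcode is simply the length byte
    // (length 0 is OP_0, which pushes an empty item).
    script.push(payload.len() as u8);
    script.extend_from_slice(payload);
    Ok(script)
}
```

A 32-byte digest therefore yields a 34-byte script, which stays well within typical relay limits for OP_RETURN data.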

Lastly, some icky boilerplate stuff:

```rust
#[derive(Serialize, Deserialize, JsonSchema)]
enum Versions {
    Mint_NFT_Trait_Version_0_1_0(Mint_NFT_Trait_Version_0_1_0),
}

impl TryFrom<Versions> for SimpleNFT {
    type Error = CompilationError;
    fn try_from(v: Versions) -> Result<Self, Self::Error> {
        let Versions::Mint_NFT_Trait_Version_0_1_0(mut data) = v;
        let this = LookupFrom::This
            .try_into()
            .map_err(|_| CompilationError::TerminateCompilation)?;
        match data.minting_module {
            // if no module is provided, it must be this module!
            None => {
                data.minting_module = Some(this);
                Ok(SimpleNFT { data })
            }
            // if a module is provided, we have no idea what to do...
            // unless the module is this module itself!
            Some(ref module) if module.key == this.key => Ok(SimpleNFT { data }),
            _ => Err(CompilationError::TerminateCompilation),
        }
    }
}

REGISTER![[SimpleNFT, Versions], "logo.png"];
```

Right on! Now we have made an NFT Implementation. We can Mint one, but wait. How do we sell it?

We need an NFT Sale Implementation

So let’s do it. In today’s post, we’ll implement the most boring lame ass Sale…

Tomorrow we’ll do more fun stuff, I swear.

First, let’s get our boring declarations out of the way:

```rust
/// # Simple NFT Sale
/// A Sale which simply transfers the NFT for a fixed price.
#[derive(JsonSchema, Serialize, Deserialize)]
pub struct SimpleNFTSale(NFT_Sale_Trait_Version_0_1_0);

/// # Versions Trait Wrapper
#[derive(Serialize, Deserialize, JsonSchema)]
enum Versions {
    /// # NFT Sale Trait API
    NFT_Sale_Trait_Version_0_1_0(NFT_Sale_Trait_Version_0_1_0),
}

impl Contract for SimpleNFTSale {
    declare! {updatable<()>, Self::transfer}
}

fn default_coerce<T>(_: T) -> Result<(), CompilationError> {
    Ok(())
}

impl From<Versions> for SimpleNFTSale {
    fn from(v: Versions) -> SimpleNFTSale {
        let Versions::NFT_Sale_Trait_Version_0_1_0(x) = v;
        SimpleNFTSale(x)
    }
}

REGISTER![[SimpleNFTSale, Versions], "logo.png"];
```

Now, onto the logic of a sale!

```rust
impl SimpleNFTSale {
    /// # signed
    /// sales must be signed by the current owner
    #[guard]
    fn signed(self, ctx: Context) {
        Clause::Key(self.0.data.owner.clone())
    }
    /// # transfer
    /// transfer exchanges the NFT for cold hard Bitcoinz
    #[continuation(guarded_by = "[Self::signed]", web_api, coerce_args = "default_coerce")]
    fn transfer(self, ctx: Context, u: ()) {
        let amt = ctx.funds();
        // first, let's get the module that should be used to 're-mint' this NFT
        // to the new owner
        let key = self
            .0
            .data
            .minting_module
            .clone()
            .ok_or(CompilationError::TerminateCompilation)?
            .key;
        // let's make a copy of the old nft metadata..
        let mut mint_data = self.0.data.clone();
        // and change the owner to the buyer
        mint_data.owner = self.0.sell_to;
        // let's now compile a new 'mint' of the NFT
        let new_nft_contract = Ok(CreateArgs {
            context: ContextualArguments {
                amount: ctx.funds(),
                network: ctx.network,
                effects: unsafe { ctx.get_effects_internal() }.as_ref().clone(),
            },
            arguments: mint_impl::Versions::Mint_NFT_Trait_Version_0_1_0(mint_data),
        })
        .and_then(serde_json::to_value)
        .map(|args| create_contract_by_key(&key, args, Amount::from_sat(0)))
        .map_err(|_| CompilationError::TerminateCompilation)?
        .ok_or(CompilationError::TerminateCompilation)?;
        // Now for the magic:
        // This is a transaction that creates at output 0 the new nft for the
        // person, and must add another input that pays sufficiently to pay the
        // prior owner an amount.
        // todo: we also could use cut-through here once implemented
        // todo: change seems problematic here? with a bit of work, we could handle it
        // cleanly if the buyer identifies an output they are spending before requesting
        // a purchase.
        ctx.template()
            .add_output(amt, &new_nft_contract, None)?
            .add_amount(self.0.price.into())
            .add_sequence()
            .add_output(self.0.price.into(), &self.0.data.owner, None)?
            // note: what would happen if we had another output that
            // had a percentage-of-sale royalty to some creator's key?
            .into()
    }
}
```
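The accounting of the `transfer` template can be modeled without Sapio. Output 0 re-creates the NFT for the buyer using the NFT UTXO's own funds, and the seller's payment is covered by `add_amount`, which is why the template is under-funded until the buyer adds an input. This std-only sketch uses hypothetical names (`TxOut`, `sale_outputs`, `buyer_contribution`), ignores fees, and includes the royalty idea from the code comment as an optional, hypothetical creator output expressed in basis points.

```rust
/// One output of the toy sale transaction.
#[derive(Debug, Clone, PartialEq)]
pub struct TxOut {
    pub to: String,
    pub sats: u64,
}

/// Outputs in the order `transfer` adds them: output 0 re-mints the NFT to
/// the buyer carrying the NFT UTXO's own `nft_sats`, then the seller is paid.
/// `royalty` is hypothetical: (creator, basis points of the price), capped at 100%.
pub fn sale_outputs(
    nft_sats: u64,
    buyer: &str,
    seller: &str,
    price_sats: u64,
    royalty: Option<(&str, u64)>,
) -> Vec<TxOut> {
    // u128 intermediate so price * bps cannot overflow.
    let royalty_sats = royalty
        .map(|(_, bps)| (price_sats as u128 * bps.min(10_000) as u128 / 10_000) as u64)
        .unwrap_or(0);
    let mut outs = vec![
        TxOut { to: format!("nft:{buyer}"), sats: nft_sats },
        TxOut { to: seller.to_string(), sats: price_sats - royalty_sats },
    ];
    if let Some((creator, _)) = royalty {
        if royalty_sats > 0 {
            outs.push(TxOut { to: creator.to_string(), sats: royalty_sats });
        }
    }
    outs
}

/// The only contract input is the NFT UTXO (`nft_sats`), so the buyer must
/// add inputs covering the rest of the outputs (fees not modeled).
pub fn buyer_contribution(nft_sats: u64, outs: &[TxOut]) -> u64 {
    let total: u64 = outs.iter().map(|o| o.sats).sum();
    total.saturating_sub(nft_sats)
}
```

With a 5% (500 bps) royalty on a 100,000 sat sale, the seller gets 95,000 sats and the creator 5,000 sats, while the buyer still contributes 100,000 sats, because in this sketch the royalty comes out of the price rather than on top of it.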

And that’s it! Makes sense, right? I hope…

But if not, re-read the part before the code! Maybe it will be clearer now :)

Day 18: Rubin's Bitcoin Advent Calendar

15 Dec 2021

Welcome to day 18 of my Bitcoin Advent Calendar. You can see an index of all the posts here or subscribe at judica.org/join to get new posts in your inbox.

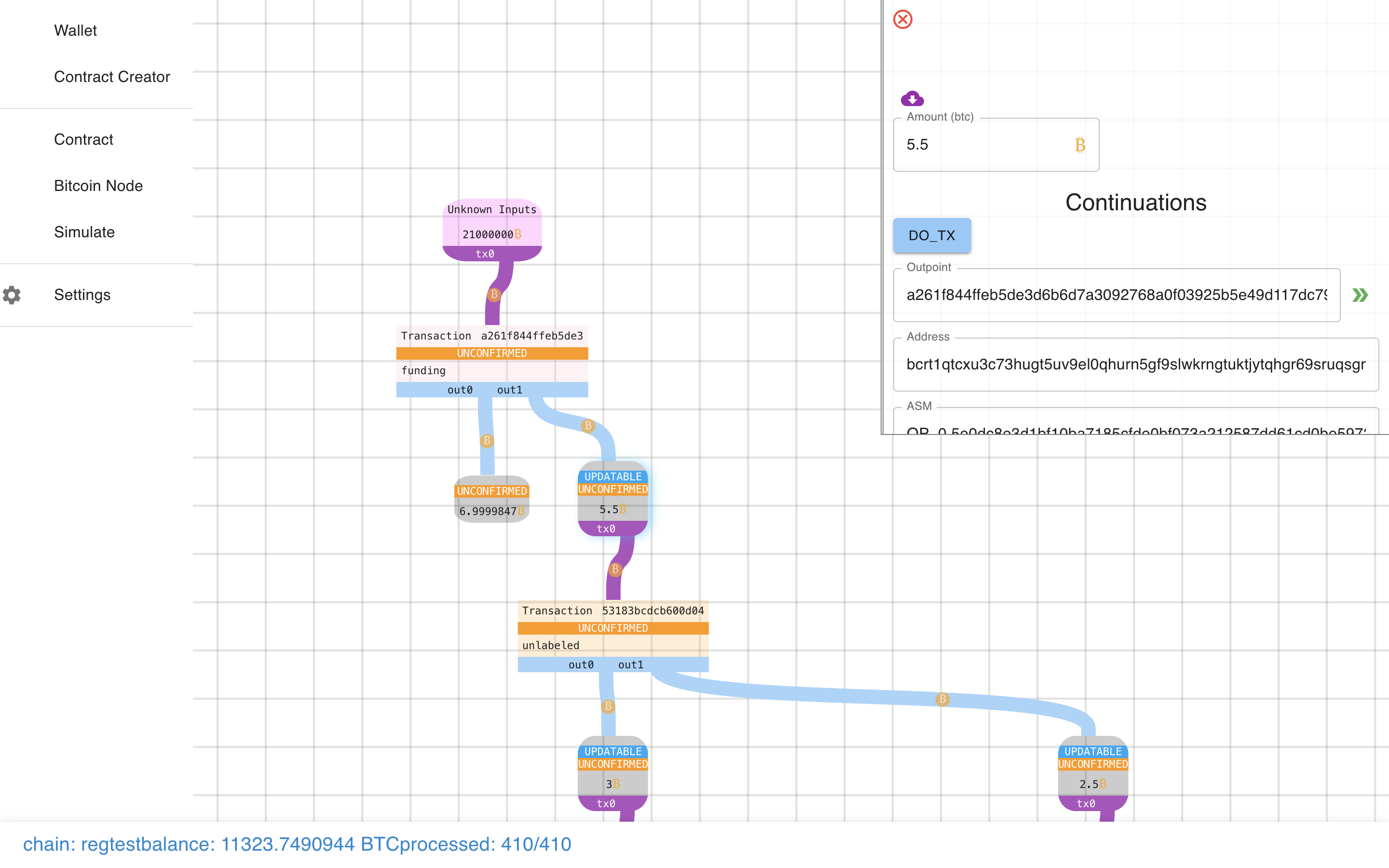

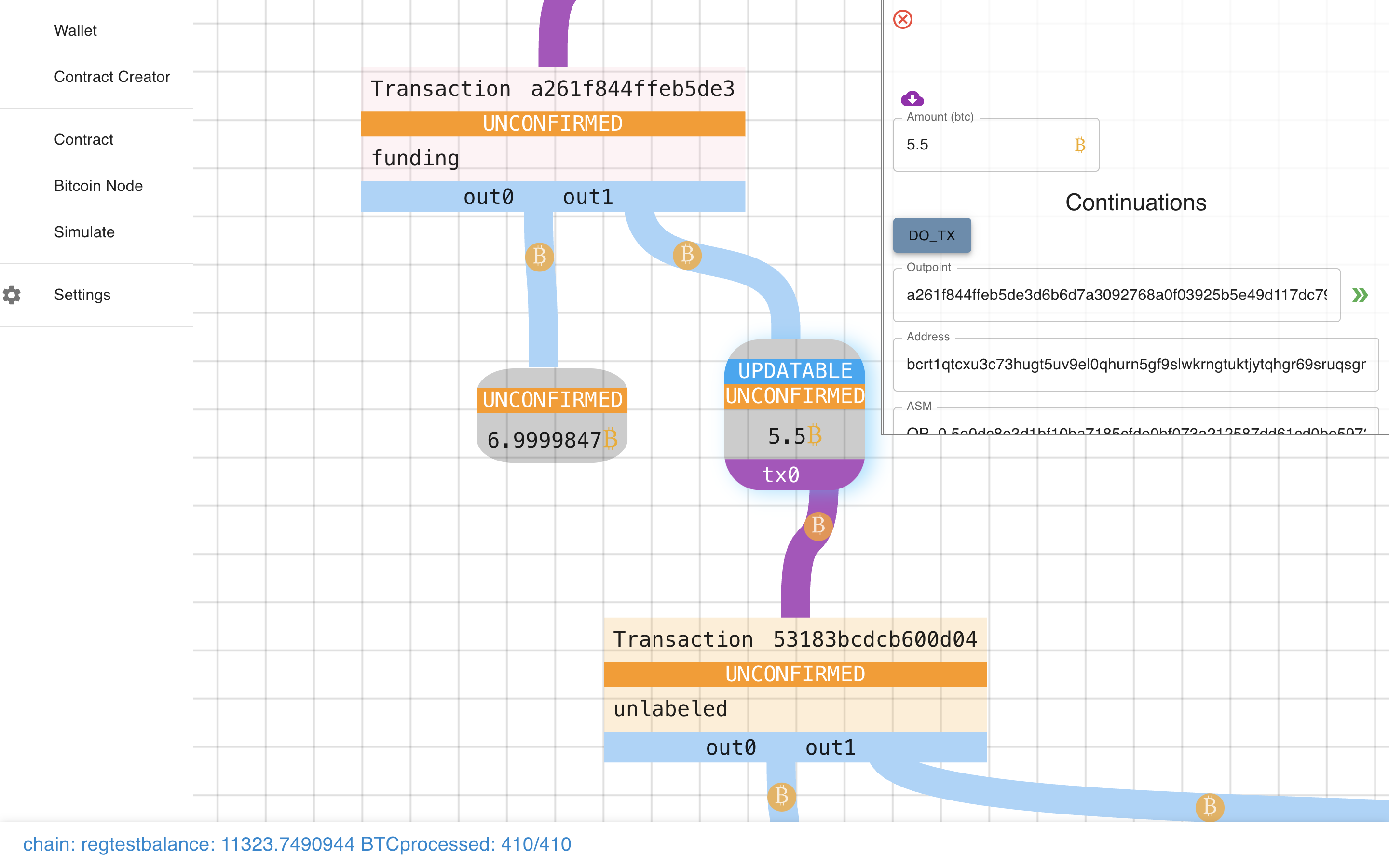

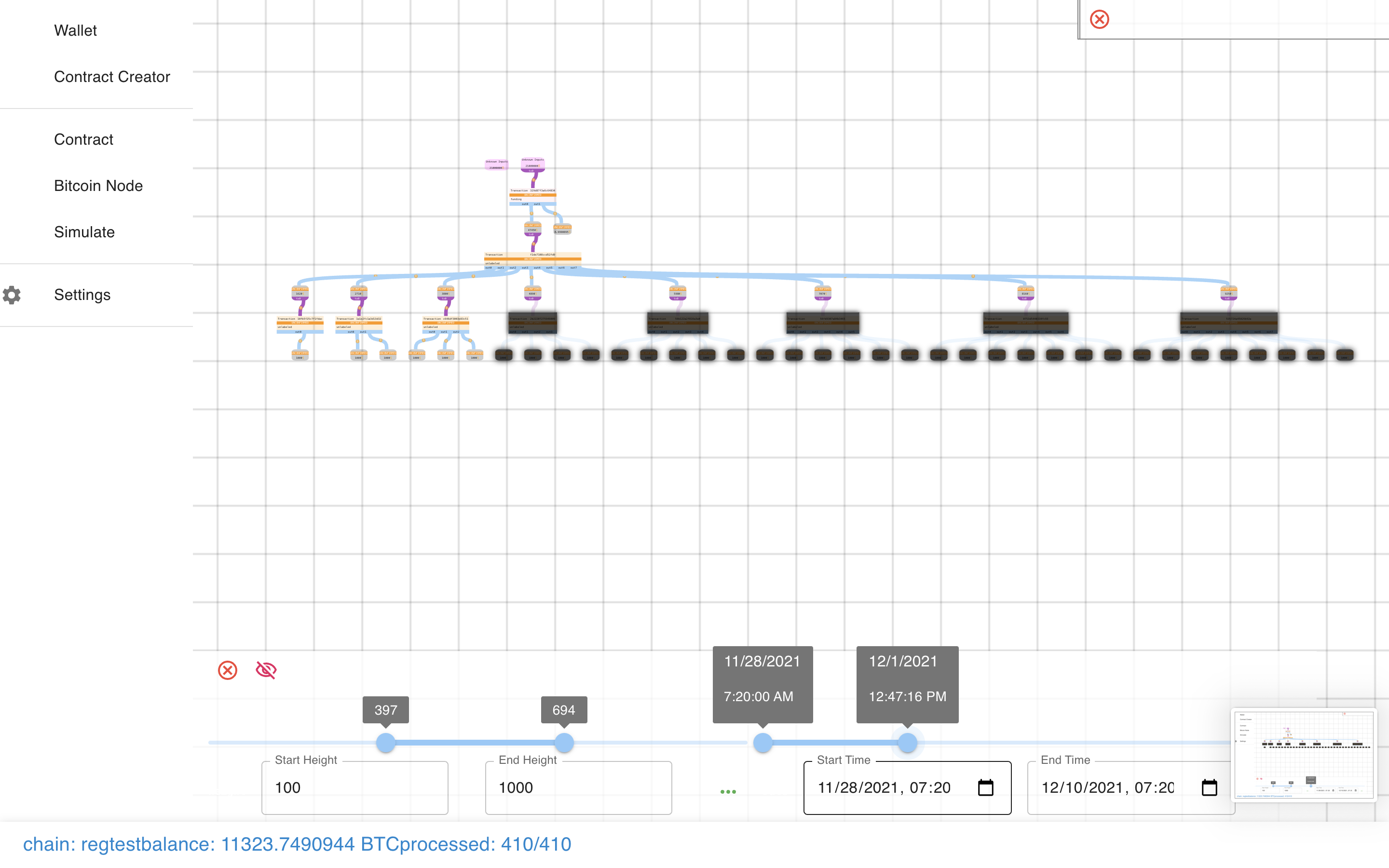

Today’s post will be a pretty different format than usual: it’s basically going to be a pictorial walkthrough of the Sapio Studio, the frontend tool for Sapio projects. As an example, we’ll go through a Payment Pool contract to familiarize ourselves.

I wanted to put this post here, before we get into some more applications, because I want you to start thinking past “cool one-off concepts we can implement” and to start thinking about reusable components we can build and ship into common Bitcoin Smart Contract software (Sapio Studio or its successors).

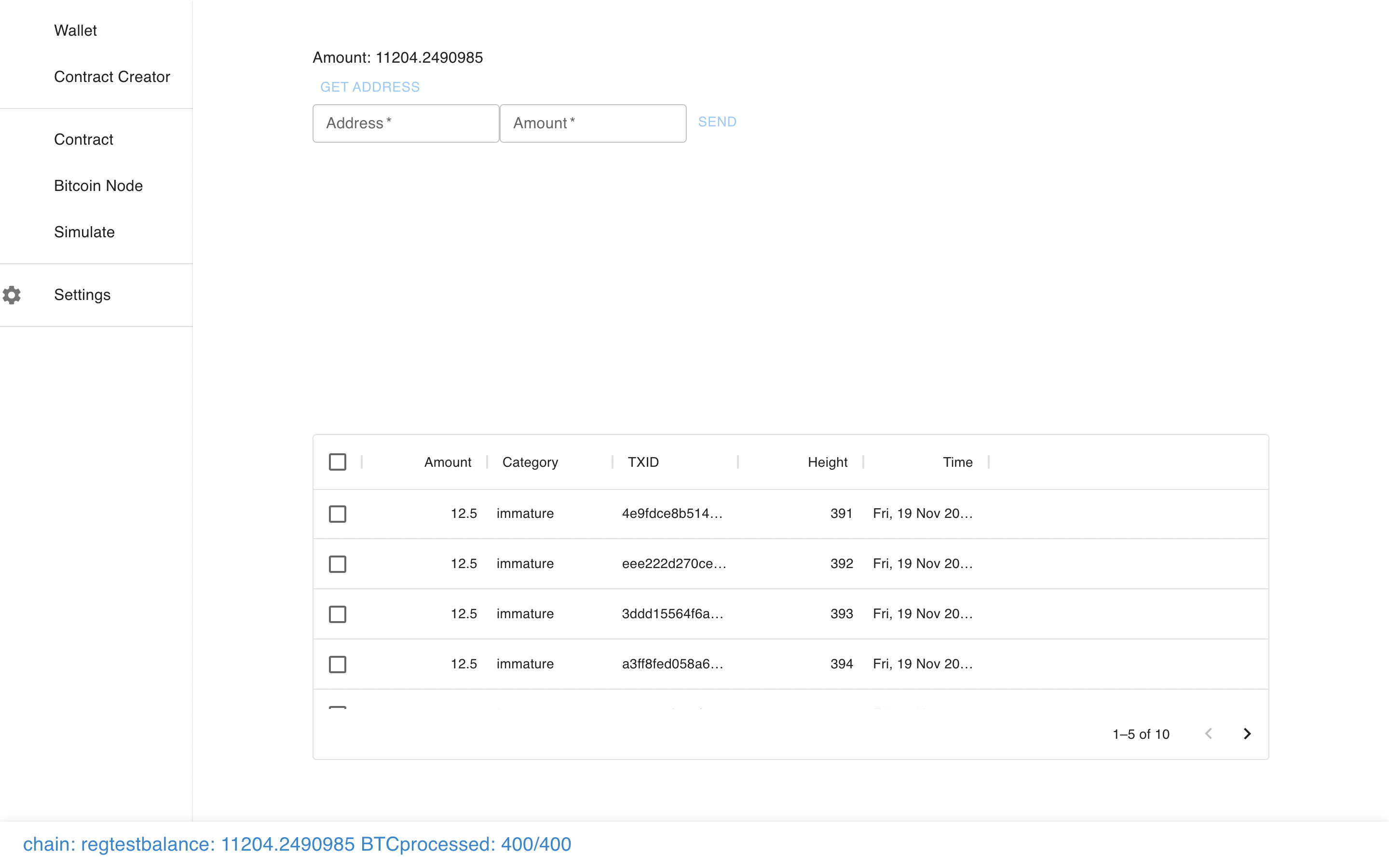

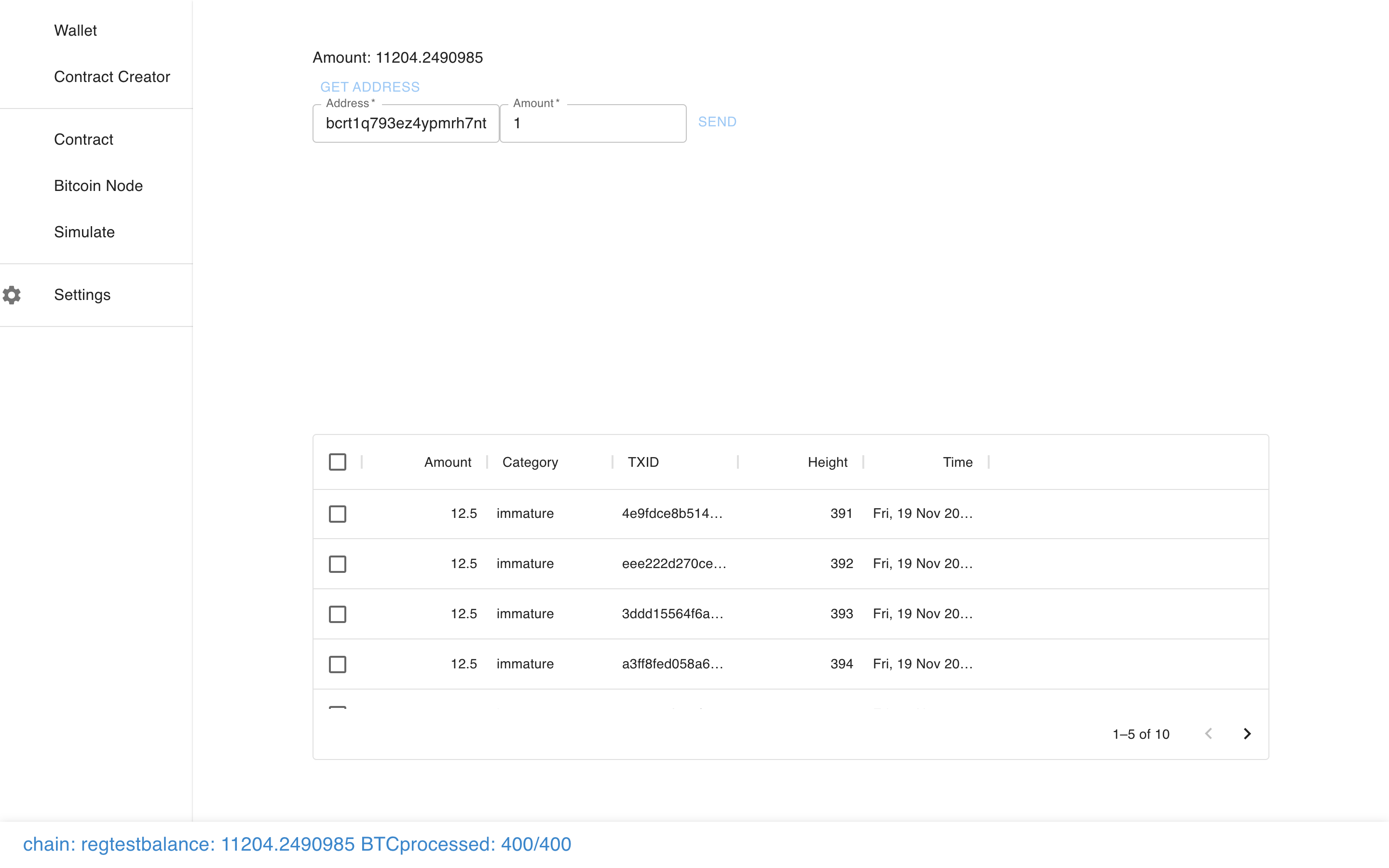

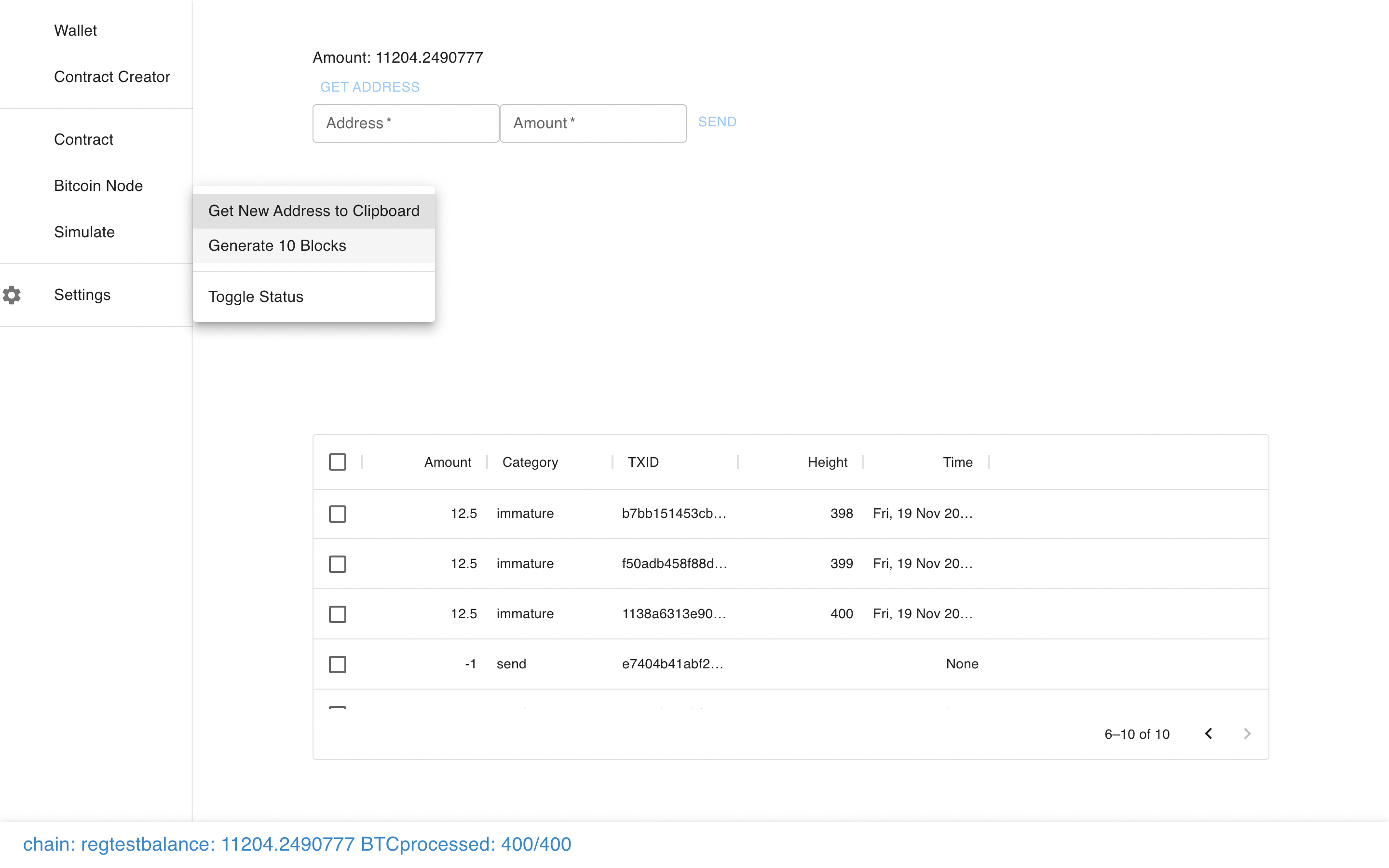

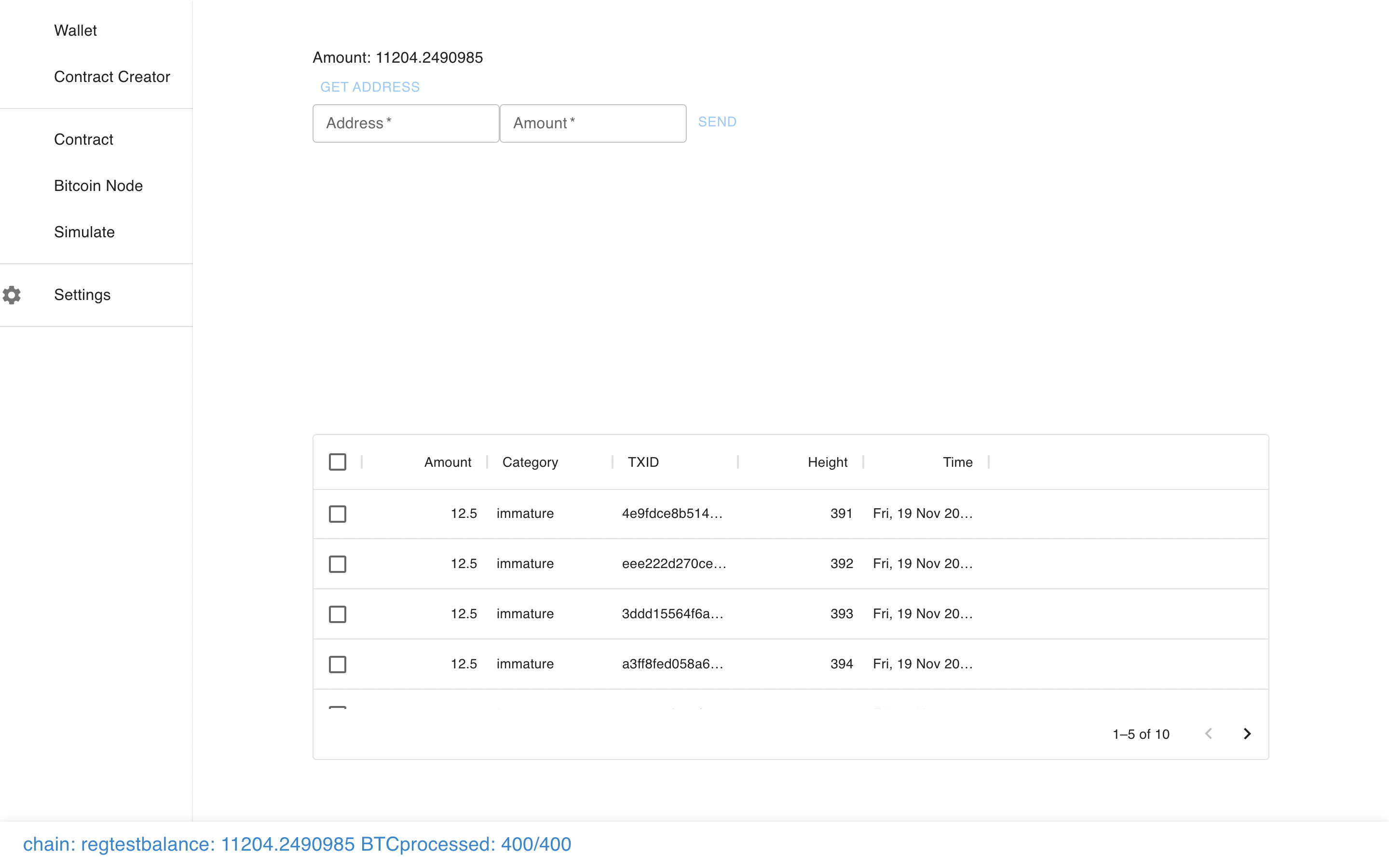

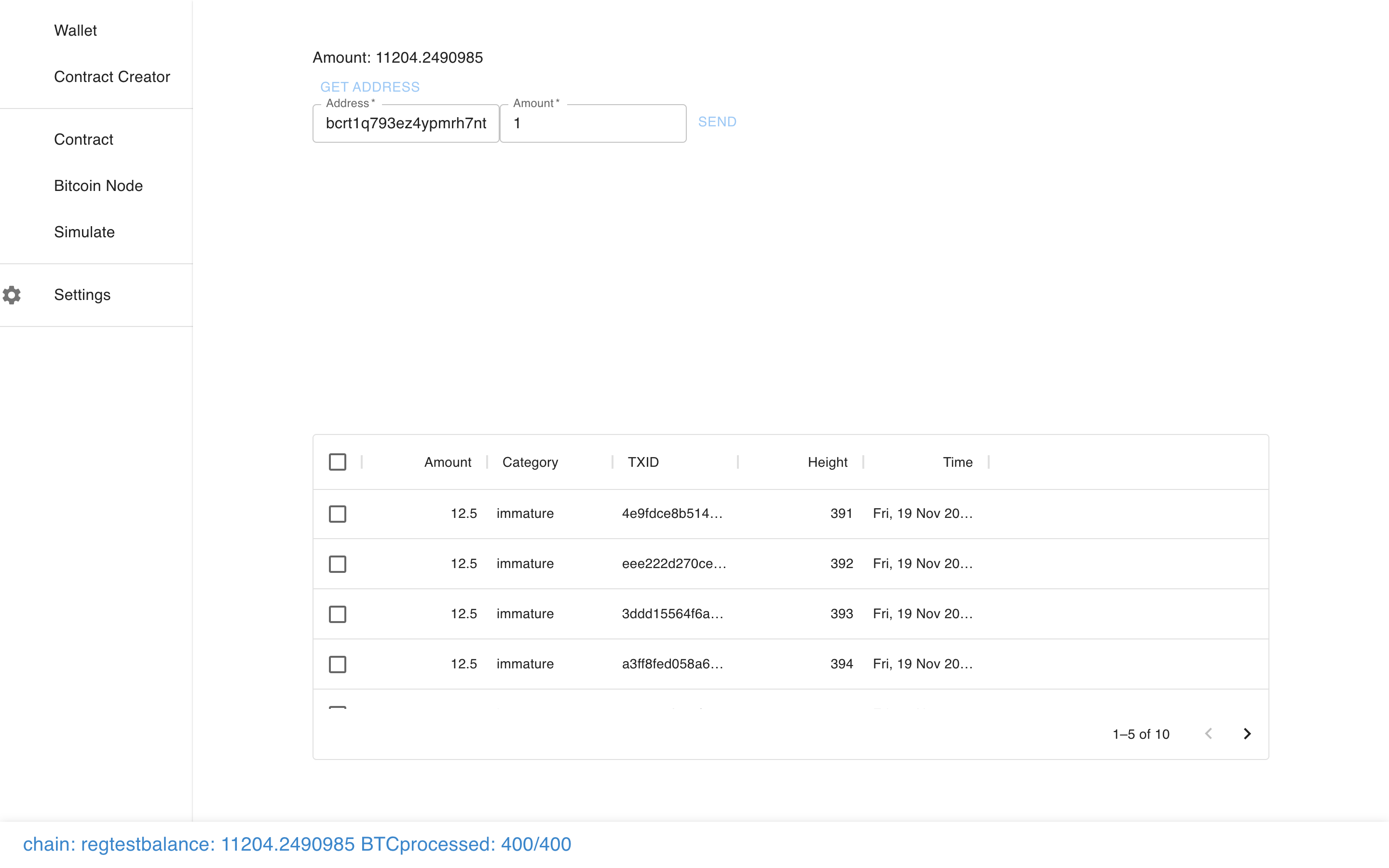

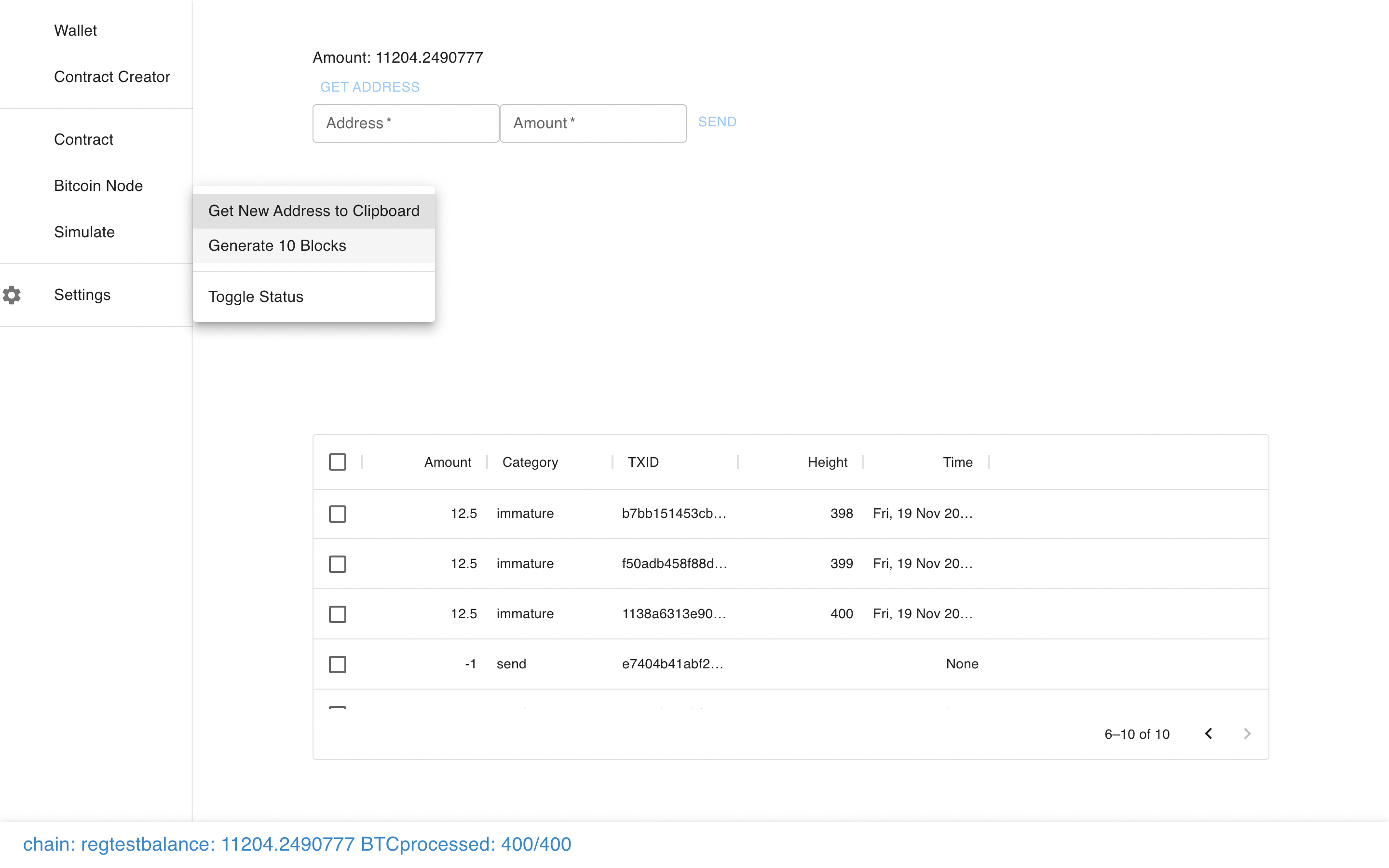

At its core, Sapio Studio is just a wallet frontend to Bitcoin Core.

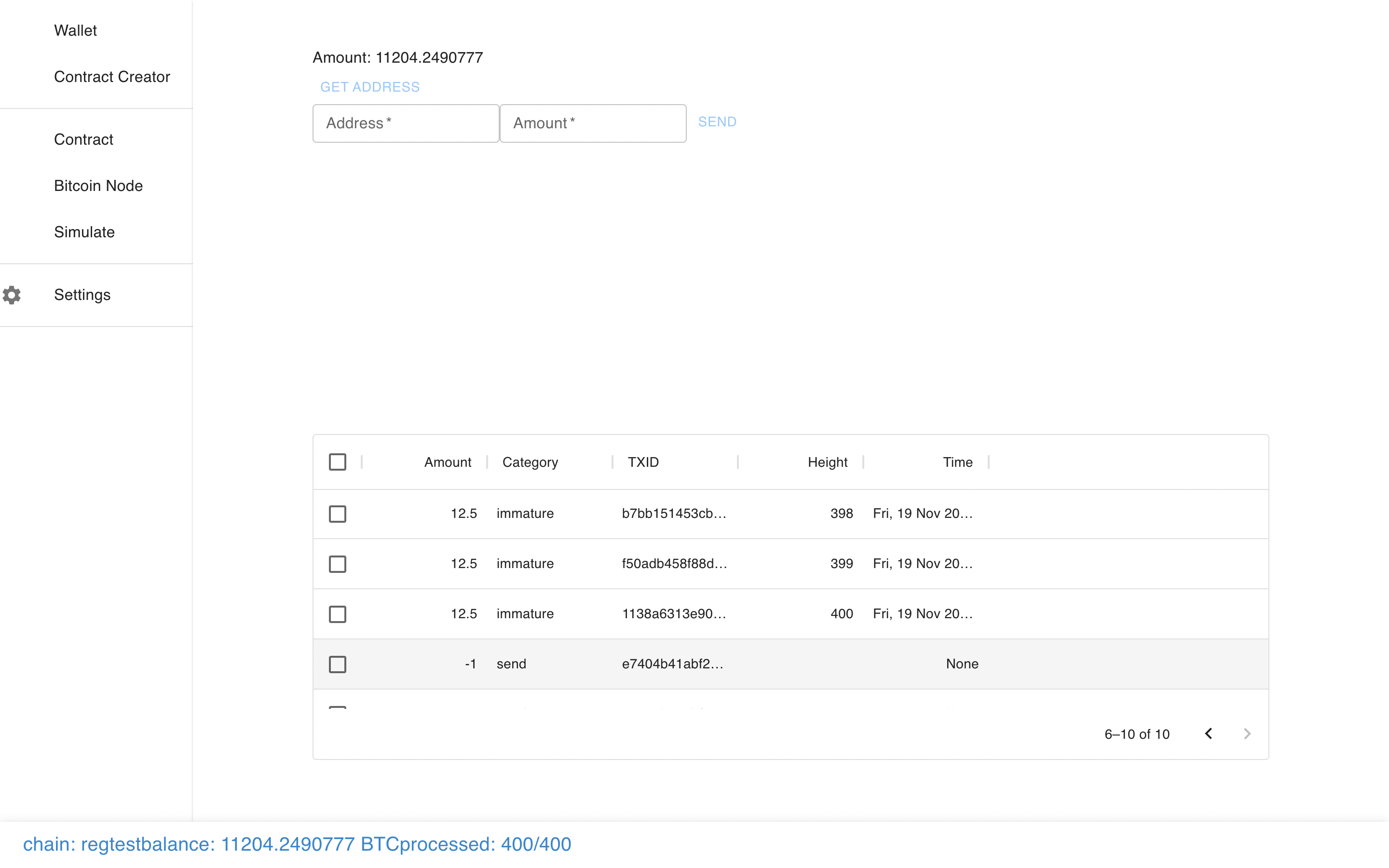

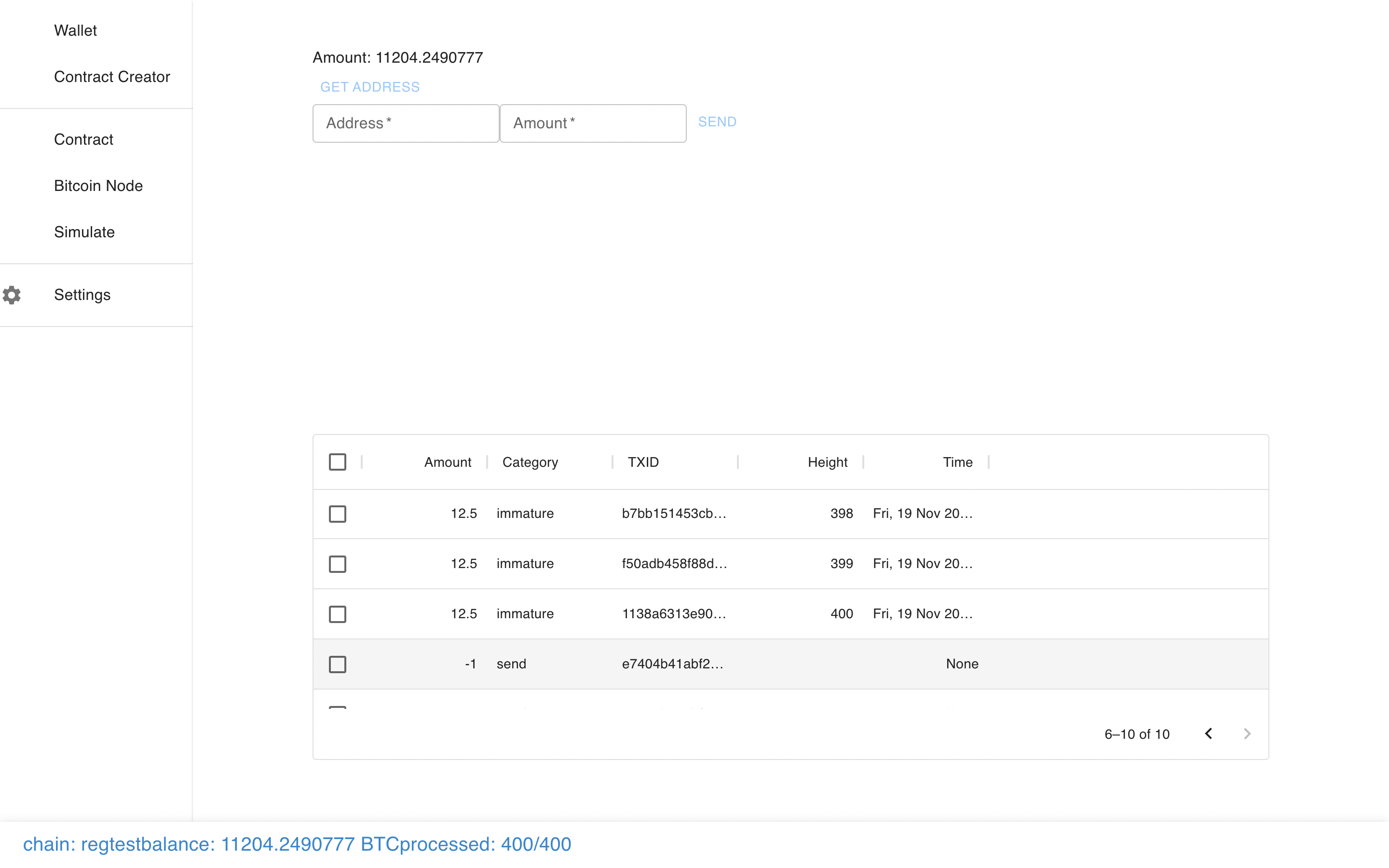

You can make a transaction, just like normal…

And see it show up in the pending transactions…

And even mine some regtest blocks.

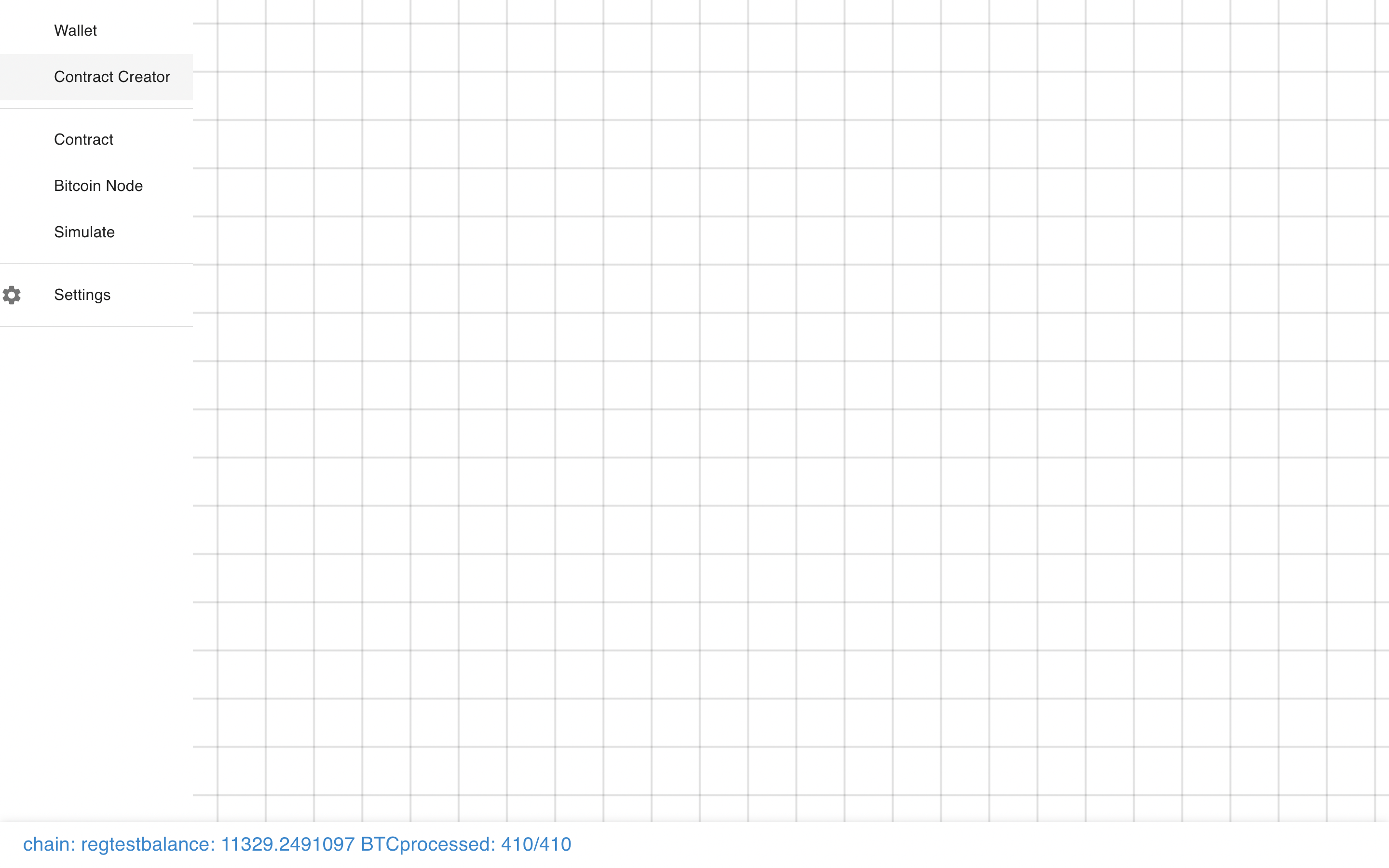

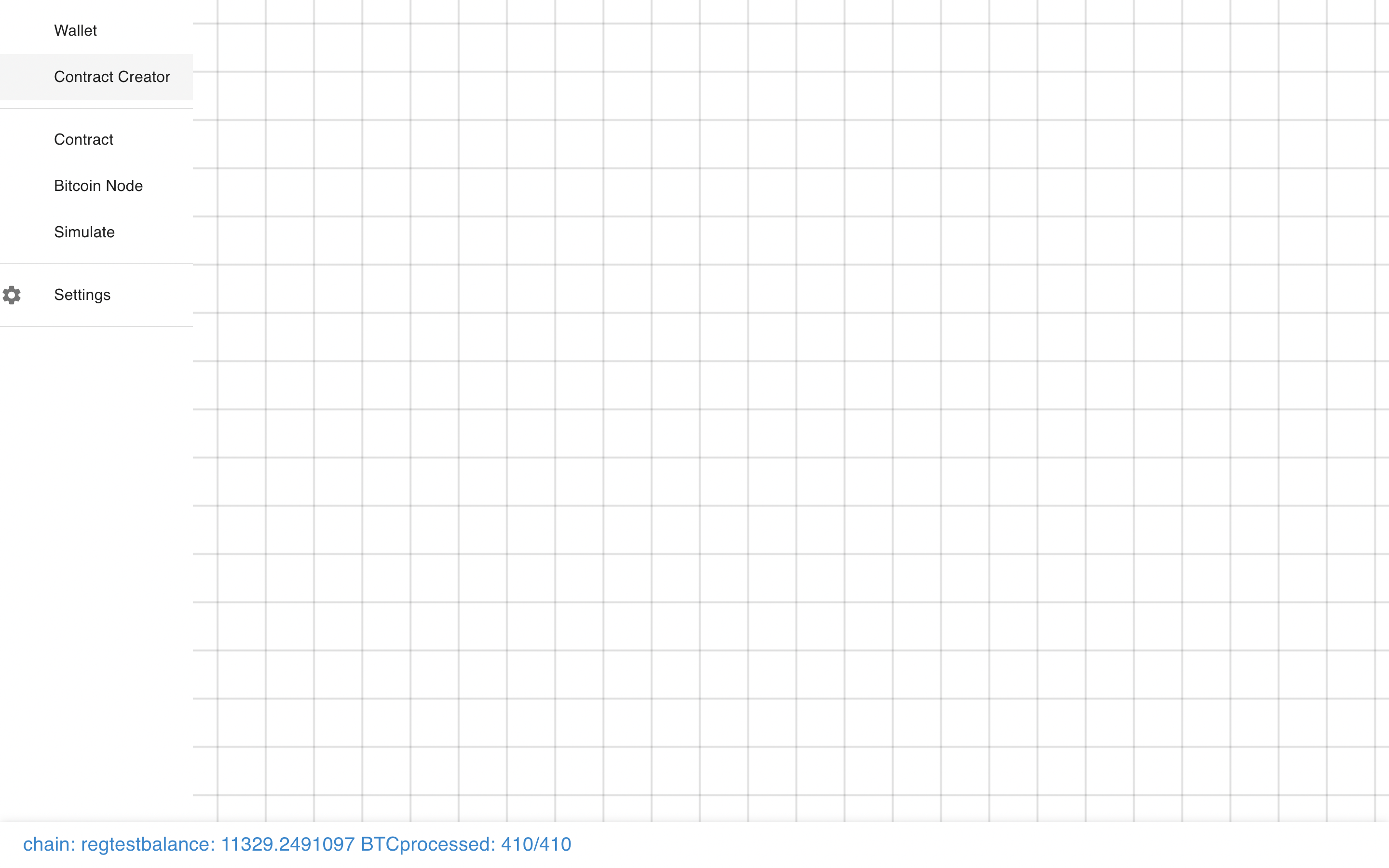

But where Sapio Studio is different is that there is also the ability to create

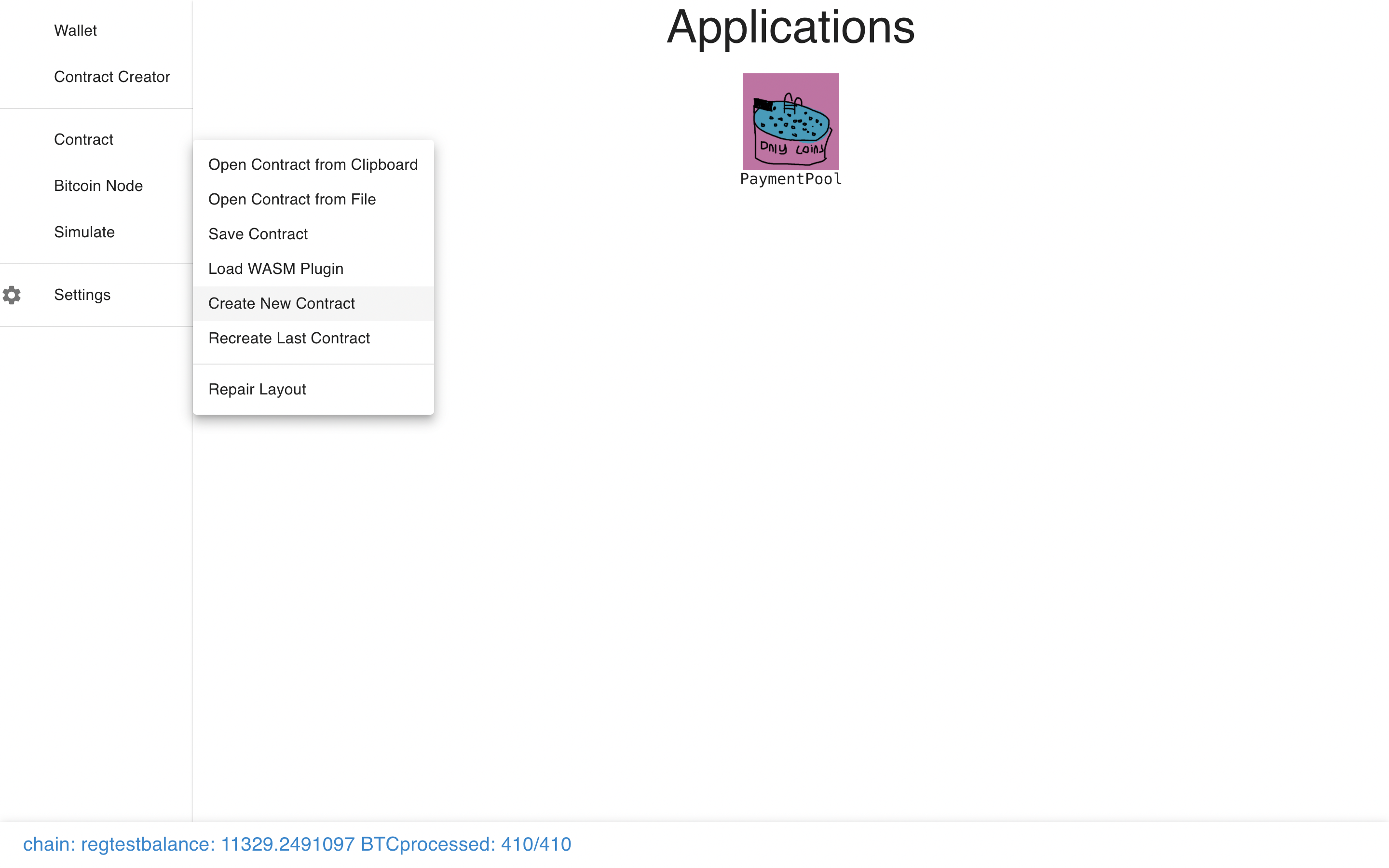

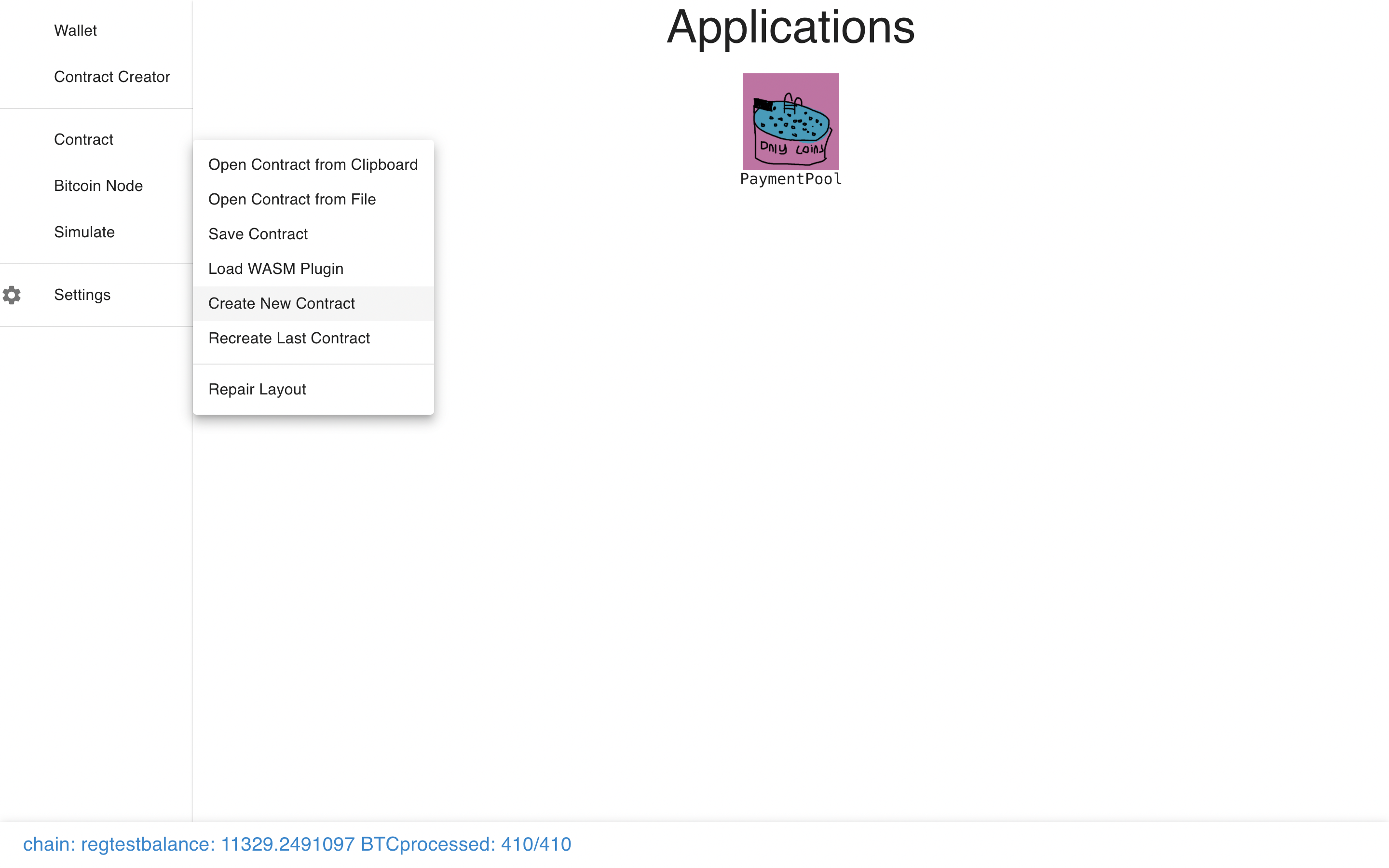

contracts.

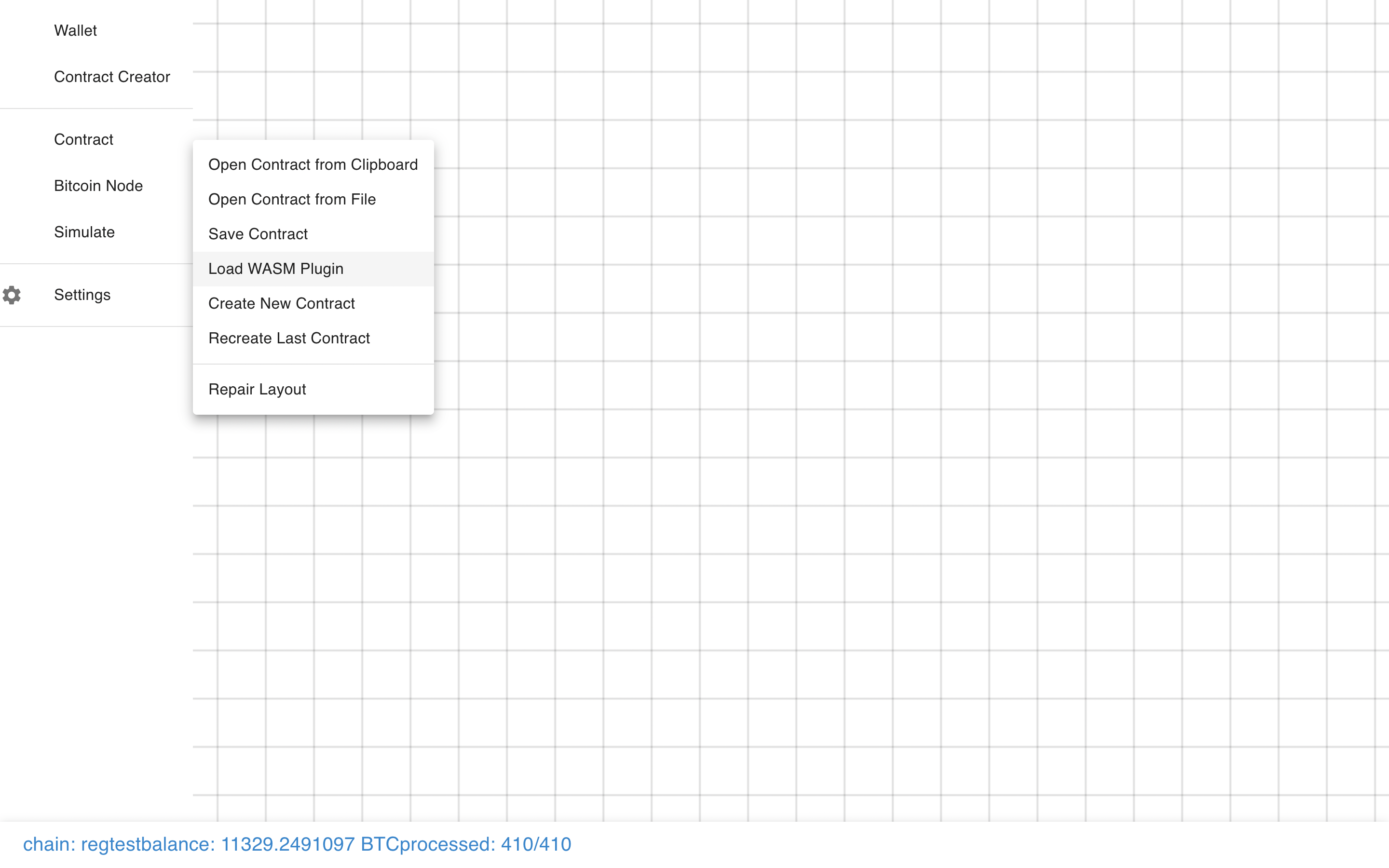

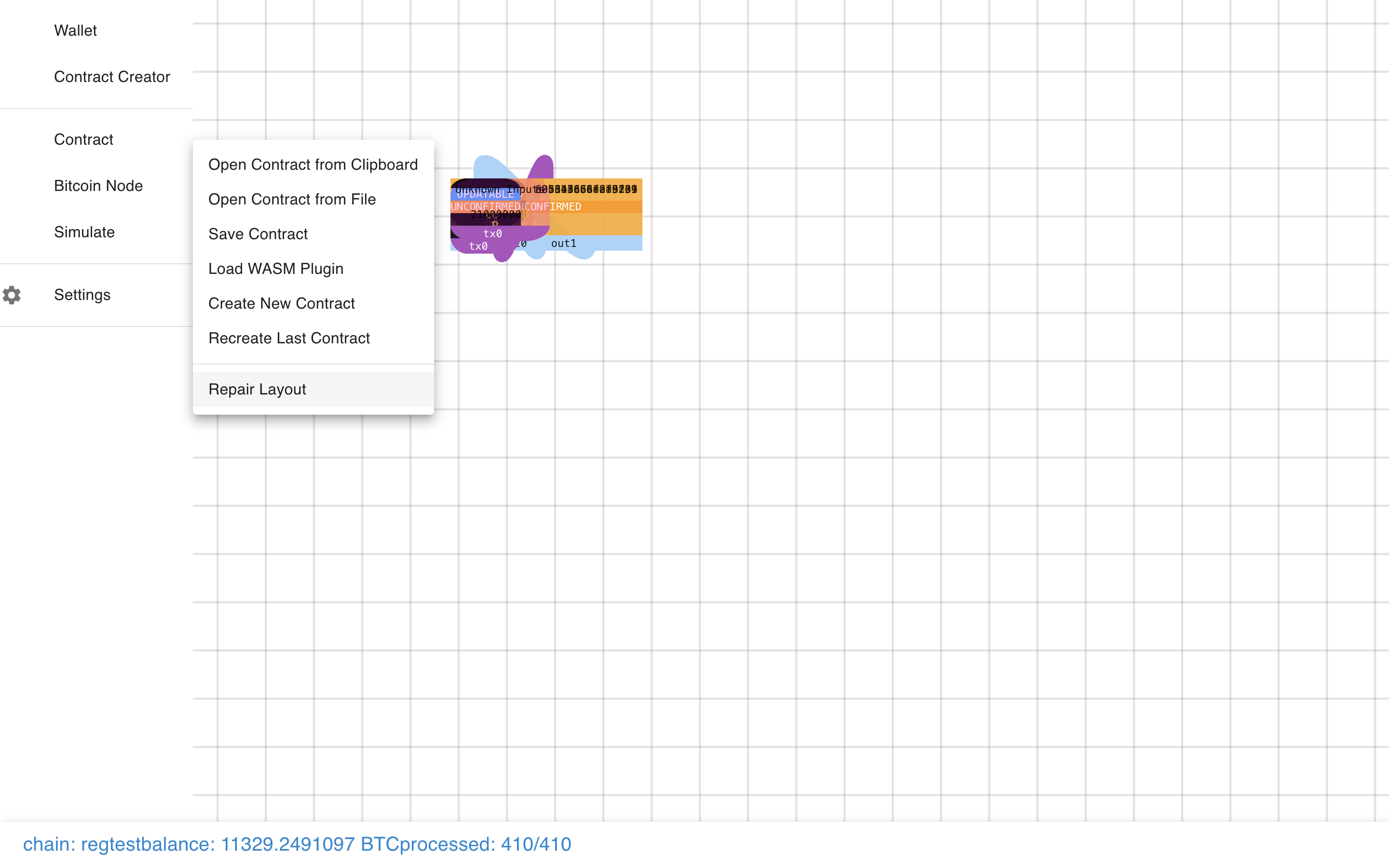

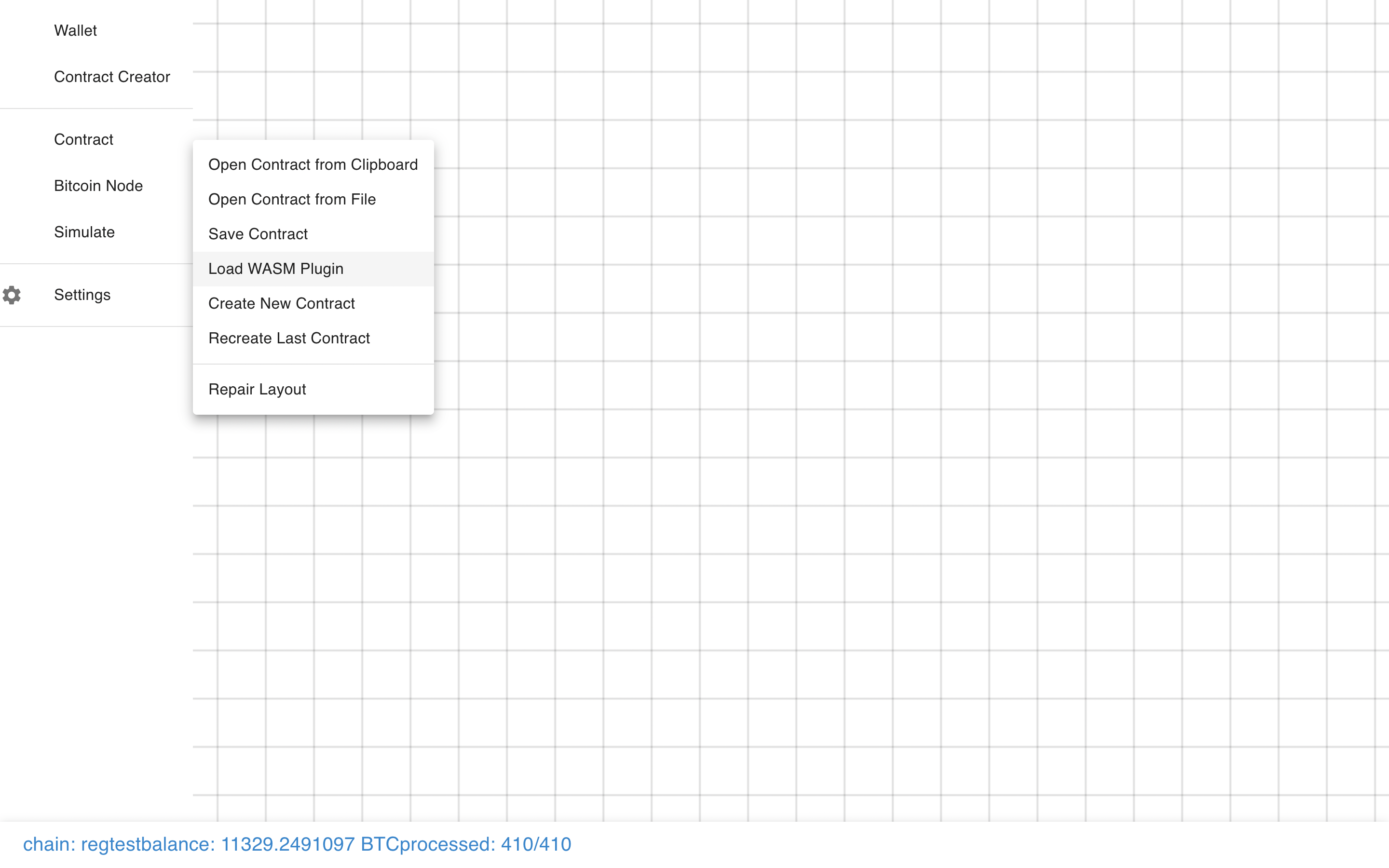

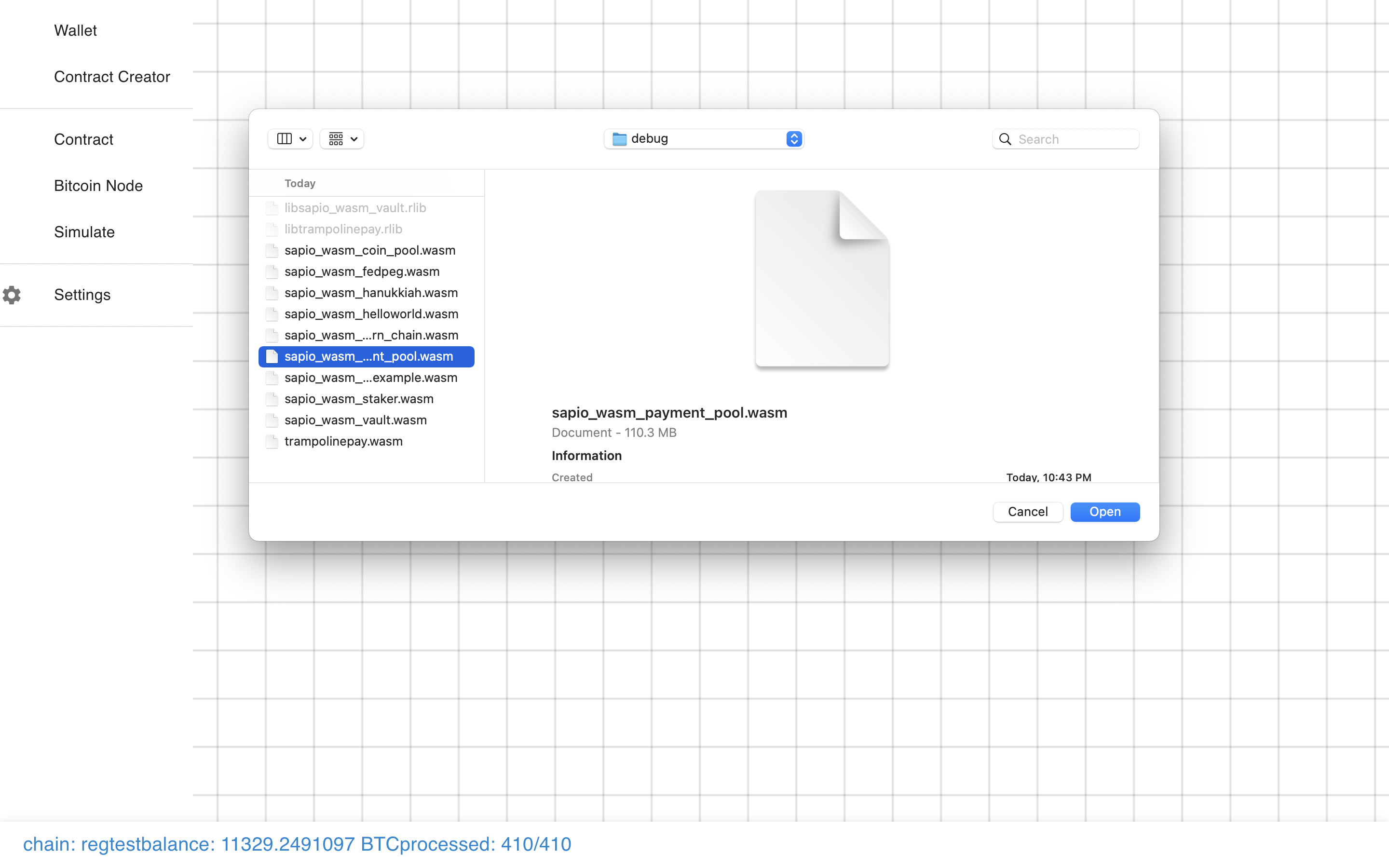

Before we can do that, we need to load a WASM Plugin with a compiled contract.


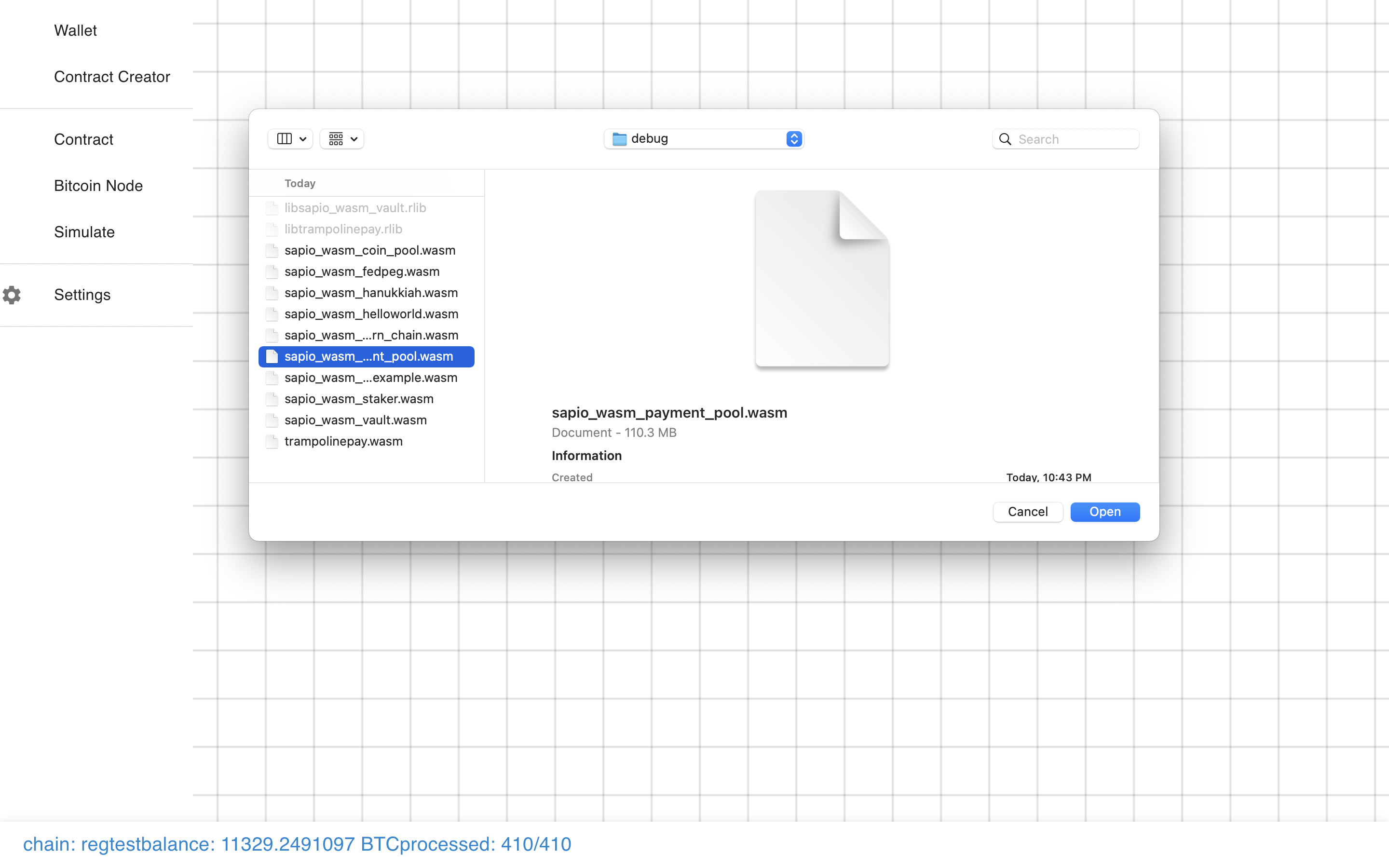

Let’s load the Payment Pool module. You can see the code for it here.


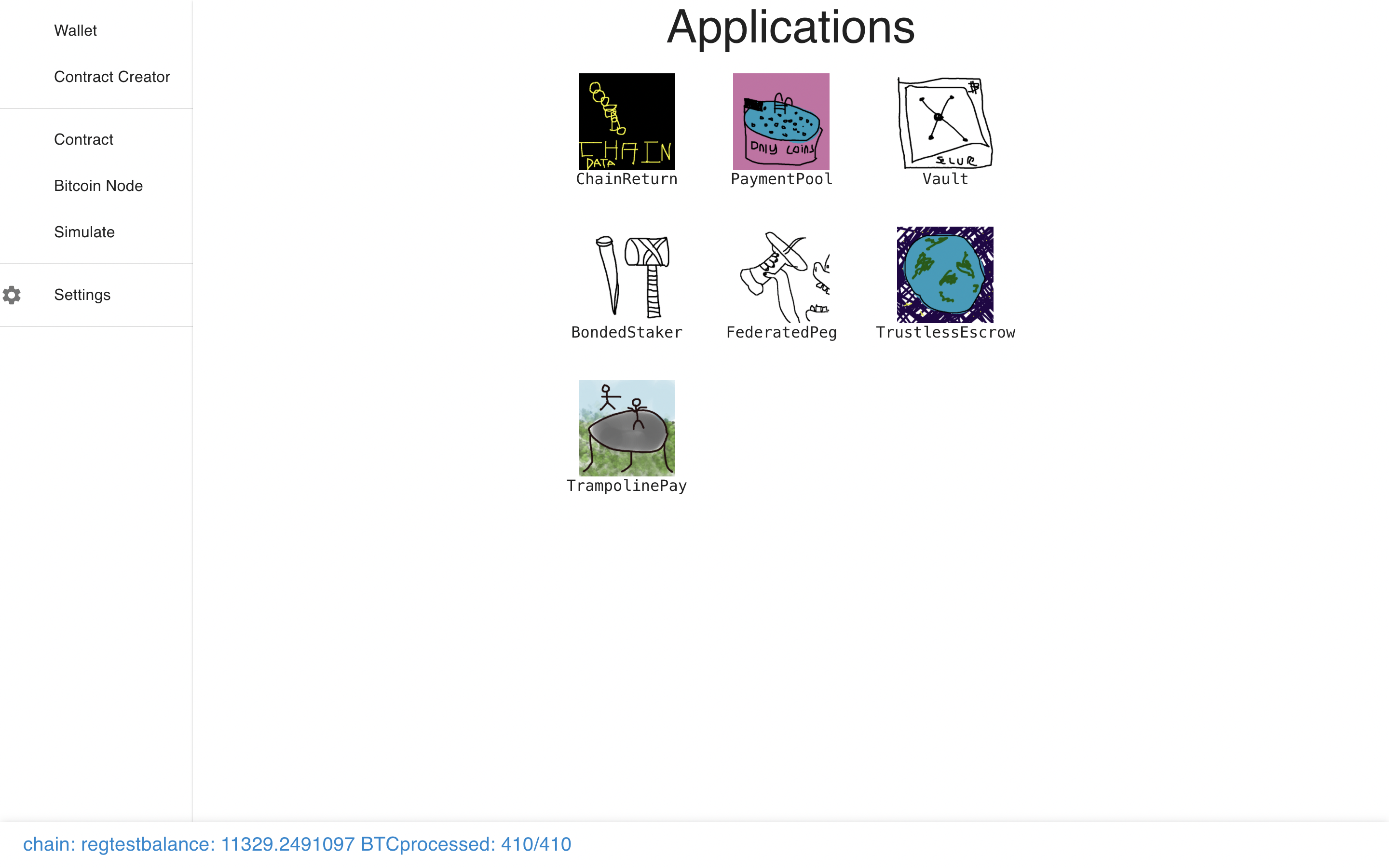

And now we can see we have a module!


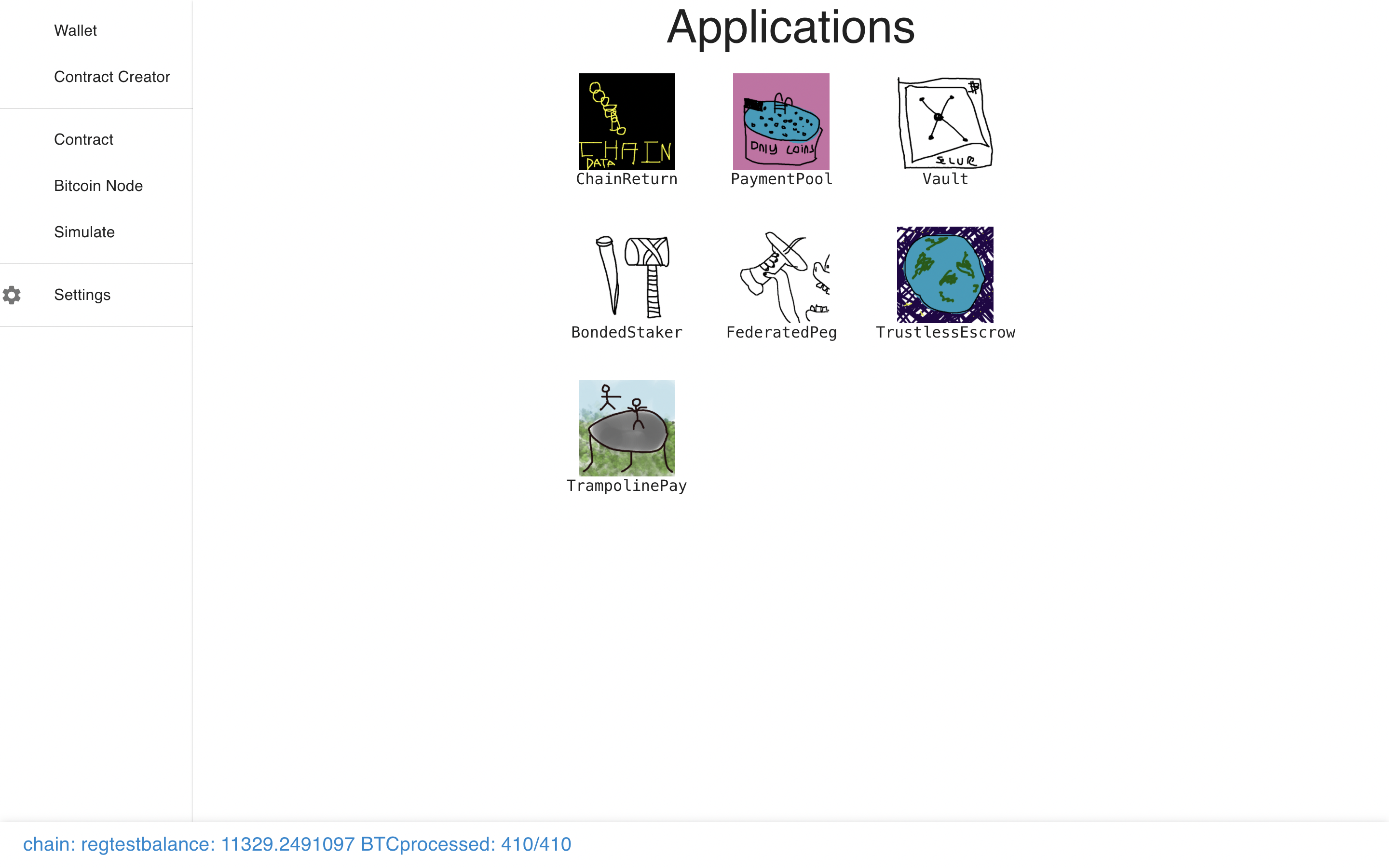

Let’s load a few more so it doesn’t look lonely.


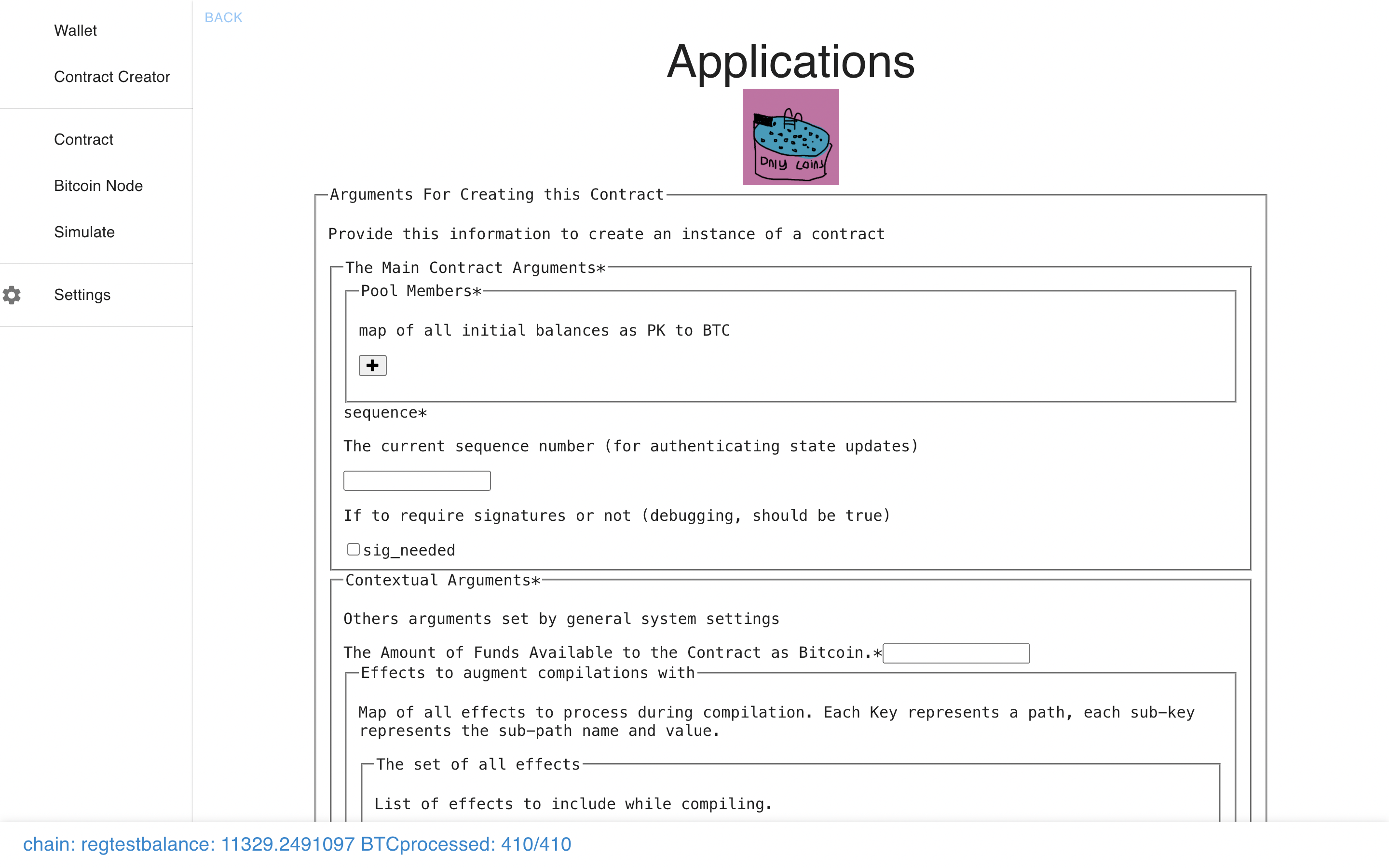

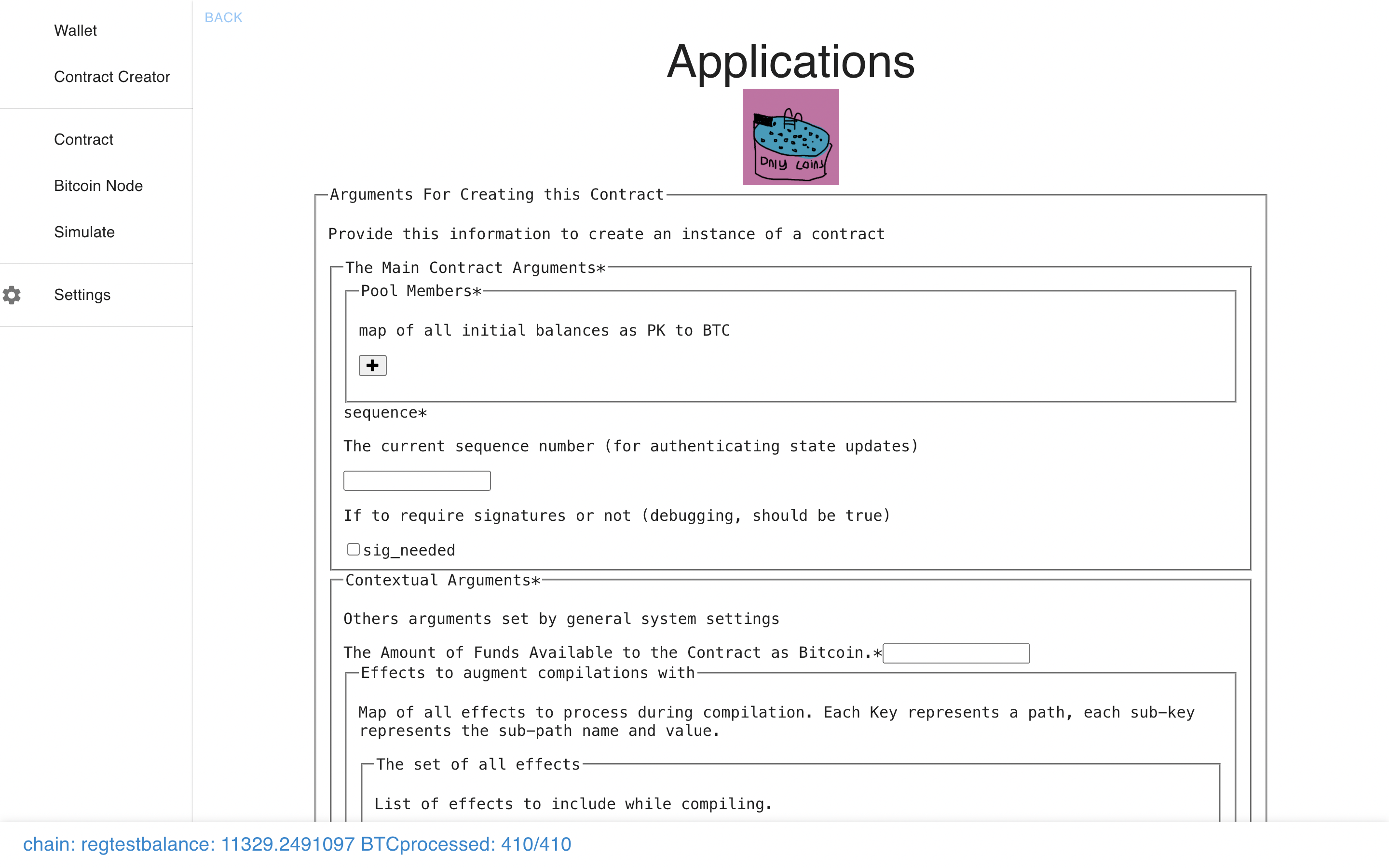

Now let’s check out the Payment Pool module.


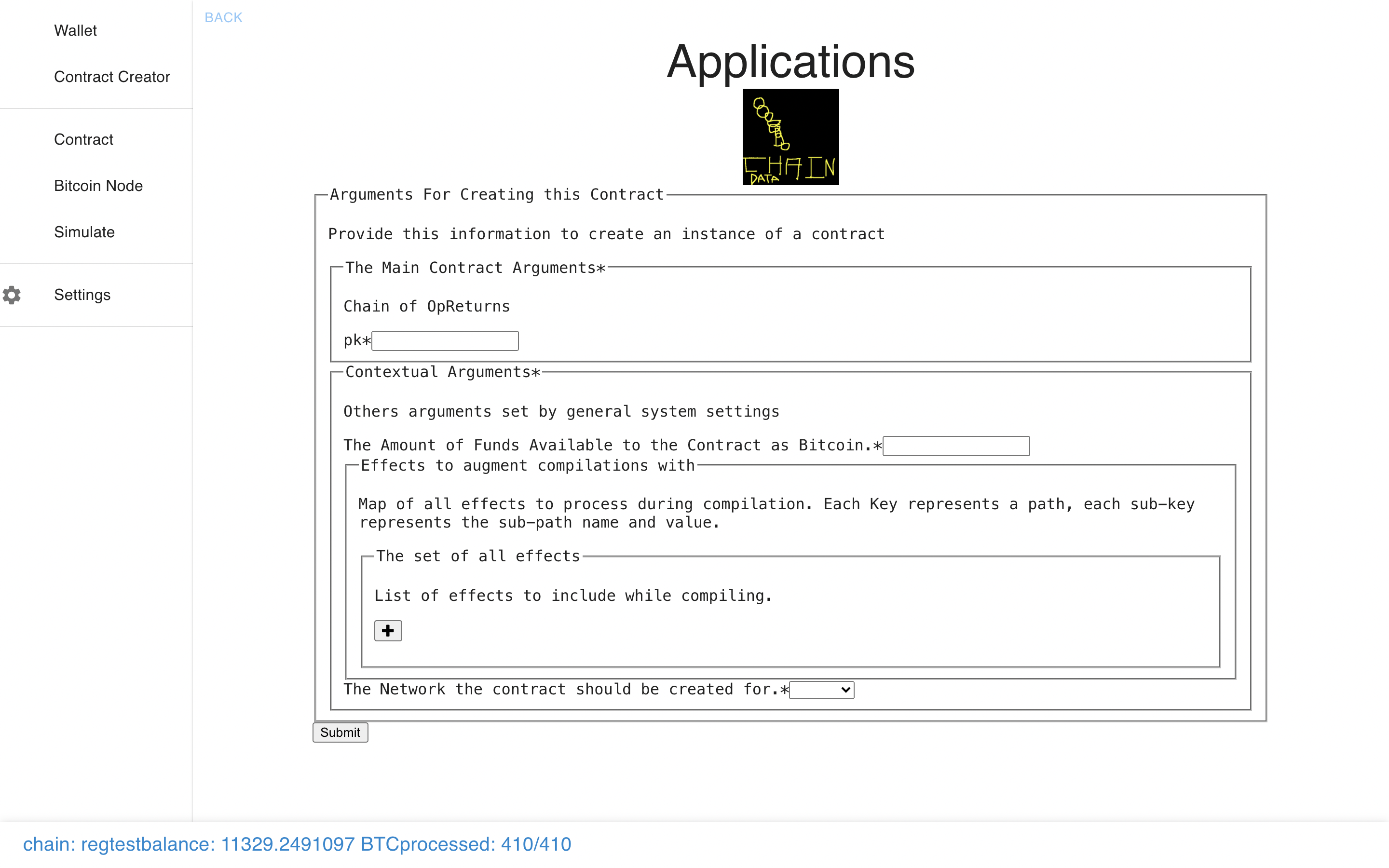

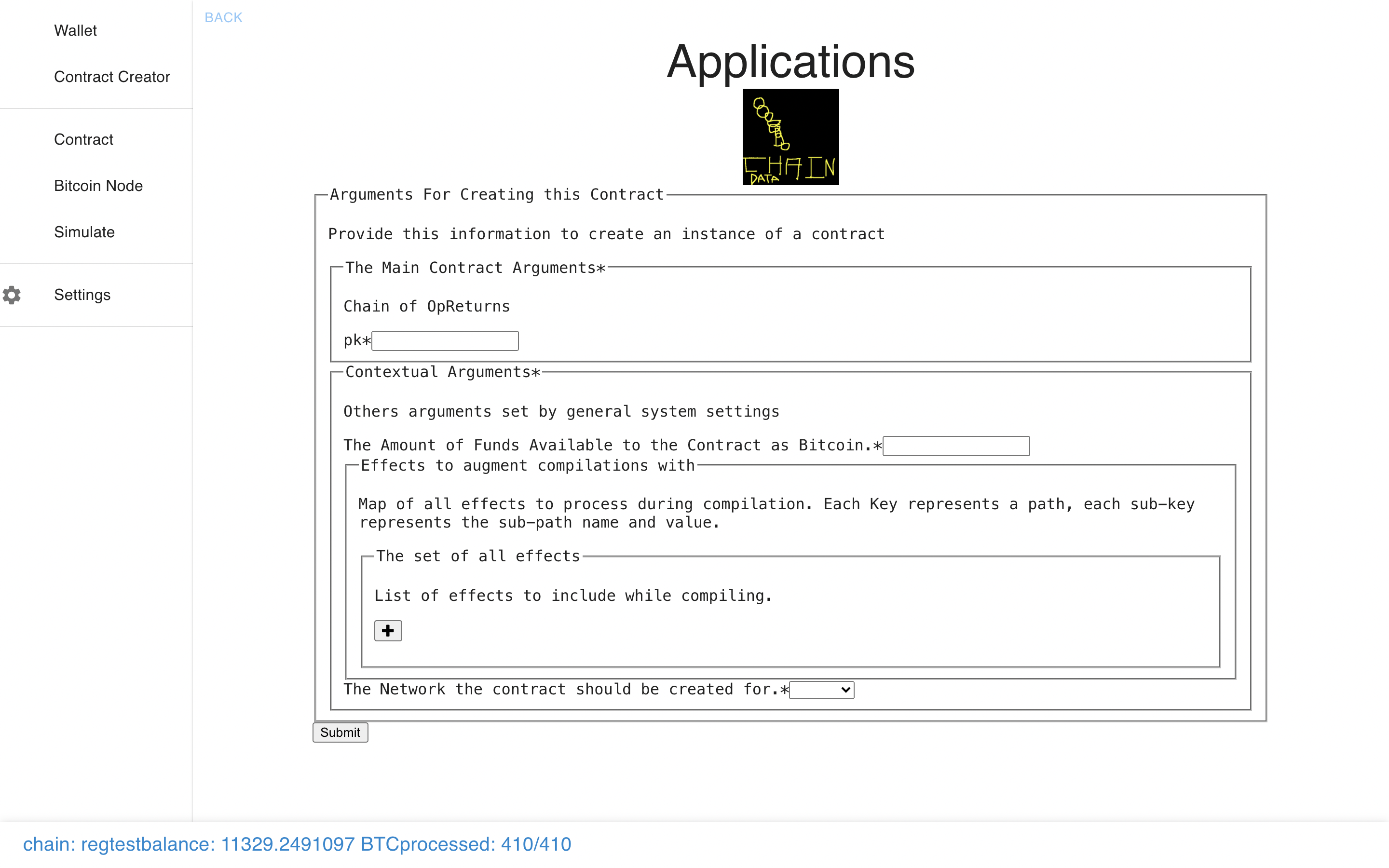

Now let’s check out another one – we can see they each have different types of arguments, auto-generated from the code.


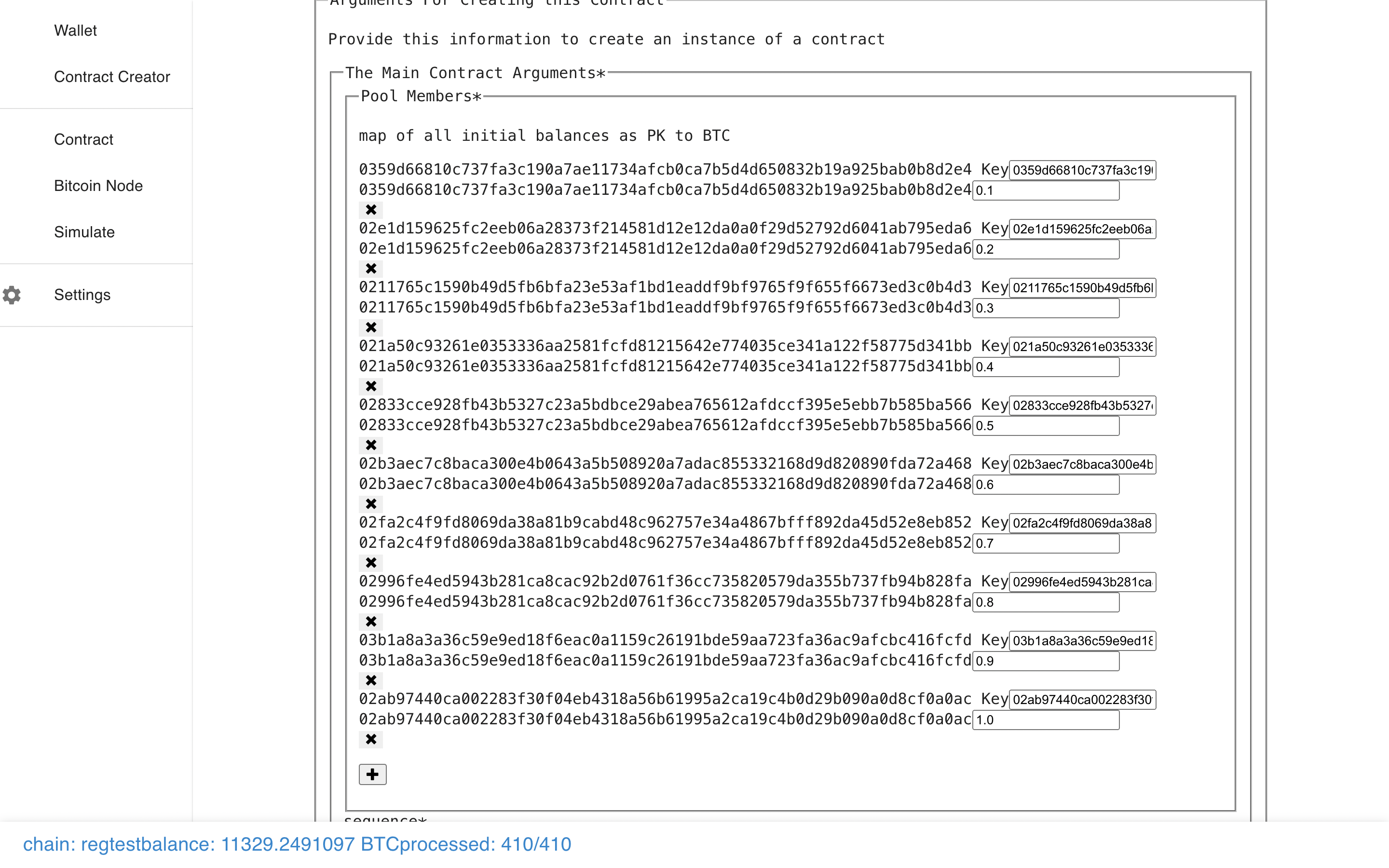

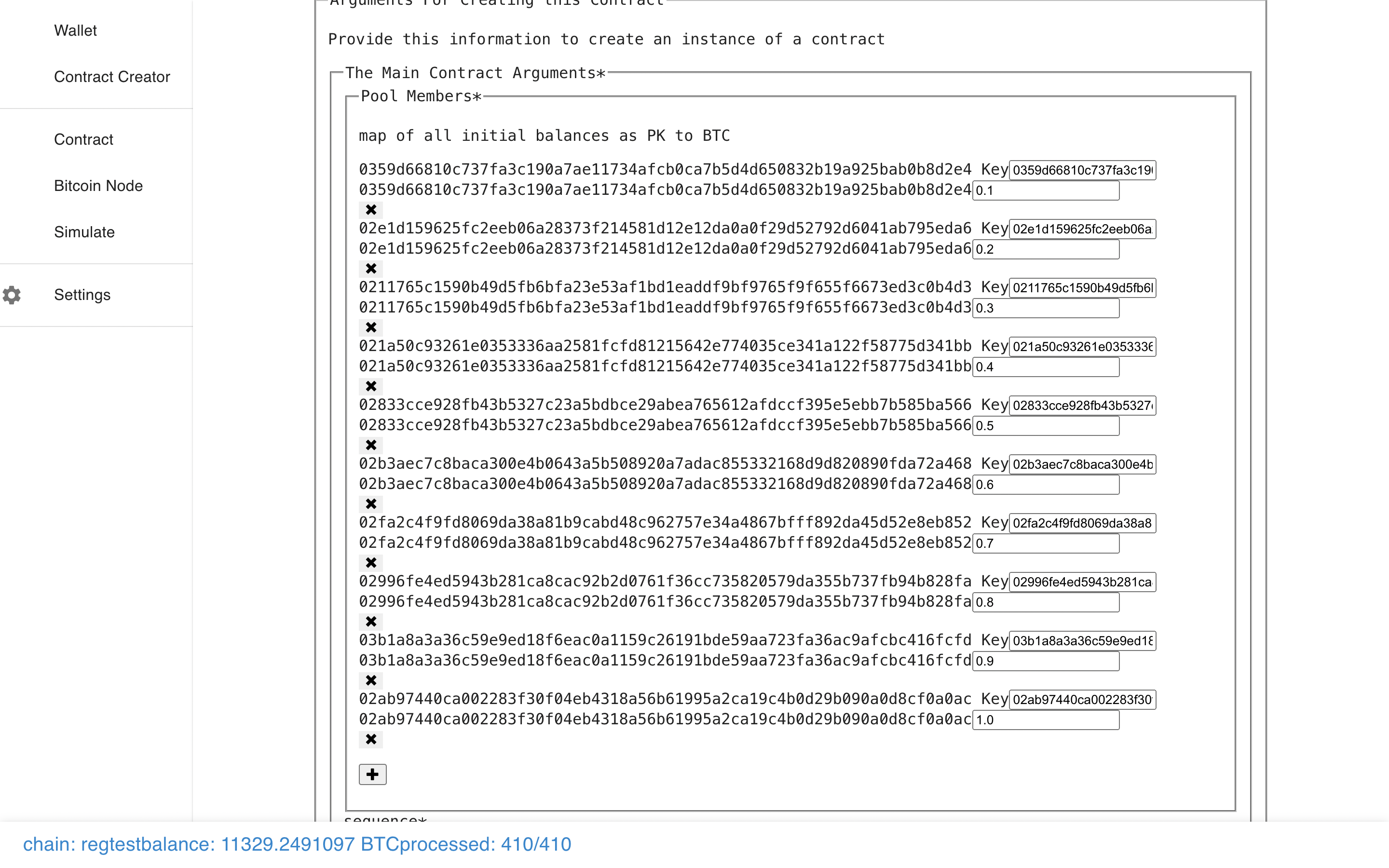

Let’s fill out the form with 10 keys to make a Payment Pool controlled by 10

people, and then submit it.

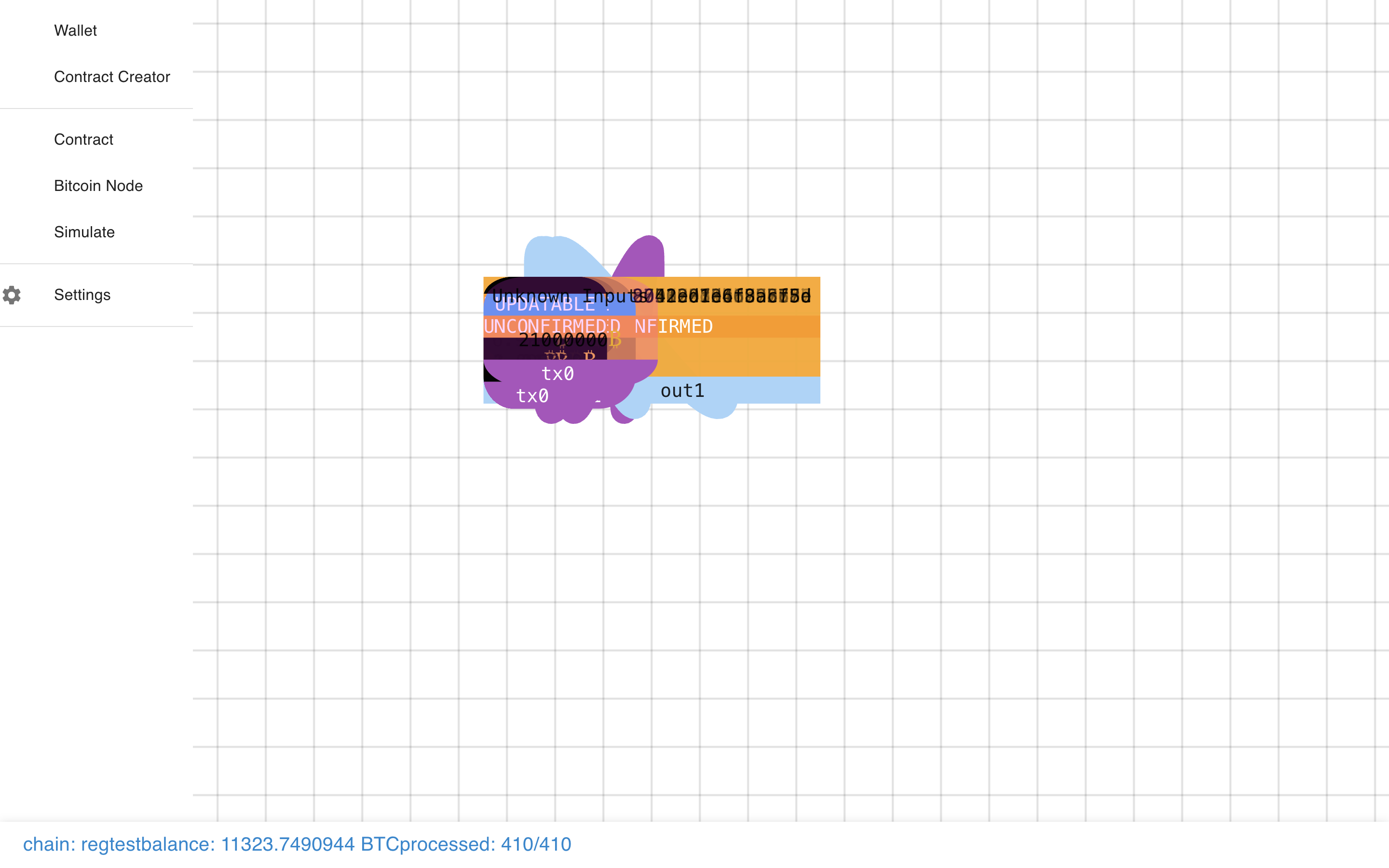

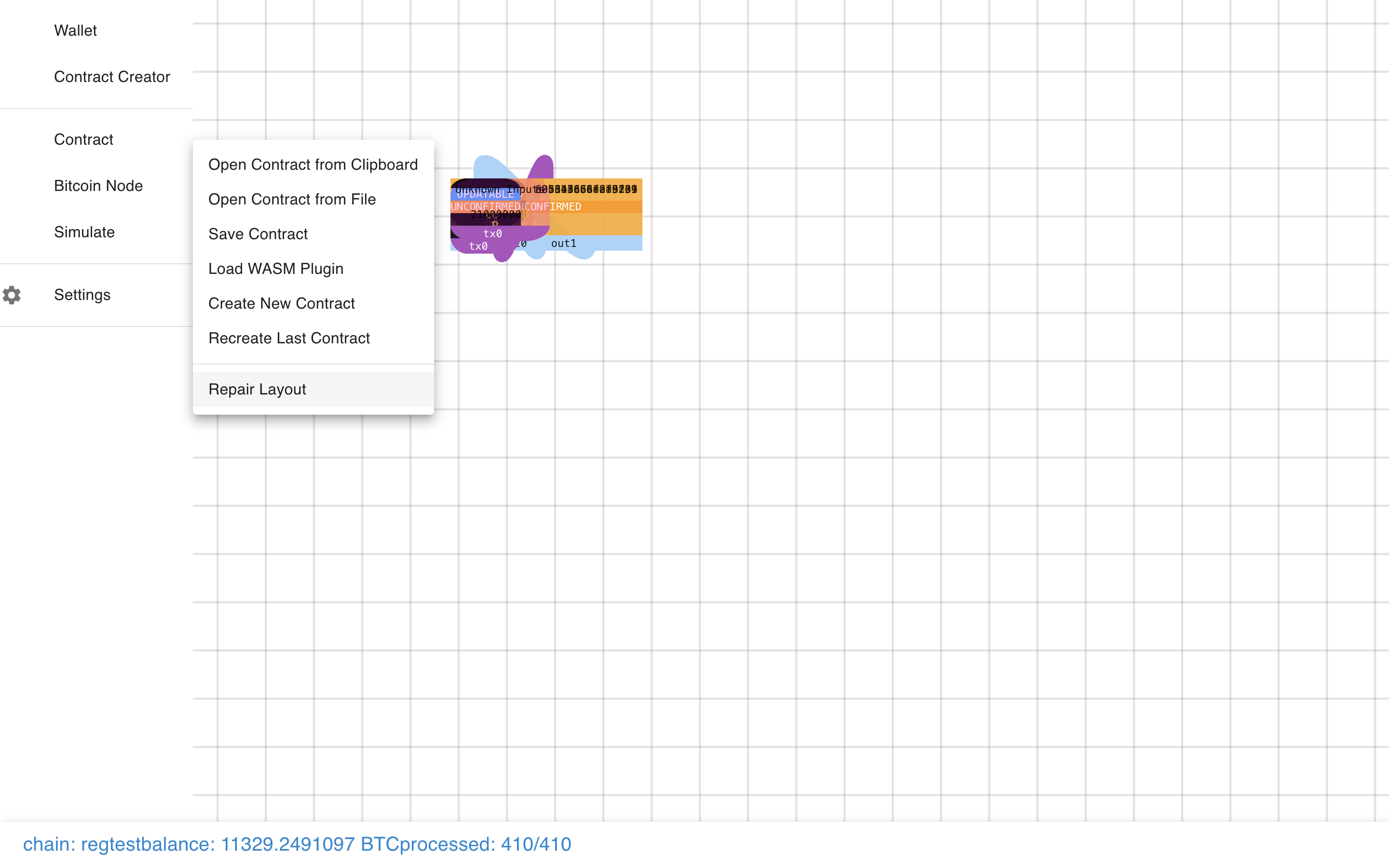

What’s that??? It’s a small bug I am fixing :/. Not to worry…


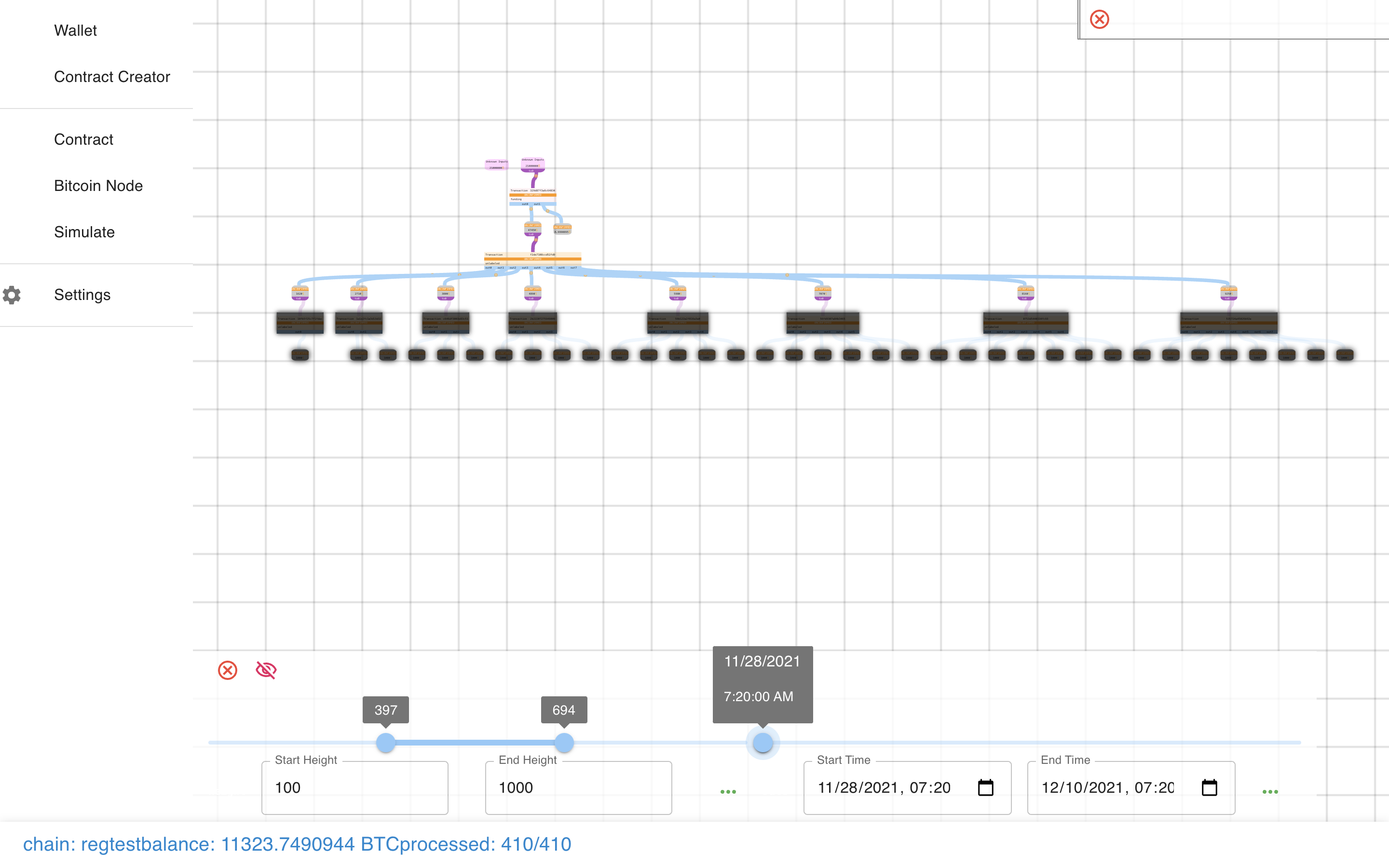

Just click repair layout.


And the presentation resets. I’ll fix it soon, but it can be useful if there’s a glitch to reset it.


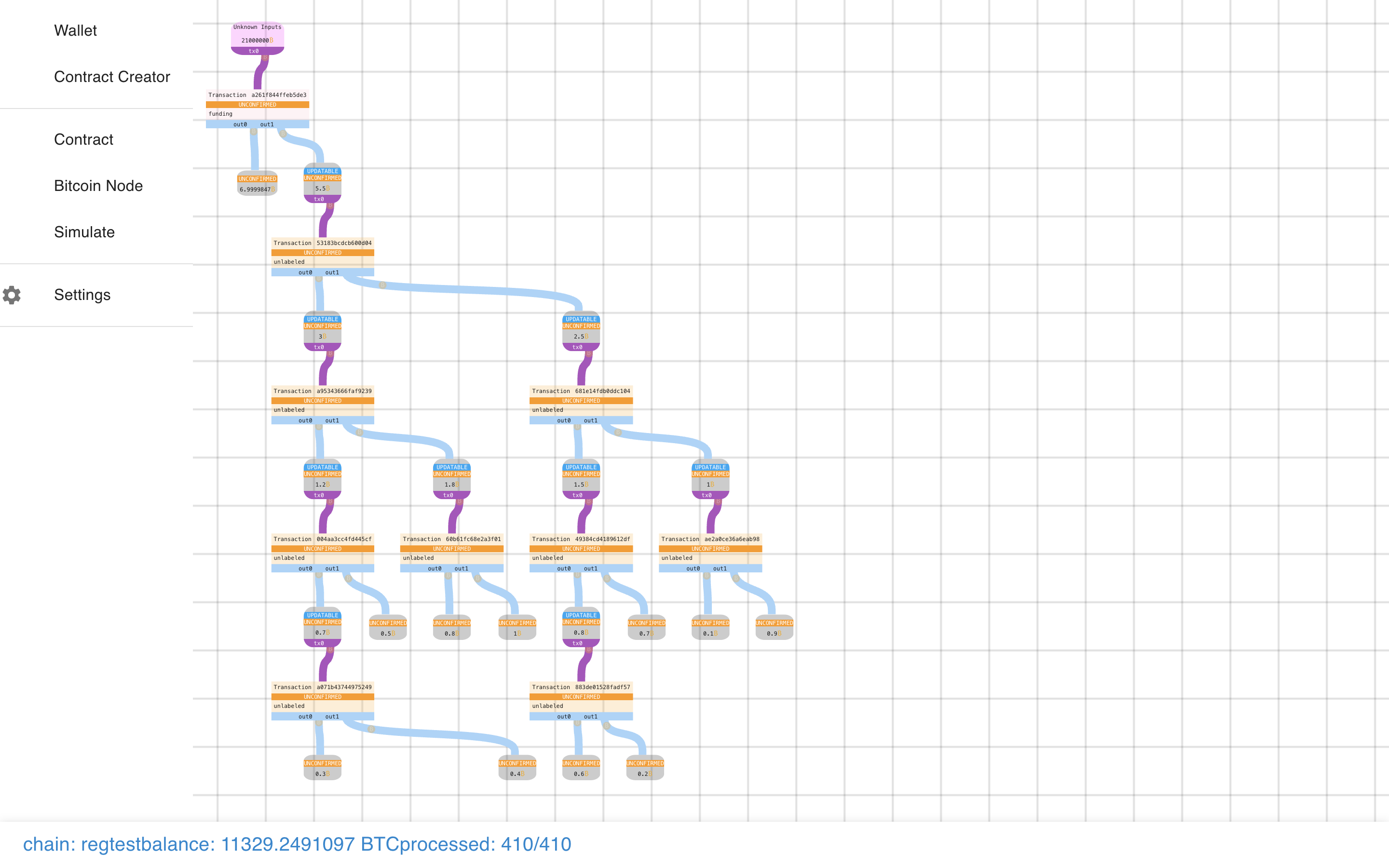

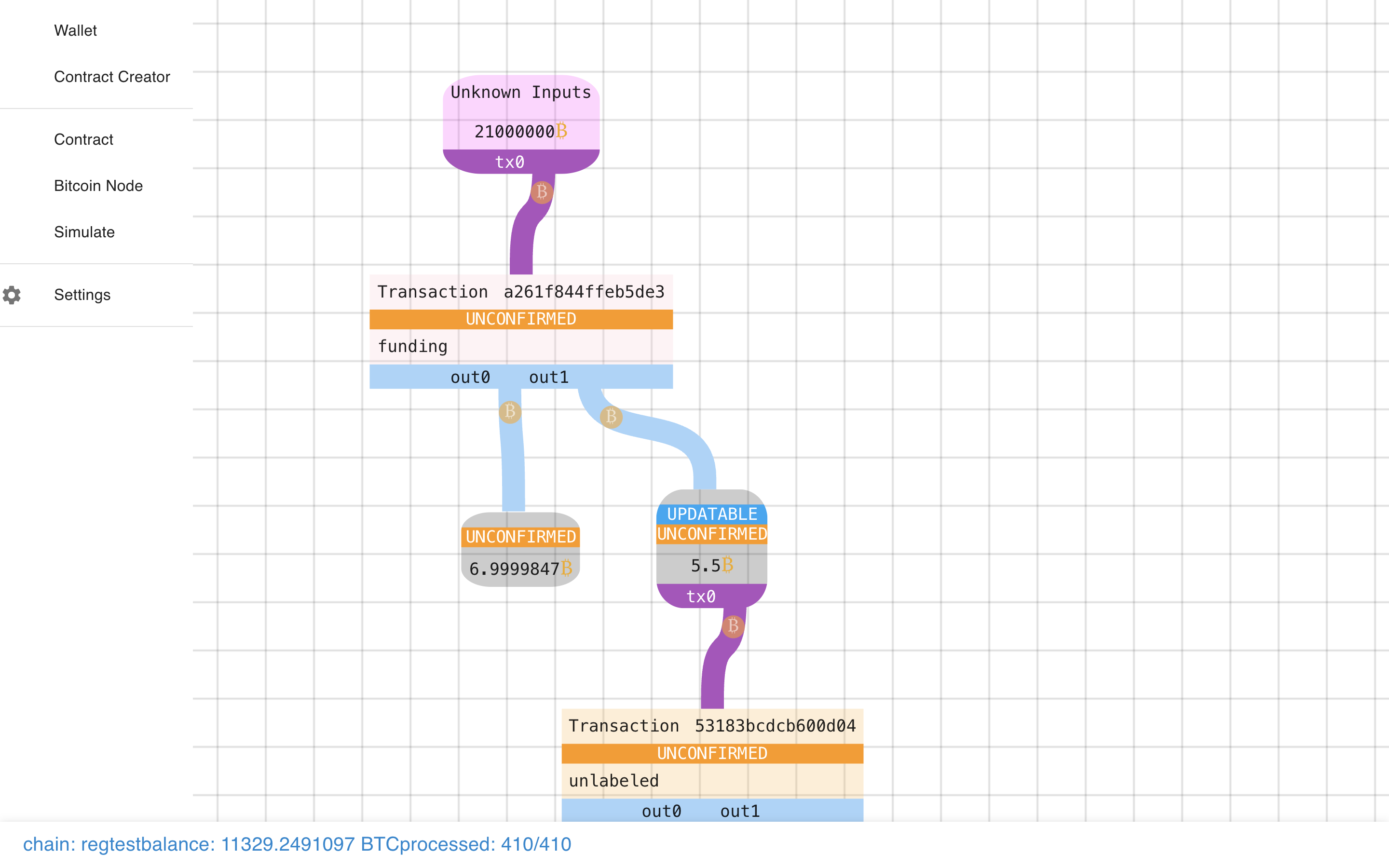

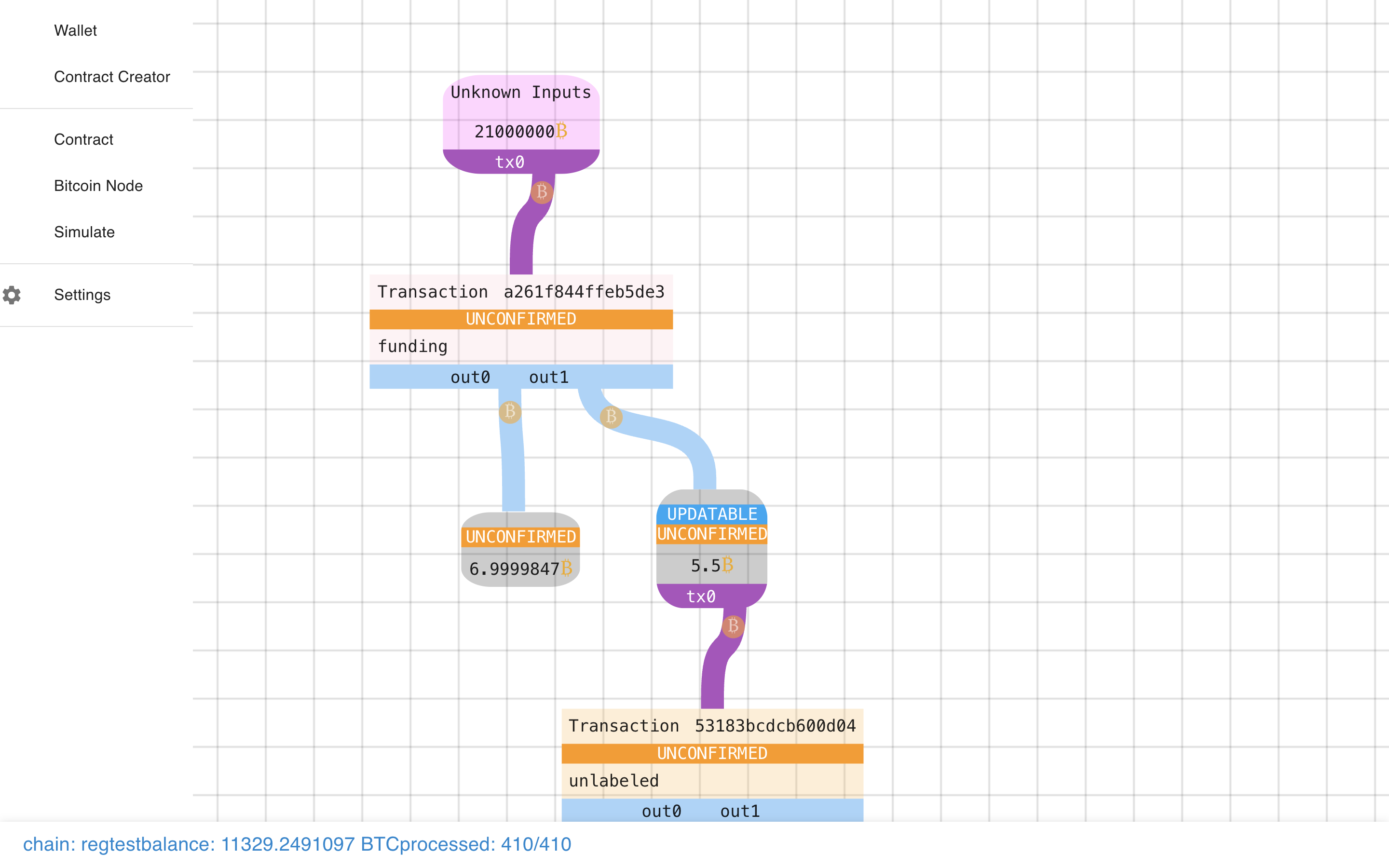

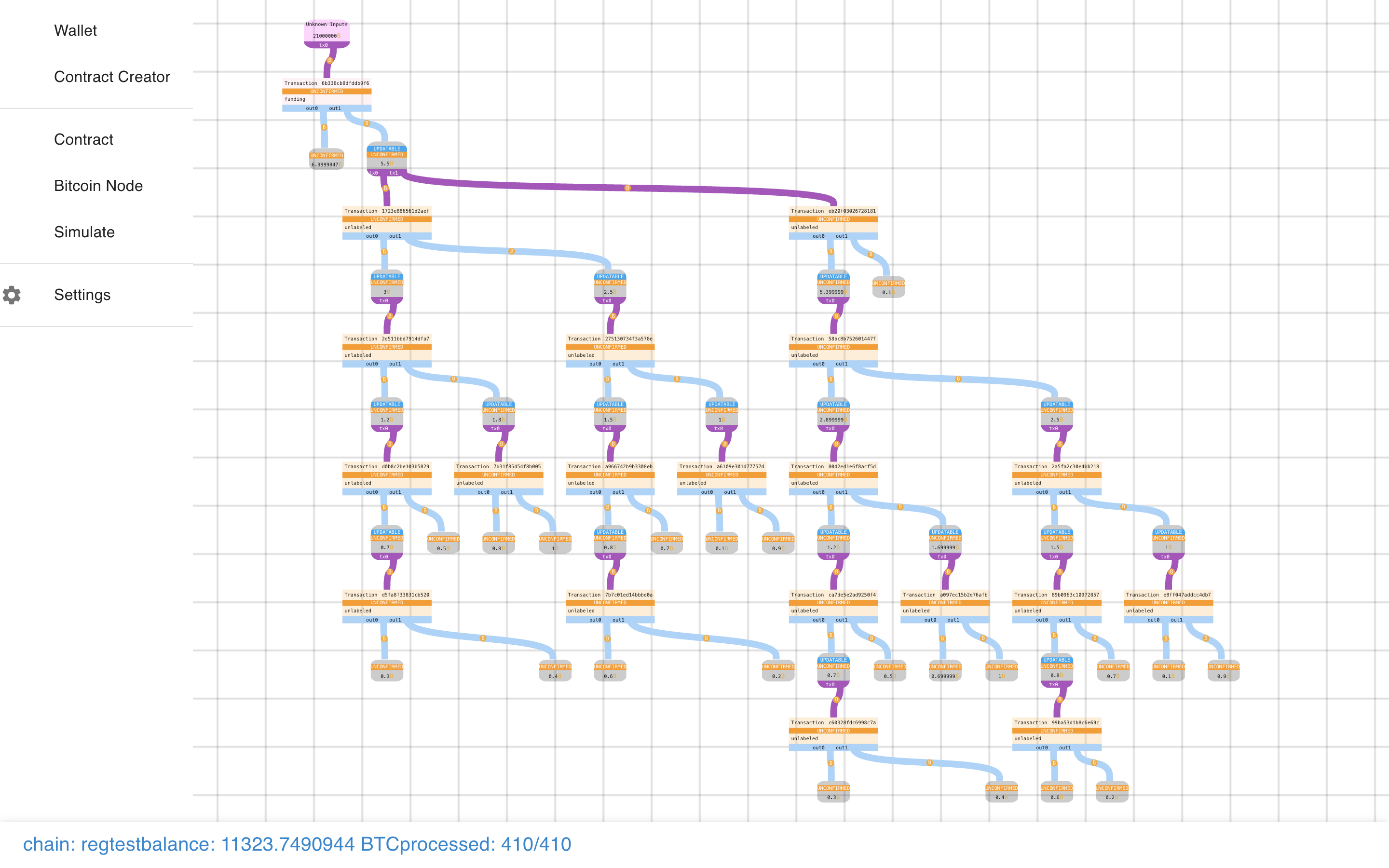

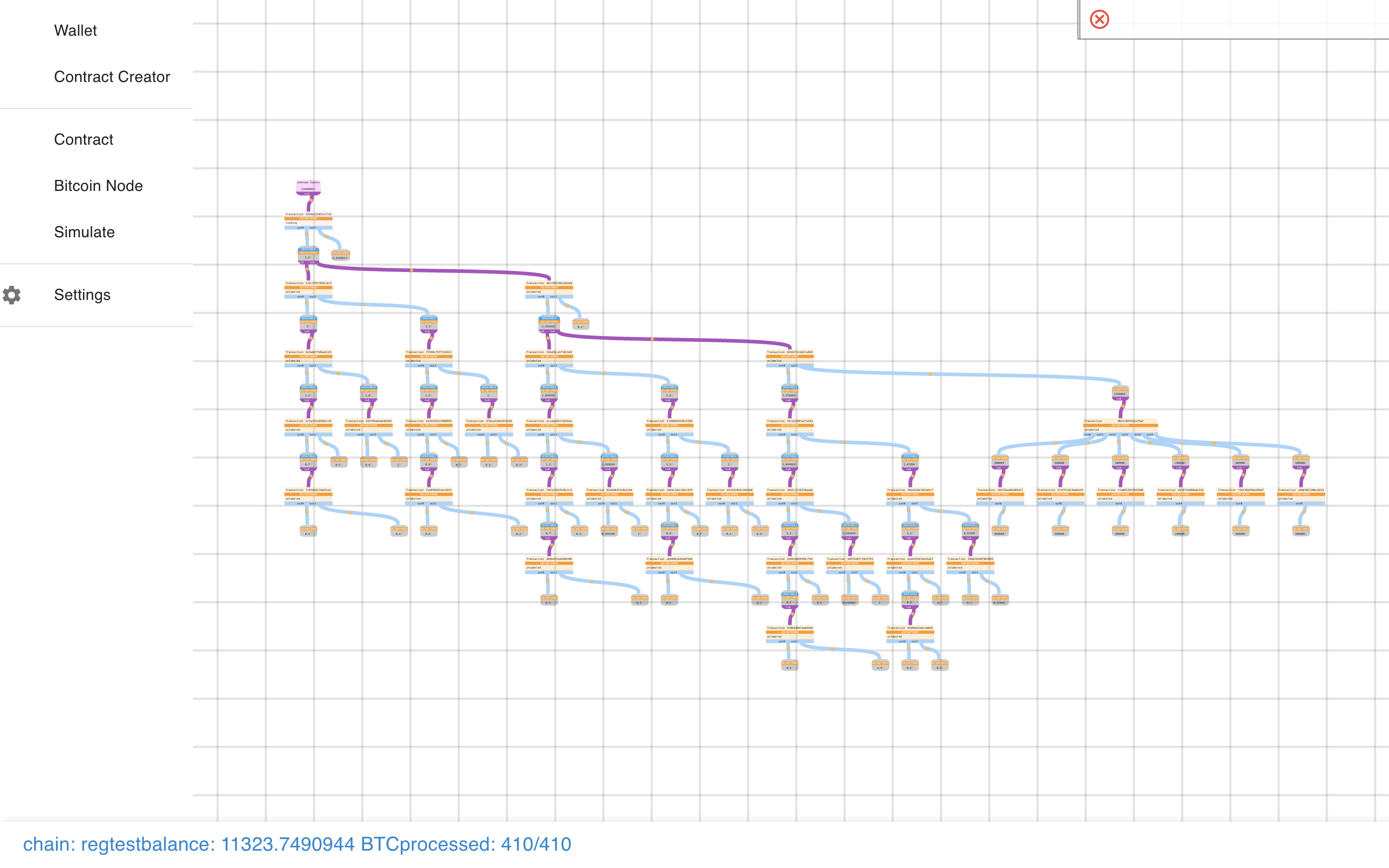

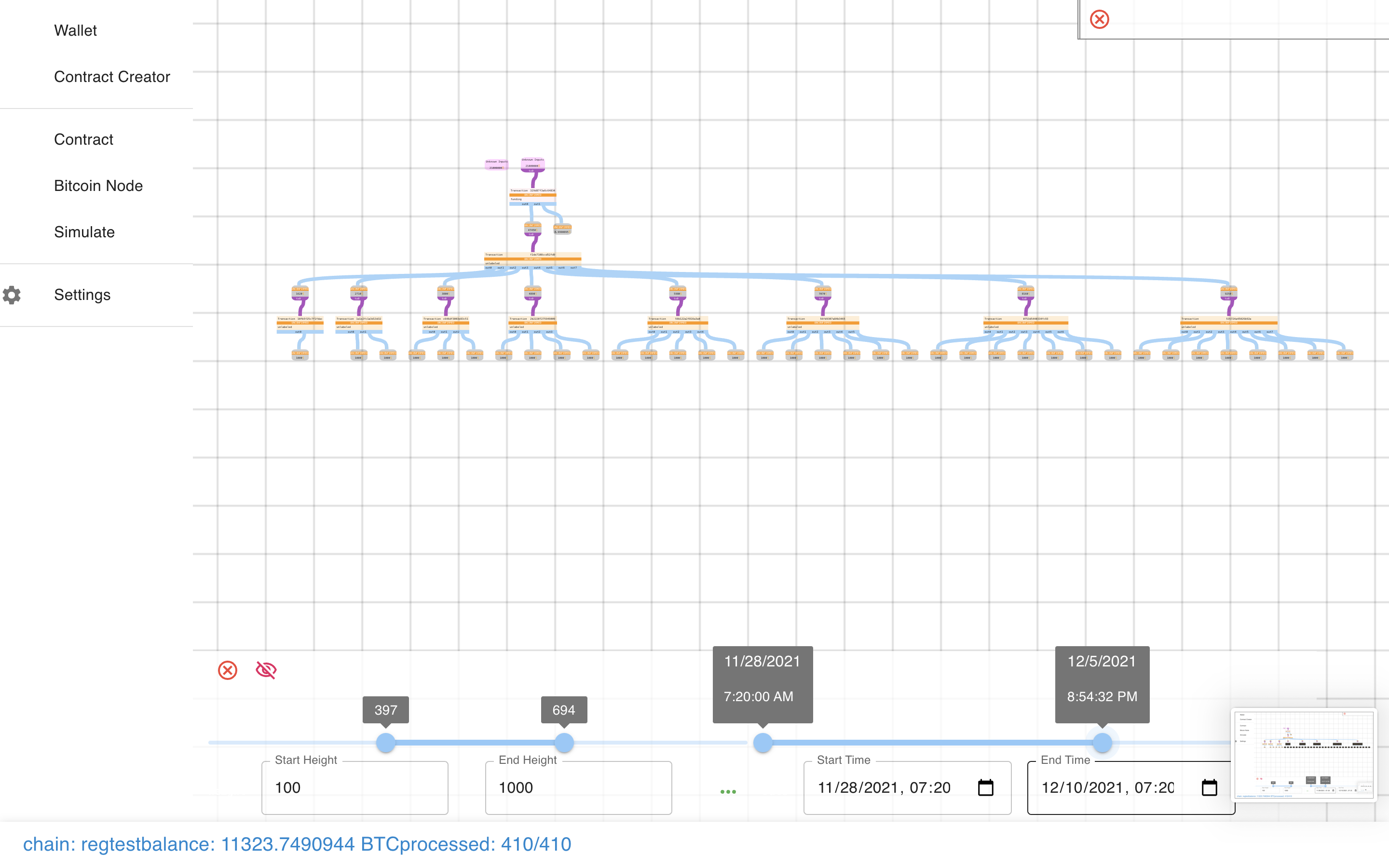

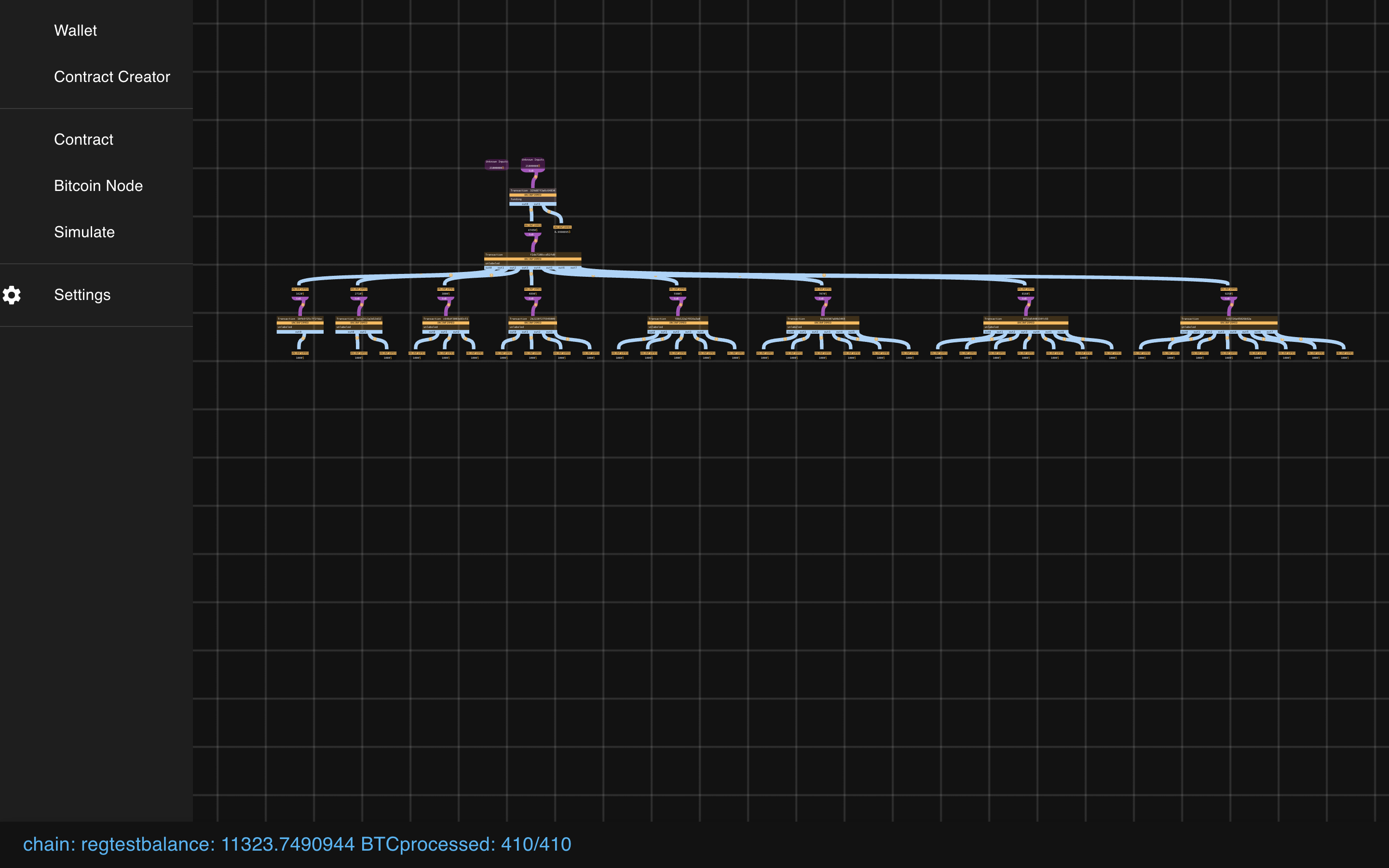

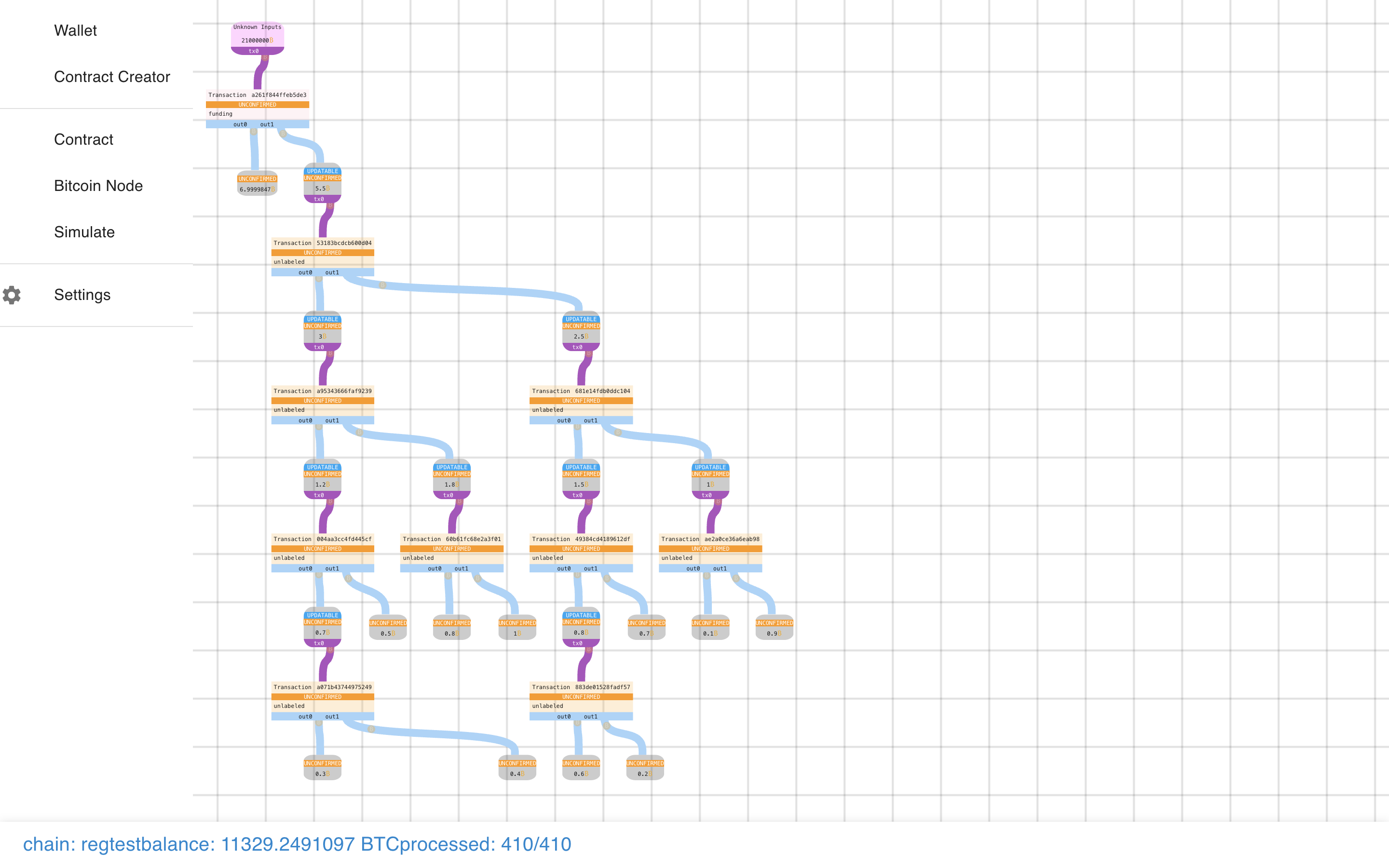

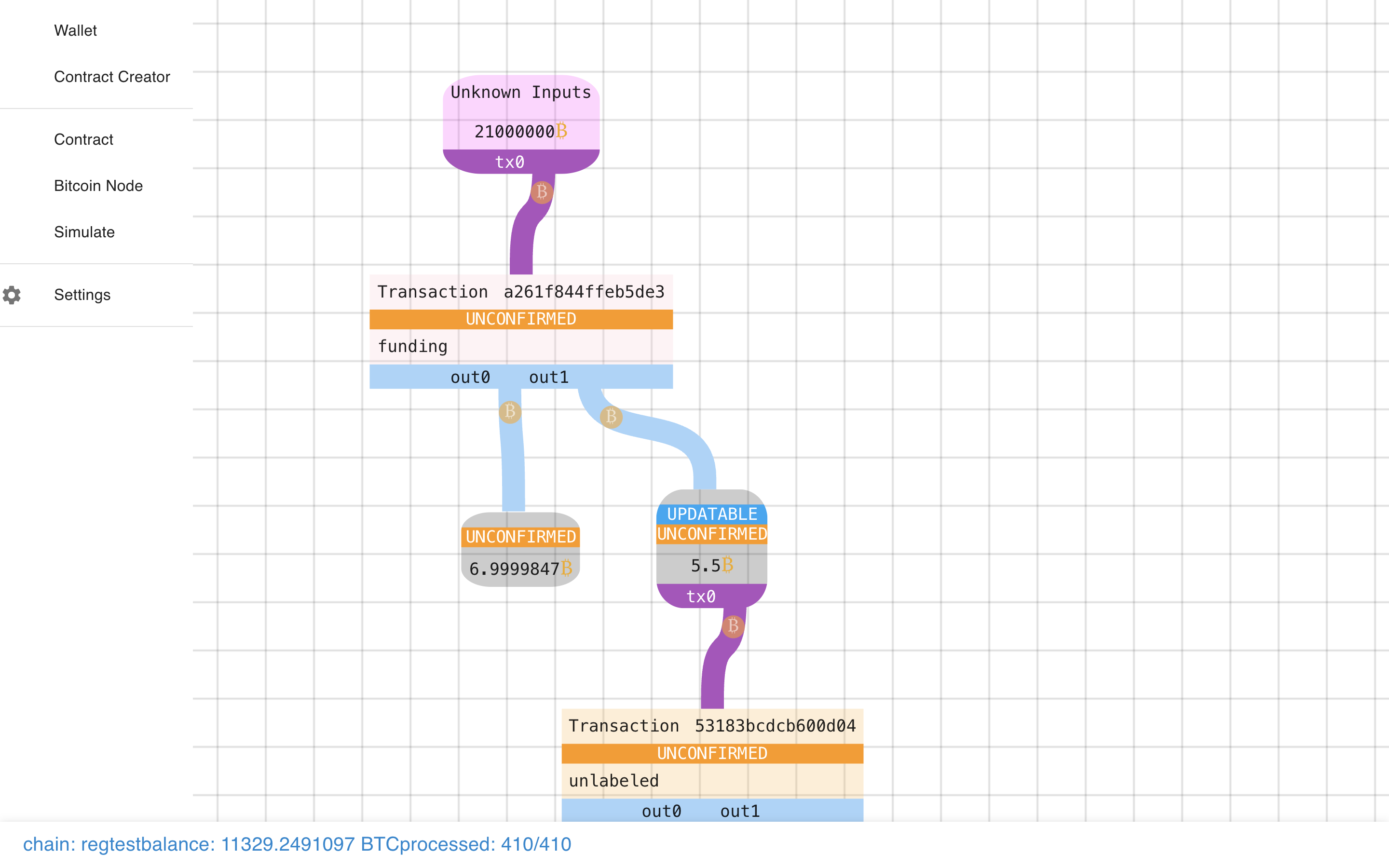

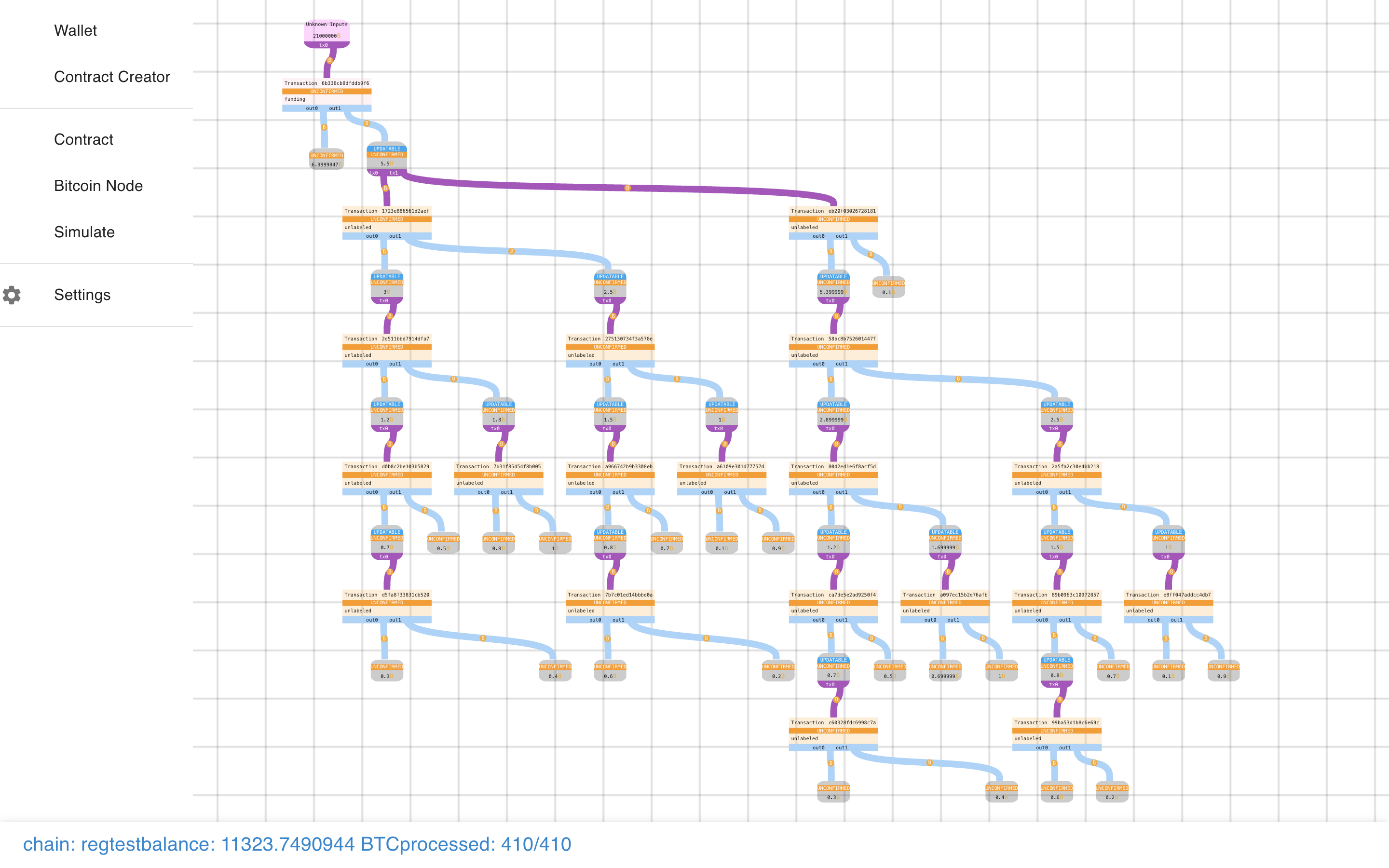

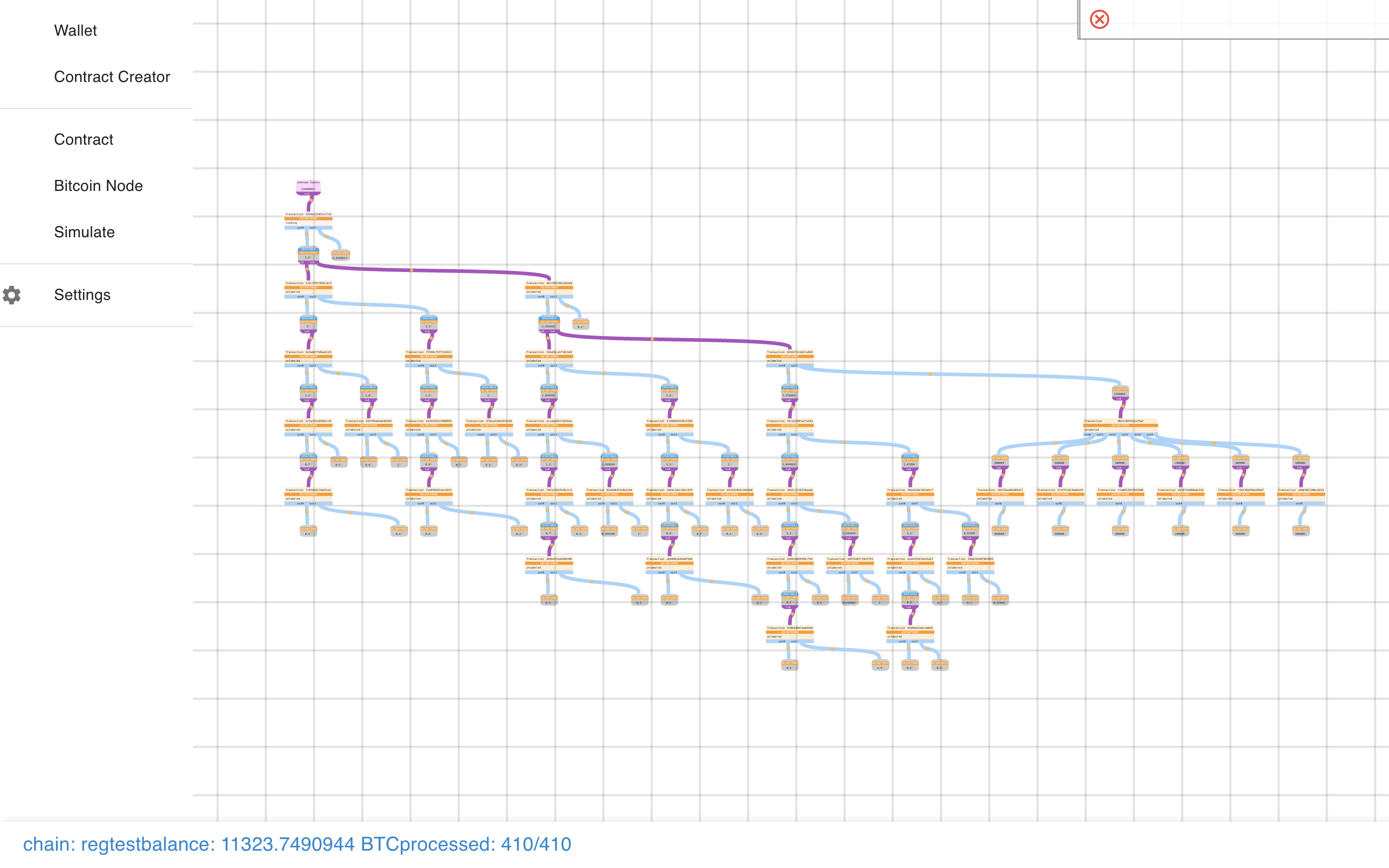

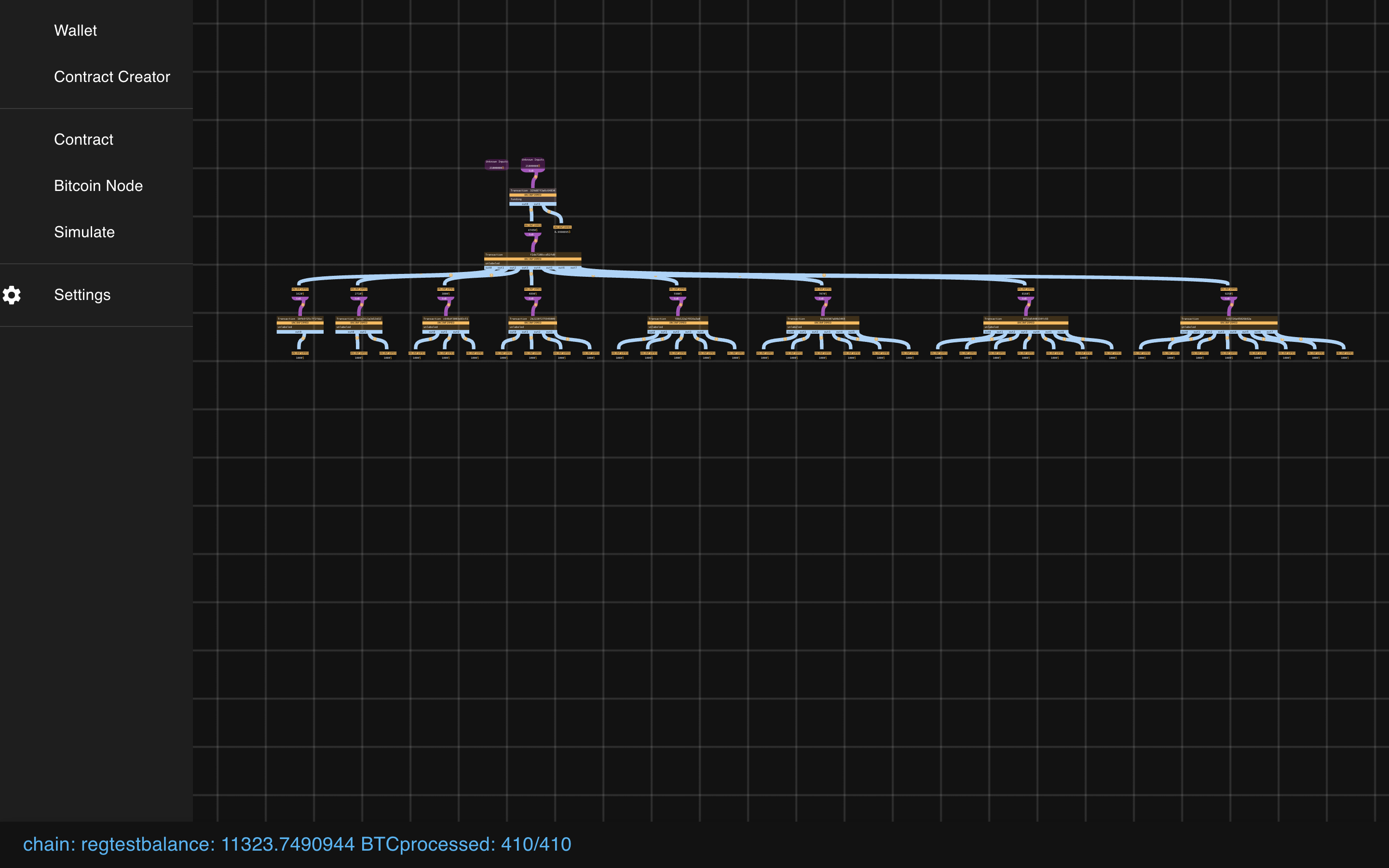

Now we can see the basic structure of the Payment Pool, and how it splits up.

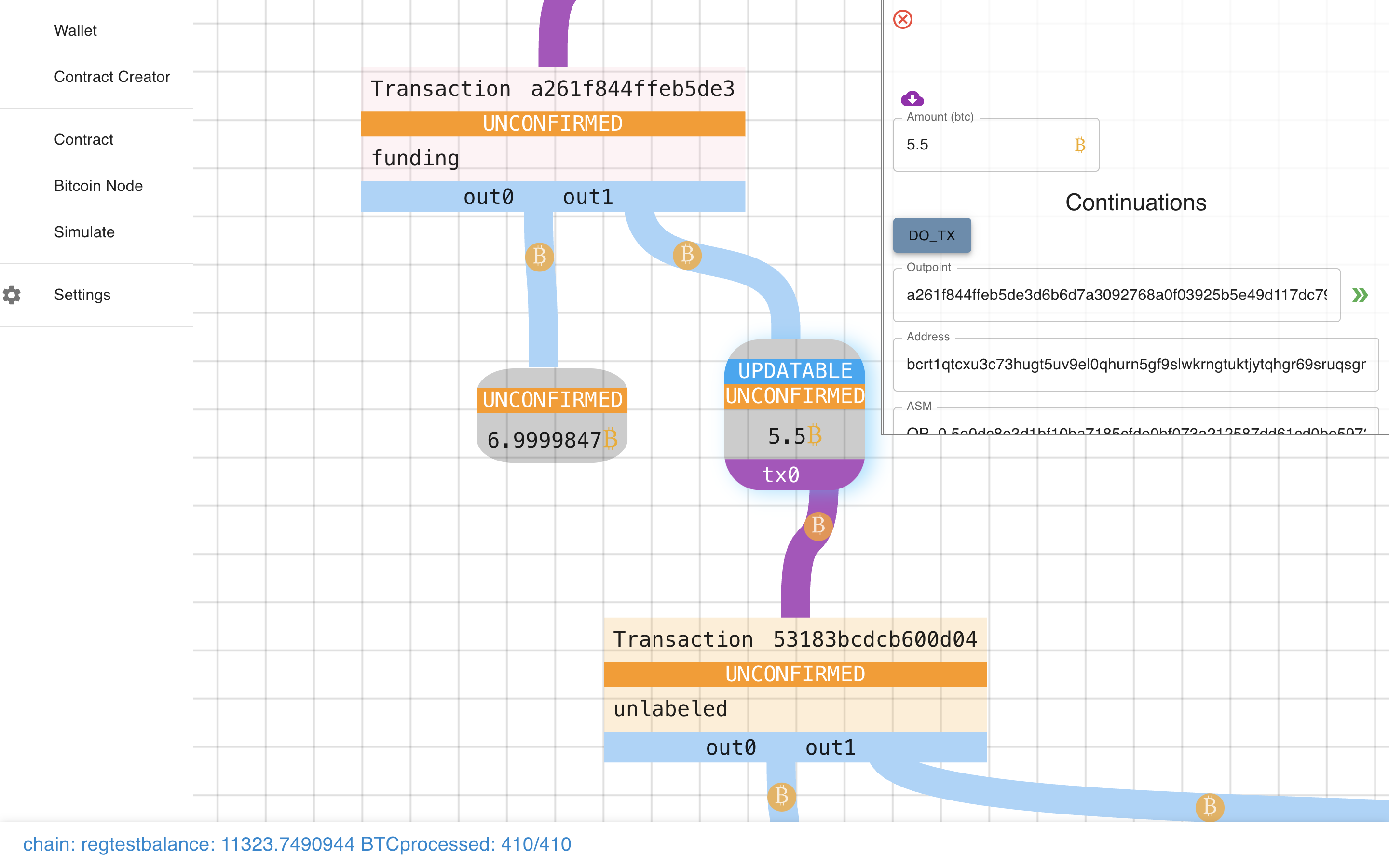

Let’s get a closer look…


Let’s zoom out (not helpful!)…


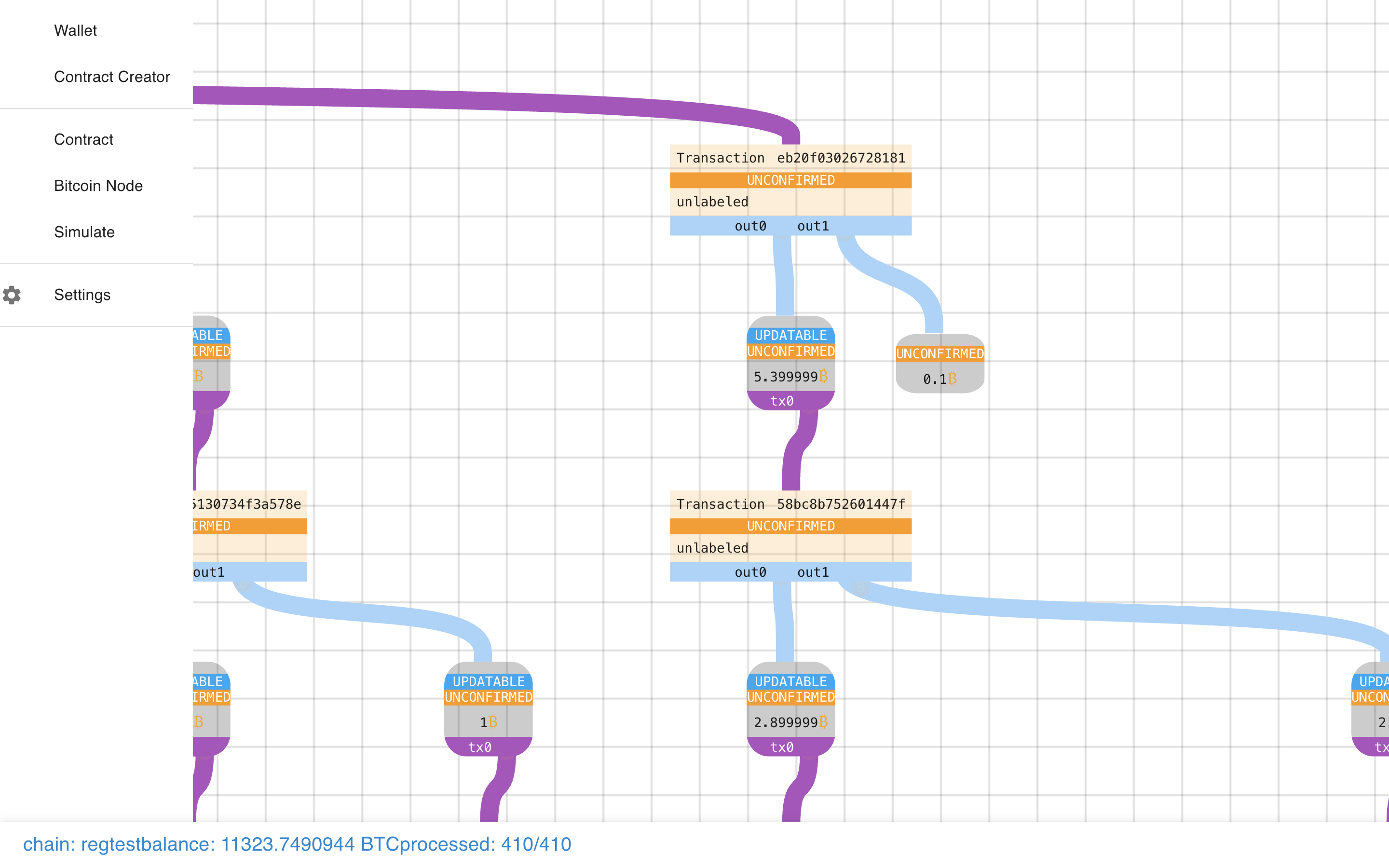

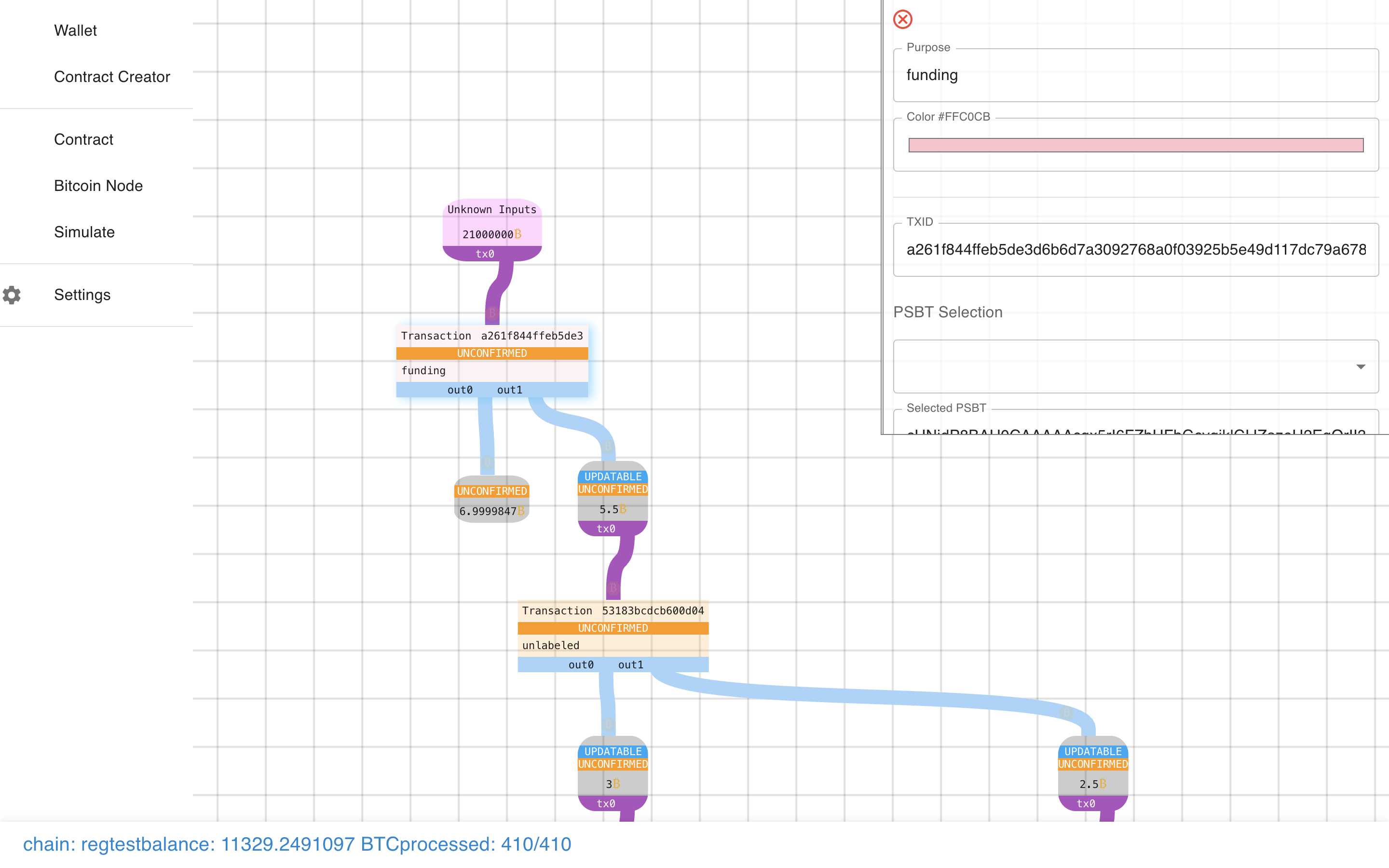

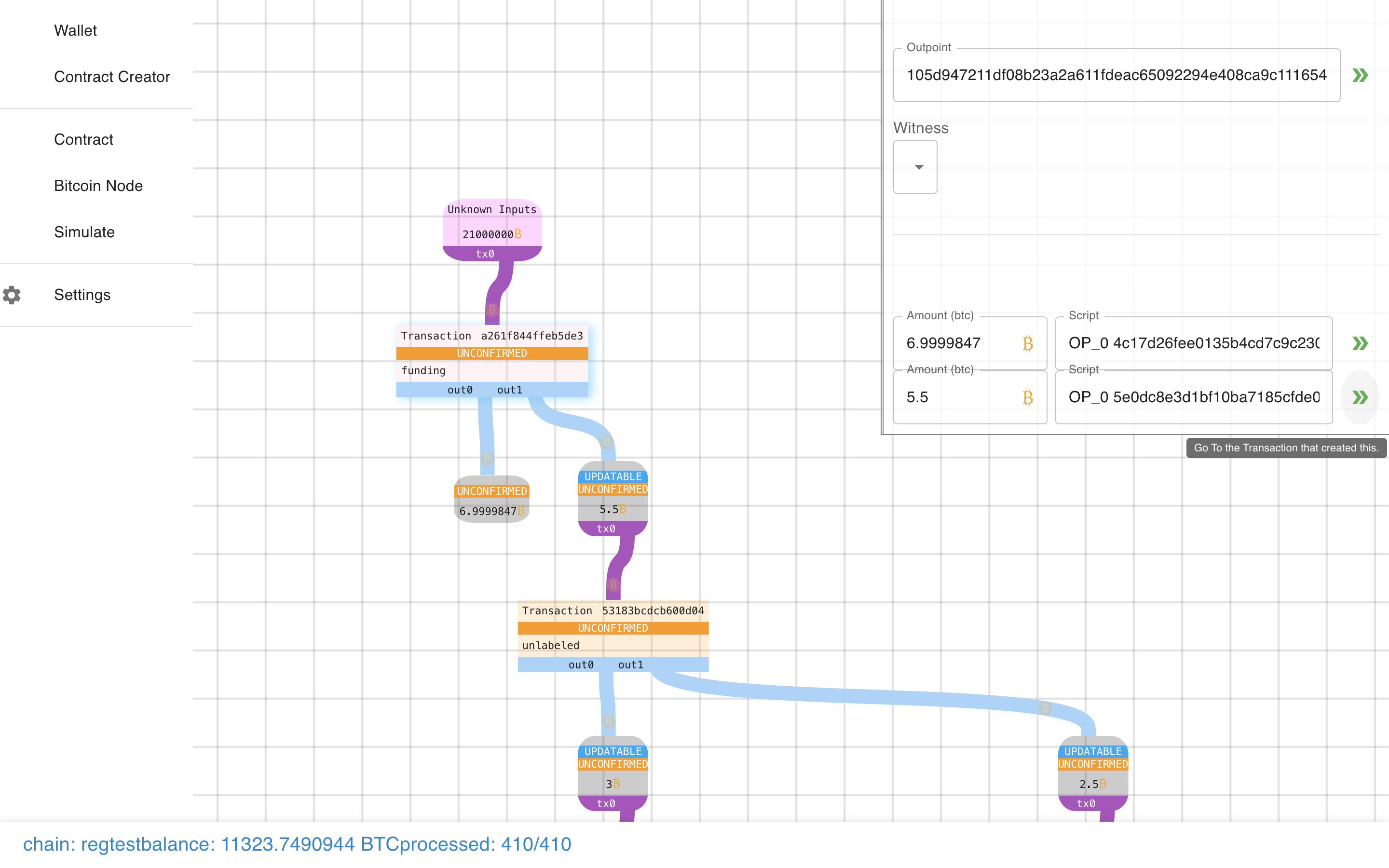

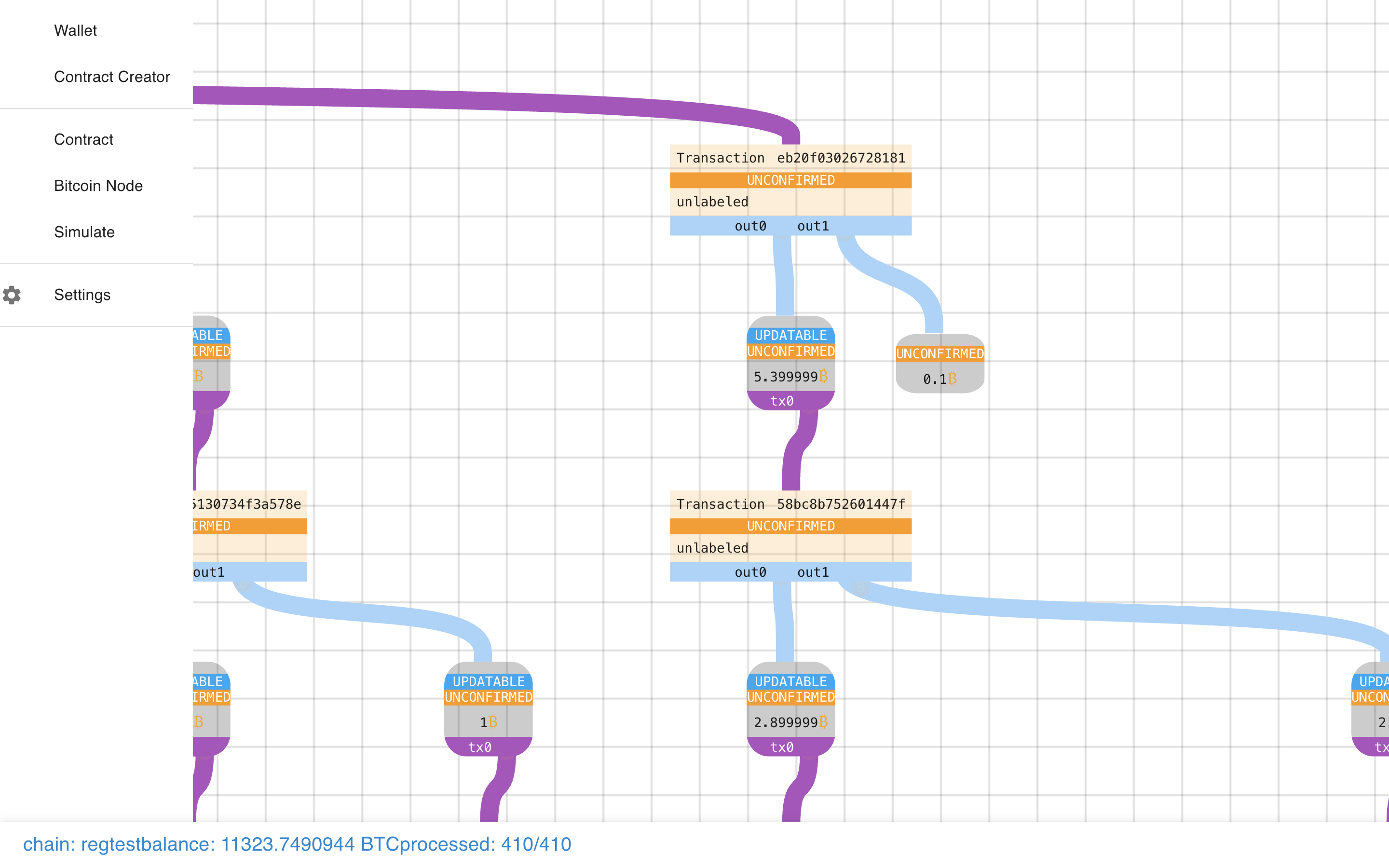

Let’s zoom back in. Note how the transactions are square boxes and the outputs are rounded rectangles. Blue lines connect transactions to their outputs. Purple lines connect outputs to their (potential) spends.

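The picture maps onto a simple graph data structure. As a hedged illustration (hypothetical types, not Sapio Studio's actual internals), here is a std-only Rust model where `tx_outputs` holds the blue transaction-to-output edges and `output_spends` holds the purple output-to-potential-spend edges. Outputs with more than one potential spend are the points where the contract offers a choice.

```rust
use std::collections::HashMap;

/// Toy model of the graph Sapio Studio draws (hypothetical types).
#[derive(Debug, Default)]
pub struct ContractGraph {
    /// txid -> ids of the outputs it creates (blue edges)
    pub tx_outputs: HashMap<String, Vec<String>>,
    /// output id -> txids of potential spends (purple edges)
    pub output_spends: HashMap<String, Vec<String>>,
}

impl ContractGraph {
    /// Add a transaction that spends `inputs` and creates `n_outputs`
    /// outputs, named "<txid>:<index>".
    pub fn add_tx(&mut self, txid: &str, inputs: &[&str], n_outputs: usize) {
        let outs: Vec<String> = (0..n_outputs).map(|i| format!("{txid}:{i}")).collect();
        for o in &outs {
            self.output_spends.entry(o.clone()).or_default();
        }
        for input in inputs {
            self.output_spends
                .entry(input.to_string())
                .or_default()
                .push(txid.to_string());
        }
        self.tx_outputs.insert(txid.to_string(), outs);
    }

    /// Outputs with more than one potential spend, sorted by id.
    pub fn branching_outputs(&self) -> Vec<String> {
        let mut v: Vec<String> = self
            .output_spends
            .iter()
            .filter(|(_, spends)| spends.len() > 1)
            .map(|(o, _)| o.clone())
            .collect();
        v.sort();
        v
    }
}
```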

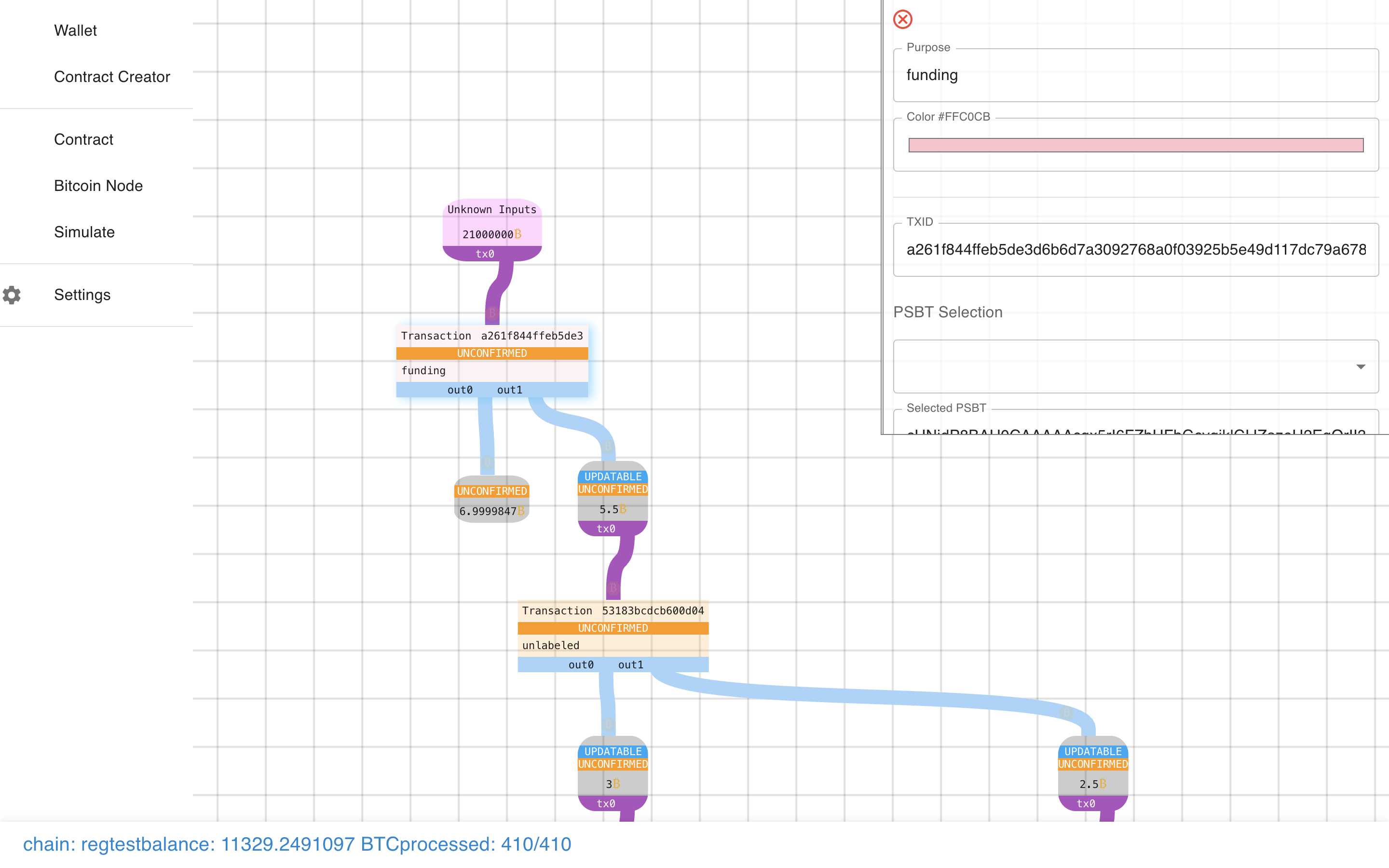

If we click on a transaction we can learn more about it.


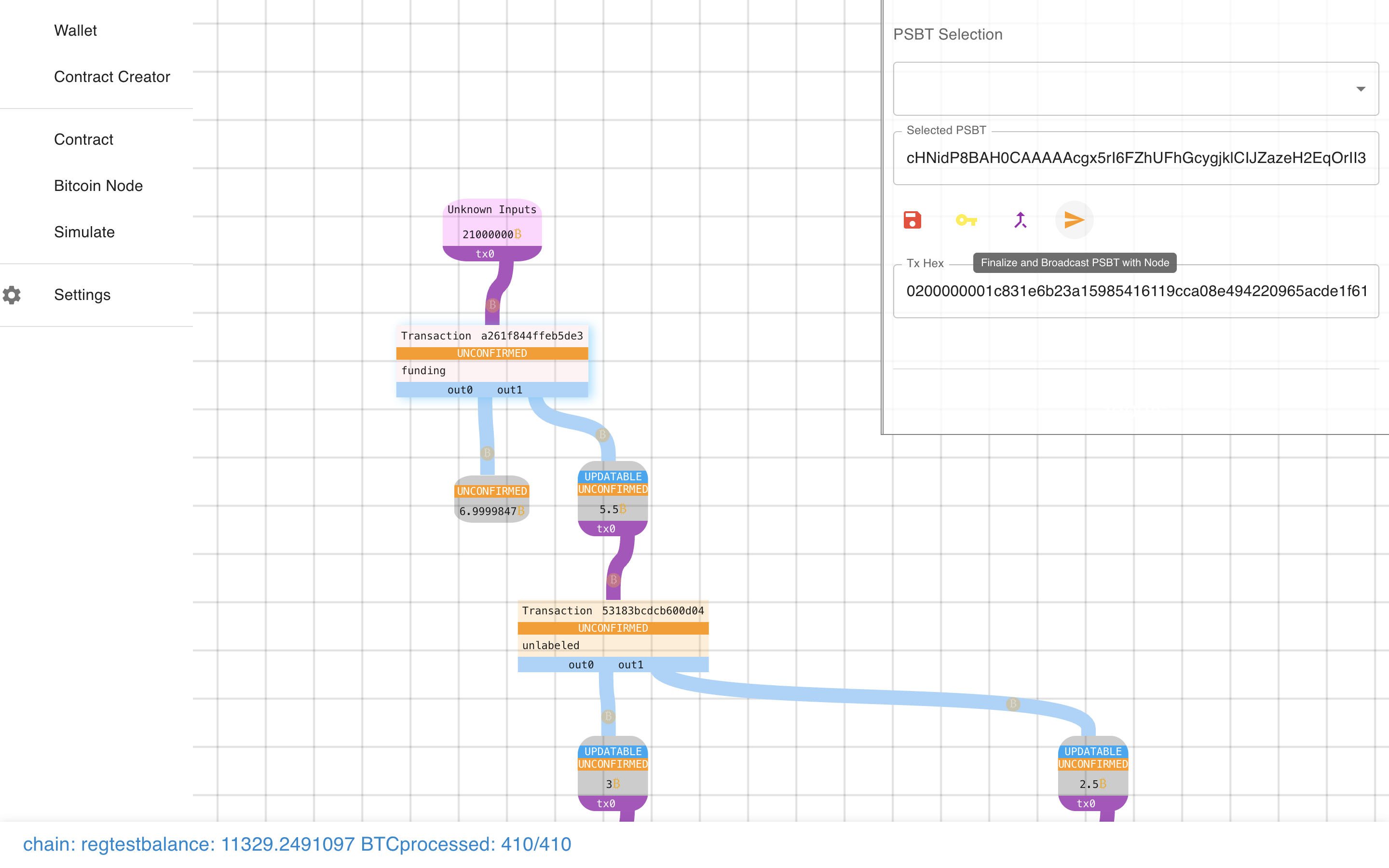

We even have some actions that we can take, like sending it to the network.


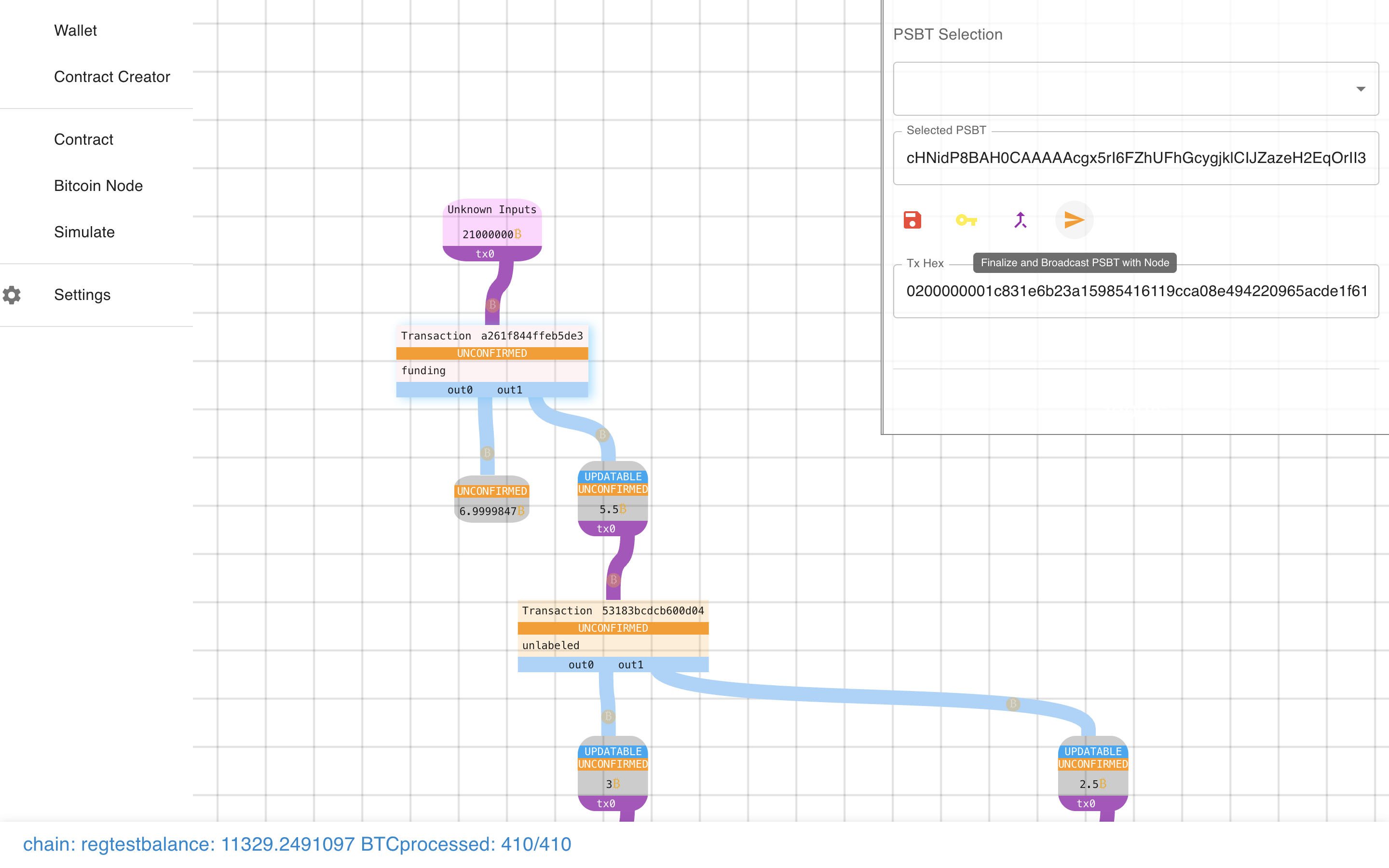

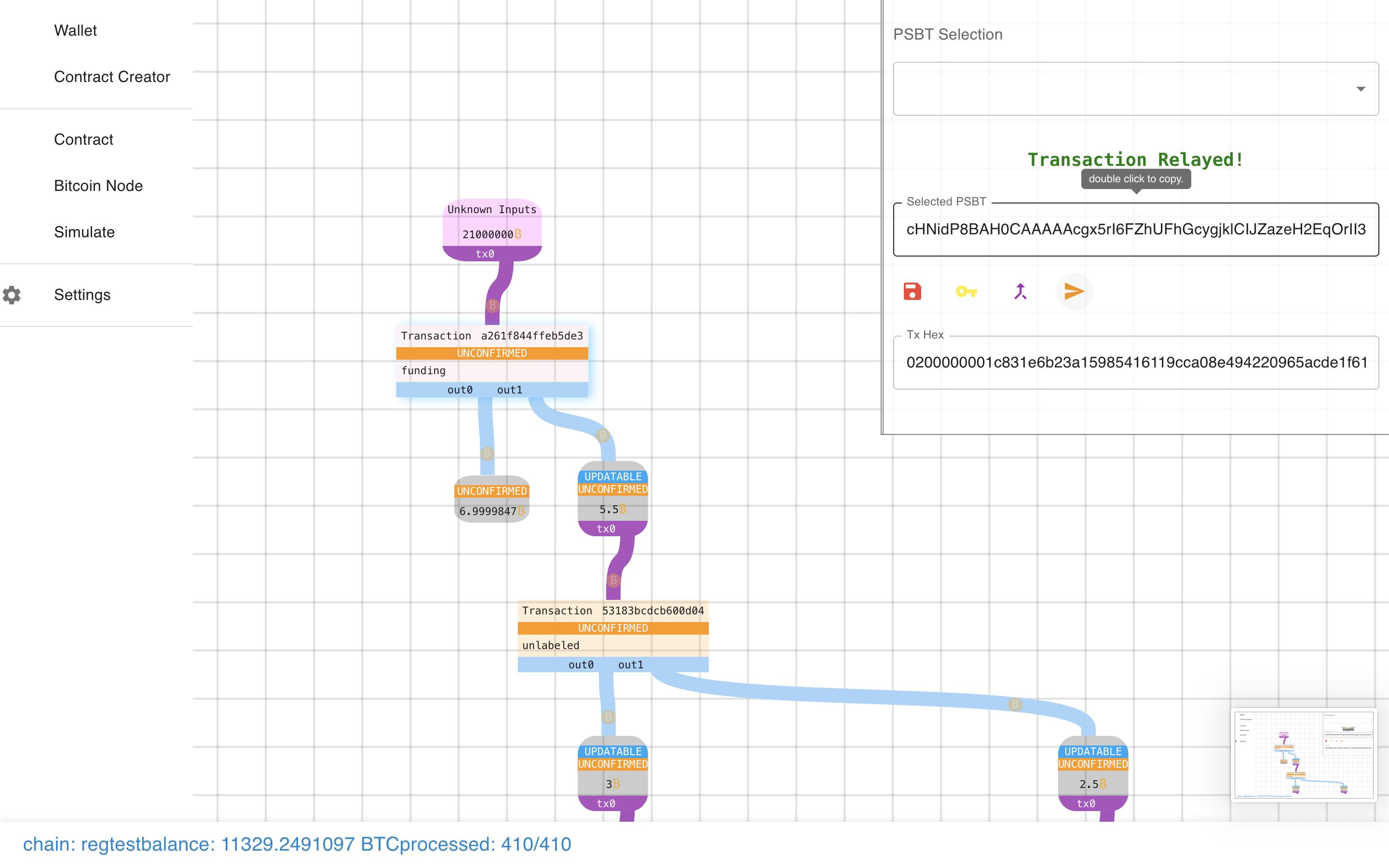

Let’s try it…


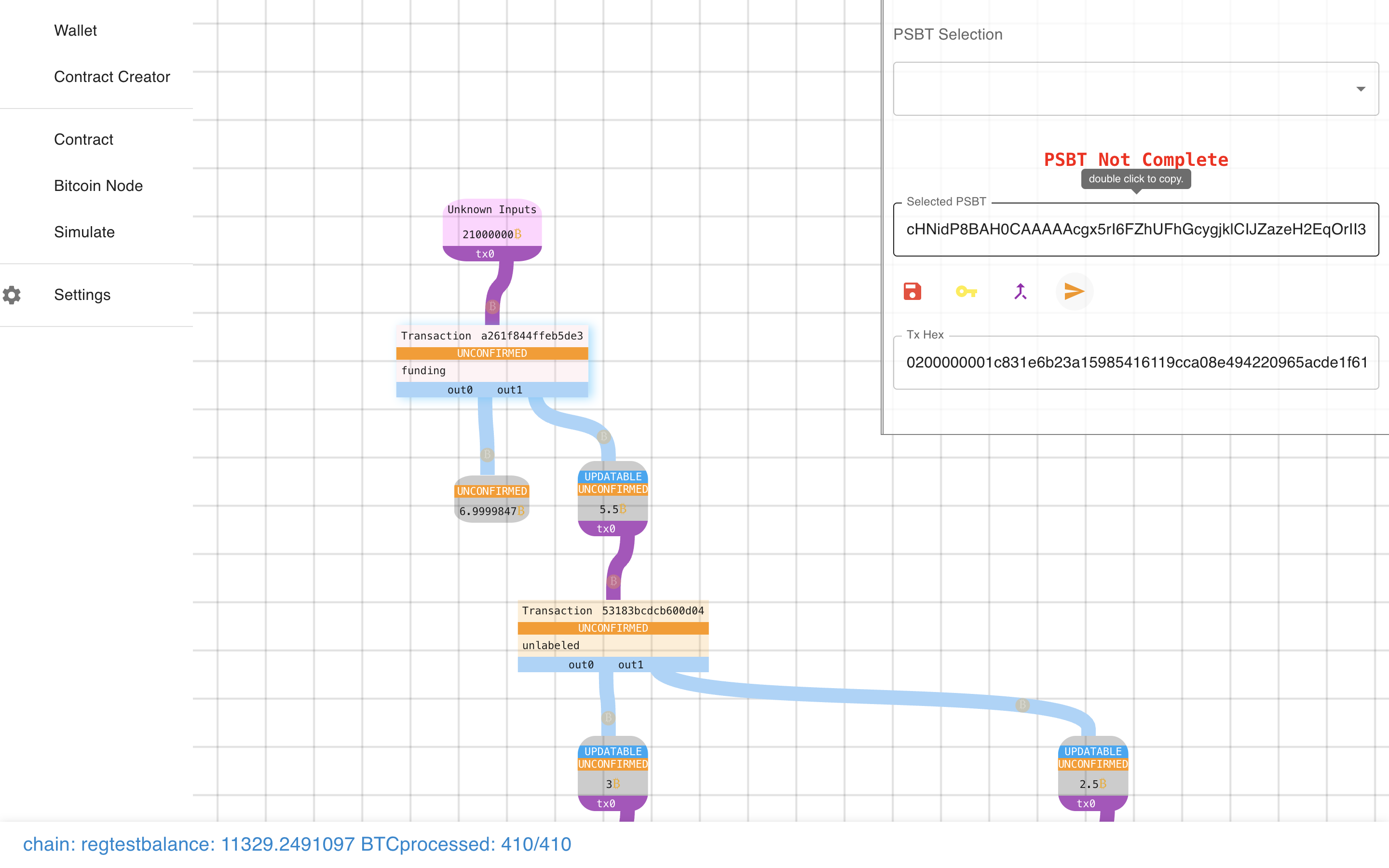

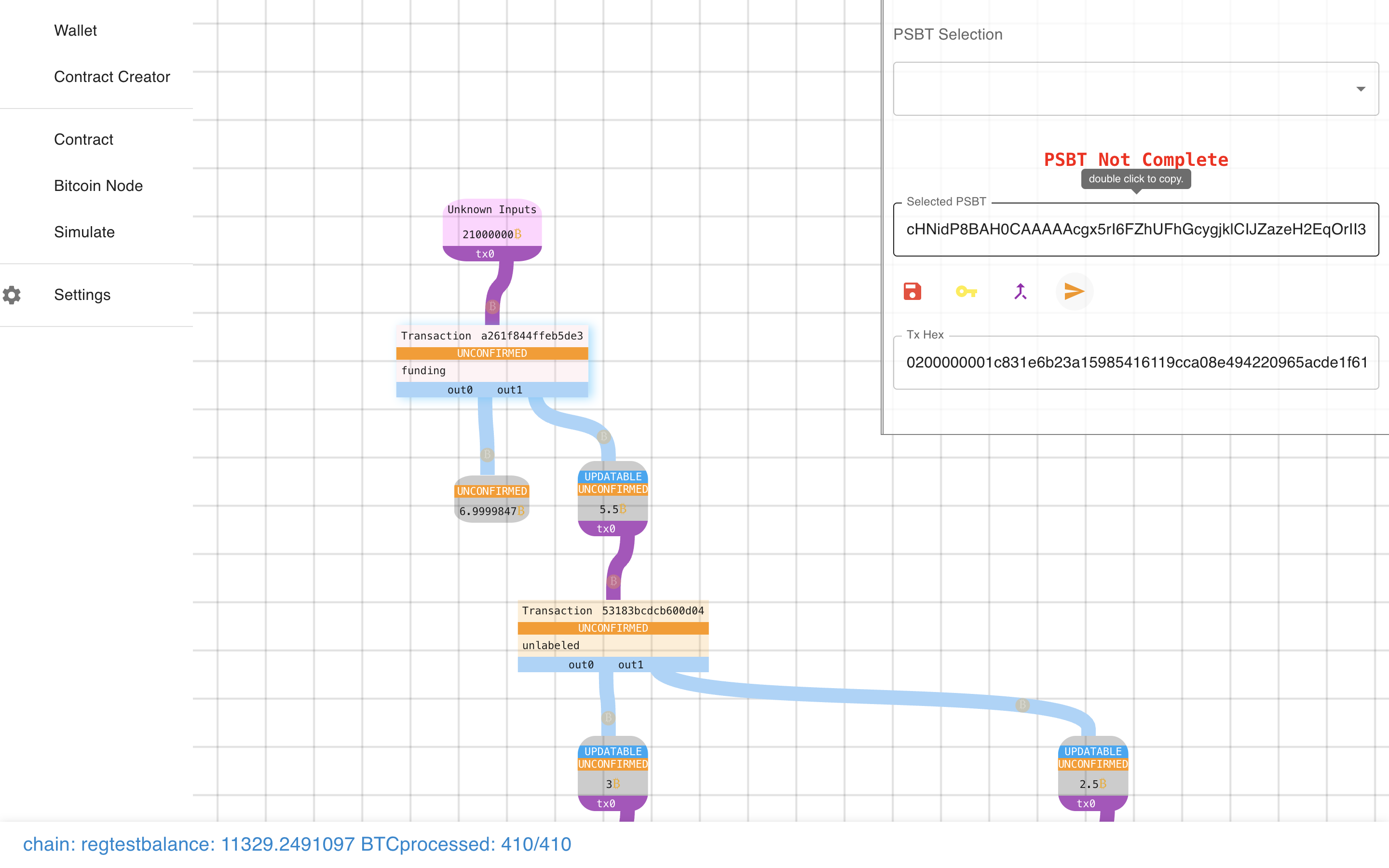

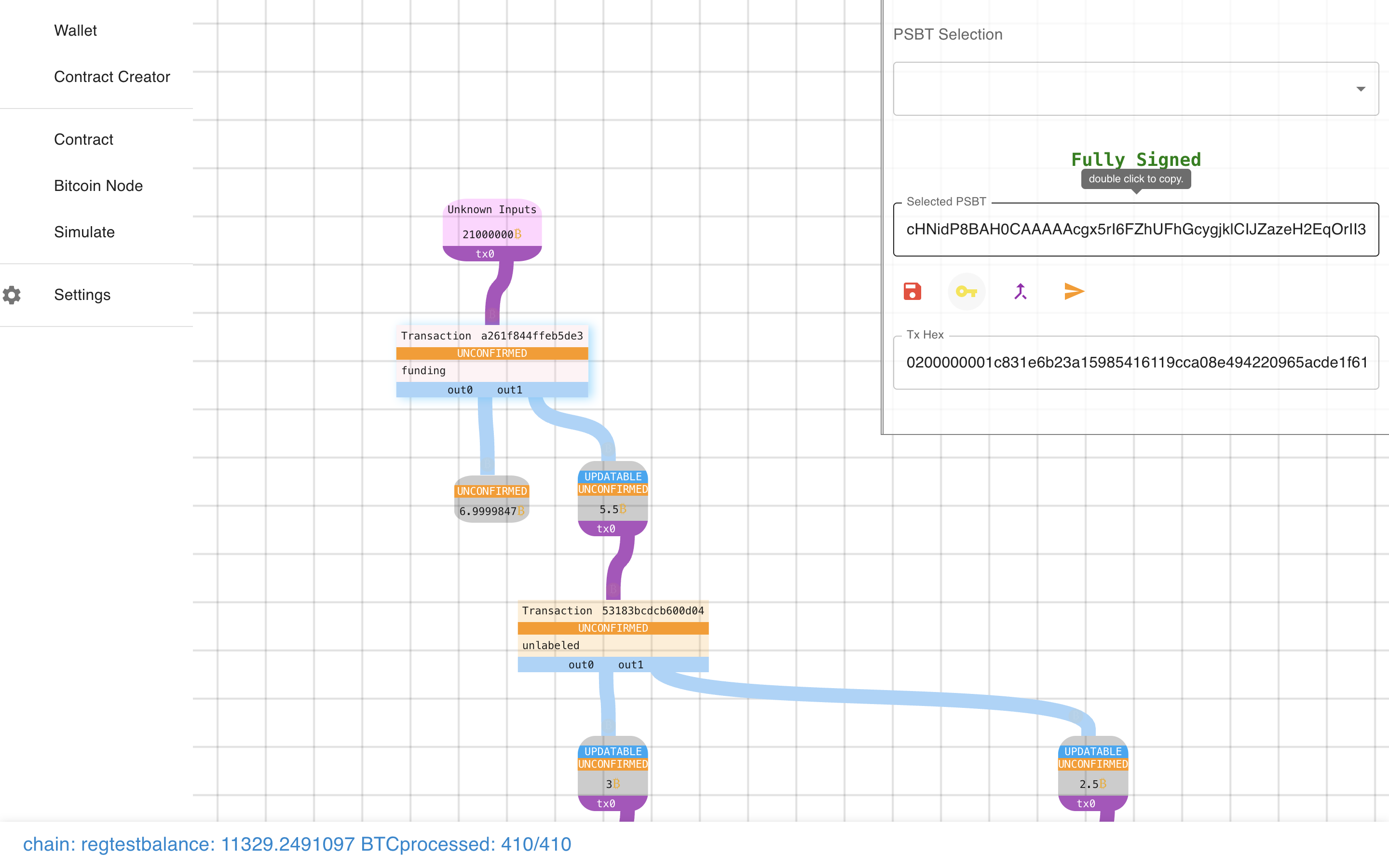

Oops! We need to sign it first…


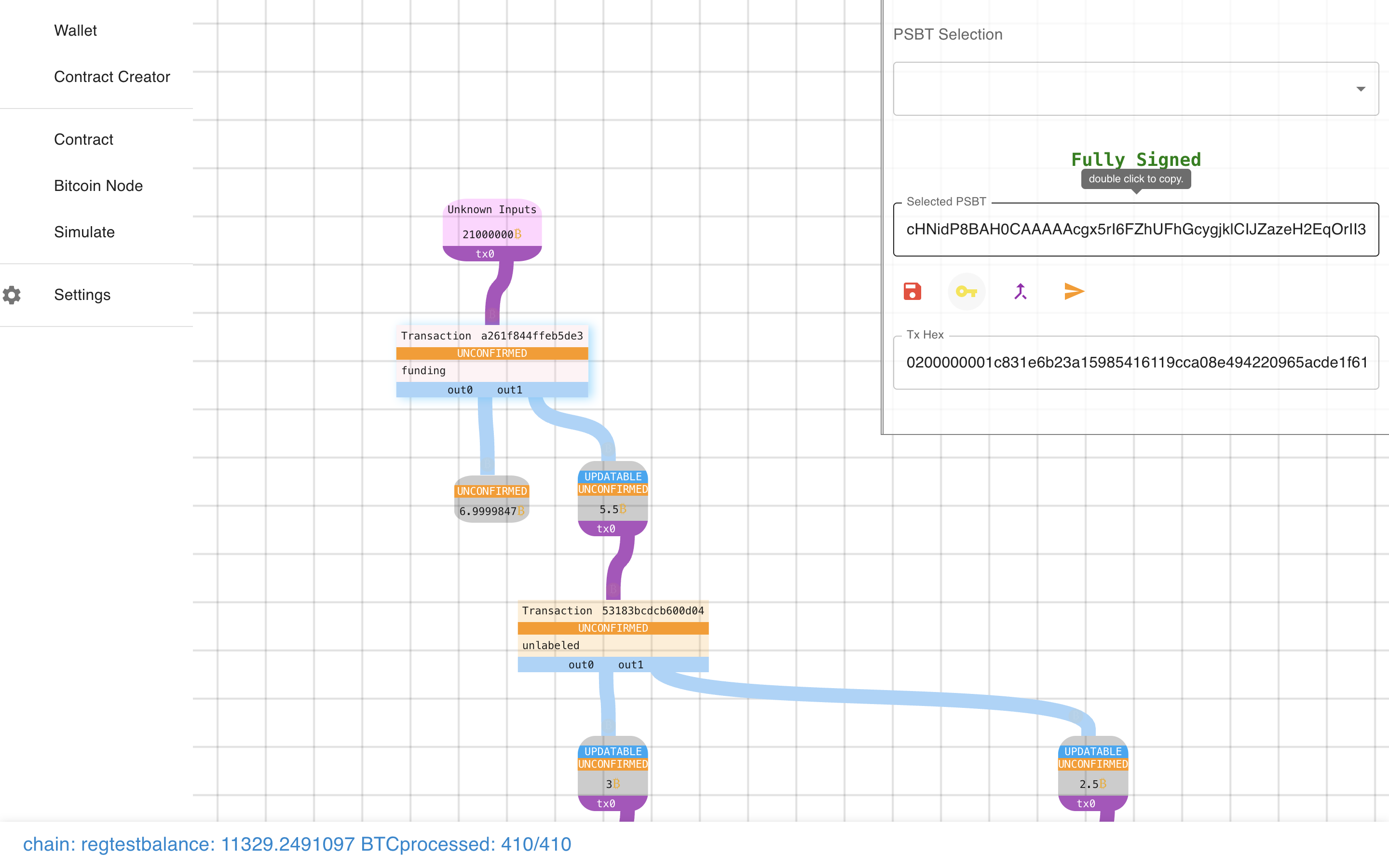

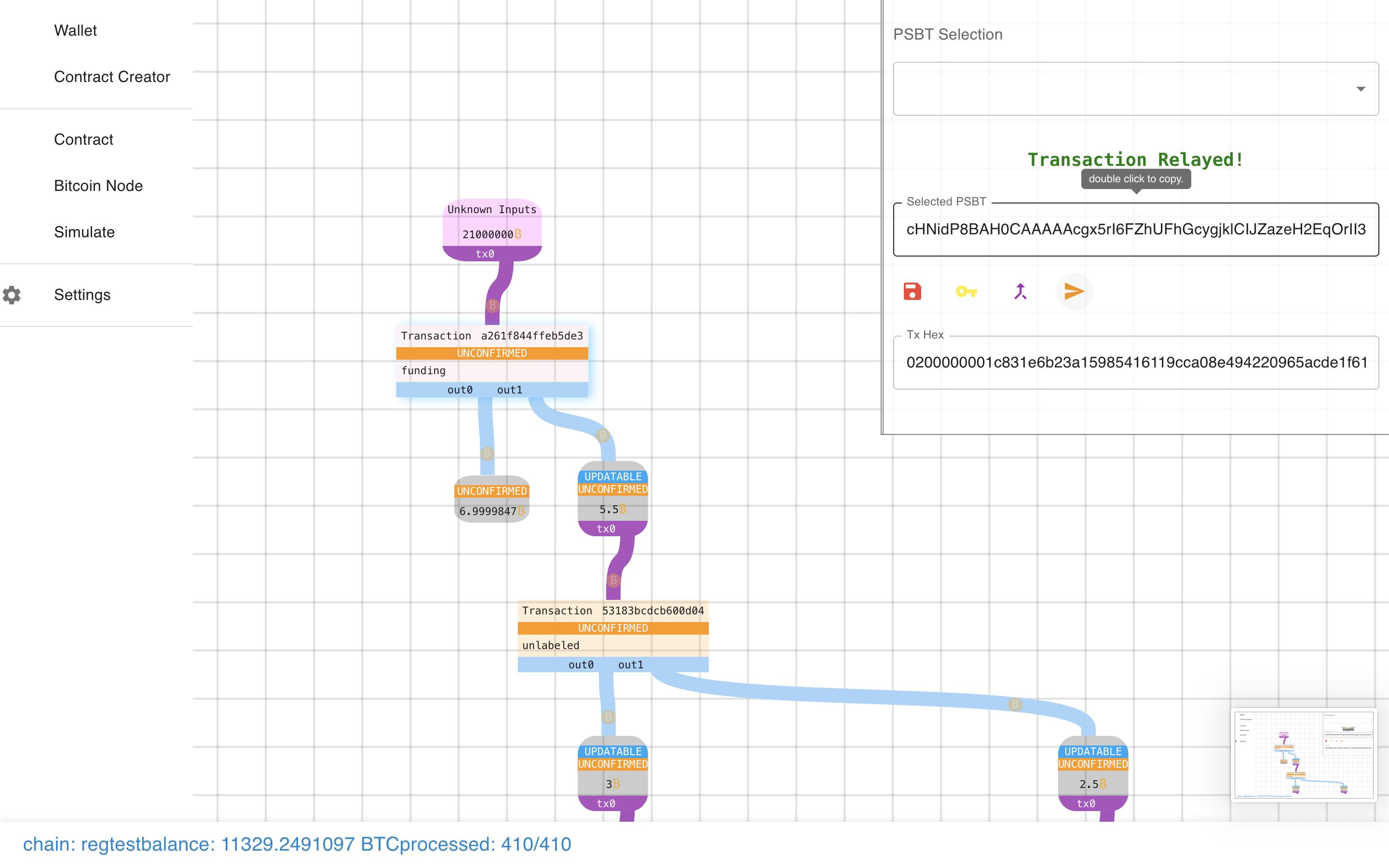

And then we can send it.


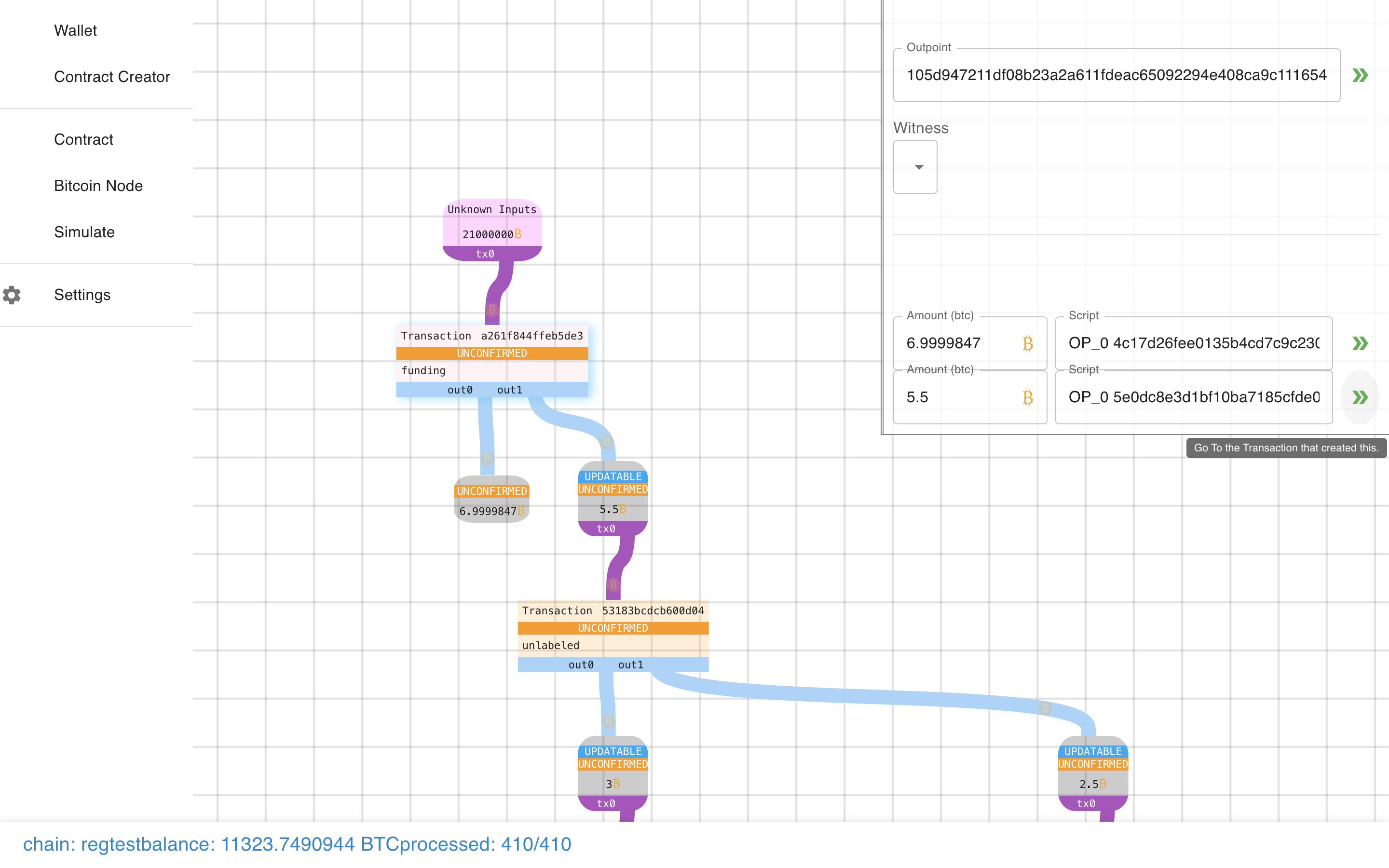

What other buttons do we have? What’s this do?


It teleports us to the output we are creating!


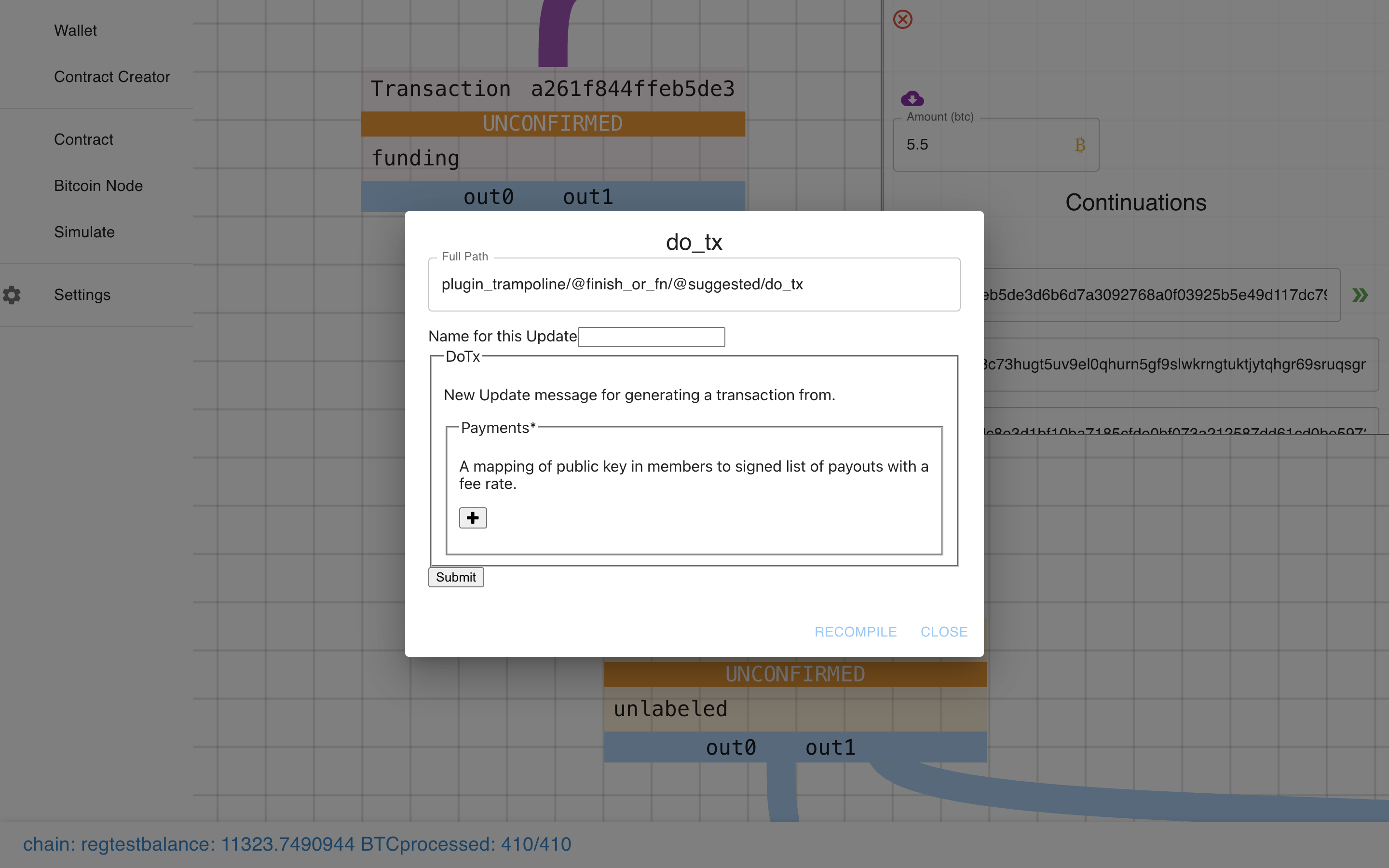

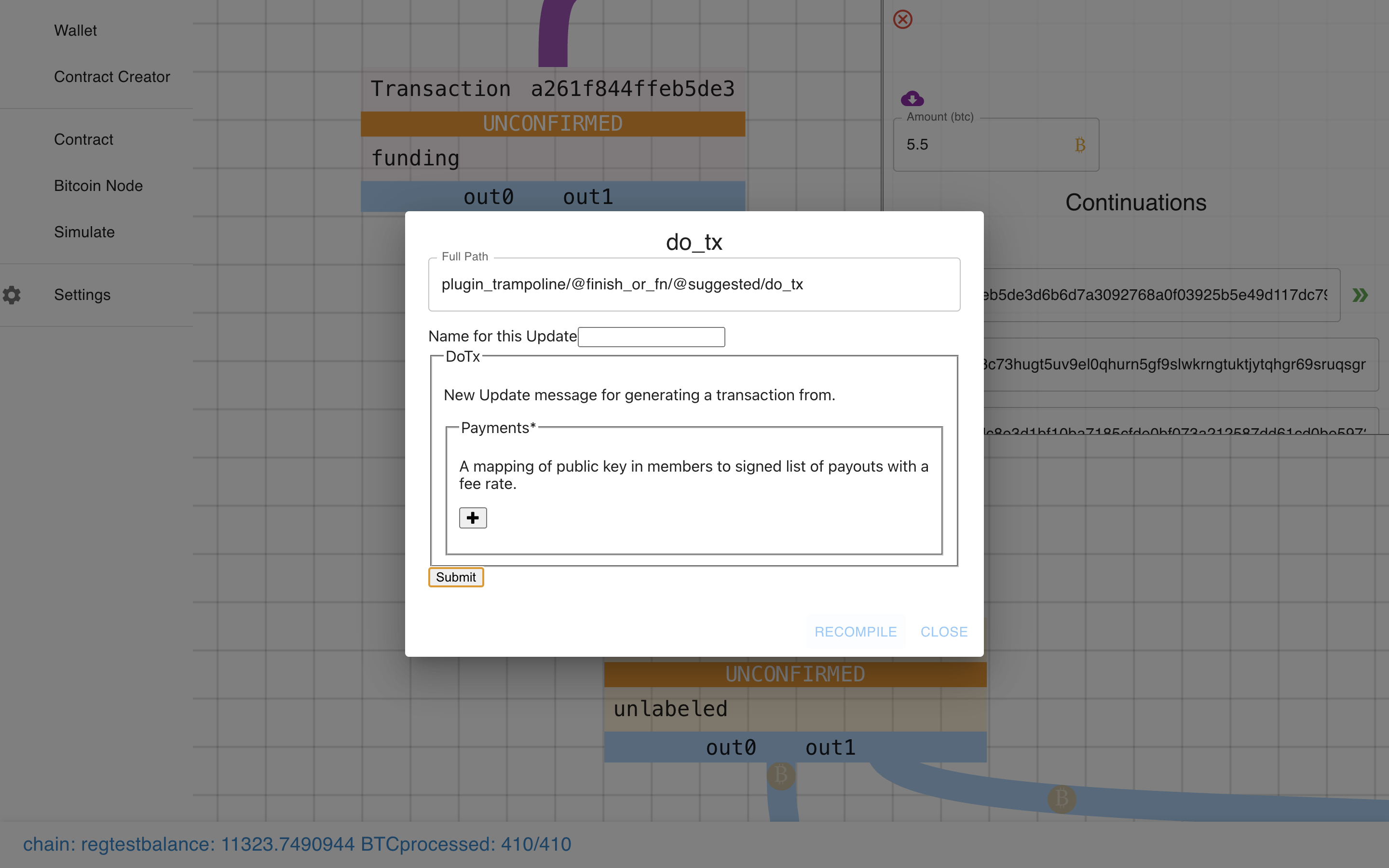

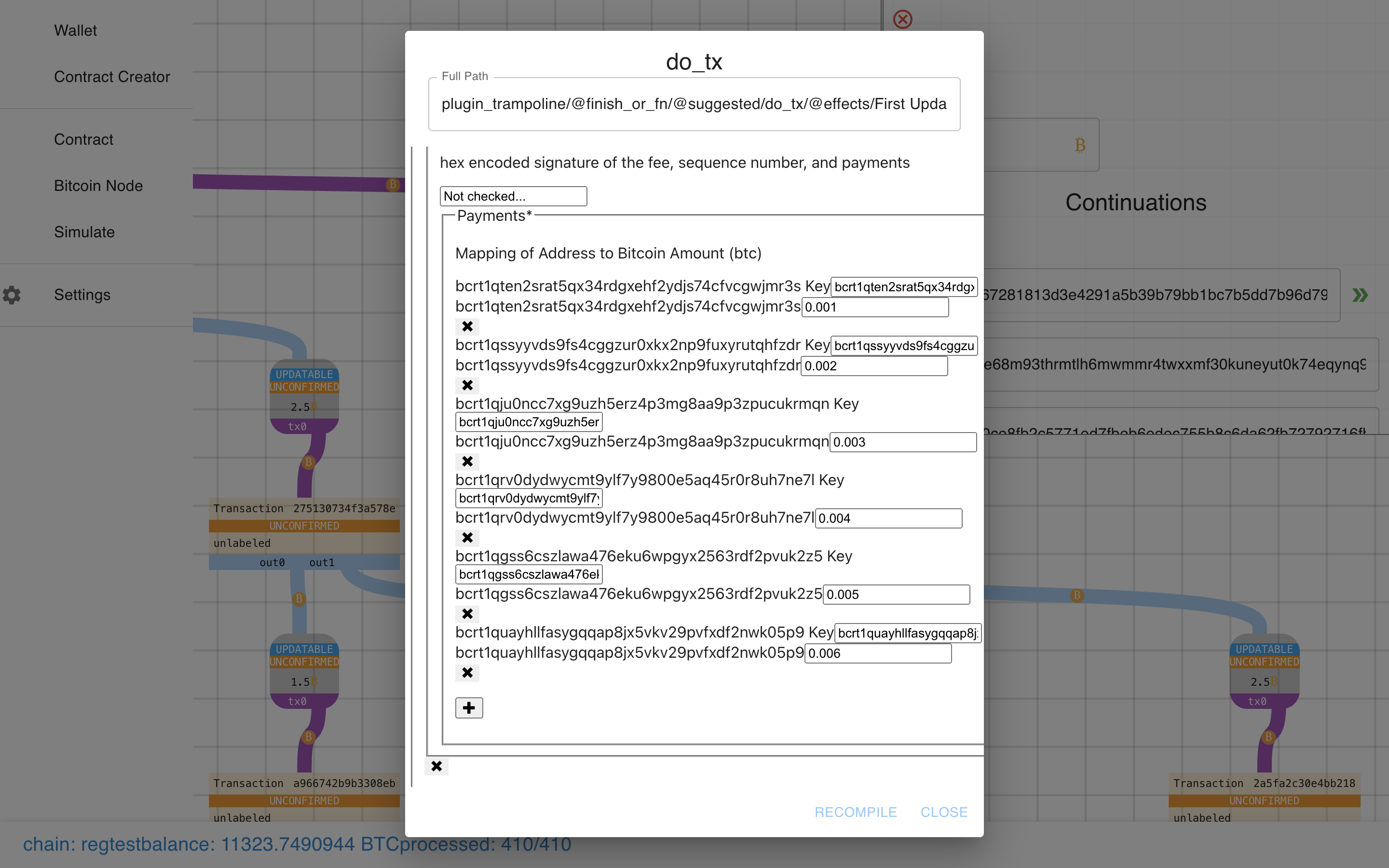

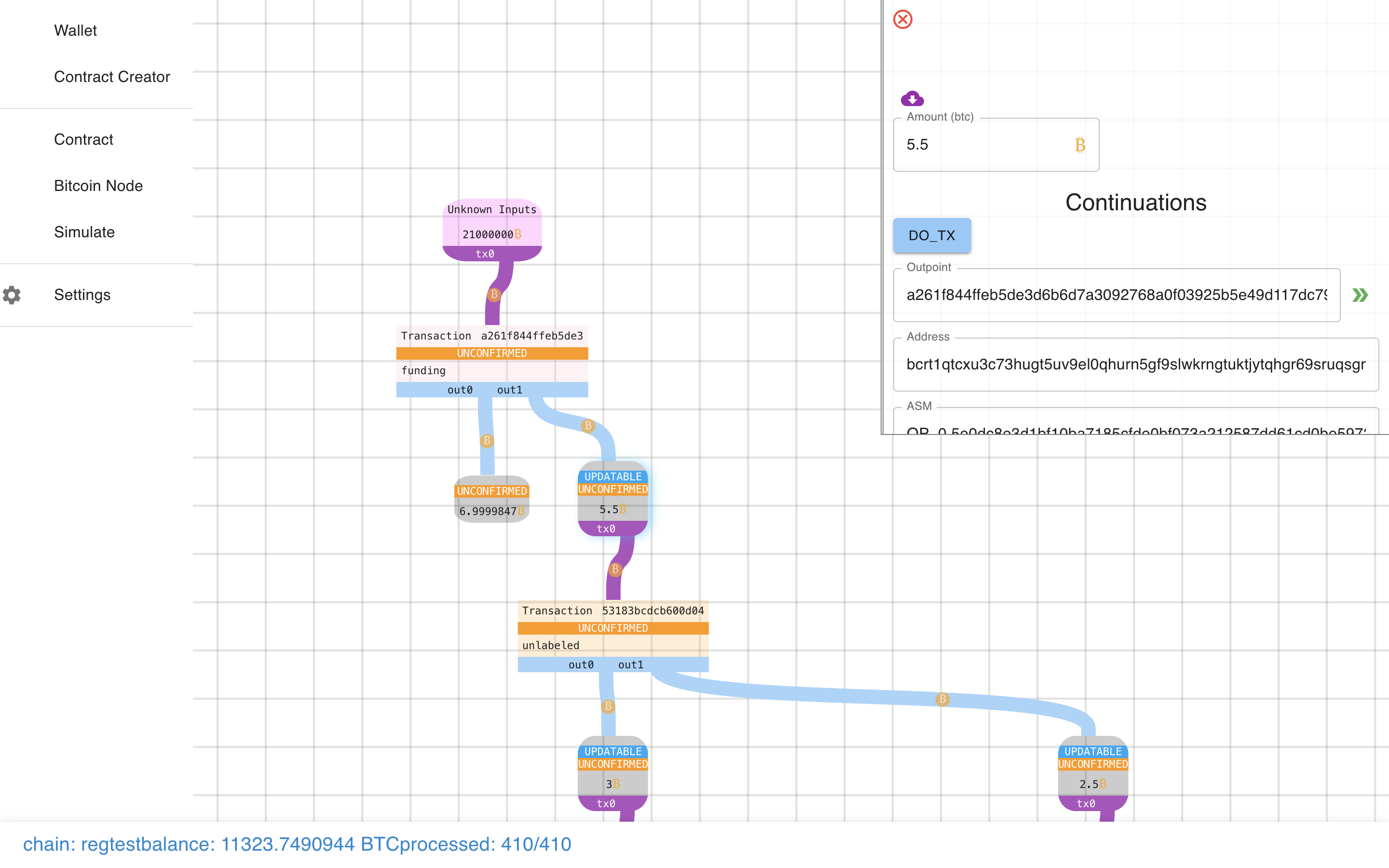

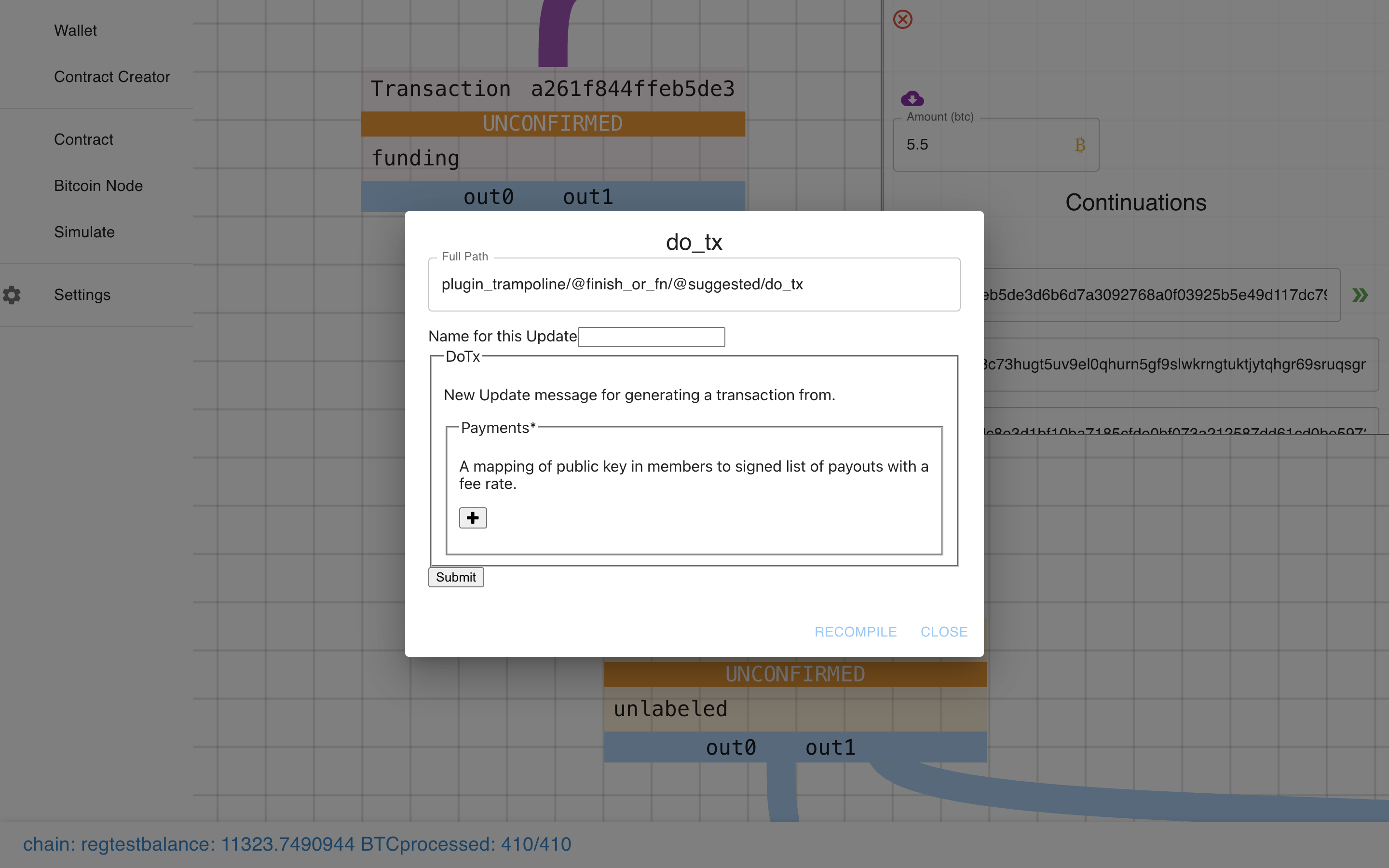

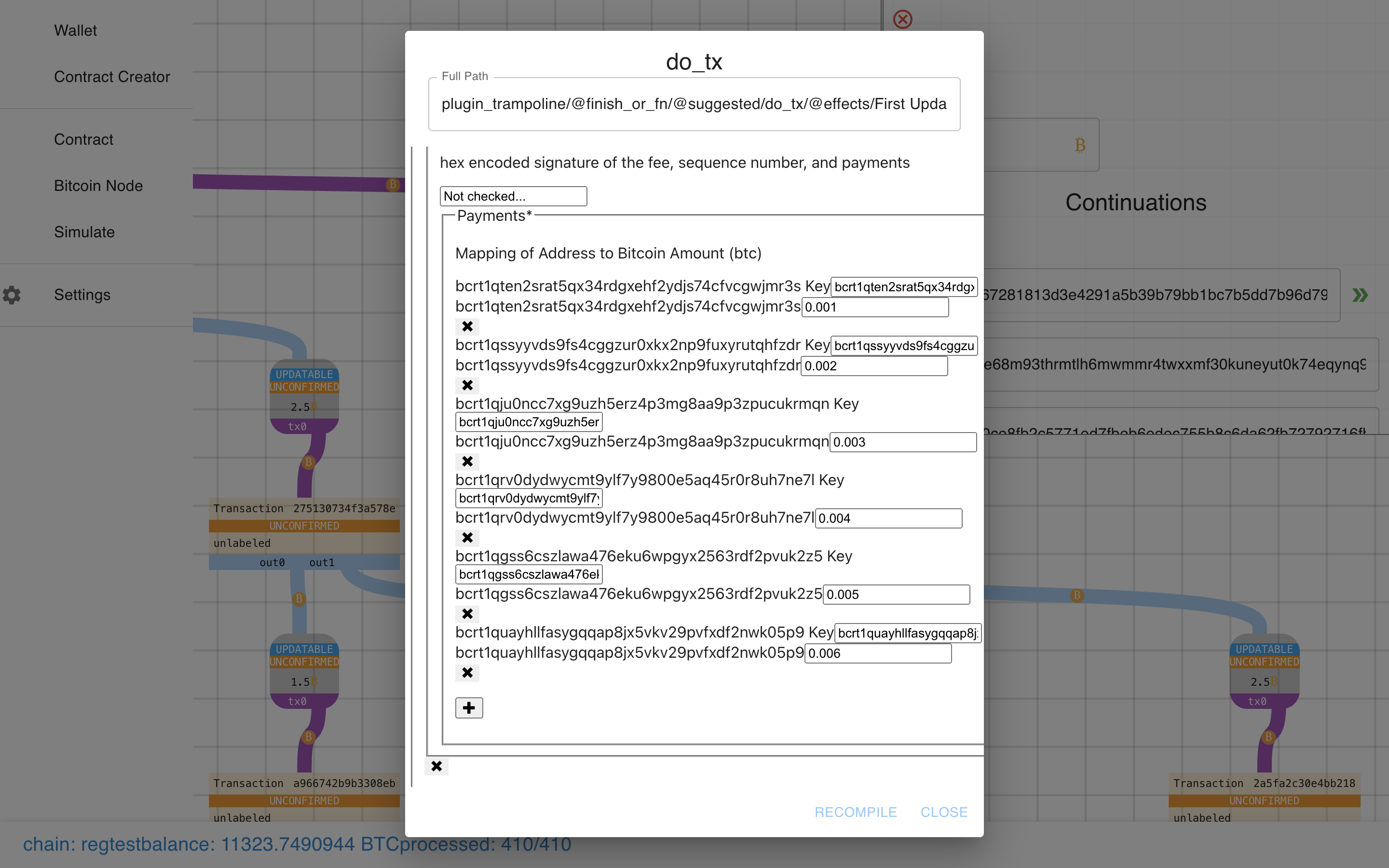

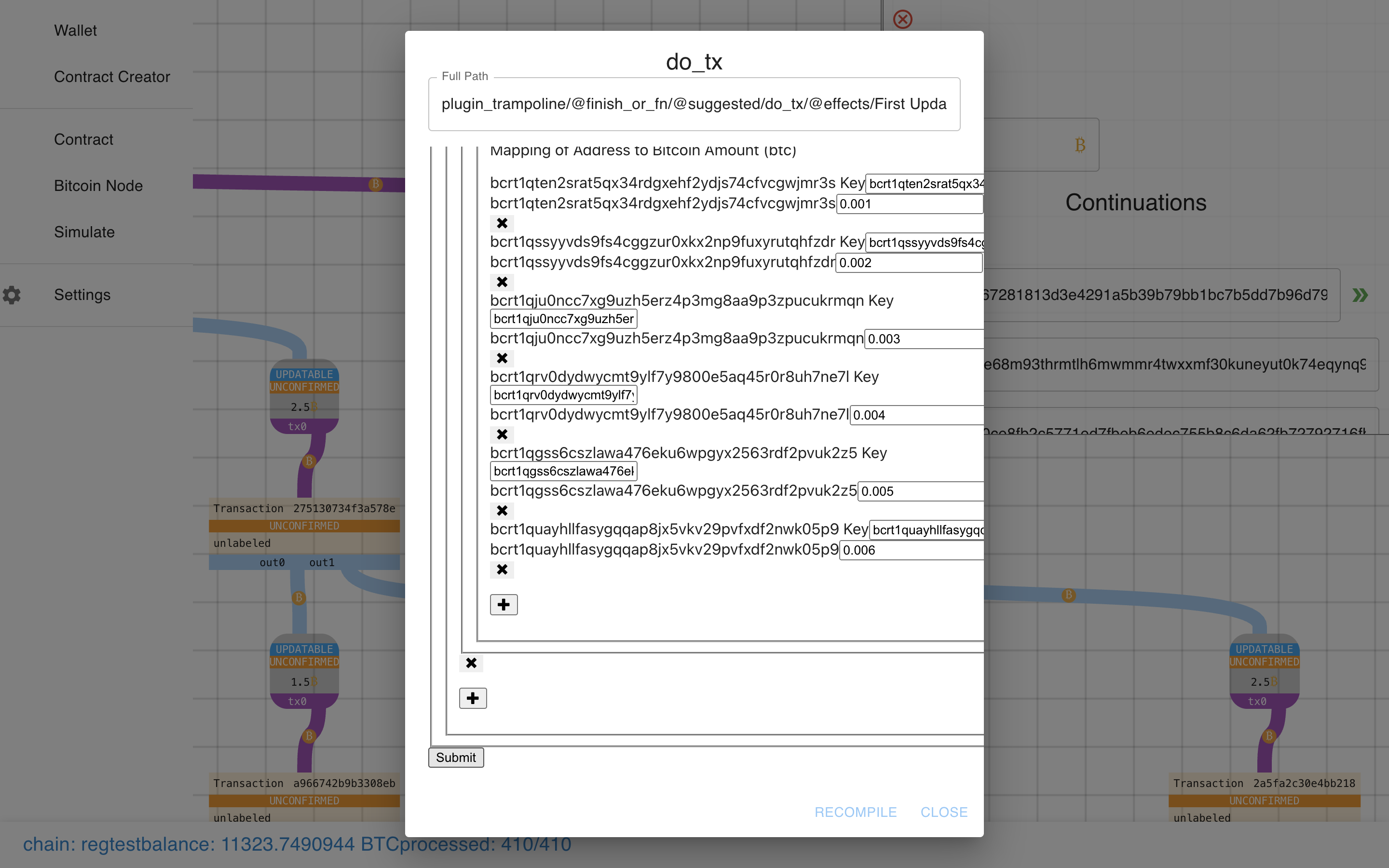

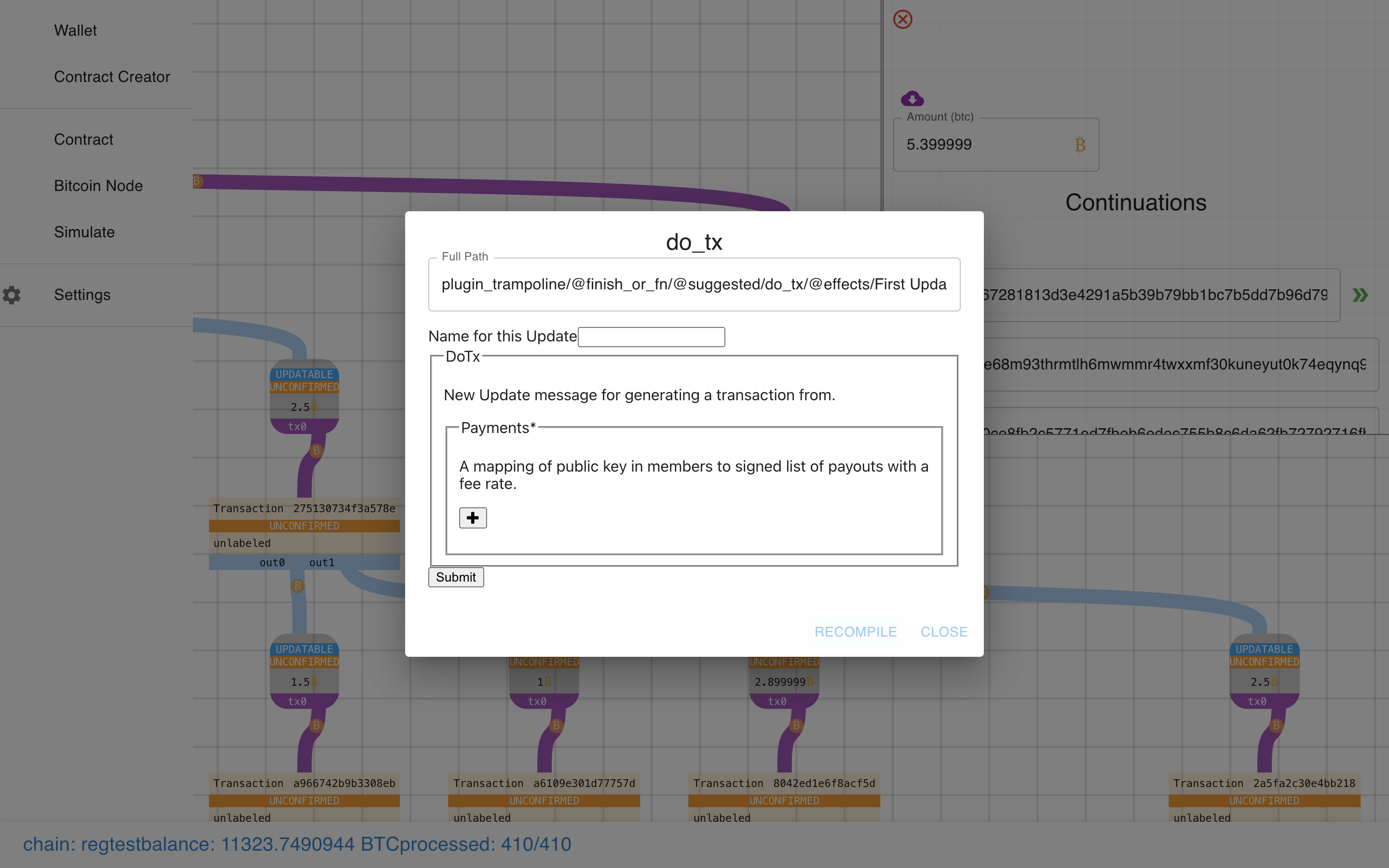

Notice how the output is marked “Updatable”, and there is also a “DO_TX” button (corresponding to the DO_TX in the Payment Pool). Let’s click that…


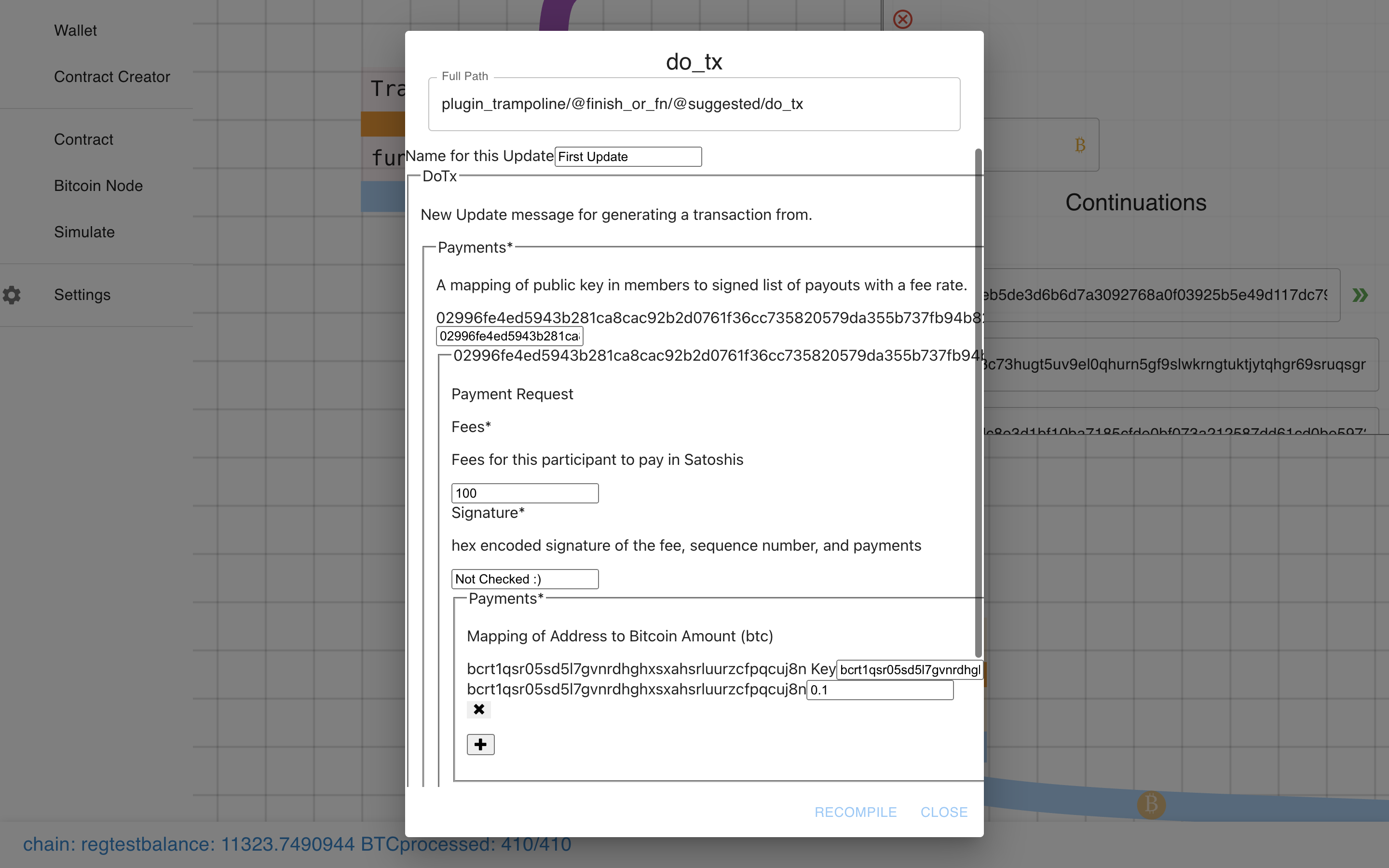

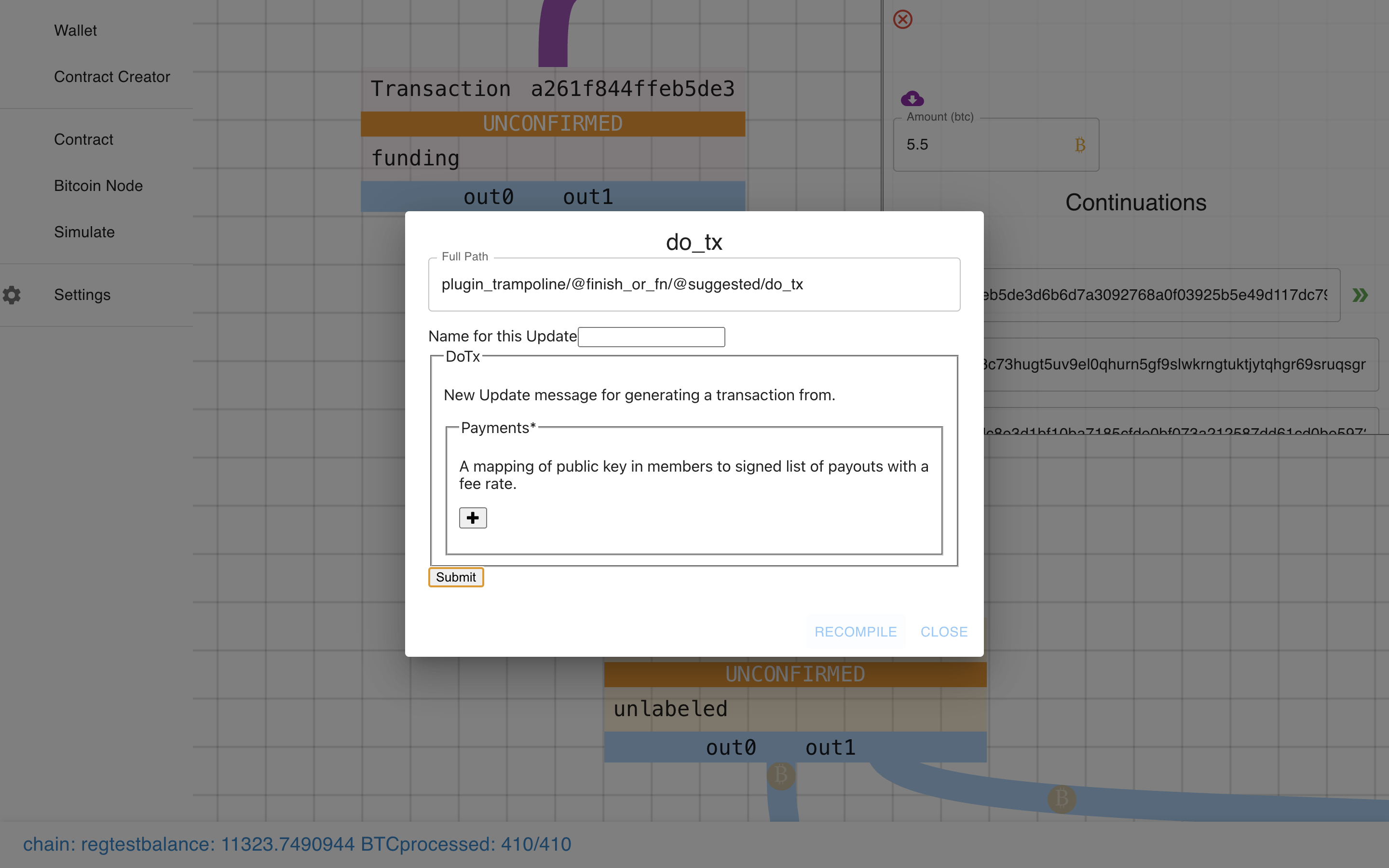

Ooooh. It prompts us with a form to do the transaction!


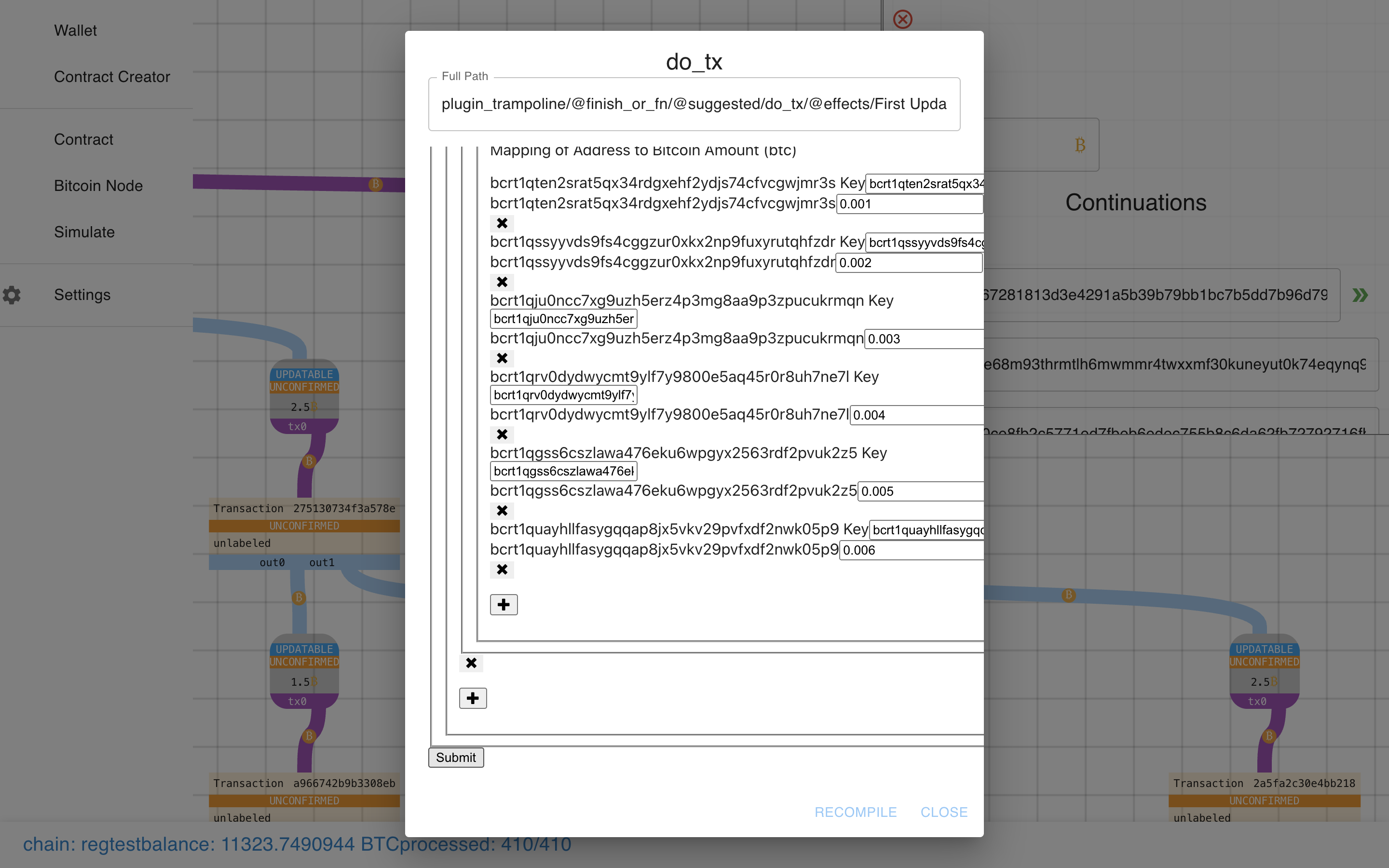

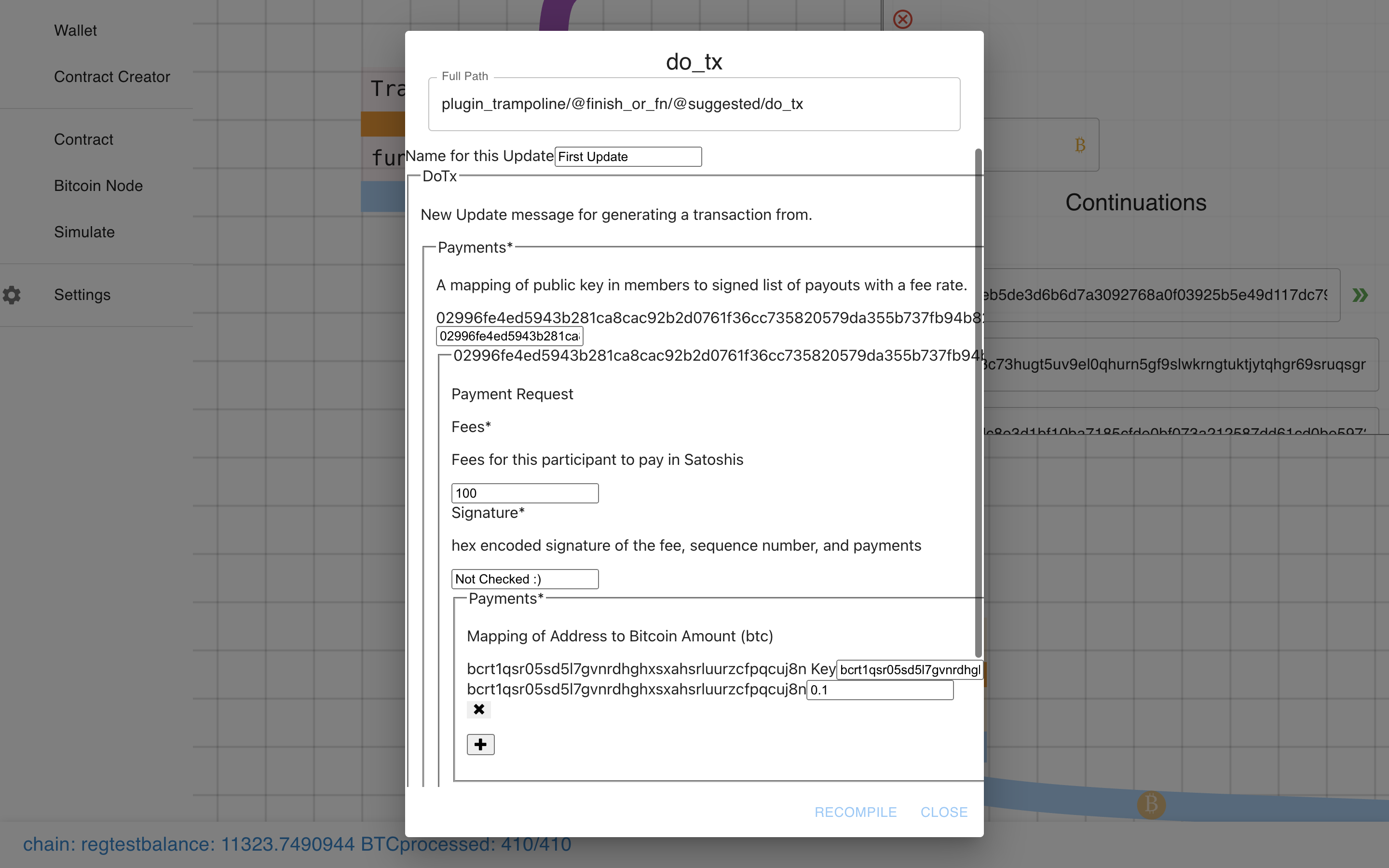

Ok, let’s fill this sucker out…


Click submit, then recompile (separate actions in case we want to make multiple “moves” before recompiling).


I really need to fix this bug…


Voila!


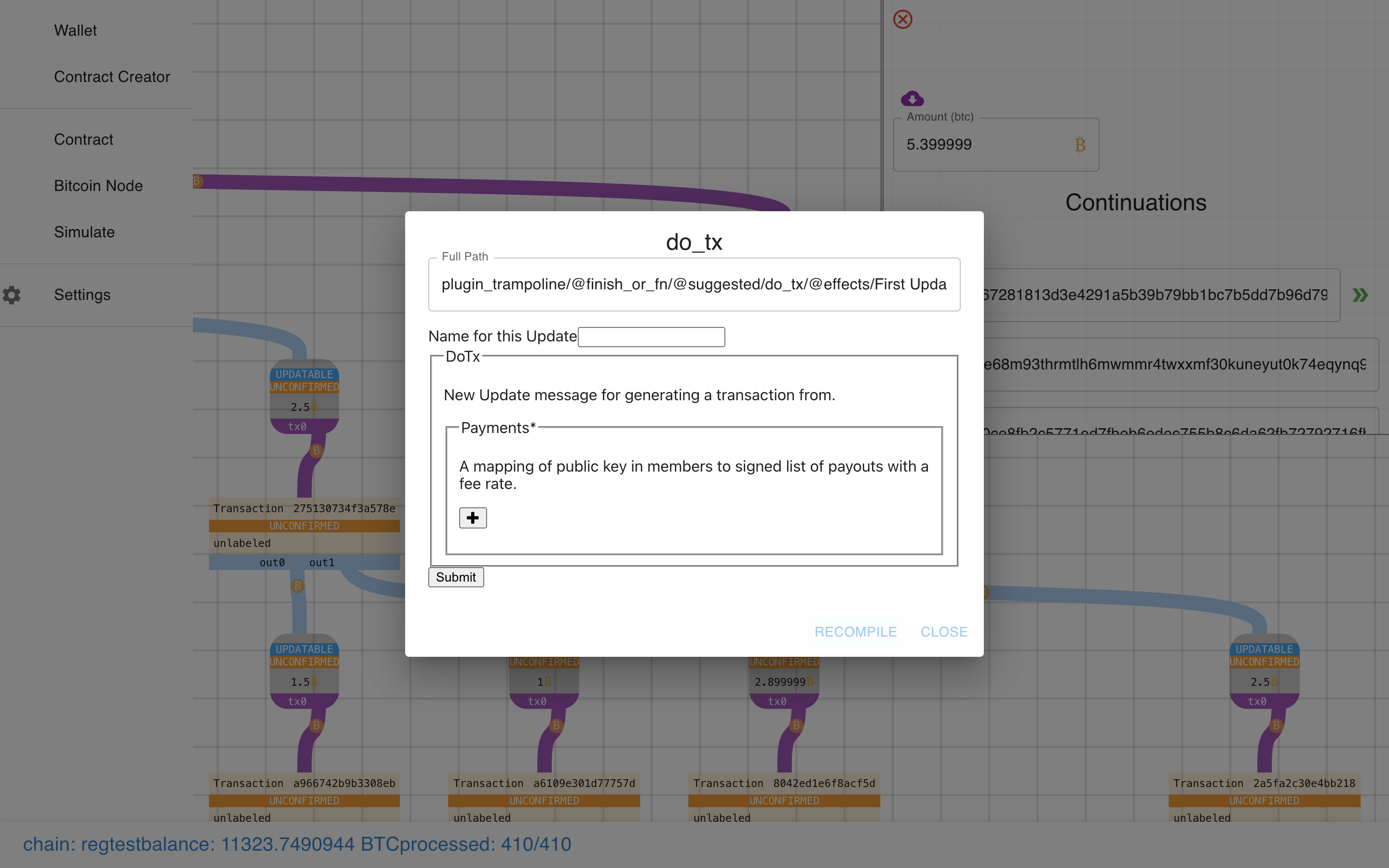

As you can see, the original graph is intact and we’ve augmented onto it the new state transition.

The new part has our 0.1 BTC Spend + the re-creation of the Payment Pool with less funds.


Ok, let’s go nuts and do another state transition off of the first one. This time with more payouts!


Submit…


And Recompile…


I skipped showing you the bug this time.


Now you can see two state transitions! And because we used more than one payout, we can see some congestion control at work.

It works! It all really, really works!
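The congestion control here comes from committing to payouts through a tree of transactions instead of one big transaction, so only a small root transaction has to confirm up front and the rest can be expanded later. The sketch below is a generic radix-tree illustration, not the Payment Pool's actual code: `tree_levels` (a hypothetical name) counts how many transactions sit at each level when every transaction fans out to at most `radix` children.

```rust
/// Hypothetical sketch: number of transactions at each level of a payout
/// tree where every transaction pays at most `radix` children.
/// Index 0 is the root, the one transaction that must confirm first.
pub fn tree_levels(n_payouts: usize, radix: usize) -> Vec<usize> {
    assert!(radix >= 2, "radix must be at least 2");
    if n_payouts == 0 {
        return Vec::new();
    }
    let mut levels = Vec::new();
    let mut children = n_payouts;
    loop {
        // Group `children` under ceil(children / radix) parent transactions.
        let parents = (children + radix - 1) / radix;
        levels.push(parents);
        if parents == 1 {
            break;
        }
        children = parents;
    }
    // We built bottom-up; report root first.
    levels.reverse();
    levels
}
```

For example, 17 payouts with radix 4 give levels of 1, 2, and 5 transactions (8 in total), but only the root must be mined before everyone's payout is committed.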

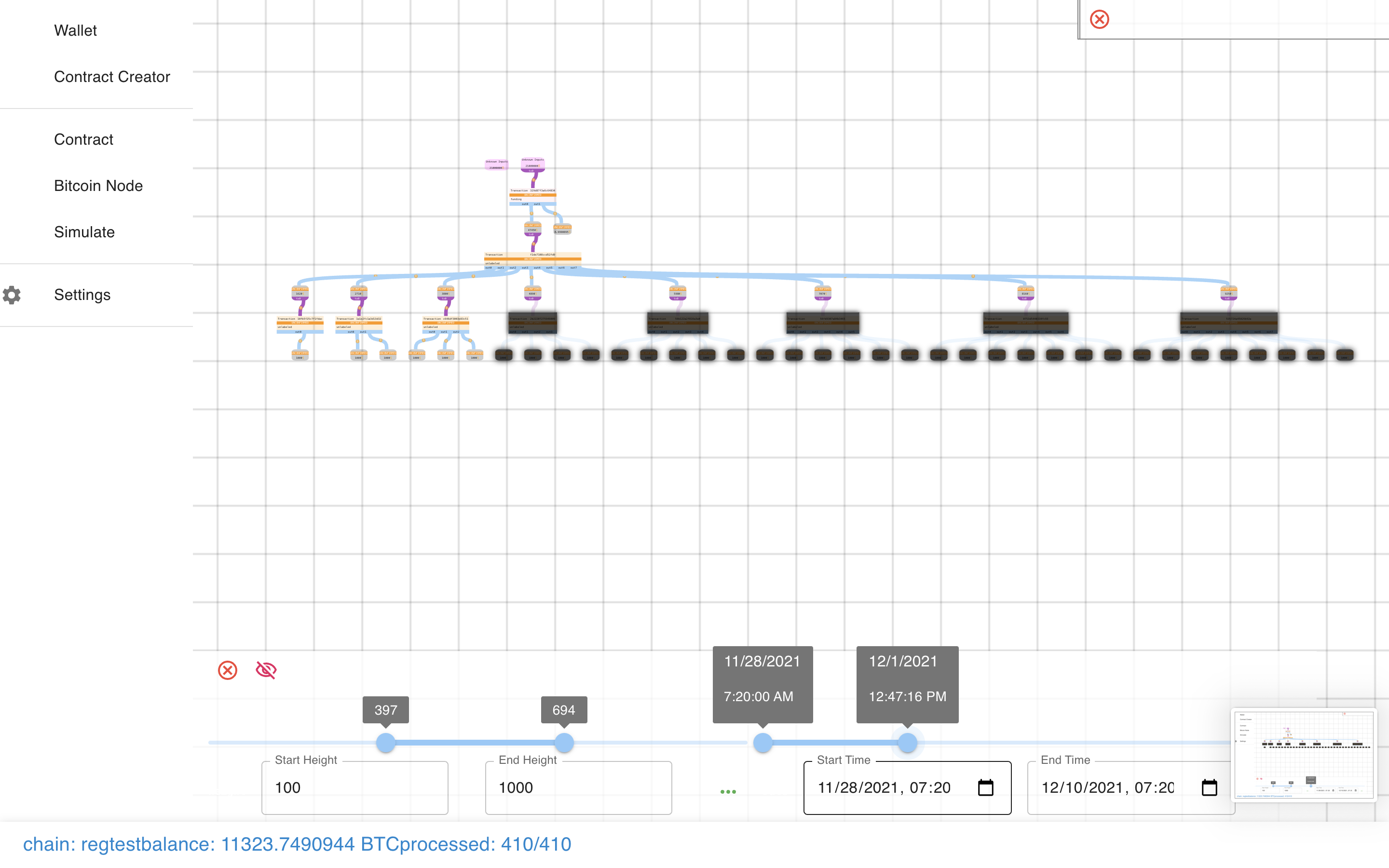

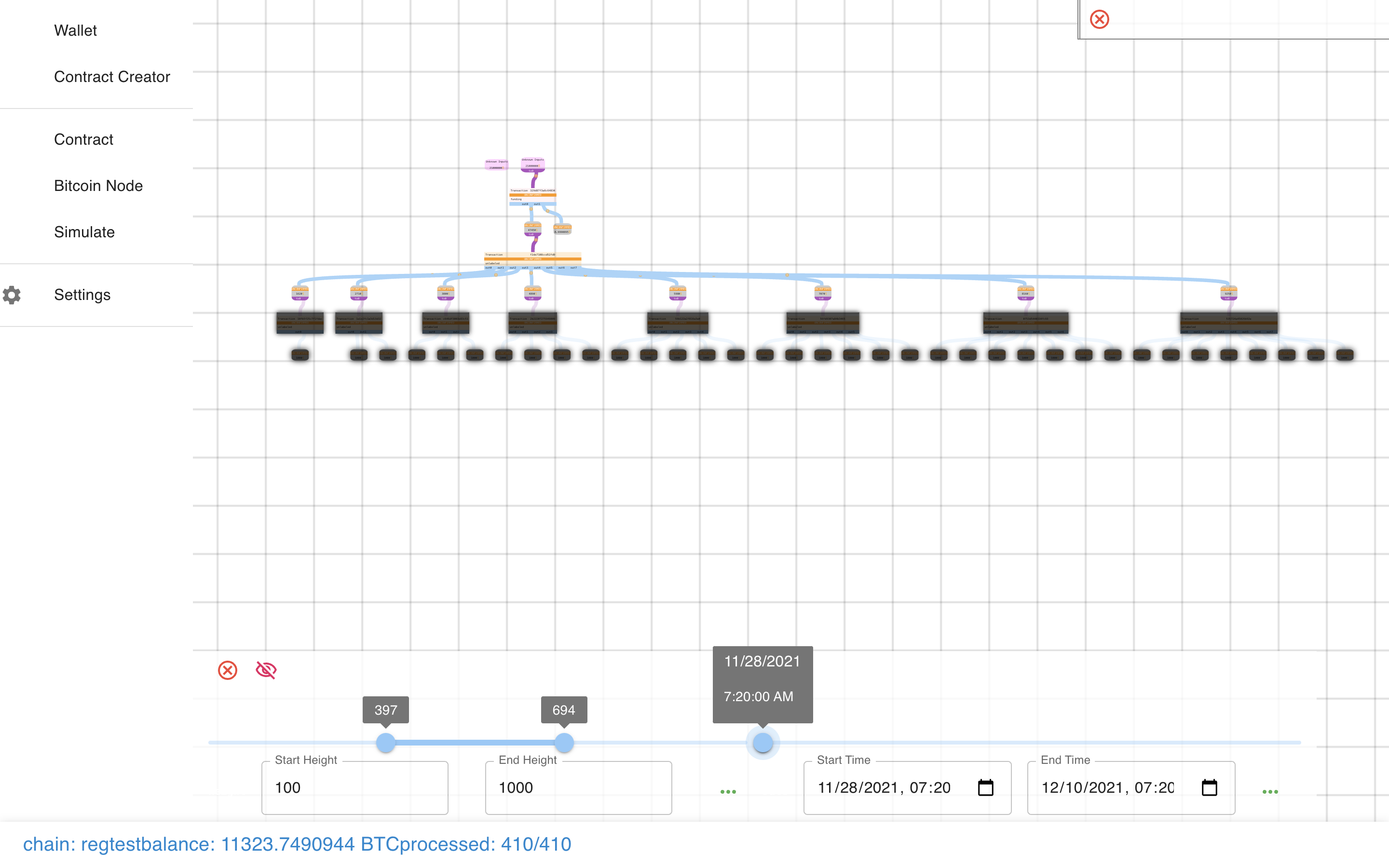

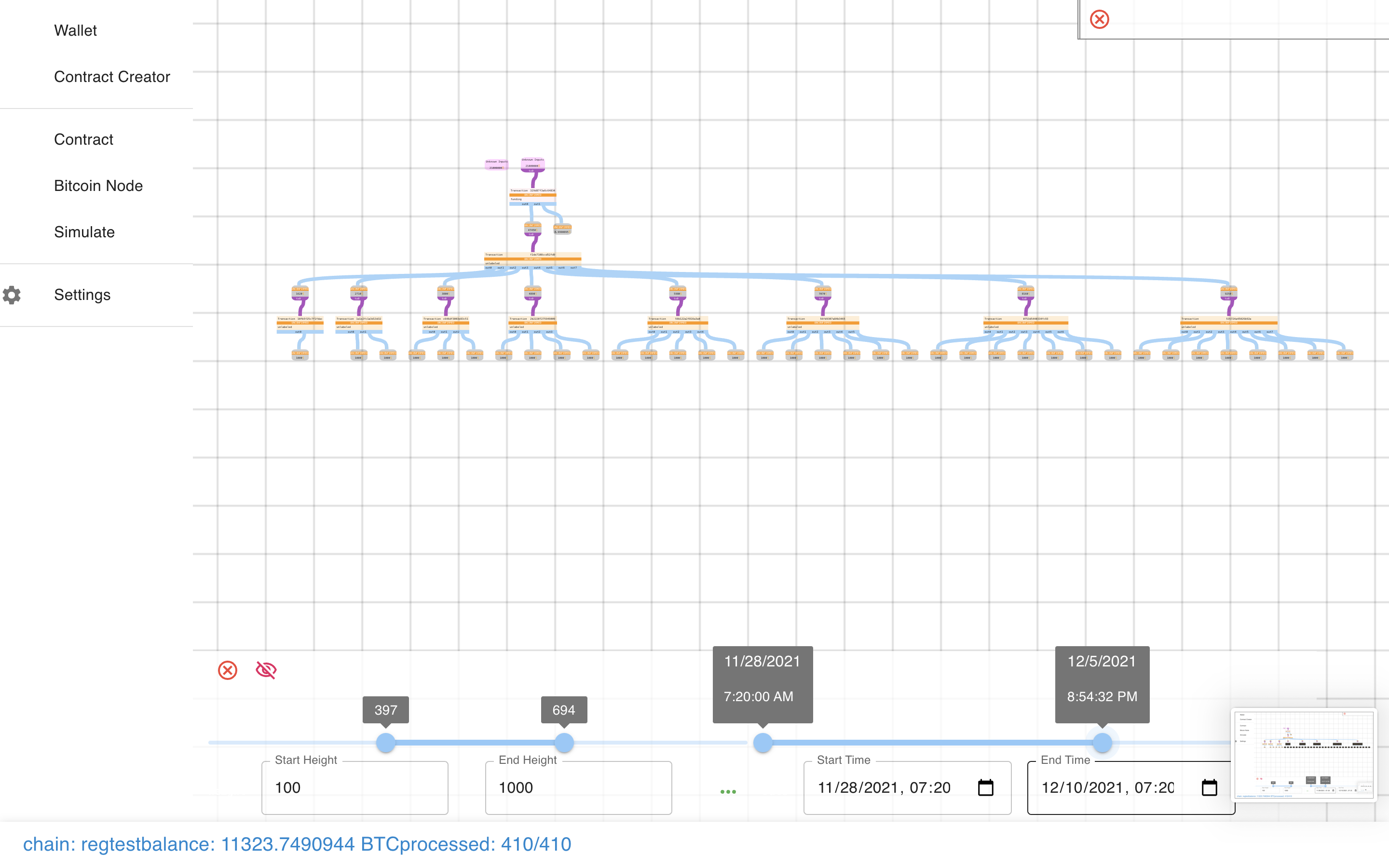

One more thing I can’t show you with this contract is the timing simulator.

This lets you load up a contract (like our Hanukkiah below) and…

Simulate the passing of time (or blocks).


Pretty cool!

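Conceptually, a timing simulator answers “which branches are spendable at this height?” Here is a hedged std-only sketch, assuming each branch is guarded by a single absolute block-height lock (real contracts can also use relative and time-based locks). `Branch` and `available_at` are hypothetical, and a branch counts as available once the simulated height reaches its `unlock_height`.

```rust
/// Hypothetical contract branch guarded by an absolute block-height lock.
#[derive(Debug, Clone)]
pub struct Branch {
    pub name: String,
    pub unlock_height: u32,
}

/// Names of the branches available at `height`, earliest unlock first.
pub fn available_at(branches: &[Branch], height: u32) -> Vec<String> {
    let mut open: Vec<&Branch> = branches
        .iter()
        .filter(|b| b.unlock_height <= height)
        .collect();
    open.sort_by_key(|b| b.unlock_height);
    open.into_iter().map(|b| b.name.clone()).collect()
}
```

Stepping `height` forward and calling `available_at` at each step is the essence of “simulating the passing of blocks”.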

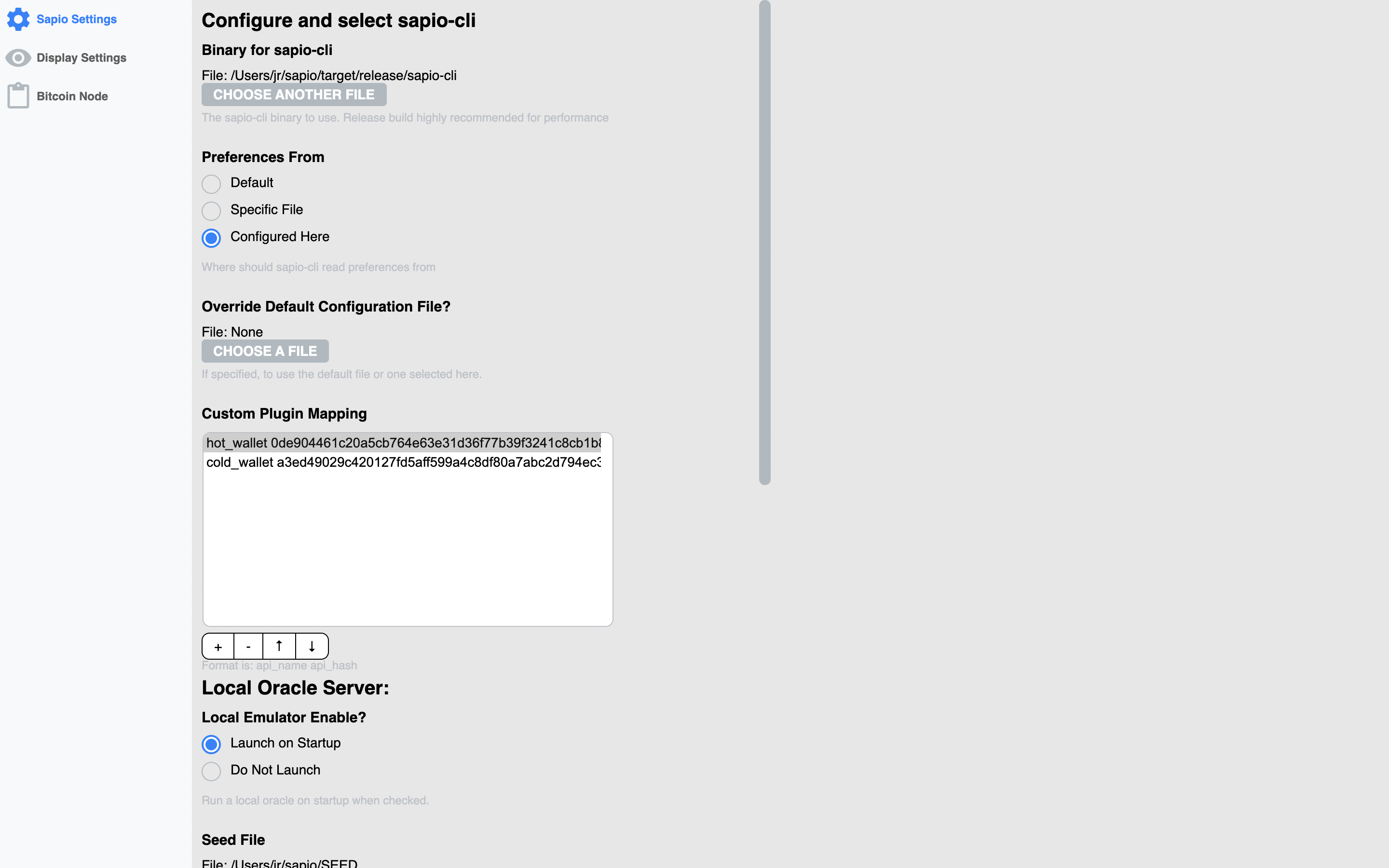

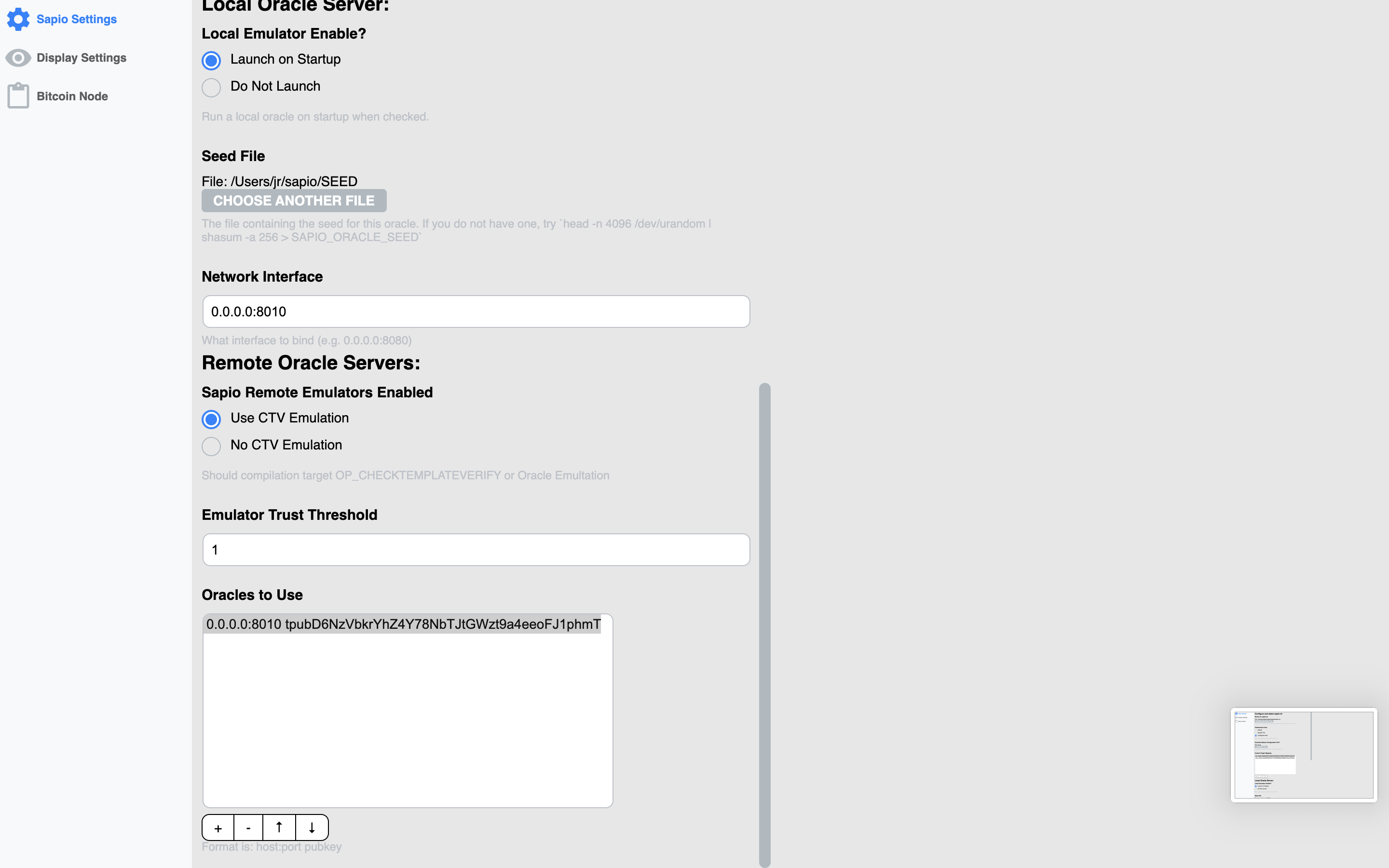

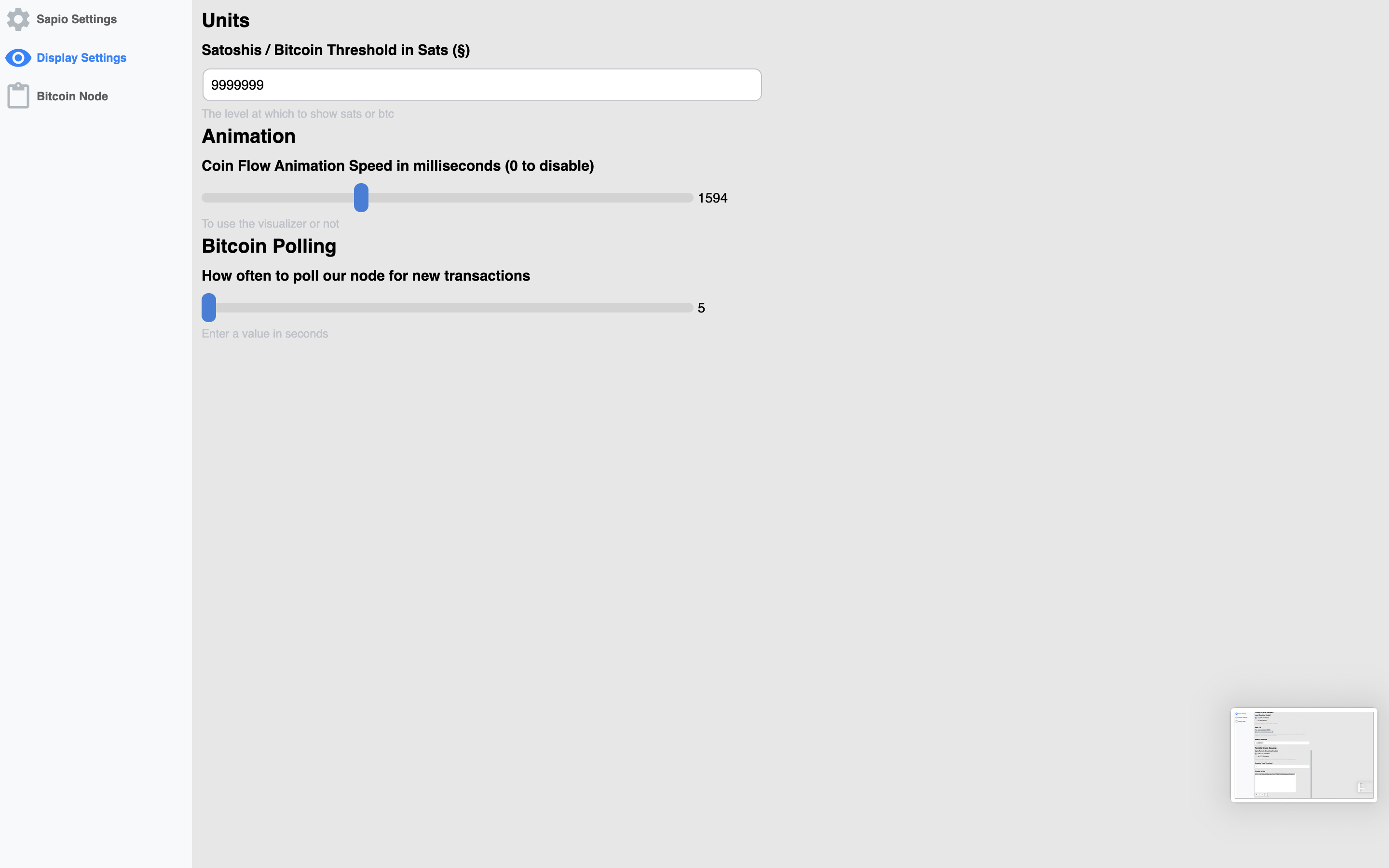

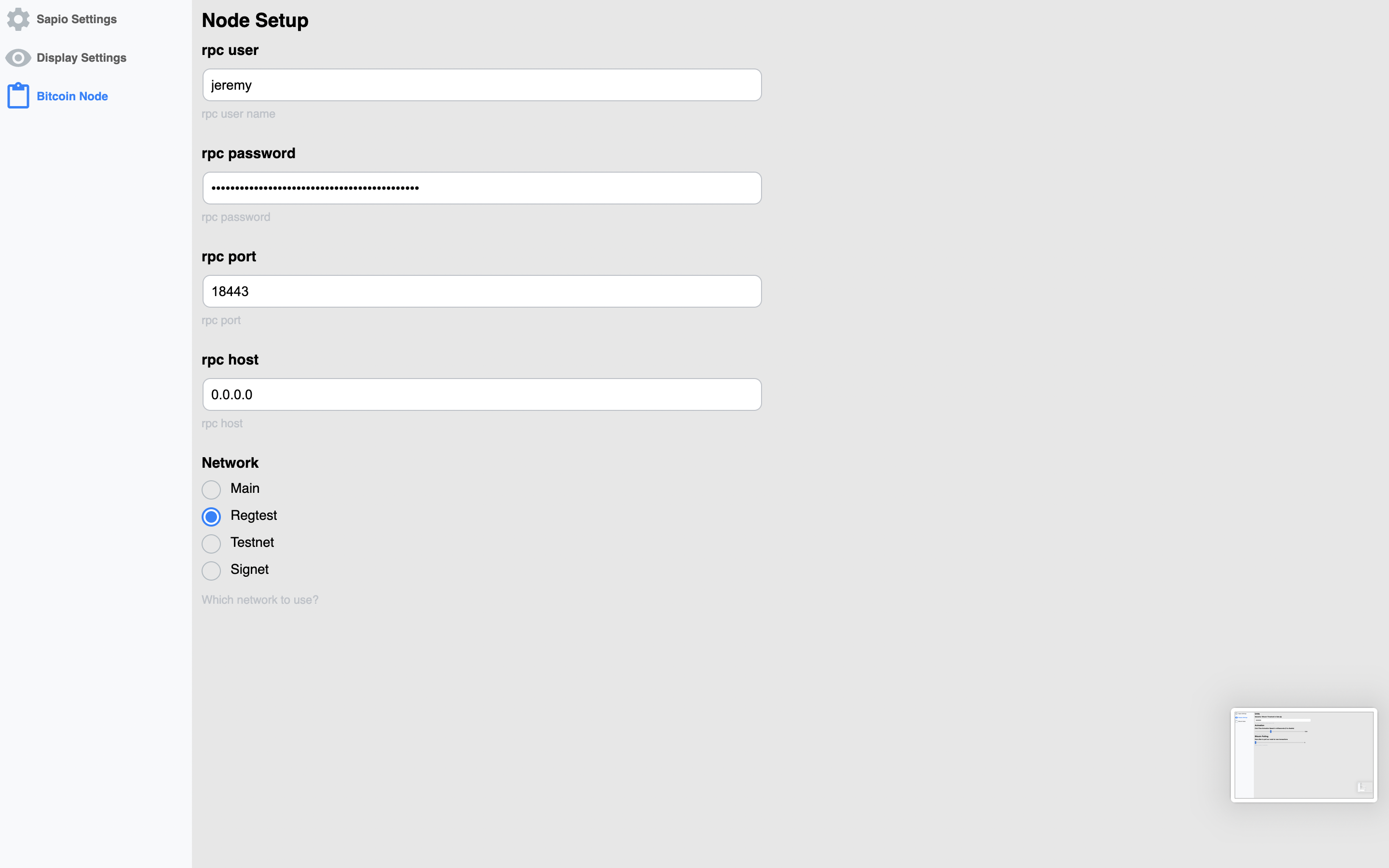

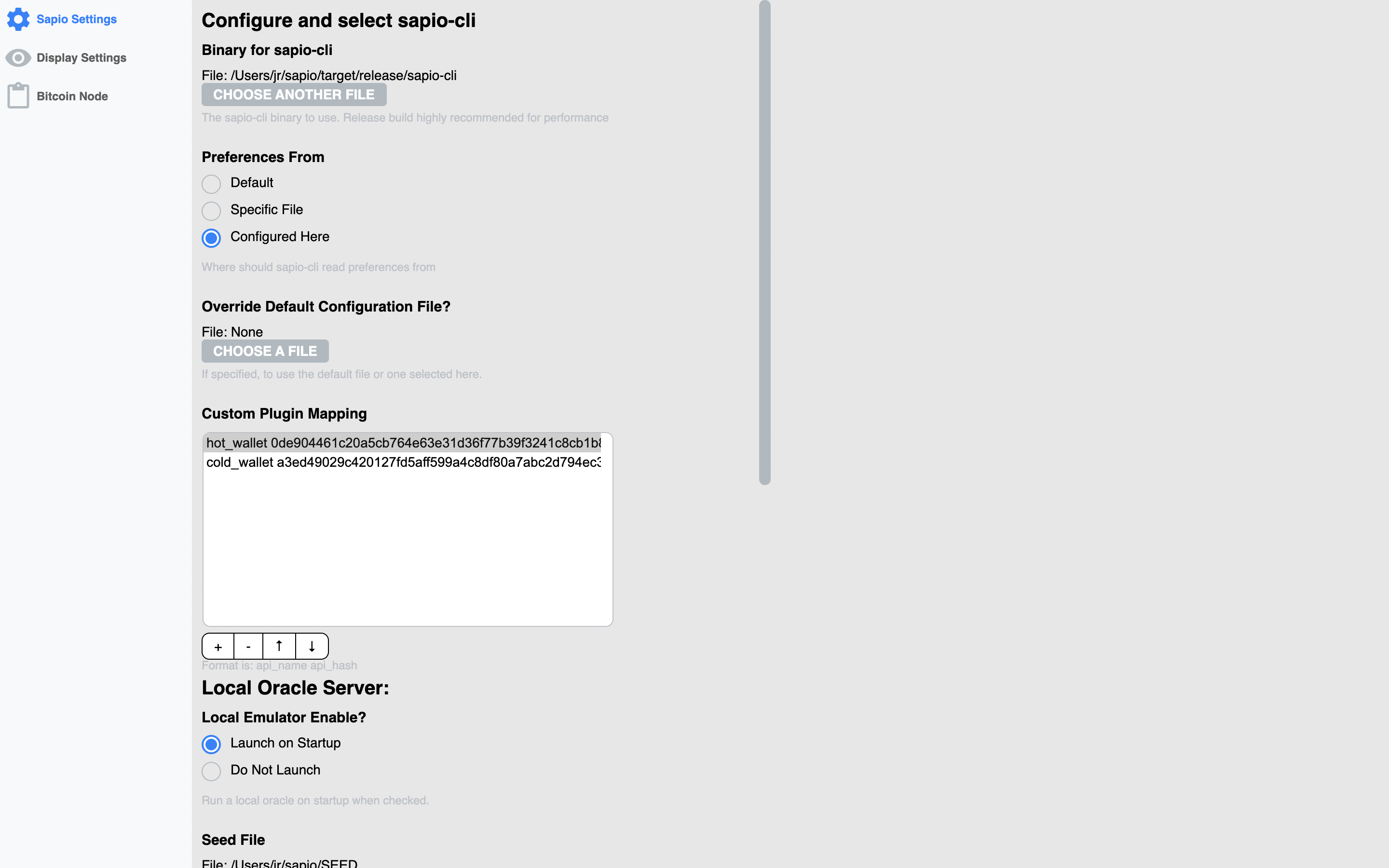

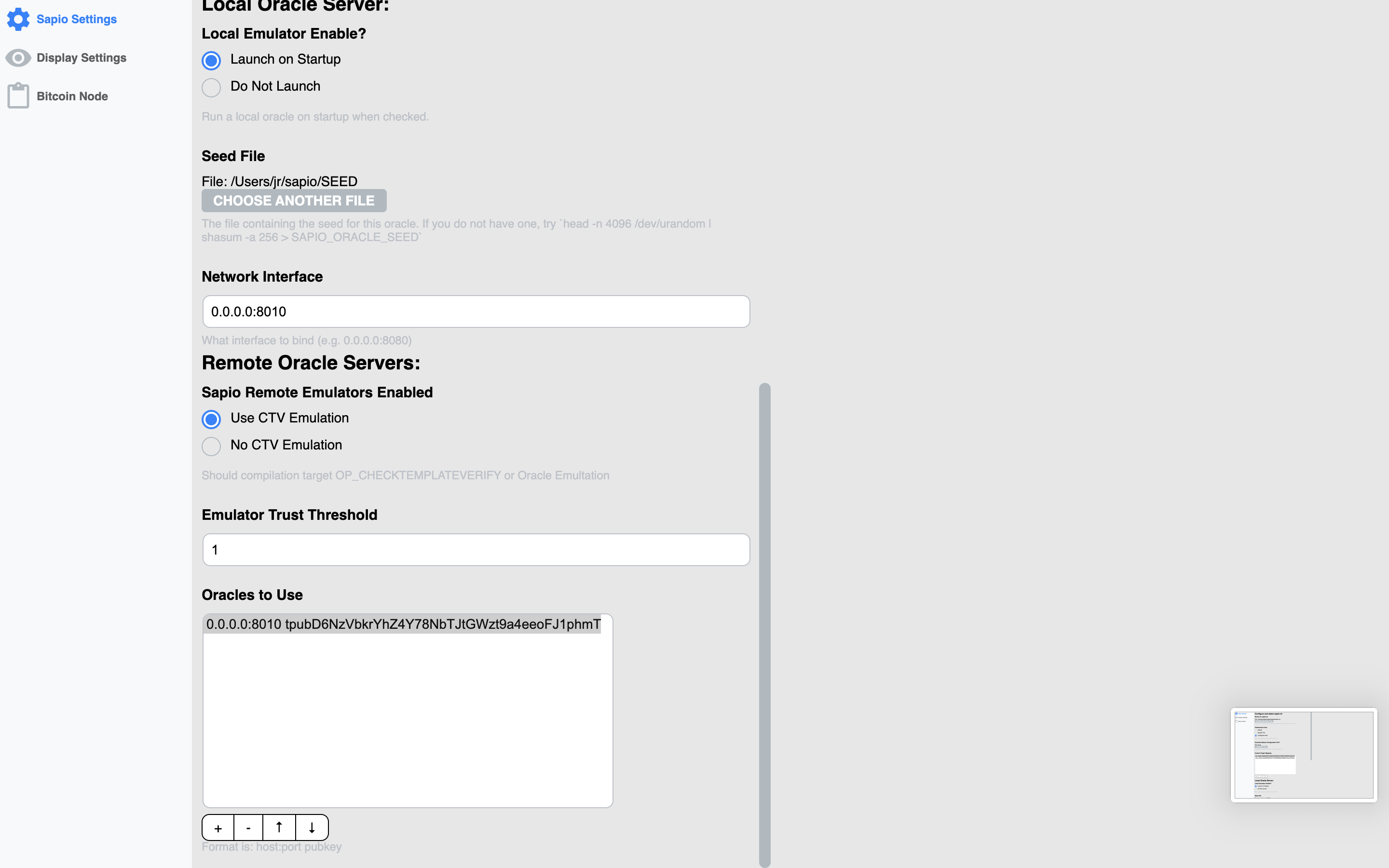

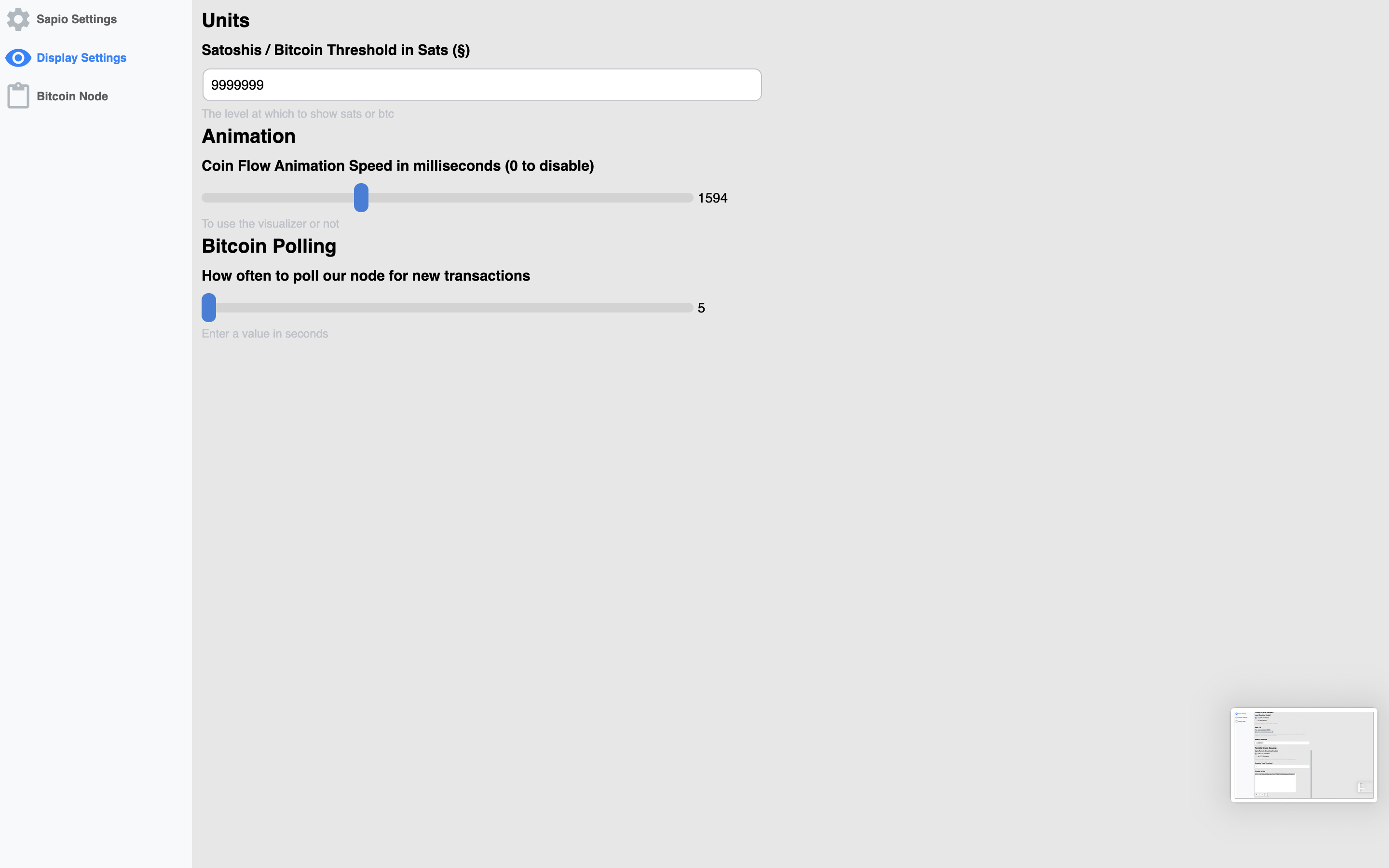

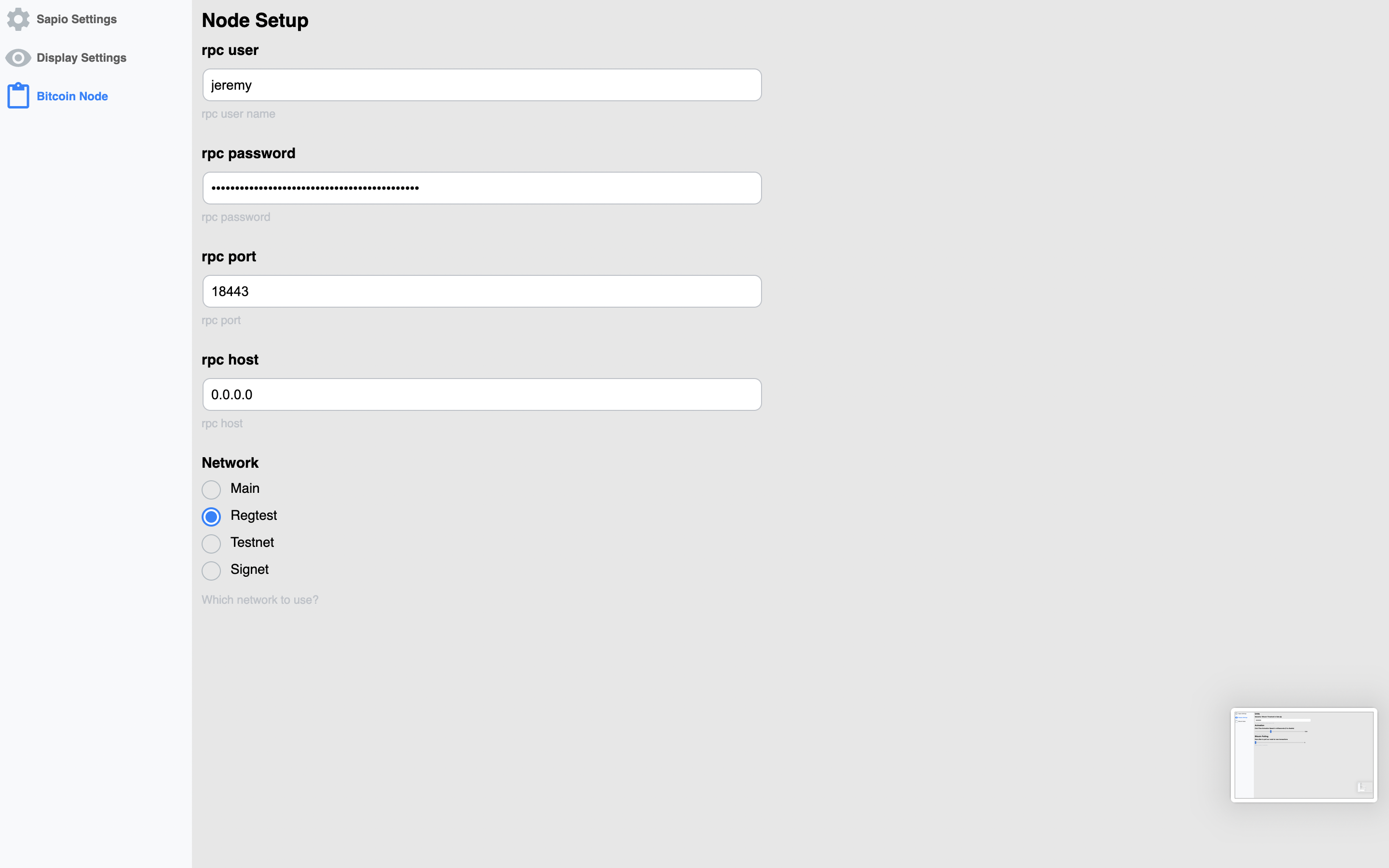

There are also some settings you can configure for the display, the node, and sapio-cli. The first time you run Sapio, you’ll need to get some of these things configured correctly or else it will be broken. Currently, if you look here you can find a template for a script to get everything up and running for a first shot at it; otherwise you’ll have to do it by hand, or just change your preferences.json to be similar to mine in the note.

before you ask…

OF COURSE THERE IS DARK MODE

configured by your local system theme preference

I hope you enjoyed this! There’s a metric fuckload of work still to do to make Sapio Studio & Sapio anywhere near production grade, but I hope this has helped elucidate how powerful and cool the Sapio approach is, and has inspired you to build something and try it out! I’d also be really eager for feedback on what features should be here or are missing.

Lastly, if you’re just excited about this, it’s definitely a project that could use more experienced React/Electron/Bitcoin contributors, either yourself or if you’re interested in sponsoring :).

Day 17: Rubin's Bitcoin Advent Calendar

14 Dec 2021

Welcome to day 17 of my Bitcoin Advent Calendar. You can see an index of all the posts here or subscribe at judica.org/join to get new posts in your inbox.

A short story I want to tell first. I recently made friends with Eugene, this really smart 19-year-old Cal dropout, when I was visiting Miami for the NFT bacchanal around Art Basel.

Eugene just dropped a project he’s been working on, and it’s really freakin’ cool. He basically implemented a human vs. chess engine game in Solidity that mints beautiful interactive NFTs of representations of the contract’s internal states. You can play the game / mint one for like 0.1 ETH on his site here, and see a permanent record of my embarrassingly bad move where I missed a mate-in-one here.

Definitely check out the site and read how it’s implemented. Eugene is very bright, and a talented hacker. The project? It’s not a get-rich-quick project; the only cost is gas, so Eugene’s not raking it in (although I think he should have, but he’ll have many more successes).

So what’s the moral of this story?

Why isn’t Eugene working on Bitcoin? People say that “eth is a scam” and that “everyone working on it are scammers”. But I don’t see that. I see people, like Eugene, wanting to build cool shit every day. And wanting to ship cool stuff. It’s fun. And I like to be friends with creative and curious people.

Working on Bitcoin can be fun. But mostly it’s not. My post yesterday? The one describing new techniques to make Bitcoin more decentralized? I had a lot of fun writing it. And then someone claimed that my work is “very dangerous” to Bitcoin.

I get it, I truly do. Bitcoin is money for enemies. Don’t trust, verify. In the IRC channels, Twitter Spaces, and other forums we hear rants like the one below all the time:

Working on Bitcoin isn’t just a stupid fucking game of chess you idiot, it’s solving literally every geoscale challenge humanity has ever faced and your work on that is a bajillion fold more worthwhile. You are an asshole thinking you deserve to have any fun in your life when you could be a miserable piece of shit with people shouting at you all the time about how you suck and struggling to ship small features and hoping how you might ship one bigger project this decade. Fun? Fun??? You fucking asshole. If I don’t kill you, someone else will.

But developers are people too, and good developers like to build cool shit. If you haven’t noticed, Bitcoin development has a bit of a burnout problem, with multiple contributors stepping down their engagement recently. A likely cause is the struggle it takes to ship even the smallest features, not to mention the monumental effort it takes to ship a single large project. But the death threats certainly don’t help either.

It doesn’t have to be this hard

It’s hard to tell people, especially younger folk just entering the space, to work on Bitcoin full-time. What I say is as follows:

If you have a strong ideological calling to the mission of Bitcoin and sound money, it’s absolutely the most meaningful project for you to work on. But if that’s not you, and you want to explore crypto, you should probably play around with Ethereum or something else. Bitcoin is really tough to get funded for and the community, while amazing, can be very hostile.

If I were more selfish about the mission, I’d glaze over these details. But I want folks to decide for themselves and find something that makes them truly happy. For most “at-heart” Bitcoiners that won’t be a disincentive to taking the orange-pill, but for some it might. Note: I’m Jewish, so that probably influences my views on converting people to things (Jews believe in discouraging converts to find the pure of heart).

Despite my best efforts to convince myself otherwise, I’ve been an audit-the-fed libertarian since elementary school or something and I have photos of myself with Ron Paul a decade apart. So I am one of those ideologically drawn to Bitcoin and not other projects with weaker foundations.

But it doesn’t mean I don’t turn an envious eye to the activity and research happening in other communities, or get excited about on-chain chess engines even if they’re impractical for now. And there’s also the magic of a supportive community that doesn’t threaten to have you beaten up when they disagree with you about the minutiae of a soft fork rollout.

Cool technologies attract nerds like moths to a lamp at night. Smart nerds trying to solve interesting problems create solutions. Experiments that strain the limits of a platform expose problems and create demand for solutions. These solutions often have major positive externalities.

I don’t think I’m going to convince you here to care about NFTs. But I am – hopefully – going to convince you to care about NFTs the phenomenon.

For example, scaling challenges in Ethereum have led to the development of Zero Knowledge Roll-Ups, privacy issues have led to things like Tornado Cash, and so on. While as a project Eth might be #ngmi, Bitcoiners have traditionally said that if anything is worth having we’ll just be able to implement it ourselves in Bitcoin. But there are certain things with network effects that will be hard for us to replicate. And by the time we do go to replicate them, the tooling that’s been developed for doing these things on Eth might be like a decade ahead of what we’ve got for Bitcoin. And all of the smart kids are going to become adults who are bought in technically and socially on things other than Bitcoin. And that really freakin’ matters.

I’m not advocating that Bitcoiners should embrace full-blown degeneracy. But also it’s not in particular our job to prevent it technically. And the tools that are produced when people have fun can lead to major innovations.

For example, right now I am working on a system for building NFTs for Bitcoin on Sapio.

Why? It’s fun. It touches on almost all of the infrastructure problems I’m working on for Sapio right now. And it is low enough risk – in terms of risk of losing Bitcoin – that I feel comfortable building and experimenting with these NFT test-subjects. Worst-come-to-worst, the artists can always re-issue anything corrupted via a software flaw. And then as the software matures with low-risk but still fun applications, we can apply those learnings to managing Bitcoin as well.

I also want to note that I really like artists. Artists as a community are incredible. Artists use NFTs, so I like NFTs. Artists are the voice of the people. Art can tear down the establishment. Art can change the world. And so for Bitcoin, whose use is an inherently political message, what better community to engage than the art world?

Bitcoiners are really fixated on the technical nonsense backing NFTs – yeah, it’s not ‘literally’ the artwork. But then again, what literally is the artwork? If you want a photograph and you want to pay the photographer for it, do you? Do you get a receipt for it? Can you use that bill of sale to sell the photo later? NFTs are just a better version of that. And if you don’t like the art that’s sold as NFTs right now, why not find artists you do like? Why not get them onboarded onto Bitcoin and fully orange-pilled? Hint: the HRF has a whole Art in Protest section on their website.

Bitcoin NFTs could enable Bitcoin holders to pay dissident artists for their art

and avoid being shut down by authorities. Why not embrace that culturally? Isn’t

that the group that Bitcoin is for? And if you don’t like that, maybe you just

don’t like art. That’s ok, don’t buy it.

But NFTs are Stupid JPEGs Man

Ok sure. Whatever.

But there are all these contracts (as I showed you in previous posts) that Bitcoin would benefit from, like inheritance schemes, vaults, decentralized mining, payment pools, and more. Believe it or not, the tools needed to make NFTs work well are the exact same tools required to make these work seamlessly.

So why not have a little fun, let people experiment with new ideas, get excited, grow the community, and convert the big innovations into stable and mature tooling for the critical infrastructure applications? And maybe we’ll uncover some upside for brand new things that never occurred to us as possible before.

Through the end of this series I’ll have some more posts detailing how to build

NFTs, Derivatives, DAOs, and Bonded Oracles. I hope that you can view them with

an open mind and appreciate how – even if you don’t think they are core to what

Bitcoin has to do – these innovations will fuel development of tools to support

the projects you do like without turning Bitcoin into a shitcoin. Who knows,

maybe you’ll find a new application you like.

Day 16: Rubin's Bitcoin Advent Calendar

13 Dec 2021

Welcome to day 16 of my Bitcoin Advent Calendar. You can see an index of all

the posts here or subscribe at

judica.org/join to get new posts in your inbox

Who here has some ERC-20s or 721s? Anyone? No one? Whatever.

The Punchline is that a lotta fuss goes into Ethereum smart contracts being

Turing Complete but guess what? Neither ERC-20 nor 721 really have anything to

do with being Turing Complete. What they do have to do with is having a

tightly defined interface that can integrate into other applications nicely.

This is great news for Bitcoin. It means that a lot of the cool stuff happening

in eth-land isn’t really about Turing Completeness, it’s about just defining

really kickass interfaces for the things we’re trying to do.

In the last few posts, we already saw examples of composability. We took a bunch

of concepts and were able to nest them inside of each other to make

Decentralized Coordination Free Mining Pools. But we can do a lot more with

composability than just compose ideas together by hand. In this post I’ll give

you a little sampler of different types of programmatic composability and interfaces,

like the ERC-20 and 721.

Address Composability

Because many Sapio contracts can be made completely noninteractively (with CTV or an Oracle you’ll trust to be online later), if you compile a Sapio contract and get an address, you can just plug it in somewhere: it “composes”, and you can link it later. We saw this earlier with the ability to make a channel address and send it to an exchange.

However, if you only pass around an Address, Sapio won’t necessarily understand what that address is for, so you won’t get any of the Sapio “rich” features.

Pre-Compiled

You can also take not just an address, but an entire (json-serialized?) Compiled

object that would include all the relevant metadata.

Rust Generic Types Composability

Well, if you’re a Rust programmer, this basically boils down to: Rust types rule! Here are a couple of examples.

The simplest example is just composing directly in a function:

```rust
#[then]
fn some_function(self, ctx: Context) {
    ctx.template()
        .add_output(ctx.funds(), &SomeOtherContract{/**/}, None)?
        .into()
}
```

What if we want to pass any Contract as an argument for a Contract? Simple:

```rust
struct X {
    a: Box<dyn Contract>,
}
```

What if we want to restrict it a little bit more? We can use a trait bound.

Now only Y (or anything implementing GoodContract) can be plugged in.

```rust
trait GoodContract: Contract {
    decl_then!{some_thing}
}

struct Y {}

impl GoodContract for Y {
    #[then]
    fn some_thing(self, ctx: Context) {
        empty()
    }
}

impl Contract for Y {
    declare!{then, Self::some_thing}
}

// No type parameter needed here: both fields hold boxed trait objects
struct X {
    a: Box<dyn GoodContract>,
    // note the inner types of a and b don't have to match
    b: Box<dyn GoodContract>,
}
```

Boxing gives us some power to be generic at runtime, but we can also do some more “compile time” logic. This can have some advantages, e.g., if we want to guarantee that types are the same.

```rust
struct X<T: Contract, U: GoodContract> {
    a: T,
    b: T,
    // a more specific concrete type -- could be a T even
    c: U,
    d: U,
}
```

Sometimes it can be helpful to wrap things in functions, like we saw in the Vaults post.

```rust
struct X<T: Contract> {
    // This lets us stub in whatever we want for a function
    a: Box<dyn Fn(Self, Context) -> TxTmplIt>,
    // this lets us get back any contract
    b: Box<dyn Fn(Self, Context) -> Box<dyn Contract>>,
    // this lets us get back a specific contract
    c: Box<dyn Fn(Self, Context) -> T>,
}
```

Clearly there’s a lot to do with the rust type system and making components.

It would even be possible to make certain types of ‘unchecked’ type traits,

for example:

```rust
trait Reusable {}

struct AlsoReusable<T> {
    a: T,
}

// Only reusable if T Reusable
impl<T> Reusable for AlsoReusable<T> where T: Reusable {}
```

The Reusable tag could be used to tag contract components that would be “reuse safe”. E.g., an HTLC, or any component containing an HTLC, would not be reuse safe, since the hash preimage could be revealed. While reusability isn’t “proven” (that’s up to the author to check), these types of traits can help us reason about the properties of compositions of programs more safely. Unfortunately, Rust lacks negative trait bounds (i.e., Not-Reusable), so you can’t reason about certain types of things.
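As a small illustration of how the tag propagates, here is a plain-Rust sketch with no Sapio dependency. `Payment`, `Htlc`, and `require_reusable` are hypothetical names chosen for this example; the only claim is that the compiler carries the `Reusable` tag through `AlsoReusable<T>` and rejects untagged components at compile time.

```rust
/// Marker trait: implemented only for components the author has checked are reuse-safe.
trait Reusable {}

/// Hypothetical reuse-safe component (e.g., a plain payment to a key).
struct Payment;

/// Hypothetical hash-locked component. Deliberately NOT tagged Reusable:
/// once its preimage is revealed, every other reuse is exposed too.
#[allow(dead_code)]
struct Htlc;

impl Reusable for Payment {}

/// A wrapper component, as in the text.
#[allow(dead_code)]
struct AlsoReusable<T> {
    a: T,
}

/// Only reusable if T is Reusable.
impl<T> Reusable for AlsoReusable<T> where T: Reusable {}

/// Accepts only components tagged as reuse-safe.
fn require_reusable<T: Reusable>(_component: &T) -> &'static str {
    "reuse-safe"
}
```

Calling `require_reusable(&AlsoReusable { a: Htlc })` would fail to compile, because `Htlc` does not implement `Reusable`. The check is only as good as the author’s decision to tag (or not tag) each component.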

Inheritance

We don’t have a fantastic way to do inheritance in Sapio presently. But stay tuned! For now, the best you get is that you can do traits (like GoodContract).

Cross Module Composability & WASM

One of the goals of Sapio is to be able to create contract modules with a well-defined API boundary that communicates via JSON and is “typed” with JSON Schema. This means that the Sapio modules can be running anywhere (e.g., a remote server) and we can treat them like any other component.

Another goal of Sapio is to make it possible to compile modules into standalone WASM modules. WASM stands for WebAssembly; it’s basically a small, deterministic computer-emulator program format, so we can compile our programs and run them anywhere that a WASM interpreter is available.

Combining these two goals, it’s possible for one Sapio program to dynamically

load another as a WASM module. This means we can come up with a component,

compile it, and then link to it later from somewhere else. For example, we could

have a Payment Pool where we make each person’s leaf node a WASM module of their

choice, such as a Channel, a Vault, or anything that

satisfies a “Payment Pool Payout Interface”.

For example, suppose we wanted a generic API for making a batched payment.

Defining the Interface

First, we define a payment that we want to batch.

```rust
/// A payment to a specific address
pub struct Payment {
    /// # Amount (btc)
    /// The amount to send
    pub amount: AmountF64,
    /// # Address
    /// The Address to send to
    pub address: bitcoin::Address,
}
```

Next, we define the full API that we want. Naming and versioning are still something we need to work on in the Sapio ecosystem, but for now it makes sense to be verbose and include a version.

```rust
pub struct BatchingTraitVersion0_1_1 {
    pub payments: Vec<Payment>,
    /// # Feerate (Bitcoin per byte)
    pub feerate_per_byte: AmountF64,
}
```

Lastly, to finish defining the API, we have to do something really gross-looking to make it automatically checkable. Essentially, this provides an example value, and modules that claim to support BatchingTraitVersion0_1_1 are verified to be able to understand it. This is going to be improved in Sapio over time for better typechecking!

```rust
impl SapioJSONTrait for BatchingTraitVersion0_1_1 {
    fn get_example_for_api_checking() -> Value {
        #[derive(Serialize)]
        enum Versions {
            BatchingTraitVersion0_1_1(BatchingTraitVersion0_1_1),
        }
        serde_json::to_value(Versions::BatchingTraitVersion0_1_1(
            BatchingTraitVersion0_1_1 {
                payments: vec![],
                feerate_per_byte: Amount::from_sat(0).into(),
            },
        ))
        .unwrap()
    }
}
```

Implementing the Interface

Let’s say that we want to make a contract like TreePay implement

BatchingTraitVersion0_1_1. What do we need to do?

First, let’s get the boring stuff out of the way: we need to make the TreePay module understand that it should support BatchingTraitVersion0_1_1.

```rust
/// # Different Calling Conventions to create a Treepay
enum Versions {
    /// # Standard Tree Pay
    TreePay(TreePay),
    /// # Batching Trait API
    BatchingTraitVersion0_1_1(BatchingTraitVersion0_1_1),
}

REGISTER![[TreePay, Versions], "logo.png"];
```

Next, we just need to define logic converting the data provided in

BatchingTraitVersion0_1_1 into a TreePay. Since BatchingTraitVersion0_1_1

is really basic, we need to pick values for the other fields.

```rust
impl From<BatchingTraitVersion0_1_1> for TreePay {
    fn from(args: BatchingTraitVersion0_1_1) -> Self {
        TreePay {
            participants: args.payments,
            radix: 4,
            // estimate fees to be 4 outputs and 1 input + change
            fee_sats_per_tx: args.feerate_per_byte * ((4 * 41) + 41 + 10),
            timelock_backpressure: None,
        }
    }
}
```

```rust
impl From<Versions> for TreePay {
    fn from(v: Versions) -> TreePay {
        match v {
            Versions::TreePay(v) => v,
            Versions::BatchingTraitVersion0_1_1(v) => v.into(),
        }
    }
}
```
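Stripped of Sapio, the calling-convention pattern above is ordinary enum dispatch plus `From` impls. The sketch below uses simplified stand-in types, which are assumptions for illustration rather than Sapio’s definitions: plain `u64` satoshis instead of `AmountF64`, a `String` instead of `bitcoin::Address`, a shorter `BatchingV0_1_1` name, no `timelock_backpressure`, and a hypothetical `EST_TX_BYTES` constant. It also makes the fee estimate concrete: the expression `(4 * 41) + 41 + 10` is 215 bytes, so a feerate of 2 sats/byte gives 430 sats per transaction.

```rust
/// Simplified stand-in for Payment (hypothetical types).
#[derive(Clone, Debug, PartialEq)]
struct Payment {
    amount_sats: u64,
    address: String,
}

/// Simplified stand-in for BatchingTraitVersion0_1_1.
#[derive(Clone, Debug, PartialEq)]
struct BatchingV0_1_1 {
    payments: Vec<Payment>,
    feerate_sats_per_byte: u64,
}

/// Simplified stand-in for TreePay.
#[derive(Clone, Debug, PartialEq)]
struct TreePay {
    participants: Vec<Payment>,
    radix: usize,
    fee_sats_per_tx: u64,
}

/// Size estimate mirroring the expression in the text: (4 * 41) + 41 + 10 = 215 bytes.
const EST_TX_BYTES: u64 = (4 * 41) + 41 + 10;

/// Every calling convention this module accepts.
enum Versions {
    TreePay(TreePay),
    BatchingV0_1_1(BatchingV0_1_1),
}

impl From<BatchingV0_1_1> for TreePay {
    fn from(args: BatchingV0_1_1) -> Self {
        TreePay {
            participants: args.payments,
            // The batching API doesn't specify a radix, so we pick one.
            radix: 4,
            fee_sats_per_tx: args.feerate_sats_per_byte * EST_TX_BYTES,
        }
    }
}

impl From<Versions> for TreePay {
    fn from(v: Versions) -> TreePay {
        match v {
            Versions::TreePay(t) => t,
            Versions::BatchingV0_1_1(b) => b.into(),
        }
    }
}
```

Whichever convention a caller uses, the module ends up with a single `TreePay` to compile, so adding a new interface only means adding a variant and a conversion.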

Using the Interface

To use BatchingTraitVersion0_1_1, we can just define a struct as follows, and when we deserialize it, the referenced module will automatically be verified to have declared a fitting API.

```rust
pub struct RequiresABatch {
    /// # Which Plugin to Use
    /// Specify which contract plugin to call out to.
    handle: SapioHostAPI<BatchingTraitVersion0_1_1>,
}
```

The SapioHostAPI handle can be either a human-readable name (like “user_preferences.batching” or “org.judica.modules.batchpay.latest”) that is looked up locally, or an exact hash of the specific module to use.

We can then use the handle to resolve and compile against the third party module.

Because the module lives in an entirely separate WASM execution context,

we don’t need to worry about it corrupting our module or being able to access

information we don’t provide it.
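To illustrate the two handle forms and the compatibility check, here is a self-contained sketch. Everything in it is hypothetical: `Handle`, `ModuleRegistry`, `ModuleInfo`, and the idea that a module advertises a list of calling-convention names are simplifications for this example, not the real `SapioHostAPI`, which loads and validates actual WASM modules.

```rust
use std::collections::HashMap;

/// Hypothetical handle: a human-readable name or an exact module hash.
enum Handle {
    Name(String),
    Hash([u8; 32]),
}

/// Hypothetical metadata for a loaded module.
struct ModuleInfo {
    hash: [u8; 32],
    /// Names of the calling conventions this module accepts.
    accepted_apis: Vec<String>,
}

/// Hypothetical local registry: names resolve to hashes, hashes to modules.
struct ModuleRegistry {
    names: HashMap<String, [u8; 32]>,
    modules: HashMap<[u8; 32], ModuleInfo>,
}

impl ModuleRegistry {
    /// Resolve a handle to a module, following a name lookup if needed.
    fn resolve(&self, handle: &Handle) -> Option<&ModuleInfo> {
        let hash = match handle {
            Handle::Name(name) => self.names.get(name)?,
            Handle::Hash(h) => h,
        };
        self.modules.get(hash)
    }

    /// Resolve the handle and check that the module declares the API we need.
    fn resolve_checked(&self, handle: &Handle, api: &str) -> Result<&ModuleInfo, String> {
        let module = self.resolve(handle).ok_or("module not found")?;
        if module.accepted_apis.iter().any(|a| a == api) {
            Ok(module)
        } else {
            Err(format!("module does not implement {}", api))
        }
    }
}
```

A name is convenient and can be repointed (e.g. “latest”), while a hash pins one exact module; both end up at the same compatibility check.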

Call to Action

ARE YOU A BIG BRAIN PROGRAMMING LANGUAGE PERSON?

PLEASE HELP ME MAKE THIS SAPIO HAVE A COOL AND USEFUL TYPE SYSTEM. I AM A SMALL BRAIN BOI AND THIS STUFF IS HARD AND I NEED FRENZ.

EVEN THE KIND OF “FRENZ” THAT YOU HAVE TO PAY FOR wink.

CLICK HERE

In the posts coming Soon™, we’ll see some more specific examples of contracts

that make heavier use of having interfaces and all the cool shit we can get done.

That’s all I have to say. See you tomorrow.

Day 15: Rubin's Bitcoin Advent Calendar

12 Dec 2021

Welcome to day 15 of my Bitcoin Advent Calendar. You can see an index of all

the posts here or subscribe at

judica.org/join to get new posts in your inbox

Long time no see. You come around these parts often?

Let’s talk mining pools.

First, let’s define some things. What is a pool? A pool is a way to take a

strongly discontinuous income stream and turn it into a smoother income stream.

For example, suppose you are a songwriter. You’re a dime a dozen: there are 1000 songwriters. If you get song of the year, you get a $1M bonus. However, all the other songwriters are equally pretty good, so it’s a crapshoot. So you and half the other songwriters agree to split the prize money, whoever wins. Now, on average, you get $2000 every other year, instead of $1M once every thousand years. Since you’re only going to work about 50 years, your “expected” winnings would be $50,000 if you worked alone. But expected winnings don’t buy bread. By pooling, your expected winnings are $2000 every other year for 50 years, so also $50,000. But you can actually expect to have some spare cash lying around. Of course, if you had worked alone and got lucky, you’d end up way richer! But the odds of that ever happening in your life are about 1 in 20, so there aren’t that many rich songwriters (about 50 out of your 1000 peers…).
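The songwriter numbers can be checked with a few lines of arithmetic, using only the figures from the example: 1000 songwriters, a $1M prize, a 500-person pool, and a 50-year career. The “1 in 20” figure is the expected number of solo wins over a career (50/1000); the exact probability of winning at least once is slightly lower, about 4.9%.

```rust
/// Figures from the songwriter example (whole dollars).
const PRIZE: u64 = 1_000_000;
const SONGWRITERS: u64 = 1_000;
const POOL_SIZE: u64 = 500; // you plus 499 others
const CAREER_YEARS: u64 = 50;

/// Expected career winnings working alone: PRIZE * years / songwriters.
fn solo_expected() -> u64 {
    PRIZE * CAREER_YEARS / SONGWRITERS
}

/// Your share each time someone in the pool wins.
fn pooled_share_per_win() -> u64 {
    PRIZE / POOL_SIZE
}

/// The pool wins with chance POOL_SIZE / SONGWRITERS per year (every other year here).
fn pooled_expected() -> u64 {
    let expected_pool_wins = CAREER_YEARS * POOL_SIZE / SONGWRITERS; // 25
    expected_pool_wins * pooled_share_per_win()
}

/// Probability of winning at least once when alone: 1 - (999/1000)^50.
fn solo_chance_of_ever_winning() -> f64 {
    1.0 - (1.0 - 1.0 / SONGWRITERS as f64).powi(CAREER_YEARS as i32)
}
```

Both strategies have the same expected value ($50,000); pooling only changes the variance, which is exactly what a mining pool is for.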

Mining is basically the same as our songwriter contest, just instead of silver

tongued lyrics, it’s noisy whirring bitcoin mining rigs. Many machines will

never mine a block. Many miners (the people operating them) won’t either! However,

by pooling their efforts together, they can turn a once-in-a-million-years

chance into earning temperatureless immaterial bitcoin day in and day out.

Who Pissed In your Pool?

The problem with pools is that they take an extremely decentralized process

and add a centralized coordination layer on top. This layer has numerous issues

including but not limited to:

- Weak Infrastructure: What happens if e.g. DNS goes down as it did recently?

- KYC/AML requirements to split the rewards

- Centralized “block policies”

- Bloating chain space with miner payouts

- Getting kicked out of their home country (happened in China recently)

- Custodial hacking risk.

People are working on a lot of these issues with upgrades like “Stratum V2”

which aspire to give the pools less authority.

In theory, mining pool operators should be against things that limit their business operations. However, we’re in a bit of a “later stage bitcoin mining” era, where pooling is seen more as a necessary evil, and most pools are anchored by big mining operations. And getting rid of pools would be great for Bitcoin, which would increase the value of folks’ holdings and mining rigs. So while it might seem irrational, it’s actually perfectly incentive-compatible for mining pool operators to want to make pools less of a centralization risk. Even if pools don’t exist in their current form, mining service providers can still do really good business offering all kinds of support. Forgive me if I’m speaking out of turn, pool ops!

Making Mining Pools Better

To make mining pools better, we can set some ambitious goals:

- Funds should not be centrally custodied, ever, if at all.

- No KYC/AML.

- No “Extra network” software required.

- No blockchain bloat.

- No extra infrastructure.

- The size of a viable pool should be smaller. Remember our songwriter: if you just pool with one other songwriter, it doesn’t bring your expected time until payout within your lifetime. So the bigger the pool, the more regular the payouts. We want the smallest possible “units of control” with the most regular payouts possible.

Fuck. That’s a huge list of goals.

But if you work with me here, you’ll see how we can nail every last one of them.

And in doing so, we can clear up some major Privacy hurdles and Decentralization

issues.

Building the Decentralized Coordination Free Mining Pool

We’ll build this up step by step. We probably won’t look at any Sapio code

today, but as a precursor I really must insist you read the last couple of posts first:

- Congestion Control

- Payment Pools

- Channels

You read them, right?

Right?

Ok.

The idea is actually really simple, but we’ll build it up piece by piece by piece.

Part 1: Window Functions over Blocks

A window function is a little program that operates over the last “N” things and

computes something.

E.g., a window function could operate over the last 5 hours and count how many

carrots you ate. Or over the last 10 cars that pass you on the road.

A window function of bitcoin blocks could operate over a number of different things.

- The last 144 blocks

- The last 24 hours of blocks

- The last 100 blocks that meet some filter function (e.g., of size > 500KB)

A window function could compute a lot of different things too:

- The average time difference between blocks

- The amount of fees paid in those blocks

- A subset of the blocks that pass another filter.

A last note: for something like Bitcoin, window functions need a start height before which we exclude blocks (e.g., the last 100 blocks since block 500,000).
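A window function over blocks can be sketched as “filter, then take the last N” over per-block data, plus whatever aggregate we want to compute. The `BlockInfo` fields and the function names below are illustrative assumptions, not a Sapio or Bitcoin Core API; real code would read these values from a node.

```rust
/// Minimal per-block data a window function might look at (hypothetical fields).
#[derive(Clone, Debug)]
struct BlockInfo {
    height: u32,
    time: u32,       // block timestamp in seconds
    fees_sats: u64,  // total fees paid in the block
    size_bytes: u32, // serialized block size
}

/// Select the last `n` blocks at or above `start_height` that pass `keep`,
/// returned in ascending height order. Assumes `chain` is sorted by height.
fn window<F>(chain: &[BlockInfo], start_height: u32, n: usize, keep: F) -> Vec<&BlockInfo>
where
    F: Fn(&BlockInfo) -> bool,
{
    let mut selected: Vec<&BlockInfo> = chain
        .iter()
        .rev() // newest first, so `take(n)` keeps the most recent matches
        .filter(|b| b.height >= start_height && keep(*b))
        .take(n)
        .collect();
    selected.reverse();
    selected
}

/// Aggregate: total fees paid in the window.
fn total_fees(blocks: &[&BlockInfo]) -> u64 {
    blocks.iter().map(|b| b.fees_sats).sum()
}

/// Aggregate: average time difference between consecutive blocks, if there are at least two.
fn average_interval(blocks: &[&BlockInfo]) -> Option<f64> {
    if blocks.len() < 2 {
        return None;
    }
    let first = blocks.first()?.time as f64;
    let last = blocks.last()?.time as f64;
    Some((last - first) / (blocks.len() - 1) as f64)
}
```

For example, `window(&chain, 500_000, 100, |b| b.size_bytes > 500_000)` would select the last 100 blocks over 500KB since block 500,000.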

Part 2: Giving presents to all our friends

Let’s do a window function over the last 100 blocks and collect the first address in the outputs of each block’s coinbase transaction.

Now, in our block, instead of paying ourselves a reward, let’s divvy it up among the last 100 blocks, paying out our entire block reward to their addresses, split up.

We’re so nice!

Part 3: Giving presents to our nice friends only

What if instead of paying everyone, we do a window function over the last 100

blocks and filter for only blocks that followed the same rule that we are

following (being nice). We take the addresses of each of them, and divvy up our

reward among them, like before.

We’re so nice to only our nice friends!
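The payout rule in Parts 2 and 3 is a deterministic computation every miner can run independently. In this sketch each block in the window carries a `payout_key` and a `followed_rule` flag (all `true` gives Part 2’s “pay everyone”). Deciding whether a block really followed the rule, i.e. paid out correctly to its own window, is assumed to have been checked already, and sending leftover satoshis from uneven division to the earliest blocks is an arbitrary choice for illustration.

```rust
/// One block in the window (hypothetical fields).
struct WindowBlock {
    payout_key: String,  // e.g. the first coinbase output address (Part 2)
    followed_rule: bool, // did this block pay out to its own nice window? (Part 3)
}

/// Split `reward_sats` evenly among the blocks in the window that followed the rule.
/// Remainder satoshis from integer division go one each to the earliest nice blocks.
/// Returns an empty list if no block qualifies (that case is left open here).
fn nice_payouts(window: &[WindowBlock], reward_sats: u64) -> Vec<(String, u64)> {
    let nice: Vec<&WindowBlock> = window.iter().filter(|b| b.followed_rule).collect();
    if nice.is_empty() {
        return Vec::new();
    }
    let n = nice.len() as u64;
    let base = reward_sats / n;
    let remainder = reward_sats % n;
    nice.iter()
        .enumerate()
        .map(|(i, b)| {
            let extra = if (i as u64) < remainder { 1 } else { 0 };
            (b.payout_key.clone(), base + extra)
        })
        .collect()
}
```

Because the rule uses only data already on chain, anyone can recompute it and check whether a given block was “nice”.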

Now stop and think a minute. All the “nice” blocks in the last 100 didn’t get a

reward directly, but they got paid by the future nice blocks handsomely. Even

though we don’t get any money from the block we mined, if our nice friends keep

on mining then they’ll pay us too returning the favor.

Re-read the above till it makes sense. This is the big idea. Now onto the “small”

ideas.

Part 4: Deferring Payouts

This is all kinda nice, but now our blocks get really really big since we’re

paying all our friends. Maybe we can be nice, but a little mean too and tell

them to use their own block space to get their gift.

So instead of paying them out directly, we round up all the nice block addresses

like before and we toss it in a Congestion Control Tree.

Now our friends do likewise too. Since the Congestion Control Module is

deterministic, everyone can generate the same tree and both verify that our

payout was received and generate the right transaction.

Now this gift doesn’t take up any of our space!
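The reason everyone can verify the gift is that the tree is a pure function of the payout list. Below is a toy deterministic radix-tree builder: given the same payouts and radix, every miner gets an identical tree and can check that its root carries the full amount. It is only a sketch of that property under the assumption that payouts are sorted into a canonical order; the real Sapio congestion control contract builds CTV-committed transactions, which this does not model.

```rust
/// A node of a toy congestion control tree (hypothetical structure).
#[derive(Debug, PartialEq, Clone)]
enum Node {
    Leaf { key: String, amount: u64 },
    Branch { amount: u64, children: Vec<Node> },
}

impl Node {
    fn amount(&self) -> u64 {
        match self {
            Node::Leaf { amount, .. } | Node::Branch { amount, .. } => *amount,
        }
    }
}

/// Build the tree deterministically: sort payouts into a canonical order,
/// then repeatedly group `radix` nodes under a branch until one root remains.
fn build_tree(mut payouts: Vec<(String, u64)>, radix: usize) -> Option<Node> {
    assert!(radix >= 2, "radix must be at least 2");
    payouts.sort();
    let mut level: Vec<Node> = payouts
        .into_iter()
        .map(|(key, amount)| Node::Leaf { key, amount })
        .collect();
    while level.len() > 1 {
        level = level
            .chunks(radix)
            .map(|group| Node::Branch {
                amount: group.iter().map(Node::amount).sum(),
                children: group.to_vec(),
            })
            .collect();
    }
    level.pop()
}
```

Two miners who receive the payouts in different orders still build the same tree, which is what lets them both verify the gift and construct the spending transactions.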

Part 5: Compacting

But it still takes up space for someone, and that blows.

So let’s do our pals a favor. Instead of just peeping the 1st address (which

really could be anything) in the coinbase transaction, let’s use a good ole

fashioned OP_RETURN (just some extra metadata) with a Taproot Public Key we want

to use in it.

Now let’s collect all the blocks that again follow the rule defined here, and

take all their taproot keys.
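The text doesn’t pin down how the key is encoded. As a hypothetical encoding, assume the coinbase carries an output whose script is `OP_RETURN` (0x6a) followed by a single 32-byte push (0x20) of an x-only Taproot key. Collecting keys from the window is then a simple scan; a real design would likely also add a tag so unrelated OP_RETURN outputs aren’t picked up.

```rust
const OP_RETURN: u8 = 0x6a;
const PUSH_32: u8 = 0x20; // push the next 32 bytes

/// Extract a 32-byte x-only key from a script of the form OP_RETURN <32 bytes>.
/// This exact layout is an assumption for illustration.
fn key_from_op_return(script: &[u8]) -> Option<[u8; 32]> {
    match script {
        [OP_RETURN, PUSH_32, rest @ ..] if rest.len() == 32 => {
            let mut key = [0u8; 32];
            key.copy_from_slice(rest);
            Some(key)
        }
        _ => None,
    }
}

/// For each coinbase (given as its list of output scripts), take the first matching key.
fn collect_keys(coinbases: &[Vec<Vec<u8>>]) -> Vec<[u8; 32]> {
    coinbases
        .iter()
        .filter_map(|outputs| outputs.iter().find_map(|s| key_from_op_return(s)))
        .collect()
}
```

Blocks without a well-formed key simply contribute nothing, so they can’t take part in the pool.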

Now, instead of gifting into a plain Congestion Control tree, we gift into a Payment Pool: a tree with MuSig-aggregated keys at every node. It’s a minor difference (a Congestion Control tree doesn’t have a taproot key path), but that difference means the world.

Now instead of having to expand to get everyone paid, they can use it like a

Payment Pool! And Pools from different runs can even do a many-to-one

transaction where they merge balances.

For example, imagine two pools:

UTXO A from Block N: 1BTC Alice, 1BTC Carol, 1BTC Dave

UTXO B from Block N+1: 1BTC Alice, 1BTC Carol, 1BTC Bob

We can do a transaction as follows to merge the balances:

Spends:

UTXO A, B

Creates:

UTXO C: 2BTC Alice, 2BTC Carol, 1BTC Dave, 1BTC Bob

Compared to doing the payments directly, fully expanding this creates only 4

outputs instead of 6! It gets even better the more miners are involved.
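The merge is just summing each participant’s balance across the input pools. This sketch uses a `BTreeMap` so the merged output order is deterministic; the names and amounts come from the example above, kept as whole BTC for simplicity, and the `Pool` alias is a hypothetical stand-in for a real pool’s state.

```rust
use std::collections::BTreeMap;

/// A payment pool's balances: participant -> amount (whole BTC here for simplicity).
type Pool = BTreeMap<&'static str, u64>;

/// Merge several pools into one by summing each participant's balance.
fn merge_pools(pools: &[Pool]) -> Pool {
    let mut merged = Pool::new();
    for pool in pools {
        for (who, amount) in pool {
            *merged.entry(*who).or_insert(0) += *amount;
        }
    }
    merged
}

/// Outputs needed to pay everyone directly: one per balance per pool.
fn direct_outputs(pools: &[Pool]) -> usize {
    pools.iter().map(|p| p.len()).sum()
}
```

With UTXO A and B from the example, `merge_pools` yields the four balances of UTXO C, while paying each pool out directly would take six outputs.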

We could even merge many pools at the same time, and in the future, benefit from

something like cross-input-signature aggregation to make it even cheaper and

create even fewer outputs.

Part 6: Channels

But wait, there’s more!

We can even make the terminal leaves of the Payment Pool be channels instead of direct UTXOs.

This has a few big benefits.

- We don’t need to do any compaction as urgently, we can immediately route funds around.

- We don’t need to necessarily wait 100 blocks to spend out of our coinbase since we can use the channel directly.

- Instead of compaction, we can just “swap” payments around across channels.

How channel balancing might look.

This should be opt-in (with a tag field to opt-in/out) since if you didn’t want

a channel it could be annoying to have the extra timeout delays, especially if you

wanted e.g. to deposit directly to cold storage.

Part 7: Selecting Window Functions

What’s the best window function?

I got no freakin’ clue. We can window over time, blocks, fee amounts,

participating blocks, non participating blocks, etc.

Picking a good window function is an exercise in itself, and needs to be

scrutinized for game theoretic attacks.

Part 8: Payout Functions

Earlier we showed the rewards as being just evenly split among the last blocks, but we could also reward people differently. E.g., we could give a bigger share to miners who distributed more reward to the other miners (to incentivize collecting more fees), or really anything deterministic that we can come up with.

Again, I don’t know the answer here. It’s a big design space!
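As one example of a non-even payout function, the reward could be split pro rata by a per-block weight, such as the amount each block distributed to other miners. The weighting itself is only an illustration of the design space, not a recommendation; the sketch also assumes leftover units from rounding down go one each to the earliest entries with nonzero weight, so the total always matches exactly.

```rust
/// Split `reward` among `(key, weight)` entries in proportion to weight.
/// Units lost to rounding down go, one each, to the earliest entries with nonzero weight.
fn weighted_payouts(entries: &[(&str, u64)], reward: u64) -> Vec<(String, u64)> {
    let total_weight: u64 = entries.iter().map(|(_, w)| *w).sum();
    if total_weight == 0 {
        return Vec::new();
    }
    // Use u128 so reward * weight cannot overflow.
    let mut out: Vec<(String, u64)> = entries
        .iter()
        .map(|(key, w)| {
            let share = (reward as u128 * *w as u128 / total_weight as u128) as u64;
            (key.to_string(), share)
        })
        .collect();
    let paid: u64 = out.iter().map(|(_, share)| *share).sum();
    let mut leftover = reward - paid;
    for ((_, share), (_, weight)) in out.iter_mut().zip(entries) {
        if leftover == 0 {
            break;
        }
        if *weight > 0 {
            *share += 1;
            leftover -= 1;
        }
    }
    out
}
```

Giving every entry the same weight reduces this to the even split from Part 3, so the payout function is a drop-in parameter.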

Part 9: Voting on Parameters

One last idea: if we had some sort of parameter space for the window functions,

we could perhaps vote on-chain for tweaking it. E.g., each miner could vote to

+1 or -1 from the window length.

I don’t particularly think this is a good idea, because it brings in all sorts

of weird attacks and incentives, but it is a cool case of on-chain governance so

worth thinking more on.
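For concreteness, here is one hypothetical way the +1/-1 vote could be tallied: sum the votes from the blocks in the window, move the window length one step in the direction of the majority, and clamp it to fixed bounds. The `Vote` encoding, the majority rule, and the bounds are all assumptions for illustration, and such a scheme would need the same game-theoretic scrutiny as the window function itself.

```rust
/// A miner's vote embedded in its block (hypothetical encoding).
enum Vote {
    Grow,    // +1
    Shrink,  // -1
    Abstain, // 0
}

/// Move the window length one step in the majority direction, clamped to [min_len, max_len].
fn next_window_len(current: u32, votes: &[Vote], min_len: u32, max_len: u32) -> u32 {
    let tally: i64 = votes
        .iter()
        .map(|v| match v {
            Vote::Grow => 1,
            Vote::Shrink => -1,
            Vote::Abstain => 0,
        })
        .sum();
    let proposed = match tally.signum() {
        1 => current.saturating_add(1),
        -1 => current.saturating_sub(1),
        _ => current,
    };
    proposed.clamp(min_len, max_len)
}
```

Limiting each adjustment to a single step keeps any one window of votes from swinging the parameter far, at the cost of slow adaptation.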

Part 10: End of Transmission?

No more steps. Now we think a bit more about the implications of this.

Solo mining?

Well the bad news about this design is that we can’t really do solo mining.

Remember, most miners probably will never mine a block. So they would never be

able to enter the pool.

We could mess around with including things like partial work shares (just a

few!) into blocks, but I think the best bet is instead to focus on

micro-pools. Micro-pools would be small units of hashrate (say, 1%?) that are

composed of lots of tiny miners.

The tiny miners can all connect to each other and gossip around their work

shares, use some sort of consensus algorithm, or use a pool operator. The blocks that they mine should use a taproot address/key which is a multisig of some portion of the workshares, and that key gets included in the top-level pool as part of the Payment Pool.

So while we don’t quite make solo mining feasible, the larger the window we use

the tinier the miners can be while getting better de-risking.

Analysis?

A little out of scope for here, but it should work conceptually!

A while back I analyzed this kind of setup, read more

here. Feel free to experiment

with window and payout functions and report back!

chart showing that the rewards are smoother over time

Now Implement it!

Well, we are not gonna do that here, since this is kinda a magnum opus of Sapio

and it would be wayyyy too long. But it should be somewhat conceptually

straightforward if you paid close attention to the “precursor” posts. And you

can see some seeds of progress for an implementation on

github,

although I’ve mostly been focused on simpler applications (e.g. the constituent

components of payment pools and channels) for the time being… contributions welcome!

TL;DR: Sapio + CTV makes pooled mining more decentralized and more private.

Before we can do that, we need to load a WASM Plugin with a compiled contract.

Let’s load the Payment Pool module. You can see the code for it

And now we can see we have a module!

Let’s load a few more so it doesn’t look lonely.

Now let’s check out the Payment Pool module.

Now let’s check out another one – we can see they each have different types of

arguments, auto-generated from the code.

What’s that??? It’s a small bug I am fixing :/. Not to worry…

Just click repair layout.

And the presentation resets. I’ll fix it soon, but it can be useful if there’s a

glitch to reset it.

Let’s get a closer look…

Let’s zoom out (not helpful!)…

Let’s zoom back in. Note how the transactions are square boxes and the outputs

are rounded rectangles. Blue lines connect transactions to their outputs. Purple lines

connect outputs to their (potential) spends.

We even have some actions that we can take, like sending it to the network.

Let’s try it….

Oops! We need to sign it first…

And then we can send it.

What other buttons do we have? What’s this do?

It teleports us to the output we are creating!

Notice how the output is marked “Updatable”, and there is also a “DO_TX”

button (corresponding to the DO_TX in the Payment Pool). Let’s click that…

Ooooh. It prompts us with a form to do the transaction!

Ok, let’s fill this sucker out…

Click submit, then recompile (separate actions in case we want to make multiple “moves” before recompiling).

I really need to fix this bug…

Voila!

The new part has our 0.1 BTC Spend + the re-creation of the Payment Pool with less funds.

Ok, let’s go nuts and do another state transition off-of the first one? This time more payouts!

Submit…

And Recompile

I skipped showing you the bug this time.

Simulate the passing of time (or blocks).

Pretty cool!
